In my previous article, we discussed “HHow to use QVD to make your QlikView application more efficient?”. In this article, we will take a step forward to make our application more efficient when dealing with large transactional data. As I mentioned in my previous article, I was working on a QlikView application, where I had to show sales across multiple channels for predefined frequencies (for instance, Daily, Monthly, Annually).

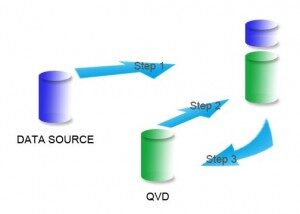

Initially, I was reloading the entire transaction table on a daily basis, although I already had the data until yesterday with me. This not only took a long time, it also increased the load on the database server and the network. This is where incremental loading with QVD made a big difference by loading only new or updated data from the database into a table.

Incremental loads:

Incremental load is defined as the activity of loading only new or updated records from the database in an established QVD. Incremental loads are useful because they run very efficiently compared to full loads, particularly for large data sets.

Incremental loading can be implemented in different ways, common methods are as follows:

- Insert only (do not validate for duplicate records)

- Insert and update

- Insert, update and delete

Let's understand each of these 3 scenarios with an example

1. Insert only:

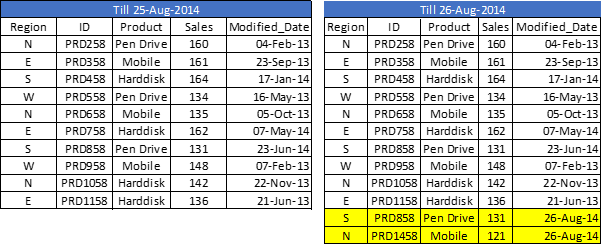

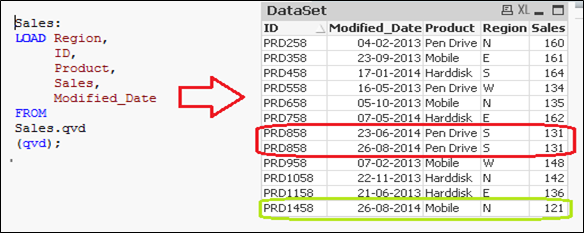

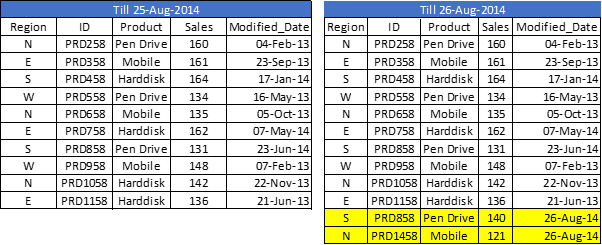

Let's say we have raw sales data (in excel) and every time a new sale is registered, is updated with basic details about the sale by modification date. Since we are working on QVD, We already have QVD created until yesterday (25 August 2014 in this case). Now, I want to load only the incremental records (highlighted in yellow below).

To perform this exercise, first create a QVD for the data up to 25 August 2014. To identify new incremental records, we need to know the date until which, QVD is already updated. This can be identified by checking the maximum of Modified_date in the available QVD file.

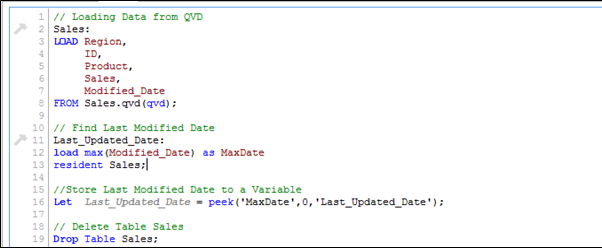

As mentioned earlier, i assumed that “Sales. qvd”It is updated with data up to 25 August 2014. To identify the last modification date of “Sales. qvd”, The following code can help:

Here, I loaded the last updated QVD into memory and then I identified the date of the last modification by storing a maximum of “Modification date”. Then, we store this date in a variable “Last_Updated_Date“And drop the table”Sales”. In the above code, I have used Look() function to store maximum modification date. Here is your syntax:

Peek (field name, row number, table name)

This function returns the content of a given field for a specified row from the internal table. FieldName and TableName must be provided as a string and Row must be an integer. 0 denotes the first record, 1 the second and so on. Negative numbers indicate the order from the end of the table. -1 denotes the last record.

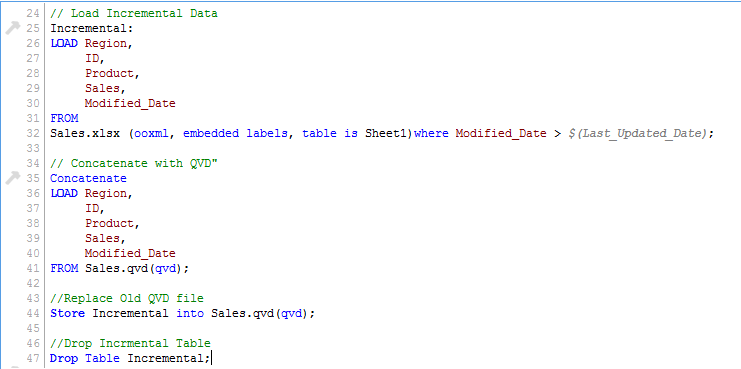

Since we know the date after which the records will be considered new records, we can load incremental records from the dataset (Where clause in the Load statement) and merge them with available QVD (look at the snapshot below).

Now, load updated QVD (Sales), would have incremental records.

As you can see, two records were added from 26 August 2014. But nevertheless, we have also inserted a duplicate record. Now we can say that an INSERT only method does not validate duplicate records because we have not accessed the available records.

What's more, in this method we cannot update the value of existing records.

To sum up, the following are the steps to load only the incremental records in QVD using the INSERT only method:

1) Identify new records and upload them

2) Concatenate this data with a QVD file

3) Replace the old QVD file with a new concatenated table

2. Insert and update method:

As seen in the previous example, we are unable to perform duplicate record check and update existing record. This is where, the Insert and Update method comes to help:

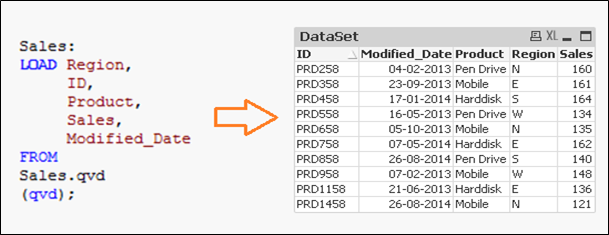

In the data set above (right table), we have a record (ID = PRD1458) to add and another (ID = PRD858) to actualize (sales value of 131 a 140). Now, to update and verify duplicate records, we need a primary key in our dataset.

Suppose id is primary key and, according to the modification date and identification, we should be able to identify and classify new or changed records.

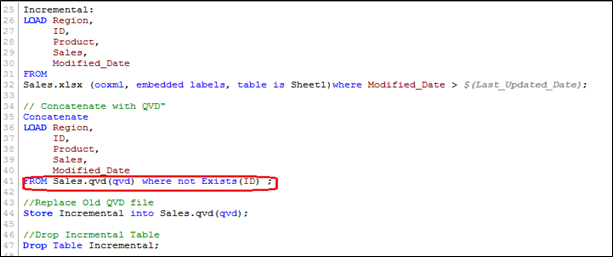

To run this method, follow similar steps to identify the new records as we have done in the INSERT only method and while concatenating the incremental data with an existing one, we apply duplicate record checking or update the value of existing records.

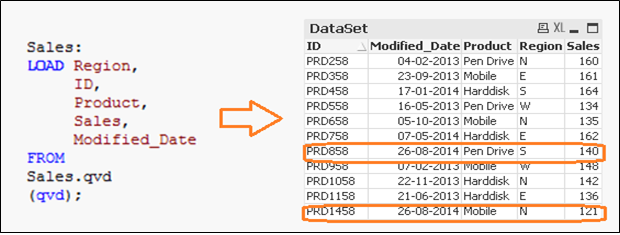

Here, we have loaded only those records where the primary key (ID) is new and using the Exists function () prevents QVD from loading outdated records since the UPDATED version is currently in memory, so existing record values are automatically updated.

Now, we have all the unique records available in QVD with an updated sale value for ID (PRD858).

3. INSERT method, UPDATE AND DELETE:

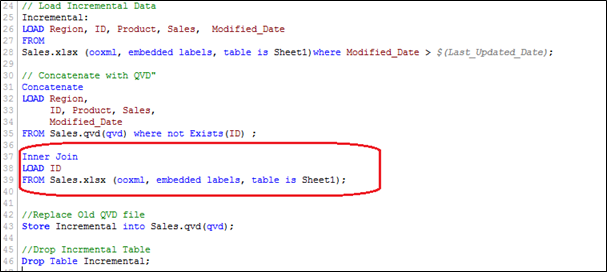

The script for this method is very similar to INSERT & UPDATE, but nevertheless, here we have an extra step needed to delete the deleted records.

We will load the primary keys of all the records of the current dataset and apply an inner join with the concatenated dataset (Ancient + Incremental). The inner join will retain only the common registers and, Thus, will delete unwanted records. Suppose we want to delete a record from (ID PRD1058) in the example above.

Here, we have a data set with the addition of a record (ID PRD1458), modifying a record (ID PRD158) and deleting a record (ID PRD1058).

Final notes:

In this article, we have discussed how incremental loads are better and provide an efficient way to load data compared to FULL load. As good practice, you should have a regular backup of your data because it may be affected or data loss may occur, if there are problems with the database server and the network.

Depending on your industry and the need for the application, you can select which method works for you. Most of the common applications in the BFSI industry are based on Insert and Update. Record deletion is not normally used.

Have you faced a similar situation or do you have another trick to improve the efficiency of Qlikview applications under your hat?? If so, I'd love to hear your thoughts through the comments below., as it also benefits someone else trying to handle a similar situation.