Introduction

Have you ever solved an end-to-end machine learning problem?

Solving a problem with machine learning is not easy. It involves several steps to arrive at an accurate solution. The sequence of steps followed to solve an ML problem is known as the ML pipeline or ML life cycle.

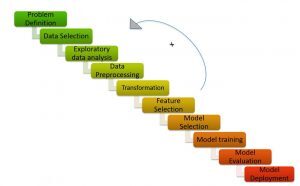

ML Pipeline / ML Cycle (Credits: https://medium.com/analytics-vidhya/machine-learning-development-life-cycle-dfe88c44222e)

As the picture shows, the machine learning pipeline consists of several steps:

Understanding the problem statement, hypothesis generation, exploratory data analysis, data preprocessing, feature engineering, feature selection, model building, model tuning, and model deployment.

I would recommend reading the articles below to get a detailed understanding of the machine learning pipeline:

- Explanation of the machine learning life cycle!

- Steps to complete a machine learning project

The process of solving a machine learning problem takes a lot of time and human effort. Hurray! It no longer has to be a tedious and time-consuming process, thanks to AutoML, which automates much of this work and delivers solutions to machine learning problems quickly.

AutoML is all about automatically building high-performing models with minimal human intervention.

Many AutoML libraries offer low-code or no-code programming.

You've probably heard the terms “low-code” and “no-code”.

- No-code frameworks are simple UIs that allow even non-technical users to build models without writing a single line of code.

- Low-code refers to tools that require only a minimal amount of coding.

Although no-code platforms make it easy to train a machine learning model using a drag-and-drop interface, they are limited in terms of flexibility. Low-code ML, on the other hand, is the sweet spot and middle ground, as it offers both flexibility and easy-to-use code.

In this article, let's understand how to build a text classification model in a few lines of code using PyCaret, a low-code AutoML library.

Table of Contents

- What is PyCaret?

- Why do we need PyCaret?

- Different Approaches to Solving Text Classification in PyCaret

- Topic modeling

- Count Vectorizer

- Case study: text classification with PyCaret

What is PyCaret?

PyCaret is an open-source, low-code machine learning library in Python that lets you go from preparing your data to deploying your model in minutes.

PyCaret (Credits: https://pycaret.org/)

PyCaret is essentially a low-code library that can replace hundreds of lines of scikit-learn code with just 5-6 lines. This increases team productivity and helps the team focus on understanding the problem and engineering features rather than on optimizing the model.

PyCaret (Credits: https://pycaret.org/about/)

PyCaret is built on top of scikit-learn (along with other libraries such as XGBoost and LightGBM). As a result, many of the machine learning algorithms available in scikit-learn are also available in PyCaret. PyCaret can solve problems in classification, regression, clustering, anomaly detection, natural language processing (such as text classification), association rule mining, and time series.

Now, let's look at the reasons for using PyCaret.

Why do we need PyCaret?

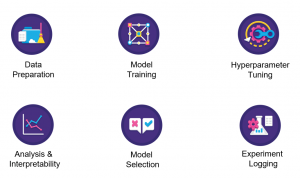

Given a dataset, PyCaret automatically builds a baseline model within 5-6 lines of code. Let's see how PyCaret simplifies each step of the machine learning pipeline.

- Data preparation: PyCaret performs data cleaning and preprocessing with minimal manual intervention.

- Feature engineering: PyCaret automatically creates mathematical features and selects the most important features needed for the model.

- Model building: PyCaret greatly simplifies the modeling part of your project. We can build different models and select the best-performing one with a single line of code.

- Model tuning: PyCaret tunes a model's hyperparameters without requiring you to explicitly pass a hyperparameter search space for each model.

Next, we will focus on solving a text classification problem in PyCaret.

Different Approaches to Solving Text Classification in PyCaret

Let's solve a text classification problem in PyCaret using two different techniques:

- Topic modeling

- Count Vectorizer

Let's look at each approach in detail.

Topic modeling

Topic modeling, as the name implies, is a technique for identifying the different topics present in text data.

Topics are defined as repeating groups of statistically significant tokens (or words) in a corpus. Here, statistical significance refers to how important a word is to a document. In general, words that appear frequently and have higher TF-IDF scores are considered statistically significant.

Topic modeling is an unsupervised technique for automatically finding hidden topics in text data. It can also be viewed as a text-mining approach for finding recurring patterns in text documents.

Topic modeling (Credits: https://medium.com/analytics-vidhya/topic-modeling-using-lda-and-gibbs-sampling-explained-49d49b3d1045)

Some common use cases for topic modeling include:

- Solving text classification and text regression problems

- Creating relevant tags for documents

- Generating insights from customer feedback forms, customer reviews, survey results, etc.

Topic modeling example

Let's say you work for a law firm handling a case for a company where money has been embezzled, and you know there is key information in the emails that circulated within the company.

- You check the emails and find that there are hundreds of thousands of them. What you need to do is find out which ones are about money as opposed to other topics.

- You could label them by hand based on what you read, which would take a long time, or you could use a technique called topic modeling to discover what these topics are and tag all the emails automatically.

As explained above, the goal of topic modeling is to extract different topics from raw text. But what algorithm is used to achieve it?

This brings us to the different algorithms for topic modeling: latent Dirichlet allocation (LDA), non-negative matrix factorization (NMF), and latent semantic analysis (LSA).
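All three algorithms have implementations in scikit-learn, which is a convenient way to compare them outside PyCaret. The sketch below is an illustration, not a description of PyCaret's internals: it fits `LatentDirichletAllocation`, `NMF`, and `TruncatedSVD` (the usual scikit-learn route to LSA) with two topics each on the toy five-document corpus used later in this article. Feeding LDA raw counts and NMF/LSA TF-IDF weights is a common convention, assumed here.

```python
from sklearn.decomposition import NMF, LatentDirichletAllocation, TruncatedSVD
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

corpus = [
    "I want to have fruits for breakfast.",
    "I like to eat almonds, eggs and fruits.",
    "I'll take fruits and cookies with me when I go to the zoo.",
    "The zookeeper feeds the lion very carefully.",
    "Good quality biscuits should be given to your dogs.",
]

# LDA is a probabilistic model of word counts; NMF and LSA are often run on TF-IDF.
counts = CountVectorizer(stop_words="english").fit_transform(corpus)
tfidf = TfidfVectorizer(stop_words="english").fit_transform(corpus)

models = {
    "LDA": (LatentDirichletAllocation(n_components=2, random_state=0), counts),
    "NMF": (NMF(n_components=2, init="nndsvda", max_iter=500, random_state=0), tfidf),
    "LSA": (TruncatedSVD(n_components=2, random_state=0), tfidf),
}

shapes = {}
for name, (model, matrix) in models.items():
    doc_topic = model.fit_transform(matrix)  # one row of topic weights per document
    shapes[name] = doc_topic.shape
    print(name, doc_topic.round(2).tolist())
```

Only LDA's document rows are probability distributions; NMF returns non-negative weights and LSA can return negative values, which matters if you interpret the scores directly.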

I recommend the following resources for a detailed look at these algorithms:

- Part 2: Topic modeling and latent Dirichlet allocation (LDA) using Gensim and Sklearn

- Beginner's Guide to Topic Modeling in Python

- Topic modeling with LDA: a practical introduction

Topic modeling is a two-step process:

- Topic-to-term distribution: find the most important topics in the corpus, each described by a distribution over terms.

- Document-to-topic distribution: assign each document a score for each topic.

Now that we understand topic modeling, let's see how to use it for text classification with the help of an example.

Consider a corpus:

- Document 1: I want to have fruits for breakfast.

- Document 2: I like to eat almonds, eggs and fruits.

- Document 3: I'll take fruits and cookies with me when I go to the zoo.

- Document 4: The zookeeper feeds the lion very carefully.

- Document 5: Good quality biscuits should be given to your dogs.

A topic modeling algorithm such as LDA identifies the most important topics in these documents:

- Topic 1: 30% fruits, 15% eggs, 10% biscuits,… (meal)

- Topic 2: 20% lion, 10% dogs, 5% zoo,… (animals)

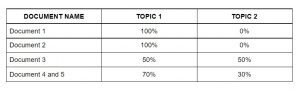

It then assigns each document a score for each topic, as follows.

Assign topics to each document using LDA

This document-topic matrix serves as the feature set for a machine learning algorithm. Next, let's look at bag of words.
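The idea of using the document-topic matrix as features can be sketched with scikit-learn as follows. The labels (0 = food, 1 = animals) are hypothetical and exist only to show the mechanics, and logistic regression is an arbitrary choice of classifier; this is not the article's PyCaret workflow.

```python
import pandas as pd
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression

corpus = [
    "I want to have fruits for breakfast.",
    "I like to eat almonds, eggs and fruits.",
    "I'll take fruits and cookies with me when I go to the zoo.",
    "The zookeeper feeds the lion very carefully.",
    "Good quality biscuits should be given to your dogs.",
]
# Hypothetical labels, for illustration only: 0 = food, 1 = animals.
labels = [0, 0, 0, 1, 1]

counts = CountVectorizer(stop_words="english").fit_transform(corpus)
lda = LatentDirichletAllocation(n_components=2, random_state=0)

# Each document's topic scores become its feature vector.
topic_features = pd.DataFrame(lda.fit_transform(counts), columns=["topic_0", "topic_1"])

# Any standard classifier can then be trained on these features.
clf = LogisticRegression().fit(topic_features, labels)
print(topic_features.round(2))
print(clf.predict(topic_features))
```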

Bag of words

Bag of Words (BOW) is another popular technique for representing text as numbers. It is based on the frequency of words in a document. BOW has numerous applications, such as document classification, topic modeling, and text similarity. In BOW, each document is represented by the frequencies of the words it contains, and the frequency of a word is taken as a measure of its importance in the document.
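A bag of words can be built with nothing but the standard library. This minimal sketch uses a deliberately simple, assumed tokenizer (lowercase letters and apostrophes) and a shared, sorted vocabulary so that every document maps to a vector of the same length.

```python
import re
from collections import Counter


def bag_of_words(documents):
    """Return a shared vocabulary and one word-count vector per document."""
    tokenized = [re.findall(r"[a-z']+", doc.lower()) for doc in documents]
    vocabulary = sorted({tok for toks in tokenized for tok in toks})
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors


vocab, vecs = bag_of_words(["the lion sees the dog", "the dog eats"])
print(vocab)  # ['dog', 'eats', 'lion', 'sees', 'the']
print(vecs)   # [[1, 0, 1, 1, 2], [1, 1, 0, 0, 1]]
```

Word order is discarded entirely; only counts survive, which is exactly what “bag” refers to.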

Bag of words (Credits: Jurafsky et al., 2018)

Follow the article below to get a detailed understanding of Bag Of Words:

In the next section, we will solve the text classification problem in PyCaret.

Case study: text classification with PyCaret

Let's understand the problem statement before solving it.

Understanding the problem statement

Steam is a digital video game distribution service with a vast community of players worldwide. Many players write reviews on the game page and can choose whether or not they would recommend the game to others. However, determining this sentiment automatically from the text could help Steam automatically tag reviews pulled from other internet forums and better judge the popularity of games.

Given the review texts along with the users' recommendations, the task is to predict, for the reviews in the test set, whether the reviewer recommended the game, based on the review text and other information.

In simpler terms, the task at hand is to identify whether a given user review is good or bad. You can download the dataset from here.

Implementation

To classify Steam game reviews using PyCaret, I discuss two different approaches in this article:

- The first approach uses PyCaret's topic modeling.

- The second approach extracts Bag of Words features and uses them for classification in PyCaret.

We will implement the BOW approach now.

Note: This tutorial was implemented in Google Colab, and I recommend running the code there.

PyCaret Installation

You can install PyCaret like any other Python library.

- Install PyCaret on Google Colab or Azure Notebooks

Importing libraries

Loading data
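The loading code is not shown here. As a minimal sketch, assume the training file is a CSV with a `user_review` text column and a `user_suggestion` label column (1 = recommended, 0 = not). These column names and the rows below are hypothetical placeholders, read from an in-memory string so that the snippet runs without the real file.

```python
import io

import pandas as pd

# Hypothetical placeholder rows; with the real data, pass the downloaded file's path instead.
csv_text = """review_id,title,year,user_review,user_suggestion
1,Game A,2016,"Great story, would play again",1
2,Game B,2017,Crashes every five minutes,0
3,Game C,2015,"Fun with friends, great value",1
4,Game D,2018,Not worth the money,0
"""

train = pd.read_csv(io.StringIO(csv_text))

# Keep only the review text and the recommendation label.
train = train[["user_review", "user_suggestion"]]
print(train.shape)
print(train["user_suggestion"].value_counts())
```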

Since PyCaret does not provide a count vectorizer, we import the CountVectorizer class from sklearn.feature_extraction.text.

Next, I initialize a CountVectorizer object called 'tf_vectorizer'.

What exactly does the fit_transform function do with your data?

- “Fit” learns the vocabulary (that is, the features) from the dataset.

- “Transform” actually applies the transformation, turning each document into a vector of word counts.
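The difference between fitting and transforming is easy to see on a few reviews. This sketch creates a `tf_vectorizer` as described above; its parameter values and the review texts are illustrative assumptions, not the article's exact settings.

```python
from sklearn.feature_extraction.text import CountVectorizer

reviews = [
    "great game, great story",
    "boring game and too many bugs",
    "great fun with friends",
]

# Settings are illustrative assumptions, not the article's exact parameters.
tf_vectorizer = CountVectorizer(lowercase=True, max_features=1000)

# fit: learn the vocabulary (the feature columns) from the training reviews.
tf_vectorizer.fit(reviews)
print(sorted(tf_vectorizer.vocabulary_))

# transform: map text onto that fixed vocabulary as word counts.
# "awesome" was never seen during fit, so it is simply ignored.
new_counts = tf_vectorizer.transform(["awesome great game"])
print(new_counts.toarray())

# fit_transform: both steps in one call on the same data (returns a sparse matrix).
train_counts = tf_vectorizer.fit_transform(reviews)
print(train_counts.shape)
```

This is why you call `fit_transform` on the training data but only `transform` on new data: the vocabulary, and therefore the column layout, must stay fixed.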

Let's convert the output of fit_transform into a DataFrame.

Now, concatenate the features and the target column-wise.

Next, we split the dataset into training and test sets.

Now that feature extraction is done, let's use these features to build different models. The next step is to set up the environment in PyCaret.

Setting the environment

- The setup function initializes the training environment and creates the transformation pipeline. It must be called before any other PyCaret function.

- The only mandatory parameters are the data and the target.

Creating models
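The PyCaret code for this step is not shown here. In PyCaret's classification module, this is typically done with `compare_models()` (train and rank many models) or `create_model()` (train a single model, e.g. `create_model('lightgbm')`). As a rough illustration of what such a ranking involves, here is a plain scikit-learn sketch, not PyCaret's API: it cross-validates a few candidate classifiers on synthetic data standing in for the bag-of-words features and sorts them by mean accuracy.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the bag-of-words training data.
X, y = make_classification(n_samples=300, n_features=20, random_state=0)

candidates = {
    "Logistic Regression": LogisticRegression(max_iter=1000),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(random_state=0),
}

# Score every candidate with the same 5-fold cross-validation, then rank them.
scores = {
    name: cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()
    for name, model in candidates.items()
}
results = pd.Series(scores, name="Accuracy").sort_values(ascending=False).to_frame()
print(results)
```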

Model tuning

From the previous output, we can see that the tuned model's metrics are better than those of the base model.
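In PyCaret this step is done with `tune_model()`, which by default searches a predefined hyperparameter space for the given model. The scikit-learn sketch below is a stand-in rather than PyCaret's implementation: it scores a default random forest, then runs `RandomizedSearchCV` over a small, hypothetical grid and compares the cross-validated accuracy of both.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV, cross_val_score

# Synthetic stand-in for the training features.
X, y = make_classification(n_samples=300, n_features=20, random_state=0)

# Baseline: default hyperparameters.
base = RandomForestClassifier(random_state=0)
base_score = cross_val_score(base, X, y, cv=5).mean()

# Hypothetical search space; each key is a RandomForestClassifier parameter.
param_distributions = {
    "n_estimators": [50, 100],
    "max_depth": [None, 5, 10],
    "min_samples_leaf": [1, 2, 4],
}
search = RandomizedSearchCV(base, param_distributions, n_iter=10, cv=5, random_state=0)
search.fit(X, y)

print(f"base CV accuracy:  {base_score:.3f}")
print(f"tuned CV accuracy: {search.best_score_:.3f}", search.best_params_)
```

A search is not guaranteed to beat the defaults, so comparing the two scores, as done above for the PyCaret models, is worthwhile.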

Evaluating the model and making predictions

Here, I predict the labels for our processed dataset using the tuned model, ‘tuned_lightgbm’.
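In PyCaret 2.x, `predict_model(tuned_lightgbm, data=...)` returns the input rows with a `Label` column of predictions (and a `Score` column) appended. Since the PyCaret call itself is not reproduced here, the following scikit-learn sketch only mimics that kind of output on synthetic data: it fits a classifier, appends its own `Label` and `Score` columns (the highest class probability) to the held-out rows, and reports accuracy and F1.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the processed review features.
X, y = make_classification(n_samples=300, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

model = RandomForestClassifier(random_state=0).fit(X_train, y_train)

# Predictions table: the held-out rows plus predicted label and its probability.
predictions = pd.DataFrame(X_test).add_prefix("f_")
predictions["Label"] = model.predict(X_test)
predictions["Score"] = model.predict_proba(X_test).max(axis=1)

print("accuracy:", round(accuracy_score(y_test, predictions["Label"]), 3))
print("F1:      ", round(f1_score(y_test, predictions["Label"]), 3))
print(predictions[["Label", "Score"]].head())
```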

Final notes

PyCaret's approach of training machine learning models in a low-code environment piqued my interest. From your preferred notebook environment, PyCaret helps you go from data preparation to model deployment in seconds. Before using PyCaret, I tried other traditional methods to solve the JanataHack NLP hackathon problem, but the results were not very satisfactory!

In my experience, PyCaret was much faster and more efficient to work with than other open-source machine learning libraries, and it has the advantage of replacing many lines of code with just a few.

Setting aside the first part of my approach, where I apply count vectorization to the dataset, notice that once we move on to setting up and creating models with PyCaret, all transformations, such as one-hot encoding and missing-value imputation, happen automatically behind the scenes, and the result is a DataFrame of predictions, just like the one we got!

I hope I have made my general approach to the hackathon clear.