This article was published as part of the Data Science Blogathon

A recommendation system is one of the main applications of data science. Every consumer internet company requires a recommendation system like Netflix, Youtube, a news service, etc. What you want to show from a wide range of articles is a recommendation system.

Table of Contents

- Introduction to a recommendation system

- Types of recommendation system

- Book recommendation system

- Content-based filtering

- Collaborative filtering

- Hybrid filtering

- System of practical recommendations

- Description of the data set

- Preprocess data

- Perform EDA

- Grouping

- Predictions

- Final notes

What really is the recommendation system?

A recommendation engine is a machine learning class that offers relevant suggestions to the customer. Before the recommendation system, the biggest tendency to buy was to accept a suggestion from friends. But now Google knows what news it will read, Youtube knows what kind of videos you will see based on your search history, watch history or purchase history.

A recommendation system helps an organization build loyal customers and build trust in the desired products and services for those who came to your site.. The current recommendation system is so powerful that it can also handle the new customer who has visited the site for the first time.. They recommend products that are trending or highly rated and can also recommend products that bring the maximum benefit to the company.

Types of recommendation system

A recommendation system is generally built using 3 techniques that are content-based filtering, collaborative filtering and a combination of both.

1) Content-based filtering

The algorithm recommends a product similar to the ones used as gazes. In simple words, in this algorithm, we try to find an element that looks like. For instance, a person likes to see shots of Sachin Tendulkar, so you may also like to see shots of Ricky Ponting because the two videos have similar tags and categories.

Only it looks similar between content and doesn't focus more on the person who is viewing this. Only recommend the product that has the highest score based on past preferences.

2) Collaboration-based filtering

Collaboration-based filtering recommendation systems are based on past interactions of users and target elements. In simple words, We try to find similar customers and offer products based on what they have chosen their appearance. Let's understand with an example. X and Y are two similar users and user X has watched movies A, B y C. AND user Y has watched B movies, C y D, then we will recommend a movie to user Y and a movie D to user X.

YouTube has changed its recommendation system from a content-based filtering technique to one based on collaboration.. If you have ever experienced, there are also videos that have nothing to do with your history, but it also recommends it because the other person similar to you has seen it.

3) Hybrid filtering method

It is basically a combination of the two previous methods. It is too complex a model that recommends a product based on its history and similar users like you.

There are some organizations that use this method such as Facebook that shows news that is important to you and others also in your network and the same is used by Linkedin as well..

Book recommendation system

A book recommendation system is a type of recommendation system in which we have to recommend similar books to the reader based on their interest. The book recommendation system is used by online websites that provide e-books such as Google Play Books, open library, good readings, etc.

In this article, we will use the collaborative based filtering method to create a book recommendation system. You can download the dataset from here

Practical implementation of the recommendation system

Let's get our hands dirty while trying to implement a book recommendation system using collaborative filtering.

Description of the data set

have 3 files in our dataset that are drawn from some books that sell websites.

- Books: first it is books that contain all the information related to the books, as the author, title, the year of publication, etc.

- Users: the second file contains information of the registered user, as user id, Location.

- ratings: grades contain information such as which user has given the grade to which book.

Then, based on these three files, we can build a powerful collaborative filtering model. let us begin.

Load data

let's start by importing libraries and loading datasets. while loading the file we have some problems like.

- The values in the CSV file are separated by semicolons, not by coma.

- There are some lines that don't work like we couldn't import them with pandas and it throws an error because Python is an interpreted language.

- The encoding of a file is in Latin

Then, while loading data, we have to handle these exceptions and after executing the following code, you will get a warning and it will show which lines have an error that we have missed while loading.

import numpy as np

import pandas as pd

books = pd.read_csv("BX-Books.csv", sep=';', encoding="latin-1", error_bad_lines=False)

users = pd.read_csv("BX-Users.csv", sep=';', encoding="latin-1", error_bad_lines=False)

ratings = pd.read_csv("BX-Book-Ratings.csv", sep=';', encoding="latin-1", error_bad_lines=False)

Data pre-processing

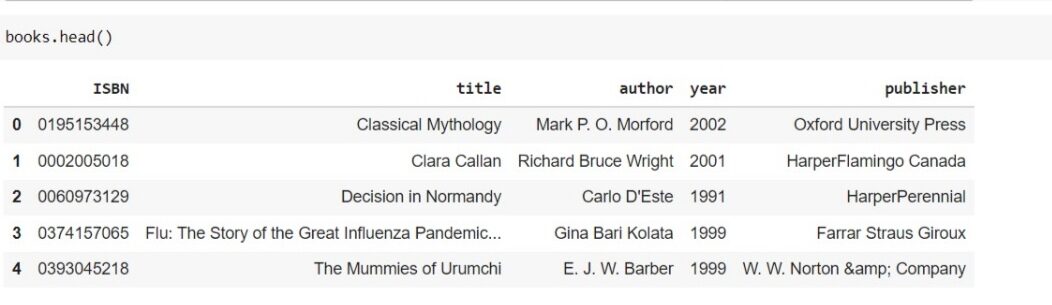

Now, in the book archive, we have some extra columns that are not needed for our task, as the urls of the images. And we will rename the columns of each file as the column name contains space and capital letters for us to correct it to make it easy to use.

books = books[['ISBN', 'Book-Title', 'Book-Author', 'Year-Of-Publication', 'Publisher']]

books.rename(columns = {'Book-Title':'title', 'Book-Author':'author', 'Year-Of-Publication':'year', 'Publisher':'publisher'}, inplace=True)

users.rename(columns = {'User-ID':'user_id', 'Location':'location', 'Age':'age'}, inplace=True)

ratings.rename(columns = {'User-ID':'user_id', 'Book-Rating':'rating'}, inplace=True)

Now, if you see the header of each data frame, you can see something like this.

The data set is reliable and can be considered as a large data set. Have 271360 data books and the total number of users registered on the website is approximately 278000 and have given a rating of close to 11 lakh. Thus, we can say that the data set we have is good and reliable.

Approach to posing a problem

We do not want to find similarities between users or books. we want to do that If there is a user A who has read and liked books x and y, and user B has also liked these two books and now user A has read and liked some book z that is not read by B, so we have to recommend z book to user B. This is what collaborative filtering is.

Then, this is achieved using matrix factorization, we will create a matrix where the columns will be the users and the indexes will be the books and the value will be the grade. As if we had to create a pivot table.

A big flaw with a problem statement in the dataset.

If we take all the books and all the users to model, Don't you think it will create a problem? So what we have to do is reduce the number of users and books because we cannot consider a user who has only registered on the website or who has only read one or two books. In such user, we cannot rely on recommending books to others because we have to extract knowledge from the data. Then, we will limit this number and take a user who has rated at least 200 books and we will also limit the books and take only those books that have received at least 50 user ratings.

Exploratory data analysis

So let's start with the analysis and prepare the dataset as we discussed for modeling. Let's see how many users have given ratings and extract those users who have given more than 200 ratings.

ratings['user_id'].value_counts()

Paso 1) Extract users and ratings from more than 200

when you run the above code, we can see that only 105283 people have given a rating between 278000. Now we will extract the user ids that have granted more than 200 grades and when we have the user ids we will extract the qualifications from just this user ids from the qualification data frame.

x = ratings['user_id'].value_counts() > 200

y = x[x].index #user_ids

print(y.shape)

ratings = ratings[ratings['user_id'].ray(Y)]

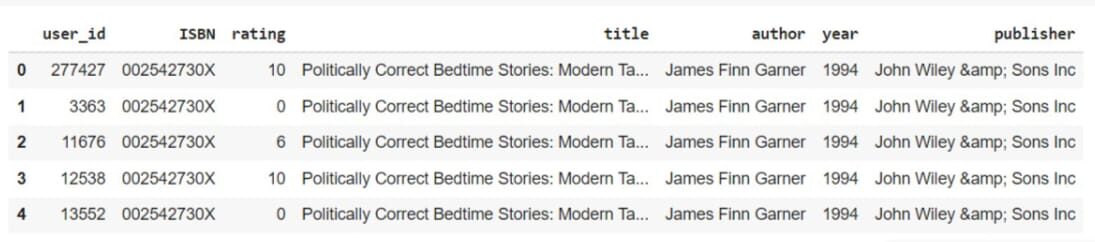

paso-2) Merge grades with books

Then there is 900 users who have given a rating of 5.2 lakh and this is what we want. Now we will merge the ratings with the books based on the ISBN so that we get the rating of each user on each book ID and the user who has not rated that book ID, the value will be zero.

rating_with_books = ratings.merge(books, on='ISBN') rating_with_books.head()

paso-3) Check out books that have received more than 50 ratings.

Now the size of the data frame has decreased and we have 4.8 lakh because when we merge the data frame, we did not have all the identification data of the book. Now we will count the grade of each book, so we will group the data based on title and aggregated data based on rating.

number_rating = rating_with_books.groupby('title')['rating'].count().reset_index()

number_rating.rename(columns= {'rating':'number_of_ratings'}, inplace=True)

final_rating = rating_with_books.merge(number_rating, on='title')

final_rating.shape

final_rating = final_rating[final_rating['number_of_ratings'] >= 50]

final_rating.drop_duplicates(['user_id','title'], inplace=True)

we have to remove duplicate values because if the same user has rated the same book multiple times, will create a problem. Finally, we have a dataset with that user who has rated more than 200 books and books that received more than 50 ratings. the form of the final data frame is 59850 rows and 8 columns.

Paso 4) Create pivot table

As we discussed earlier, we will create a pivot table in which the columns will be the user identifiers, the index will be the title of the book and the value the grades. And the user id that has not rated any book will have value as NAN, so impute it with zero.

book_pivot = final_rating.pivot_table(columns="user_id", index='title', values="rating") book_pivot.fillna(0, inplace=True)

We can see that the more than 11 Users have been removed because their grades were in those books that do not receive more than 50 ratings, so they are removed from the image.

Modeling

We have prepared our dataset to model. We will use the nearest neighbors algorithm, which is the same as the closest K, which is used for grouping based on Euclidean distance.

But here, in the pivot table, we have many zero values and in the grouping, this computing power will increase to calculate the distance from zero values, so we will convert the pivot table to the sparse matrix and then feed it to the model.

from scipy.sparse import csr_matrix book_sparse = csr_matrix(book_pivot)

Now we will train the nearest neighbors algorithm. here we have to specify an algorithm that is rough means to find the distance from each point to all other points.

from sklearn.neighbors import NearestNeighbors model = NearestNeighbors(algorithm='brute') model.fit(book_sparse)

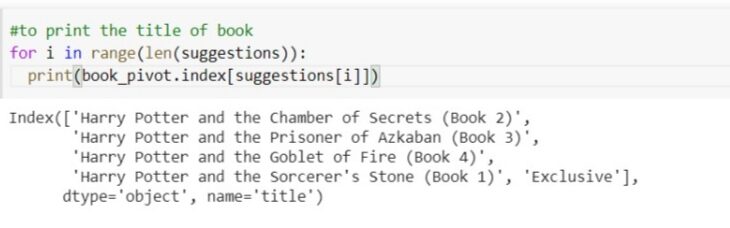

Let's make a prediction and see if it suggests books or not. we will find the closest neighbors to the entry book id and after that, we will print the 5 main books that are closest to those books. It will provide us with the distance and the identification of the book at that distance. let's move on to harry potter, what's wrong with it 237 indices.

distances, suggestions = model.kneighbors(book_pivot.iloc[237, :].values.reshape(1, -1))

let's print all suggested books.

for i in range(len(suggestions)): print(book_pivot.index[suggestions[i]])

Thus, we have successfully built a book recommendation system.

Final notes

¡Viva! We have to build a reliable book recommendation system and you can modify it and turn it into a final project. This is a wonderful unsupervised learning project where we have done a lot of preprocessing and you can further explore the dataset and, if you find something more interesting, share it in the comment box.

Hope it was easy to catch up on each method and follow the article. If you have any query, post it in the comment section below. I will be happy to help you with any questions.

About the Author

Raghav Agrawal

I am pursuing my degree in computer science. I really like data science and big data. I love working with data and learning new technologies. Please, feel free to connect with me on Linkedin.

If you like my article, please, read it also to others. Link

The media shown in this article is not the property of DataPeaker and is used at the author's discretion.