Introduction

Convolutional neural networks (CNN): the concept behind recent advancements and developments in deep learning.

CNNs broke the mold and ascended the throne to become the state-of-the-art computer vision technique. Among the different types of neural networks (others include recurrent neural networks (RNN), long short-term memory (LSTM), artificial neural networks (ANN), etc.), CNNs are easily the most popular.

These convolutional neural network models are ubiquitous in the image data space. They work phenomenally well on computer vision tasks like image classification, object detection, image recognition, etc.

So, where can you practice your CNN skills? You have come to the right place!

There are several datasets that you can leverage to apply convolutional neural networks. Here are three popular ones:

- MNIST

- CIFAR-10

- ImageNet

In this article, we will create image classification models using CNNs on each of these datasets. That's right! We will explore MNIST, CIFAR-10 and ImageNet to understand, in a practical way, how CNNs work for image classification tasks.

You can learn all about convolutional neural networks (CNN) in this free course: Convolutional neural networks (CNN) right from the start

My inspiration for writing this article is to help the community apply theoretical knowledge in a practical way. This is a very important exercise, as it not only helps you develop a deeper understanding of the underlying concepts but also teaches you practical details that can only be learned by implementing them.

If you are new to the world of neural networks, CNNs, and image classification, I recommend that you follow these excellent detailed tutorials:

And if you are looking to learn computer vision and deep learning in depth, you should check out our popular courses:

Table of Contents

- Using CNN to classify handwritten digits in the MNIST dataset

- Image identification from the CIFAR-10 dataset using CNN

- Image categorization of the ImageNet dataset using CNN

- Where to go from here?

Note: I will use Keras to demonstrate image classification using CNNs in this article. Keras is an excellent framework to learn when you are just starting out in deep learning.

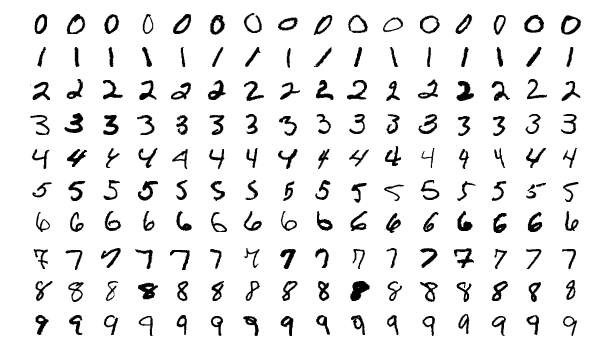

Using CNN to classify handwritten digits in the MNIST dataset

MNIST (Modified National Institute of Standards and Technology) is a well-known computer vision dataset built by Yann LeCun et al. It consists of images of handwritten digits (0-9), divided into a training set of 60,000 images and a test set of 10,000 images, where each image is 28 x 28 pixels.

This dataset is often used to practice any algorithm created for image classification, since it is quite easy to conquer. Therefore, I recommend it as your first dataset if you are just dabbling in the field.

MNIST comes with Keras by default and you can simply load the train and test files using a few lines of code:

from keras.datasets import mnist

# loading the dataset
(X_train, y_train), (X_test, y_test) = mnist.load_data()

# let's print the shape of the dataset
print("X_train shape", X_train.shape)
print("y_train shape", y_train.shape)
print("X_test shape", X_test.shape)
print("y_test shape", y_test.shape)

Here are the shapes of X (features) and y (target) for the training and test data:

X_train shape (60000, 28, 28)
y_train shape (60000,)
X_test shape (10000, 28, 28)
y_test shape (10000,)

Before training a CNN model, let's build a basic fully connected neural network for the dataset. The basic steps to build an image classification model using a neural network are:

- Flatten the dimensions of the input image to 1D (pixels wide x pixels high)

- Normalize image pixel values (divide by 255)

- One-hot encode the categorical target column

- Build a model architecture (sequential) with dense layers

- Train the model and make predictions

Next, we show how you can create a neural network model for MNIST. I have commented the relevant parts of the code for better understanding:
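A minimal sketch of the five steps above, written against the Keras API the article uses. The hidden-layer size (100 units), the Adam optimizer, the batch size and the helper names `preprocess_dense`, `build_dense_model` and `train_mnist_dense` are illustrative assumptions, not the author's exact choices.

```python
from keras.layers import Dense, Input
from keras.models import Sequential
from keras.utils import to_categorical


def preprocess_dense(X, y, num_classes=10):
    """Steps 1-3: flatten, normalize and one-hot encode."""
    X = X.reshape(X.shape[0], -1).astype("float32")  # (n, 28, 28) -> (n, 784)
    X /= 255.0                                       # pixel values now in [0, 1]
    Y = to_categorical(y, num_classes)               # digit label -> 10-d one-hot vector
    return X, Y


def build_dense_model(input_dim=784, num_classes=10, hidden_units=100):
    """Step 4: a Sequential architecture made only of Dense layers."""
    model = Sequential([
        Input(shape=(input_dim,)),
        Dense(hidden_units, activation="relu"),    # hidden layer (size is an assumption)
        Dense(num_classes, activation="softmax"),  # one probability per digit class
    ])
    model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
    return model


def train_mnist_dense(epochs=10, batch_size=128):
    """Step 5: load MNIST, train, and validate on the test split."""
    from keras.datasets import mnist

    (X_train, y_train), (X_test, y_test) = mnist.load_data()
    X_train, Y_train = preprocess_dense(X_train, y_train)
    X_test, Y_test = preprocess_dense(X_test, y_test)
    model = build_dense_model()
    model.fit(X_train, Y_train, batch_size=batch_size, epochs=epochs,
              validation_data=(X_test, Y_test))
    return model
```

Calling `train_mnist_dense()` downloads MNIST through `keras.datasets` and trains for 10 epochs; as in the article, the test split doubles as validation data.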

After running the above code, you will see that we easily get a validation accuracy of around 97%.

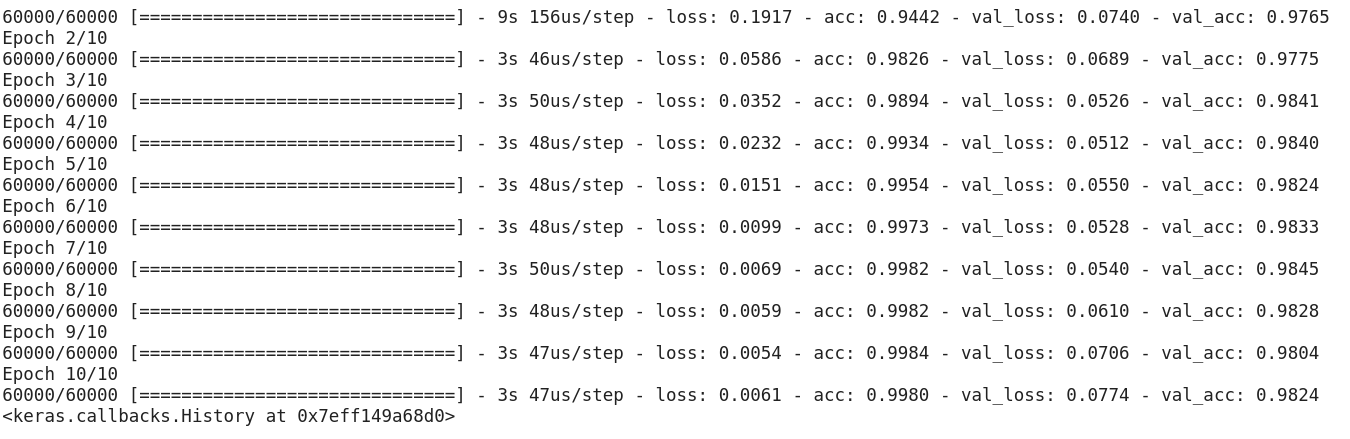

Let's modify the above code to build a CNN model.

One of the main advantages of using a CNN over a plain neural network is that it is not necessary to flatten the input images to 1D, since CNNs can work directly with 2D image data. This helps retain the “spatial” properties of the images.

Here is the full code for the CNN model:
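A minimal sketch of such a CNN, assuming a single `Conv2D` layer with 25 filters of size 3 x 3 followed by max pooling and a small dense head (these sizes and the helper names are hypothetical). The key difference from the fully connected model is the preprocessing: the images keep their 28 x 28 layout and only gain a channel axis, and flattening happens inside the network, after the convolution.

```python
import numpy as np
from keras.layers import Conv2D, Dense, Flatten, Input, MaxPooling2D
from keras.models import Sequential
from keras.utils import to_categorical


def preprocess_cnn(X, y, num_classes=10):
    """Keep the 2D image layout; only add a channel axis and normalize."""
    X = X[..., np.newaxis].astype("float32") / 255.0  # (n, 28, 28) -> (n, 28, 28, 1)
    return X, to_categorical(y, num_classes)


def build_cnn_model(input_shape=(28, 28, 1), num_classes=10):
    model = Sequential([
        Input(shape=input_shape),
        # the single convolutional layer: 25 filters of size 3x3 (hypothetical choice)
        Conv2D(25, kernel_size=(3, 3), activation="relu"),
        MaxPooling2D(pool_size=(2, 2)),  # downsample the 26x26 feature maps to 13x13
        Flatten(),                       # flatten only after spatial features are extracted
        Dense(100, activation="relu"),
        Dense(num_classes, activation="softmax"),
    ])
    model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
    return model
```

Training works the same way as for the dense model: preprocess `X_train`/`X_test` with `preprocess_cnn`, then call `model.fit(X_train, Y_train, batch_size=128, epochs=10, validation_data=(X_test, Y_test))`.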

While our maximum validation accuracy with a simple neural network model was around 97%, the CNN model gets more than 98% with a single convolutional layer.

You can go ahead and add more Conv2D layers, and also play with the hyperparameters of the CNN model.

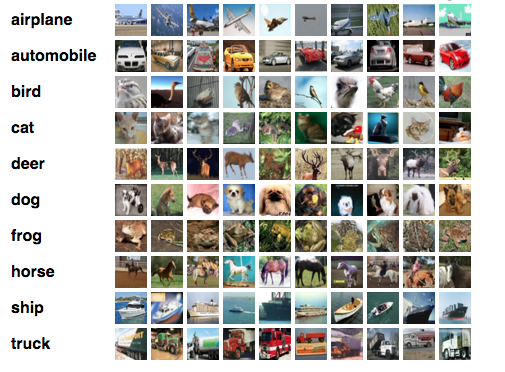

Image identification from the CIFAR-10 dataset using CNN

MNIST is a beginner-friendly dataset in computer vision. It's easy to get a validation score of more than 90% with a CNN model. But what if you are beyond a beginner and need something challenging to put your concepts into practice?

That's where the CIFAR-10 dataset comes into the picture!

Here's how the developers behind CIFAR (Canadian Institute for Advanced Research) describe the data set:

The CIFAR-10 dataset consists of 60,000 32x32 colour images in 10 classes, with 6,000 images per class. There are 50,000 training images and 10,000 test images.

The important points that distinguish this data set from the MNIST are:

- Images in CIFAR-10 are in color, whereas MNIST images are grayscale

- Each image is 32 x 32 pixels

- 50,000 training images and 10,000 test images

These pictures are taken under different lighting conditions and from different angles, and since they are colored images, you will see many variations in the color of similar objects (for instance, the color of ocean water). If you use the simple CNN architecture from the MNIST example above, you will get a low validation accuracy of around 60%.

That's a key reason why I recommend CIFAR-10 as a good dataset to practice your CNN hyperparameter tuning skills. The good thing is that, like MNIST, CIFAR-10 is also readily available in Keras.

You can simply load the dataset using the following code:

from keras.datasets import cifar10

# loading the dataset
(X_train, y_train), (X_test, y_test) = cifar10.load_data()

Next, we show how you can build a decent CNN model (around 78-80% validation accuracy) for CIFAR-10. Notice how the input shape has been updated from (28, 28, 1) to (32, 32, 3) to match the size of the images:
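A sketch of a deeper CIFAR-10 model that follows the kinds of changes listed next (more `Conv2D` layers, more filters, `Dropout`, extra dense layers). The filter counts, dropout rates, layer widths and helper names are assumptions chosen for illustration and are not guaranteed to reproduce the 78-80% figure.

```python
from keras.layers import Conv2D, Dense, Dropout, Flatten, Input, MaxPooling2D
from keras.models import Sequential
from keras.utils import to_categorical


def preprocess_cifar10(X, y, num_classes=10):
    """Scale RGB pixels to [0, 1]; CIFAR-10 labels come as shape (n, 1), so flatten them first."""
    return X.astype("float32") / 255.0, to_categorical(y.ravel(), num_classes)


def build_cifar10_model(input_shape=(32, 32, 3), num_classes=10):
    model = Sequential([
        Input(shape=input_shape),  # (32, 32, 3) instead of MNIST's (28, 28, 1)
        # more Conv2D layers than the MNIST model, with more filters
        Conv2D(50, (3, 3), padding="same", activation="relu"),
        Conv2D(75, (3, 3), padding="same", activation="relu"),
        MaxPooling2D((2, 2)),
        Dropout(0.25),             # dropout for regularization
        Conv2D(125, (3, 3), padding="same", activation="relu"),
        MaxPooling2D((2, 2)),
        Dropout(0.25),
        Flatten(),
        # more dense layers
        Dense(500, activation="relu"),
        Dropout(0.4),
        Dense(250, activation="relu"),
        Dropout(0.3),
        Dense(num_classes, activation="softmax"),
    ])
    model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
    return model
```

Dropout layers are only active during training, so they do not affect `model.predict` or the validation scores reported by `model.fit`.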

This is what I changed in the model:

- Increased the number of Conv2D layers to build a deeper model

- More filters to learn more features

- Dropout added for regularization

- More dense layers added

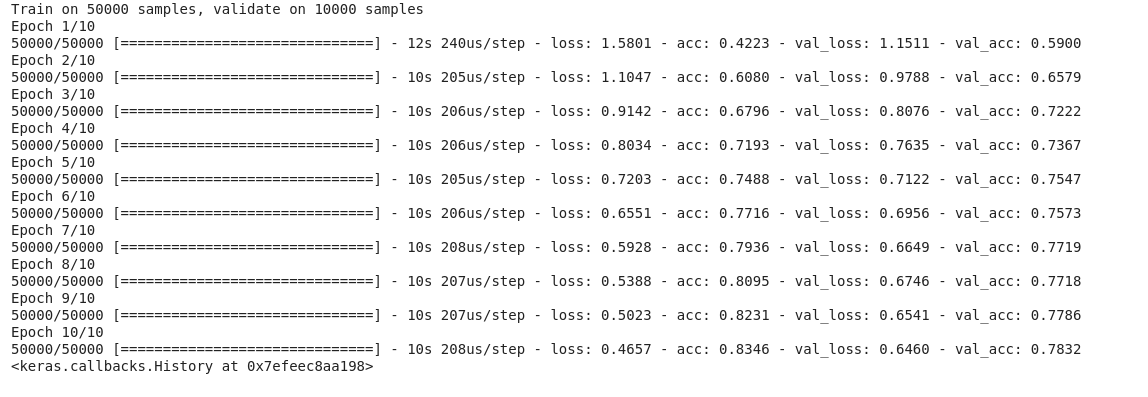

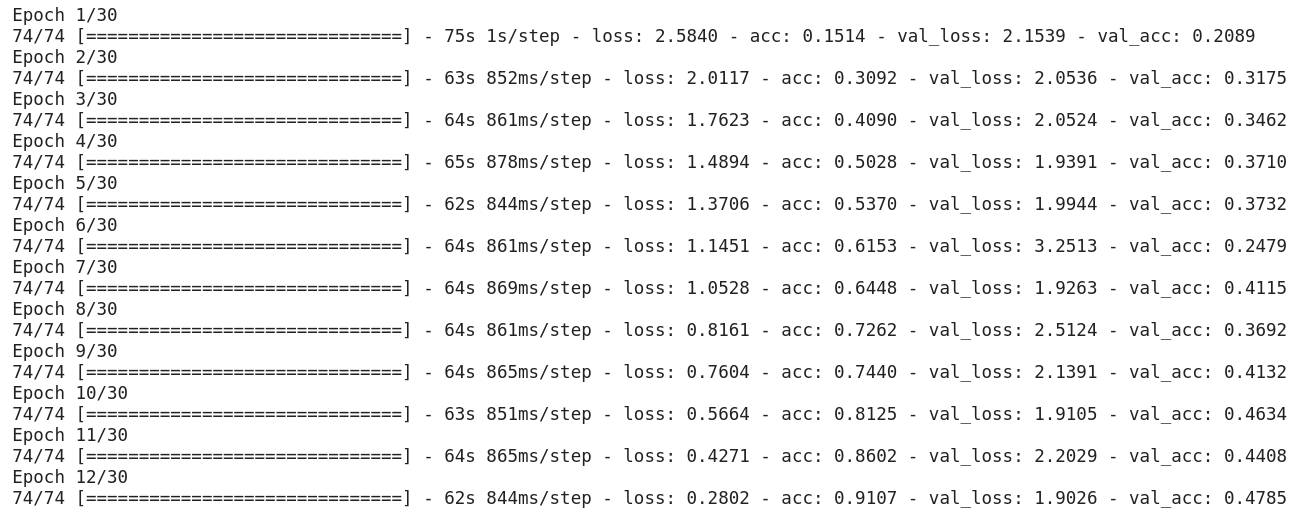

Training and validation accuracy across all epochs:

You can easily outperform this by tuning the model above. Once you have mastered CIFAR-10, there is also CIFAR-100, available in Keras, for further practice. Since it has 100 classes, it won't be an easy task!

Image categorization of the ImageNet dataset using CNN

Now that you have mastered MNIST and CIFAR-10, let's take this problem to a higher level. Here, we'll take a look at the famous ImageNet dataset.

ImageNet is the main database behind the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). It is like the Olympics of computer vision. This is the competition that first made CNNs popular, and every year top research teams from industry and academia compete with their best algorithms on computer vision tasks.

About the ImageNet dataset

The ImageNet dataset has more than 14 million images, hand-labeled across more than 20,000 categories.

What's more, unlike the MNIST and CIFAR-10 datasets we have already discussed, ImageNet images are of decent resolution (commonly resized to 224 x 224 for training), and that's what challenges us: 14 million images, each of 224 by 224 pixels. Processing a dataset of this size requires a great deal of computing power in terms of CPU, GPU, and RAM.
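A quick back-of-the-envelope calculation makes this concrete. It assumes every image is held uncompressed as a 224 x 224 x 3 array (an assumption for illustration; the JPEG files on disk are much smaller); `raw_dataset_bytes` is a hypothetical helper.

```python
def raw_dataset_bytes(num_images, height=224, width=224, channels=3, bytes_per_value=1):
    """Uncompressed size in bytes of num_images arrays of shape (height, width, channels)."""
    return num_images * height * width * channels * bytes_per_value


# 1 byte per value for uint8 pixels, 4 bytes per value once converted to float32
as_uint8 = raw_dataset_bytes(14_000_000)
as_float32 = raw_dataset_bytes(14_000_000, bytes_per_value=4)
print(f"uint8:   {as_uint8 / 1e12:.2f} TB")    # uint8:   2.11 TB
print(f"float32: {as_float32 / 1e12:.2f} TB")  # float32: 8.43 TB
```

Even a small fraction of that does not fit in the memory of a typical laptop, so the images have to be streamed from disk in batches.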

The catch: that might be too much for an everyday laptop. So what is the workaround? How can an enthusiast work with the ImageNet dataset?

That's where the Imagenette dataset from Fast.ai comes in.

Imagenette is a dataset drawn from ImageNet's large collection of images. The reason behind releasing Imagenette is that researchers and students can practice on ImageNet-level images without needing as many computing resources.

In Jeremy Howard's own words:

“I (Jeremy Howard, that is) mainly made Imagenette because I wanted a small vision dataset I could use to quickly see if my algorithm ideas might have any chance of working. They usually don't, but testing them on ImageNet takes me a long time to find that out, especially since I'm interested in algorithms that perform particularly well at the end of training.

But I think this can also be a useful dataset for others.”

And that is what we will also use to practice!

1. Download the Imagenette dataset

This is how you can get the dataset (commands for your terminal):

$ wget https://s3.amazonaws.com/fast-ai-imageclas/imagenette2.tgz
$ tar -xf imagenette2.tgz

Once you have downloaded and extracted the dataset, you will notice two folders: “train” and “val”. These contain the training and validation sets, respectively. Inside each folder, there is a separate folder for each class. Here is the mapping of the classes:

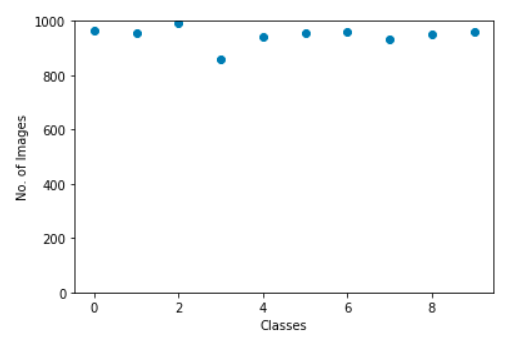

These classes have the same IDs as in the original ImageNet dataset. Each class has approximately 1,000 images, so overall it is a balanced dataset.
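To check the balance on your own copy, a small standard-library script can count the image files in each class folder. It assumes the archive was extracted to `imagenette2/` with the `train`/`val` layout described above; `count_images_per_class` and `imbalance_ratio` are hypothetical helper names.

```python
from pathlib import Path

# ImageNet-derived files typically use an upper-case ".JPEG" extension, so compare case-insensitively
IMAGE_EXTENSIONS = {".jpeg", ".jpg", ".png"}


def count_images_per_class(split_dir):
    """Map each class folder name in split_dir to the number of image files it contains."""
    counts = {}
    for class_dir in sorted(p for p in Path(split_dir).iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1 for f in class_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
        )
    return counts


def imbalance_ratio(counts):
    """Largest class count divided by smallest; 1.0 means perfectly balanced."""
    return max(counts.values()) / min(counts.values())
```

For example, `counts = count_images_per_class("imagenette2/train")` followed by `imbalance_ratio(counts)`; a ratio close to 1.0 confirms that no class dominates.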

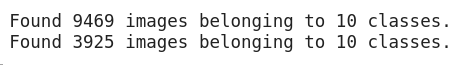

2. Loading images with ImageDataGenerator

Keras has a useful feature for loading large image datasets (like the one we have here) without exhausting RAM, by reading the images in small batches. ImageDataGenerator in combination with fit_generator provides this functionality:
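A minimal sketch of the two generators, assuming images are resized to 224 x 224, pixel values are rescaled to [0, 1], and a batch size of 32 (all illustrative choices; `make_generators` is a hypothetical helper). `flow_from_directory` reads files from disk one batch at a time. In recent TensorFlow versions `fit_generator` is deprecated and `model.fit` accepts these generators directly. Pass `shuffle_train=False` when the training generator is only used for feature extraction, so that the prediction order matches the label order.

```python
try:
    from keras.preprocessing.image import ImageDataGenerator  # Keras 2.x, as used in this article
except ImportError:
    # fallback for installs where the standalone keras package no longer exports it
    import tensorflow as tf
    ImageDataGenerator = tf.keras.preprocessing.image.ImageDataGenerator


def make_generators(train_dir, val_dir, target_size=(224, 224), batch_size=32, shuffle_train=True):
    """Return (train, val) iterators that load images from disk batch by batch.

    Class labels and the number of classes are inferred from the sub-folder names.
    """
    datagen = ImageDataGenerator(rescale=1.0 / 255)  # scale pixel values to [0, 1]
    train = datagen.flow_from_directory(
        train_dir, target_size=target_size, batch_size=batch_size,
        class_mode="categorical", shuffle=shuffle_train,
    )
    val = datagen.flow_from_directory(
        val_dir, target_size=target_size, batch_size=batch_size,
        class_mode="categorical", shuffle=False,  # fixed order for evaluation
    )
    return train, val
```

Usage: `train, val = make_generators("imagenette2/train", "imagenette2/val")`.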

The ImageDataGenerator itself deduces the class labels and number of classes from the folder names.

3. Creating a basic CNN model for image classification

Let's build a basic CNN model for our Imagenette dataset (for the purpose of classifying images):
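A sketch of such a model, assuming four convolution/pooling blocks with 32 to 128 filters, dropout, and a 512-unit dense layer (all hypothetical choices, as is the name `build_imagenette_cnn`). It assumes images resized to 224 x 224 x 3 and would be trained directly on the ImageDataGenerator iterators, e.g. `model.fit(train, validation_data=val, epochs=10)` (or `fit_generator` in older Keras).

```python
from keras.layers import Conv2D, Dense, Dropout, Flatten, Input, MaxPooling2D
from keras.models import Sequential


def build_imagenette_cnn(input_shape=(224, 224, 3), num_classes=10):
    """A deeper, from-scratch CNN: four conv/pool blocks plus a dense head."""
    model = Sequential([Input(shape=input_shape)])
    for filters in (32, 64, 128, 128):  # filter counts are illustrative assumptions
        model.add(Conv2D(filters, (3, 3), activation="relu"))
        model.add(MaxPooling2D((2, 2)))
    model.add(Flatten())
    model.add(Dropout(0.5))                              # regularize the large dense layer
    model.add(Dense(512, activation="relu"))
    model.add(Dense(num_classes, activation="softmax"))  # 10 Imagenette classes
    model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
    return model
```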

When we look at the validation accuracy of this model, we realize that, although it is a deeper architecture than the ones we have used so far, we only get a validation accuracy of around 40-50%.

There can be many reasons for this: our model may not be complex enough to learn the underlying patterns in the images, or the training data may be too small for the model to generalize accurately across classes.

Enter transfer learning.

4. Using transfer learning (VGG16) to improve accuracy

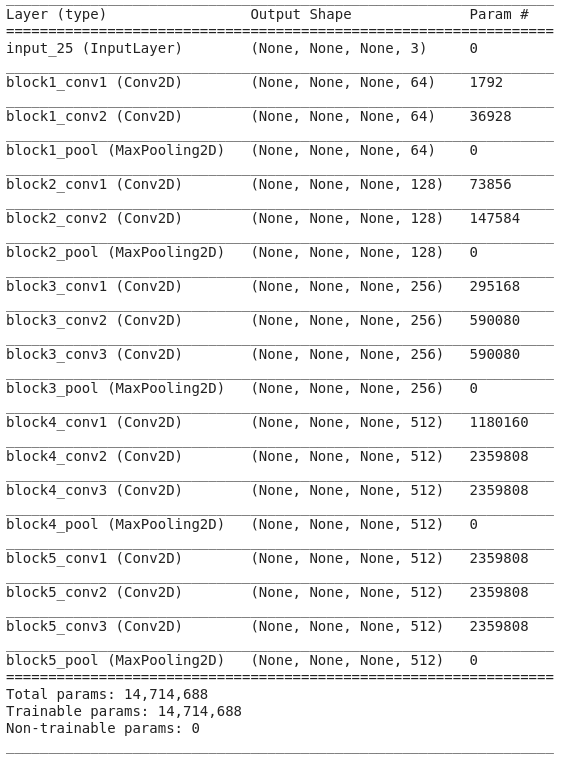

VGG16 is a CNN architecture that was the first runner-up in the classification task of the 2014 ImageNet Challenge (ILSVRC). It was designed by the Visual Geometry Group at Oxford and has 16 weight layers in total, 13 of which are convolutional. We will load the pretrained weights of this model so that we can reuse the features it has learned for our task.
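A quick way to verify the layer count is to build the architecture with `weights=None` (so nothing is downloaded) and count its convolutional layers. With `include_top=False`, the three fully connected layers of the classifier head are dropped, so only the 13 convolutional weight layers remain. `count_layers` is a hypothetical helper.

```python
from keras.applications import VGG16
from keras.layers import Conv2D, MaxPooling2D


def count_layers(model, layer_type):
    """Number of layers in `model` that are instances of `layer_type`."""
    return sum(isinstance(layer, layer_type) for layer in model.layers)


# weights=None builds the architecture with random weights, so nothing is downloaded;
# include_top=False drops the 3 fully connected layers of the classifier head.
backbone = VGG16(include_top=False, weights=None, input_shape=(224, 224, 3))
print("Conv2D layers:", count_layers(backbone, Conv2D))              # Conv2D layers: 13
print("MaxPooling2D layers:", count_layers(backbone, MaxPooling2D))  # MaxPooling2D layers: 5
```

The five pooling layers each halve the spatial size, which is why a 224 x 224 input ends up as 7 x 7 feature maps.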

Downloading the VGG16 weights

from keras.applications import VGG16

# include_top=False removes the fully connected classifier layers at the top (including the softmax output)
pretrained_model = VGG16(include_top=False, weights="imagenet")
pretrained_model.summary()

Here is the architecture of the model:

Generating features from VGG16

Let's extract useful features that VGG16 already knows from the images in our dataset:

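from keras.utils import to_categorical

# extract train and val features
# (train and val must be created with shuffle=False so that the order of the
#  predictions matches the order of train.labels and val.labels)
vgg_features_train = pretrained_model.predict(train)
vgg_features_val = pretrained_model.predict(val)

# OHE target column
train_target = to_categorical(train.labels)
val_target = to_categorical(val.labels)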
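With the features extracted, a small classifier can be trained on top of them. This sketch assumes 224 x 224 inputs, for which the VGG16 convolutional base outputs feature maps of shape (7, 7, 512); the layer sizes, dropout rate and the name `build_feature_classifier` are illustrative assumptions.

```python
from keras.layers import Dense, Dropout, Flatten, Input
from keras.models import Sequential


def build_feature_classifier(feature_shape=(7, 7, 512), num_classes=10):
    """Small dense head trained on precomputed VGG16 features (VGG16 itself is not updated)."""
    model = Sequential([
        Input(shape=feature_shape),  # VGG16 output for 224x224 inputs: 224 / 2**5 = 7
        Flatten(),
        Dense(100, activation="relu"),
        Dropout(0.5),
        Dense(num_classes, activation="softmax"),
    ])
    model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
    return model
```

Usage: `model = build_feature_classifier(vgg_features_train.shape[1:])`, then `model.fit(vgg_features_train, train_target, epochs=10, validation_data=(vgg_features_val, val_target))`. Because only this small head is trained while the VGG16 features stay fixed, each epoch is cheap and the model converges quickly.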

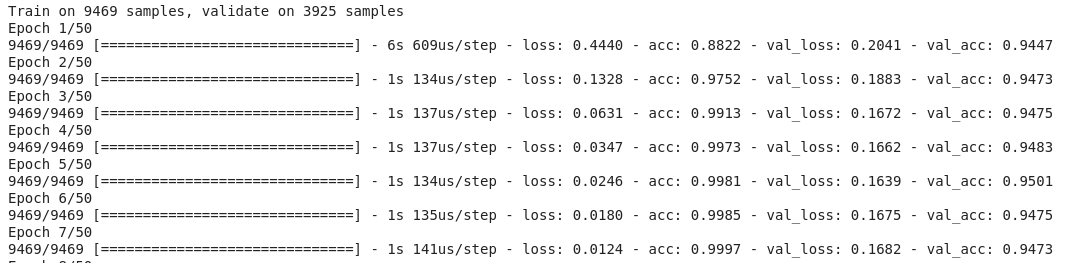

Notice how quickly the model begins to converge. In just 10 epochs, it reaches a validation accuracy of more than 94%. Isn't that amazing?

In case you have mastered the Imagenette dataset, fastai has also released two variants that include classes that you will find difficult to classify:

- Imagewoof: 10 kinds of dog breeds, a more difficult problem to classify

- Imagen Net (“wang”): A combination of Imagenette and Imagewoof and a couple of tricks that make the problem more complicated

Where to go from here?

In addition to the datasets mentioned above, you can also use the following datasets to build computer vision algorithms. In fact, consider it a challenge: can you apply your knowledge of CNNs to beat the benchmark scores on these datasets?

- Fashion MNIST: an MNIST-like dataset for clothing and apparel. Instead of digits, the images show a type of garment (T-shirt, trousers, bag, etc.)

- Caltech 101: another challenging dataset I found for image classification

I also suggest that, before opting for transfer learning, you try improving your basic CNN models. You can look at architectures such as VGG16 and ZFNet for clues on hyperparameter tuning, and you can use the same ImageDataGenerator to augment your images and increase the size of the dataset.
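A sketch of an augmenting ImageDataGenerator; the specific ranges below are illustrative assumptions, not tuned values, and `make_augmenting_generator` is a hypothetical helper. Augmentation should be applied to the training data only, so the validation generator keeps just the rescaling.

```python
try:
    from keras.preprocessing.image import ImageDataGenerator  # Keras 2.x, as used in this article
except ImportError:
    # fallback for installs where the standalone keras package no longer exports it
    import tensorflow as tf
    ImageDataGenerator = tf.keras.preprocessing.image.ImageDataGenerator


def make_augmenting_generator():
    """Training-time generator that applies random transformations to each batch."""
    return ImageDataGenerator(
        rescale=1.0 / 255,
        rotation_range=15,       # random rotations of up to +/-15 degrees
        width_shift_range=0.1,   # random horizontal shifts of up to 10% of the width
        height_shift_range=0.1,  # random vertical shifts of up to 10% of the height
        zoom_range=0.1,          # random zoom in or out by up to 10%
        horizontal_flip=True,    # random left-right mirroring
    )
```

For example, `make_augmenting_generator().flow_from_directory("imagenette2/train", target_size=(224, 224), batch_size=32)`. The transformed images are generated on the fly, so each epoch sees slightly different variants instead of a physically enlarged copy of the dataset on disk.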