The world of object detection

I love working in the deep learning space. Frankly, it is a vast field with a plethora of techniques and frameworks to analyze and learn. And the real thrill of working with computer vision and deep learning comes when I see real-world applications like facial recognition and ball tracking in cricket, among other things.

And one of my favorite concepts in machine vision and deep learning is object detection. The ability to build a model that can go through images and tell me what objects are present: it's a priceless feeling!

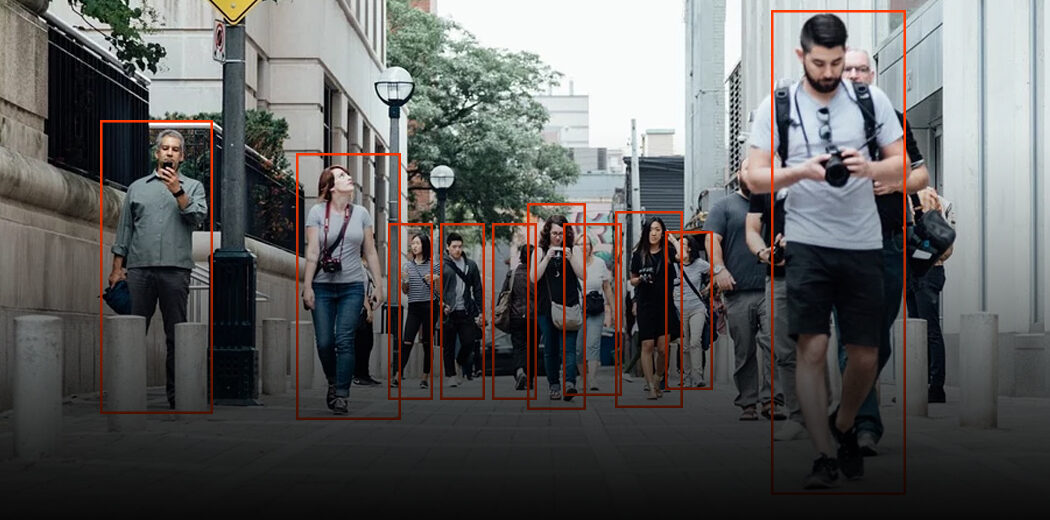

When humans look at an image, we recognize the objects of interest in a matter of seconds. This is not the case for machines. Object detection is the computer vision problem of locating instances of objects in an image.

Here's the good news: object detection applications are easier to develop than ever. Today's approaches focus on end-to-end pipelines, which have significantly improved performance and also made real-time use cases possible.

In this article, I'll walk you through how to build an object detection model using the popular TensorFlow API. If you are a newcomer to deep learning, computer vision and the world of object detection, I recommend that you consult the following resources:

Table of Contents

- A general framework for object detection

- What is an API? Why do we need an API?

- TensorFlow Object Detection API

A general framework for object detection

Normally, we follow three steps when creating an object detection framework:

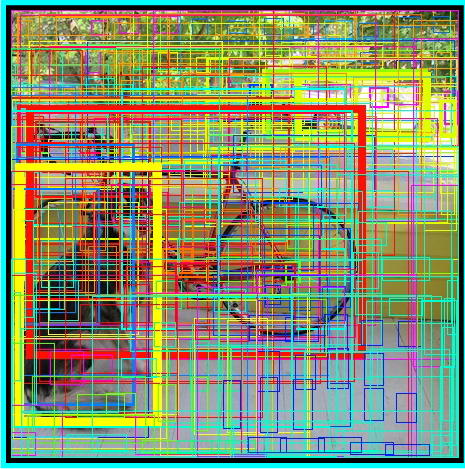

- First, a deep learning algorithm or model is used to generate a large set of bounding boxes spanning the entire image (the object localizer component)

- Then, visual features are extracted for each of the bounding boxes. Based on these features, the model evaluates whether any objects are present in each box and, if so, which ones (the object classification component)

- In the final post-processing step, overlapping boxes that cover the same object are reduced to a single bounding box by keeping the highest-scoring one (that is, non-maximum suppression)
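The post-processing step can be sketched in plain Python. This is a minimal greedy non-maximum suppression, assuming boxes are given as (x1, y1, x2, y2) corner coordinates and an IoU (intersection over union) threshold of 0.5; both are illustrative choices, not values taken from the TensorFlow API.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Two heavily overlapping boxes around one object, plus one separate box.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.82, so it is suppressed; the third box does not overlap anything and survives.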

That's it, you have your first object detection framework!

What is an API? Why do we need an API?

API stands for Application Programming Interface. An API provides developers with a set of common operations so they don't have to write code from scratch.

Think of an API like a restaurant menu that provides a list of dishes along with a description of each dish. When we specify which dish we want, the restaurant does the work and provides us with finished dishes. We don't know exactly how the restaurant prepares that food, and it really is not necessary.

In short, APIs save a lot of time. They also offer convenience to users in many cases. Think about it: Facebook users (including me!) appreciate the ability to log into many apps and sites using their Facebook ID. How do you think this works? Using the Facebook APIs, of course!

In this article, we will look at the TensorFlow API developed for the object detection task.

TensorFlow Object Detection API

The TensorFlow Object Detection API is a framework for creating deep learning networks that solve object detection problems.

The framework already includes pretrained models, which it refers to as the Model Zoo. This is a collection of models pretrained on the COCO dataset, the KITTI dataset, and the Open Images dataset. These models can be used directly for inference if we are only interested in the categories covered by these datasets.

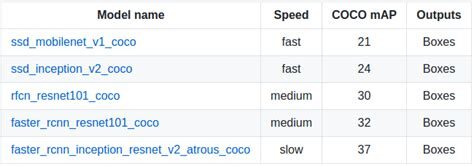

They are also useful for initializing your models when training on a new dataset. The various architectures used in the pretrained models are described in this table:

MobileNet-SSD

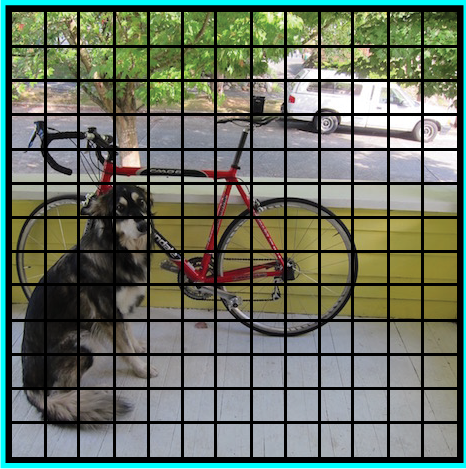

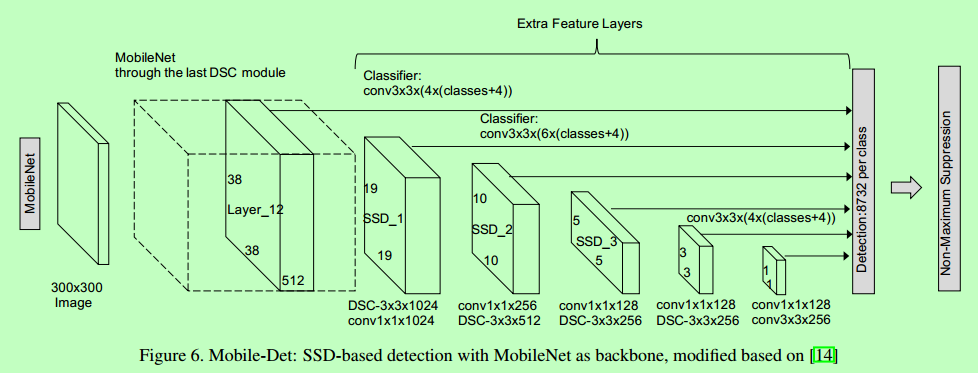

The SSD architecture is a single convolutional network that learns to predict bounding box locations and to classify these locations in a single pass. Therefore, SSD can be trained end to end. The SSD network consists of a base architecture (MobileNet in this case) followed by several convolution layers.

SSD operates on feature maps to detect the location of bounding boxes. Remember: a feature map has size Df × Df × M. For each location on the feature map, k bounding boxes are predicted. Each bounding box carries the following information:

- 4 location values (cx, cy, w, h): the box center coordinates, width, and height

- C class probabilities (c1, c2, …, cC), one per class
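Putting these numbers together tells us how much the SSD head predicts per feature map: every one of the Df × Df locations predicts k boxes, and each box needs 4 location values plus one score per class. The SSD paper describes this as (C + 4)·k filters applied at each location. The small helper below just does that arithmetic; the feature-map size, k, and class count in the example are hypothetical.

```python
def ssd_outputs_per_feature_map(df, k, num_classes):
    """Number of values an SSD head predicts for one Df x Df feature map.

    Each of the Df * Df locations predicts k boxes, and each box carries
    4 location values (cx, cy, w, h) plus one score per class.
    """
    values_per_box = 4 + num_classes
    return df * df * k * values_per_box


# Hypothetical example: a 19 x 19 feature map, k = 4 boxes, C = 21 classes.
print(ssd_outputs_per_feature_map(19, 4, 21))  # 36100
```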

SSD does not predict the shape of the box, only where the box is. Each of the k bounding boxes has a predetermined default shape, and these shapes are set before the actual training. For instance, in the previous figure there are 4 boxes, which means k = 4.
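Because the default shapes are fixed in advance, they can be generated before training. The sketch below is a simplified version of the scheme described in the original SSD paper: box centers sit at the center of each feature-map cell, and for a scale s and aspect ratio a the box has width s·√a and height s/√a, so all boxes at one scale share the same area. The scale and the four aspect ratios are hypothetical values chosen only to give k = 4.

```python
import math


def default_boxes(df, scale, aspect_ratios):
    """Generate k = len(aspect_ratios) default boxes per feature-map cell.

    Boxes are (cx, cy, w, h) in normalized [0, 1] image coordinates,
    centered on each cell of a df x df feature map.
    """
    boxes = []
    for row in range(df):
        for col in range(df):
            cx = (col + 0.5) / df
            cy = (row + 0.5) / df
            for ratio in aspect_ratios:
                # Wider boxes for ratio > 1, taller boxes for ratio < 1,
                # all with the same area scale ** 2.
                w = scale * math.sqrt(ratio)
                h = scale / math.sqrt(ratio)
                boxes.append((cx, cy, w, h))
    return boxes


# Hypothetical settings: a 2 x 2 feature map, scale 0.2, k = 4 aspect ratios.
boxes = default_boxes(2, 0.2, [1.0, 2.0, 0.5, 3.0])
print(len(boxes))  # 16, i.e. 2 * 2 * 4
print(boxes[0])    # (0.25, 0.25, 0.2, 0.2)
```

During training, each ground-truth box is matched to default boxes like these, and the network only has to learn where (and how much) to adjust them.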

Loss in MobileNet-SSD

With the final set of matched boxes, we can calculate the loss as follows:

$$L = \frac{1}{N}\left(L_{\text{class}} + L_{\text{box}}\right)$$

Here, N is the total number of matched boxes. $L_{\text{class}}$ is the softmax loss for classification, and $L_{\text{box}}$ is the smooth L1 loss representing the error of the matched boxes. The smooth L1 loss is a modification of the L1 loss that is more robust to outliers. If N is 0, the loss is also set to 0.
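The formula can be written out directly. In this minimal sketch the classification term is passed in as an already-summed number (computing the softmax loss itself is out of scope here), and the box term is the smooth L1 loss summed over the coordinate errors of the matched boxes. The function names and the example values are hypothetical.

```python
def smooth_l1(x):
    """Smooth L1: quadratic near zero, linear (like L1) for |x| >= 1."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def ssd_loss(class_loss, box_errors, num_matched):
    """L = (1 / N) * (L_class + L_box), with L = 0 when N = 0.

    class_loss: summed softmax (cross-entropy) loss, computed elsewhere.
    box_errors: per-coordinate differences between predicted and target
                box offsets for the matched boxes.
    """
    if num_matched == 0:
        return 0.0
    box_loss = sum(smooth_l1(e) for e in box_errors)
    return (class_loss + box_loss) / num_matched


print(smooth_l1(0.5), smooth_l1(3.0))  # 0.125 2.5
print(ssd_loss(2.0, [0.5, -3.0], 2))   # (2.0 + 0.125 + 2.5) / 2 = 2.3125
```

The outlier robustness shows up in the second error: a plain squared loss would charge 4.5 for an error of 3, while smooth L1 charges only 2.5, so one badly placed box cannot dominate the gradient.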

MobileNet

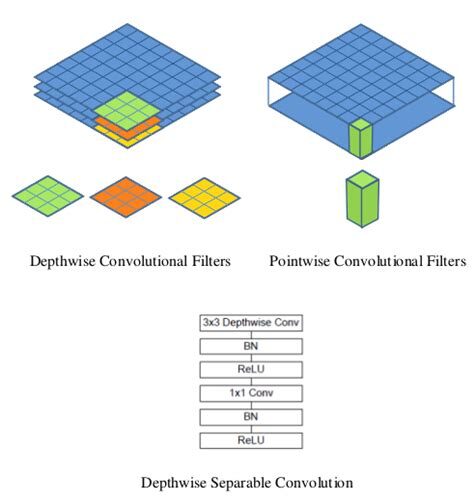

The MobileNet model is based on depthwise separable convolutions, which are a form of factorized convolutions. These factorize a standard convolution into a depthwise convolution and a 1 × 1 convolution called a pointwise convolution.

For MobileNets, the depthwise convolution applies a single filter to each input channel. The pointwise convolution then applies a 1 × 1 convolution to combine the outputs of the depthwise convolution.

A standard convolution both filters and combines inputs into a new set of outputs in one step. A depthwise separable convolution splits this into two layers: a separate layer for filtering and a separate layer for combining. This factorization has the effect of drastically reducing computation and model size.
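To see how large the saving is, we can count multiply-adds using the cost formulas from the MobileNet paper, assuming stride 1 and an output feature map of Df × Df: a standard Dk × Dk convolution from M to N channels costs Dk·Dk·M·N·Df·Df, while the depthwise step costs Dk·Dk·M·Df·Df and the pointwise step M·N·Df·Df. The layer sizes in the example are hypothetical.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a Dk x Dk standard conv, M -> N channels, Df x Df output."""
    return dk * dk * m * n * df * df


def depthwise_separable_cost(dk, m, n, df):
    """Depthwise (one Dk x Dk filter per input channel) + pointwise (1 x 1, M -> N)."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernel, 32 -> 64 channels, 112 x 112 feature map.
std = standard_conv_cost(3, 32, 64, 112)
sep = depthwise_separable_cost(3, 32, 64, 112)
print(std, sep)   # 231211008 29302784
print(sep / std)  # equals 1/N + 1/Dk**2, roughly 0.127 here
```

Dividing the two formulas gives a ratio of 1/N + 1/Dk², so with 3 × 3 kernels the separable version needs roughly 8 to 9 times fewer operations once N is large.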

How to load the model?

Below is the step-by-step process to follow in Google Colab so that you can easily visualize object detection. You can follow along with the code.

Install the model

Make sure you have pycocotools installed:

Get tensorflow/models or cd to the parent directory of the repository:

Build protobufs and install the object_detection package:

Import the required libraries

Import the object detection module:

Model preparation

Loader

Loading label map

The label map maps indices to category names, so that when our convolutional network predicts 5, we know that this corresponds to an airplane:
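The API builds this lookup with its own helper (`label_map_util.create_category_index_from_labelmap`). The sketch below is not that code: it is a hypothetical, simplified stand-in that parses a two-entry label map written in the same `item { ... }` text style and produces a dictionary keyed by class id, which is the kind of lookup a category index provides. Real label map files also carry a `name` field for each item, omitted here.

```python
import re

# Simplified, hypothetical label map in the .pbtxt "item { ... }" style.
LABEL_MAP_TEXT = """
item {
  id: 1
  display_name: "person"
}
item {
  id: 5
  display_name: "airplane"
}
"""


def parse_label_map(text):
    """Return {id: {"id": id, "name": display_name}} from label-map text."""
    category_index = {}
    # Each item block has no nested braces, so a non-greedy match is enough.
    for block in re.findall(r"item\s*\{(.*?)\}", text, flags=re.DOTALL):
        item_id = int(re.search(r"\bid:\s*(\d+)", block).group(1))
        name = re.search(r'display_name:\s*"([^"]*)"', block).group(1)
        category_index[item_id] = {"id": item_id, "name": name}
    return category_index


category_index = parse_label_map(LABEL_MAP_TEXT)
print(category_index[5]["name"])  # airplane
```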

For the sake of simplicity, we will test on 2 images:

Object detection model using the TensorFlow API

Load an object detection model:

Check the input signature of the model (it expects a batch of 3-channel color images of type uint8):

Add a wrapper function to call the model and clean the outputs:
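To make "clean the outputs" concrete, here is a hypothetical plain-Python version of that cleanup step. The output keys (`detection_boxes`, `detection_scores`, `detection_classes`, `num_detections`) follow the names used by the API's detection models, but the function name and the list-based input are assumptions for illustration: the real wrapper works on TensorFlow tensors returned by the model's `serving_default` signature. The sketch strips the batch dimension, keeps only the first `num_detections` entries, and casts class ids to integers (assuming they come back as floats) so they can be looked up in the label map.

```python
def clean_outputs(raw):
    """Strip the batch dimension, keep only valid detections, cast classes to int.

    `raw` mimics a batched model output: every value is a list whose first
    index is the batch (size 1 here).
    """
    num = int(raw["num_detections"][0])
    cleaned = {
        key: value[0][:num]
        for key, value in raw.items()
        if key != "num_detections"
    }
    cleaned["num_detections"] = num
    # Class ids are assumed to arrive as floats; the label map is keyed by int.
    cleaned["detection_classes"] = [int(c) for c in cleaned["detection_classes"]]
    return cleaned


# Hypothetical batched output with 2 valid detections and 1 padding slot.
raw = {
    "num_detections": [2.0],
    "detection_boxes": [[[0.1, 0.1, 0.5, 0.5], [0.2, 0.6, 0.9, 0.95], [0, 0, 0, 0]]],
    "detection_scores": [[0.92, 0.81, 0.0]],
    "detection_classes": [[1.0, 5.0, 1.0]],
}
out = clean_outputs(raw)
print(out["detection_classes"], out["num_detections"])  # [1, 5] 2
```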

Run it on each test image and display the results:

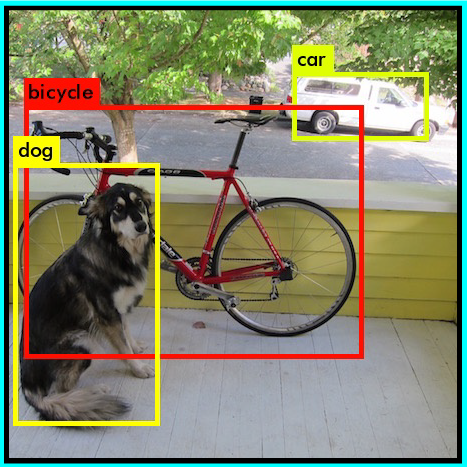

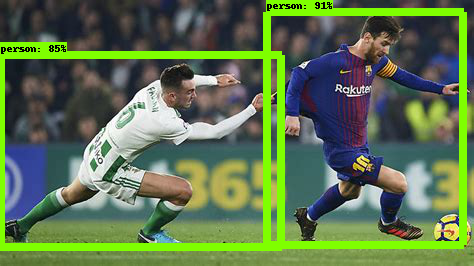

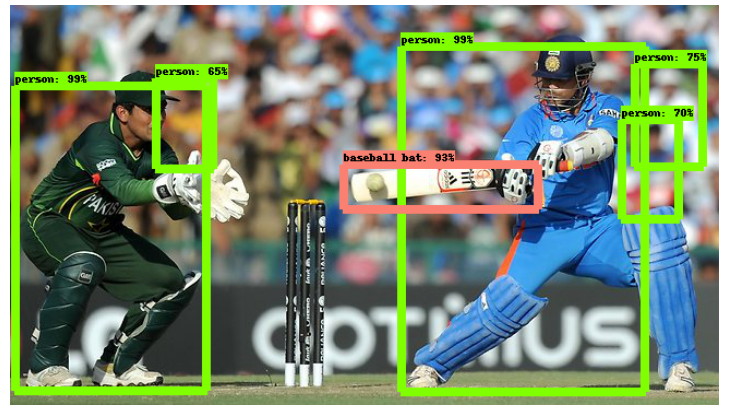

Below is the example image tested on ssd_mobilenet_v1_coco (MobileNet-SSD trained on the COCO dataset):

Inception-SSD

The architecture of the Inception-SSD model is similar to that of the previous MobileNet-SSD. The difference is that the base architecture here is the Inception model. To learn more about the Inception network, see: Understanding the Inception Network from Scratch.

How to load the model?

Just change the model name in the Detection part of the API:

Then, make the prediction by following the same steps as above. Voilà!

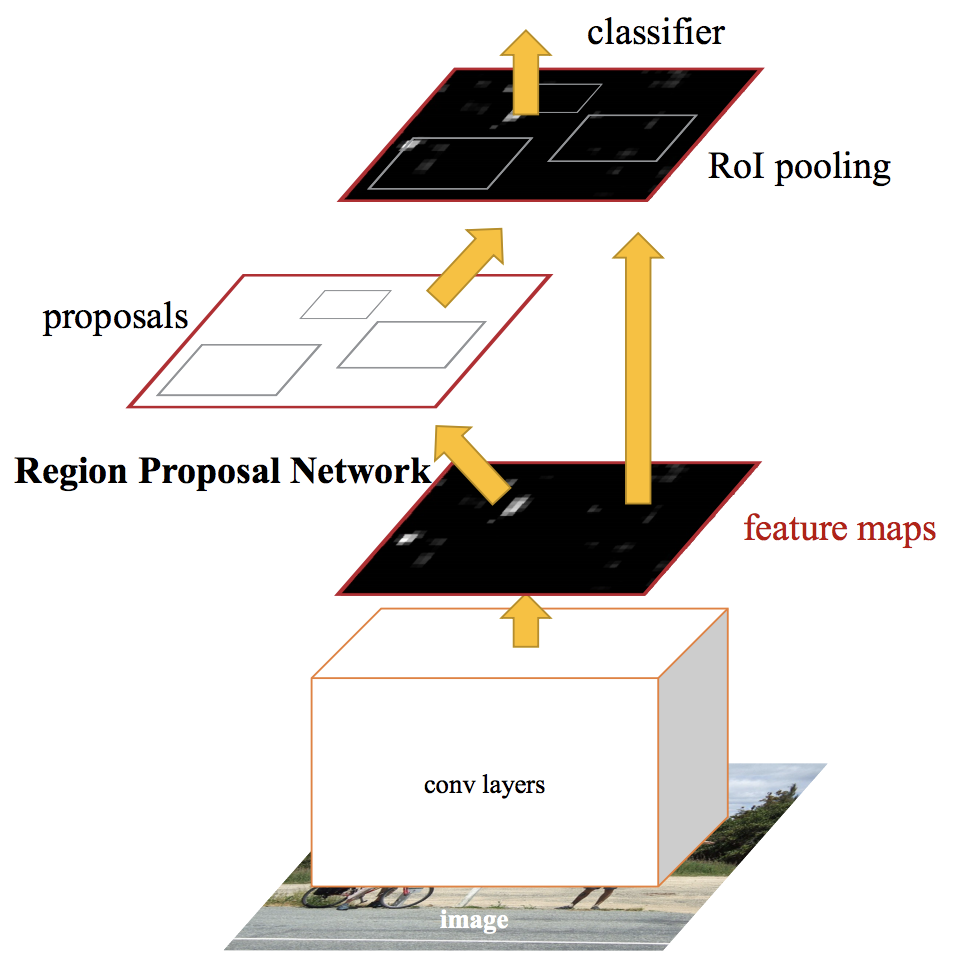

Faster RCNN

State-of-the-art object detection networks rely on region proposal algorithms to hypothesize object locations. Advances such as SPPnet and Fast R-CNN have reduced the running time of these detection networks, exposing region proposal computation as a bottleneck.

In Faster RCNN, we feed the input image to the convolutional neural network to generate a convolutional feature map. From the convolutional feature map, we identify the region proposals and warp them into squares. Then, using an RoI pooling layer (region of interest pooling layer), we reshape them into a fixed size so that they can be fed into a fully connected layer.

From the RoI feature vector, we use a softmax layer to predict the class of the proposed region and also the offset values for the bounding box.
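RoI pooling is what turns proposals of different sizes into a fixed-size input for the fully connected layers: each region is divided into a fixed grid of bins, and each bin is max-pooled. Below is a minimal single-channel sketch, assuming the proposal has already been projected onto feature-map coordinates as integer cell indices. Real implementations process all channels at once and handle the projection and fractional coordinates; the bin-splitting rule here is just one simple choice.

```python
def roi_max_pool(feature_map, roi, output_size):
    """Max-pool one region of a single-channel feature map to output_size x output_size.

    feature_map: list of rows of numbers.
    roi: (row_start, col_start, row_end, col_end) in feature-map cells, end-exclusive.
    """
    r0, c0, r1, c1 = roi
    height, width = r1 - r0, c1 - c0
    pooled = []
    for i in range(output_size):
        # Row range of bin i (at least one cell, always inside the region).
        top = r0 + (i * height) // output_size
        bottom = max(top + 1, r0 + ((i + 1) * height) // output_size)
        pooled_row = []
        for j in range(output_size):
            left = c0 + (j * width) // output_size
            right = max(left + 1, c0 + ((j + 1) * width) // output_size)
            cell = [feature_map[r][c] for r in range(top, bottom) for c in range(left, right)]
            pooled_row.append(max(cell))
        pooled.append(pooled_row)
    return pooled


# A 4 x 4 feature map holding the values 0..15, row by row.
fmap = [[r * 4 + c for c in range(4)] for r in range(4)]
print(roi_max_pool(fmap, (0, 0, 4, 4), 2))  # [[5, 7], [13, 15]]
print(roi_max_pool(fmap, (0, 0, 3, 4), 2))  # [[1, 3], [9, 11]]
```

Both the square 4 × 4 region and the 3 × 4 region come out as 2 × 2 grids, which is exactly the property the fully connected layer needs.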

To read more in depth about Faster RCNN, read this amazing article – A practical implementation of the Faster R-CNN algorithm for object detection (Part 2 – with Python codes).

How to load the model?

Just change the model name in the Detection part of the API again:

Then, make the prediction by following the same steps as before. Below is the example image when fed to a Faster RCNN model:

As you can see, this is much better than the SSD-MobileNet model. But it comes with a trade-off: it is much slower than the previous model. These are the kinds of decisions you'll need to make when choosing the right object detection model for your deep learning and computer vision project.

Which Object Detection Model Should I Choose?

Depending on your specific requirements, you can choose the right model from the TensorFlow API. If we want a high-speed model that can detect objects in a video stream at a high fps, the single shot detector (SSD) network works better. As its name suggests, the SSD network determines all bounding box probabilities in a single pass; therefore, it's a much faster model.

However, with single shot detection, we gain speed at the cost of accuracy. With Faster RCNN, we get high accuracy but low speed. So explore, and in the process you will realize how powerful this TensorFlow API can be.