This article was published as part of the Data Science Blogathon

Introduction

In Machine Learning, you cannot always count on having complete, high-quality data. As a Machine Learning practitioner, whether you are working on a real-world problem statement or building any kind of project, you need data.

Most of the time, you meet that need by getting data from an API. If the website does not provide an API, the only option left is web scraping.

In this tutorial, we will learn how to use an API to extract data and save it as a data frame, and how to load data from a SQL database into a data frame.

Table of Contents

- Getting data from an API

- What is API

- Importance of using API

- How to get an API

- Practical code to extract data from the API

- Obtaining data using SQL databases

- EndNote

Getting data from an API

What is API

API stands for Application Programming Interface. An API works as an interface that lets two pieces of software communicate with each other. Let's understand how.

Importance of using API

Consider an example: if we want to book a train ticket, we have multiple options, such as the IRCTC website, Yatra, MakeMyTrip, etc. These are all different organizations. Suppose we have reserved seat number 15 in coach B15. If someone else tries to reserve the same seat through a different platform, will it be available? No, it will show as reserved.

Although these are different companies running different software, they are able to share this information. This sharing of information between multiple websites happens through APIs, which is why APIs are important.

Each organization also provides its services on multiple operating systems, such as iOS and Android, which are all connected to a single database. Therefore, they also use APIs to serve data from that database to multiple applications.

Now let's see in practice how to get data from an API into a data frame using Python.

How to get an API?

We will use the official TMDB website, which provides different APIs to get different types of data. We are going to get top-rated movie data into our data frame. To get the data, we must pass an API key with each request.

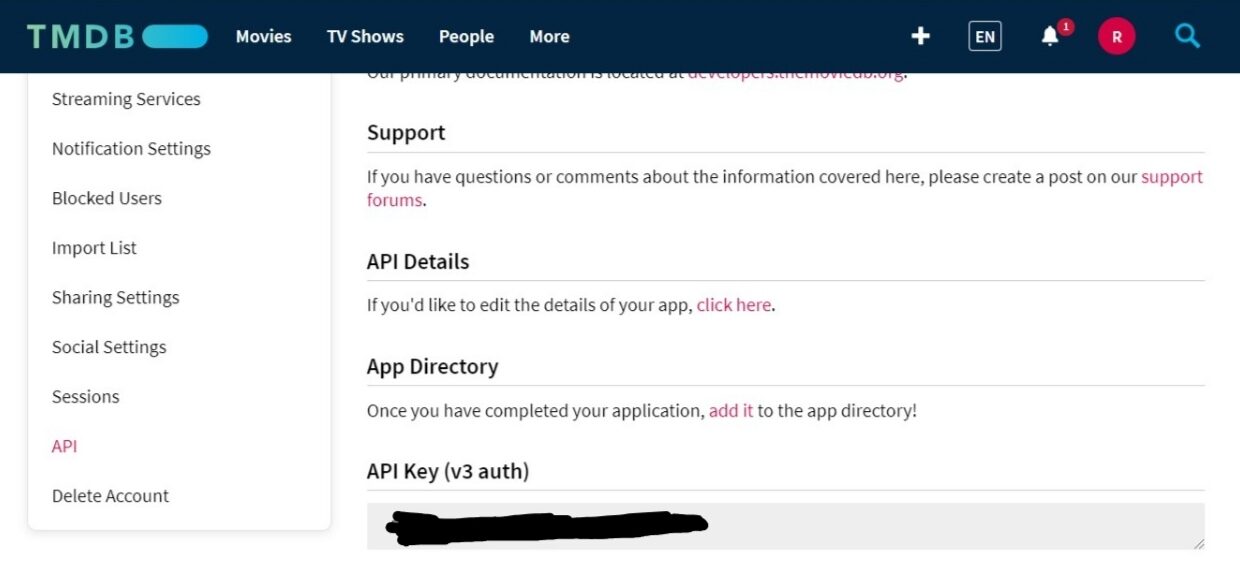

Visit the TMDB site, sign up, and log in with your Google account. Then, from your profile section, go to Settings. In the settings panel on the left, the second-to-last option is API; click on it and generate your API key.

Use API key to get top rated movie data

Now that you have your own API key, visit the TMDB API developer site, which you can reach from the API section at the top. Click Movies and then Get Top Rated. In the Get Top Rated window, go to the Try Now option; to the right of the submit request button, you will find a link to the top-rated movies.

https://api.themoviedb.org/3/movie/top_rated?api_key=<<api_key>>&language=en-US&page=1

Copy the link, replace <<api_key>> with the API key you generated, and open the link. You will see the data in JSON format.

To make sense of this data, you can use a tool such as a JSON viewer: open it and paste the response into the viewer. The response is a dictionary, and the information about the films is under the results key.
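The same structure can be explored from Python instead of a viewer. Below is a minimal sketch that parses a small, hand-written sample shaped like a TMDB top-rated page. The values are invented for illustration; only the top-level key names (page, results, total_pages, total_results) are assumed to mirror what the endpoint returns.

```python
import json

# Hypothetical, trimmed-down sample shaped like one TMDB "top rated" page.
# All values are invented for illustration only.
sample_text = """
{
  "page": 1,
  "results": [
    {"id": 1, "title": "Movie A", "vote_average": 8.7, "vote_count": 100},
    {"id": 2, "title": "Movie B", "vote_average": 8.5, "vote_count": 250}
  ],
  "total_pages": 428,
  "total_results": 8551
}
"""

payload = json.loads(sample_text)       # the JSON body becomes a Python dict
print(sorted(payload.keys()))           # top-level keys of the dictionary
movies = payload["results"]             # list of dicts, one per movie
print(len(movies), movies[0]["title"])  # movies on this page, first title
```

With a real response, `response.json()` returns the same kind of dictionary, so `response.json()["results"]` gives the list of movies.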

At the time of writing, the data spans 428 pages with 8551 movies in total. Therefore, we have to create a data frame with 8551 rows, and the fields we will extract are id, title, release date, overview (general description), popularity, vote average, and vote count. The resulting data frame will have the shape 8551 × 7.
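The page count follows from the page size: each page returns 20 movies, so 8551 results need ceil(8551 / 20) = 428 pages, and the last page holds only the remaining 11 movies. A quick check of that arithmetic, using the totals quoted above (they will change as TMDB's catalogue grows):

```python
import math

total_results = 8551  # total top-rated movies reported at the time of writing
page_size = 20        # movies returned per page
fields = ["id", "title", "release_date", "overview",
          "popularity", "vote_average", "vote_count"]

total_pages = math.ceil(total_results / page_size)              # 428 pages
last_page_size = total_results - (total_pages - 1) * page_size  # 8551 - 427*20
expected_shape = (total_results, len(fields))                   # rows x columns
print(total_pages, last_page_size, expected_shape)  # 428 11 (8551, 7)
```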

Practical code to get data from the API

Open a Jupyter Notebook to write the code and extract the data into a data frame. If you don't have the pandas and requests libraries, install them with pip:

pip install pandas
pip install requests

Now define your API key in the link, make a request to the TMDB website to extract the data, and save the response in a variable.

import requests

api_key = "YOUR_API_KEY"  # replace with the API key you generated on TMDB
link = "https://api.themoviedb.org/3/movie/top_rated?api_key={}&language=en-US&page=1".format(api_key)
response = requests.get(link)

Don't forget to put your API key in the link. After running the code above, if you print the response, you will see status code 200, which means the request succeeded and you got the data in JSON form.
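Pasting the key into the string by hand is error-prone. A minimal sketch, using only the standard library, of building the same URL from a base address and a dictionary of query parameters. `build_top_rated_url` is a hypothetical helper name and `"YOUR_API_KEY"` is a placeholder.

```python
from urllib.parse import urlencode

BASE_URL = "https://api.themoviedb.org/3/movie/top_rated"


def build_top_rated_url(api_key: str, page: int, language: str = "en-US") -> str:
    """Hypothetical helper: build the top-rated URL for a single page."""
    # urlencode escapes each value and joins the pairs with "&"
    query = urlencode({"api_key": api_key, "language": language, "page": page})
    return f"{BASE_URL}?{query}"


url = build_top_rated_url("YOUR_API_KEY", page=1)
print(url)
```

requests can do this encoding for you if you pass the dictionary as `requests.get(BASE_URL, params={...})`. It is also worth calling `response.raise_for_status()`, which raises an exception for a 4xx or 5xx status instead of letting the code continue with an error response.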

The data we want is under the results key, so try printing it:

response.json()["results"]

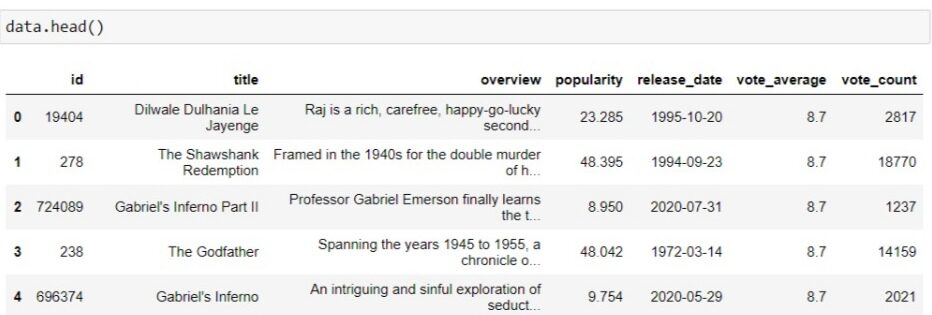

To create a data frame with the required columns, we can use a pandas DataFrame. This gives us the 20 top-rated movies on page 1.

import pandas as pd

data = pd.DataFrame(response.json()["results"])[['id','title','overview','popularity','release_date','vote_average','vote_count']]
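To see what the column selection does, here is a small sketch on invented records shaped like entries of `response.json()["results"]`. Real entries carry more keys than the seven we keep; the extra `adult` key below stands in for those. The `reindex` variant is an alternative, not what the article uses.

```python
import pandas as pd

# Invented records shaped like entries of response.json()["results"];
# the extra "adult" key stands in for fields we do not need.
results = [
    {"id": 1, "title": "Movie A", "overview": "Sample overview text.",
     "popularity": 50.1, "release_date": "2000-01-01",
     "vote_average": 8.7, "vote_count": 100, "adult": False},
    {"id": 2, "title": "Movie B", "overview": "Sample overview text.",
     "popularity": 40.3, "release_date": "2001-01-01",
     "vote_average": 8.5, "vote_count": 250, "adult": False},
]

columns = ["id", "title", "overview", "popularity",
           "release_date", "vote_average", "vote_count"]

# Indexing with a list keeps only these columns, in this order;
# it raises KeyError if any of them is missing from the data.
data = pd.DataFrame(results)[columns]

# reindex(columns=...) is more forgiving: a missing column becomes NaN.
data_safe = pd.DataFrame(results).reindex(columns=columns)

print(data.shape)  # (2, 7)
```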

We want the data from all 428 pages, so we put the code in a for loop that requests a different page on each iteration. Each request returns up to 20 rows and seven columns.

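import pandas as pd
import requests

columns = ['id','title','overview','popularity','release_date','vote_average','vote_count']
frames = []  # one data frame per page

for i in range(1, 429):
    # Request page i; api_key holds the key you generated
    response = requests.get("https://api.themoviedb.org/3/movie/top_rated?api_key={}&language=en-US&page={}".format(api_key, i))
    temp_df = pd.DataFrame(response.json()["results"])[columns]
    frames.append(temp_df)

# DataFrame.append was removed in pandas 2.0, so concatenate all pages at once
data = pd.concat(frames, ignore_index=True)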

This gives us the complete data frame with 8551 rows. We format the page number into the link so that each request fetches a different page; make sure your API key is in the link as well. The loop takes at least 2 minutes to run. The data frame we got looks like this.
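Hard-coding 428 ties the loop to the page count at the time of writing. A minimal sketch of an alternative that reads total_pages from the first response and stops there. `fetch_all_results` and `fetch_page` are hypothetical names: `fetch_page` stands in for a function that calls `requests.get(...).json()` for a given page. The fake fetcher below lets the sketch run without network access or an API key.

```python
from typing import Callable, Dict, List


def fetch_all_results(fetch_page: Callable[[int], Dict]) -> List[Dict]:
    """Hypothetical helper: collect "results" from every page,
    using total_pages from page 1 instead of a hard-coded count."""
    first = fetch_page(1)
    rows = list(first["results"])
    for page in range(2, first["total_pages"] + 1):
        rows.extend(fetch_page(page)["results"])
    return rows


# Fake fetcher for illustration: 3 pages holding 20, 20 and 11 movies.
def fake_fetch_page(page: int) -> Dict:
    sizes = {1: 20, 2: 20, 3: 11}
    start = sum(sizes[p] for p in range(1, page))  # ids continue across pages
    results = [{"id": start + i} for i in range(sizes[page])]
    return {"page": page, "results": results,
            "total_pages": 3, "total_results": 51}


rows = fetch_all_results(fake_fetch_page)
print(len(rows))  # 51
```

With the real API, the resulting list can go straight into `pd.DataFrame(rows)[columns]`.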

Save the data to a CSV file so that you can analyze and process it and build a project on it.
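A minimal sketch of the save step and a reload to check it. The tiny data frame and the file name `top_rated_movies.csv` are placeholders, and a temporary directory is used only so the example runs anywhere. In the notebook, `data.to_csv("top_rated_movies.csv", index=False)` is enough.

```python
import os
import tempfile

import pandas as pd

# Tiny stand-in for the collected data frame (invented values)
data = pd.DataFrame({"id": [1, 2],
                     "title": ["Movie A", "Movie B"],
                     "vote_average": [8.7, 8.5]})

path = os.path.join(tempfile.mkdtemp(), "top_rated_movies.csv")  # placeholder name
data.to_csv(path, index=False)  # index=False: don't write the row index as a column

reloaded = pd.read_csv(path)
print(reloaded.shape)  # (2, 3)
```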

Get data from a SQL database

Working with SQL databases is easy in Python. Python provides various libraries to connect to a database, run SQL queries, and extract data from a SQL table into a pandas DataFrame.

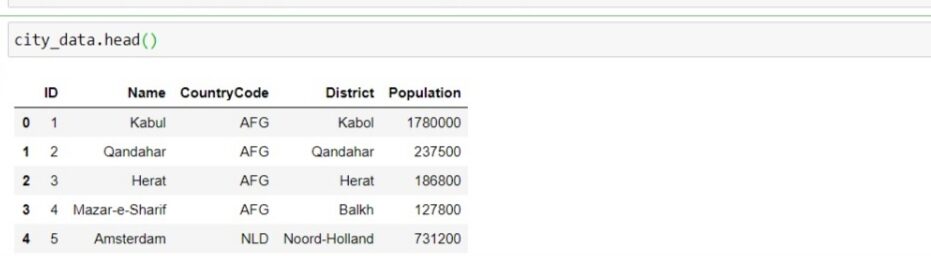

For demonstration purposes, we are using a dataset of the world's cities and districts with their populations, uploaded to Kaggle as a SQL query file. You can access the dataset from here.

Download the file and import it into your local database. You can use MySQL (for example, through XAMPP), SQLite, or any database of your choice. Most database tools offer an import option: click on it, select the downloaded file, and upload it.
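If you choose SQLite, the import can also be done from Python with the standard library's `sqlite3` module. A minimal sketch, assuming the dump uses SQLite-compatible syntax (MySQL-specific clauses such as `ENGINE=...` would need editing first). The inline SQL is an invented stand-in for the downloaded file, and its table and column names are assumptions.

```python
import sqlite3

# Invented stand-in for the contents of the downloaded .sql file;
# in practice you might read it with open("path/to/file.sql").read()
sql_dump = """
CREATE TABLE city (ID INTEGER PRIMARY KEY, Name TEXT, CountryCode TEXT,
                   District TEXT, Population INTEGER);
INSERT INTO city VALUES (1, 'City A', 'AAA', 'District A', 1000000);
INSERT INTO city VALUES (2, 'City B', 'BBB', 'District B', 250000);
"""

# ":memory:" keeps the demo self-contained; pass a file path to persist the data
conn = sqlite3.connect(":memory:")
conn.executescript(sql_dump)  # runs every statement in the script

count = conn.execute("SELECT COUNT(*) FROM city").fetchone()[0]
print(count)  # 2
```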

Now we are ready to connect Python to the database and extract the SQL data into a pandas DataFrame. To make a connection, install the MySQL connector library.

!pip install mysql-connector-python

After installing it, import the required libraries and connect to the database using the connect method.

import numpy as np
import pandas as pd
import mysql.connector

conn = mysql.connector.connect(host="localhost", user="root", password="", database="World")

Once the connection succeeds, we can query the database and extract data into a data frame.

city_data = pd.read_sql_query("SELECT * FROM city", conn)
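`pd.read_sql_query` also accepts a standard-library `sqlite3` connection, so the same call can be tried without a MySQL server. A sketch with an in-memory SQLite database and an invented `city` table; with MySQL you would keep the `conn` created above.

```python
import sqlite3

import pandas as pd

# In-memory database with an invented city table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE city (ID INTEGER, Name TEXT, Population INTEGER)")
conn.executemany(
    "INSERT INTO city VALUES (?, ?, ?)",
    [(1, "City A", 1000000), (2, "City B", 250000), (3, "City C", 50000)],
)

# Same call as above: each table row becomes a data frame row
city_data = pd.read_sql_query("SELECT * FROM city", conn)
print(city_data.shape)  # (3, 3)
conn.close()
```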

We have now extracted the data into a data frame, which shows how easy it is to work with databases in Python. You can also filter the data directly in the SQL query.
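A minimal sketch of filtering in SQL before the data reaches pandas, again on an invented in-memory SQLite table. The threshold is passed through `params` rather than pasted into the query string. The placeholder style depends on the driver: `sqlite3` uses `?`, while `mysql.connector` uses `%s`.

```python
import sqlite3

import pandas as pd

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE city (ID INTEGER, Name TEXT, Population INTEGER)")
conn.executemany(
    "INSERT INTO city VALUES (?, ?, ?)",
    [(1, "City A", 1000000), (2, "City B", 250000), (3, "City C", 50000)],
)

# Filter and sort in SQL; the threshold is bound as a query parameter
query = ("SELECT Name, Population FROM city "
         "WHERE Population > ? ORDER BY Population DESC")
big_cities = pd.read_sql_query(query, conn, params=(100000,))
print(big_cities["Name"].tolist())  # ['City A', 'City B']
conn.close()
```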

EndNote

I hope this article helped you learn how to extract data from different sources. Data scientists often use APIs to collect data from large datasets for better analysis and improved model performance.

As a beginner, you are usually handed a ready-made data file, but that is not always the case. Often you need to gather data from different sources, which will be noisy, and work on it to make better business decisions.

The media shown in this article is not the property of DataPeaker and is used at the author's discretion.