Introduction

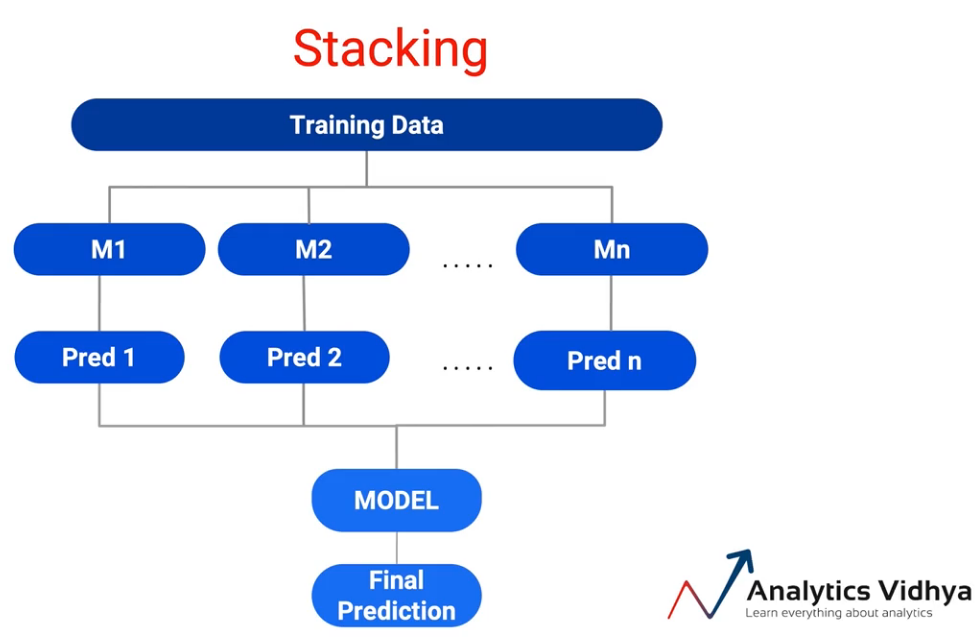

Stacking is an ensemble learning technique that uses the predictions from multiple models (for instance, kNN, decision trees, or SVM) to build a new model. This final model is then used to make predictions on the test data set.

So, what we do in stacking is take the training data and run it through multiple models, M1 to Mn. These models are typically known as base learners or base models, and we generate predictions from each of them.

Pred 1 to Pred n are those predictions. Instead of taking a majority vote or an average, we send them as inputs to another model, which gives us the final prediction. Depending on whether it is a regression problem or a classification problem, I can choose the appropriate model for this final step. The stacking concept is very interesting and opens up many possibilities.

But stacking in this way carries a serious risk of overfitting the model, because I am using all my training data both to fit the base models and to generate the predictions that the final model is trained on.
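Here is a minimal sketch of this naive form of stacking, assuming scikit-learn and a synthetic binary classification data set. The two base models, their settings, and all variable names are illustrative choices, not part of the original walkthrough. Note that the base models predict on the same rows they were fitted on, which is exactly the step that invites overfitting.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic data stands in for a real training and test set.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Base learners M1..Mn (here n = 2; the choice of models is illustrative).
base_models = [
    DecisionTreeClassifier(max_depth=3, random_state=0),
    KNeighborsClassifier(n_neighbors=5),
]

# Pred 1..Pred n: each base model's predicted probability of class 1.
train_columns, test_columns = [], []
for model in base_models:
    model.fit(X_train, y_train)
    # Naive step: predicting on the very rows the model was fitted on.
    train_columns.append(model.predict_proba(X_train)[:, 1])
    test_columns.append(model.predict_proba(X_test)[:, 1])

meta_train = np.column_stack(train_columns)
meta_test = np.column_stack(test_columns)

# The final model takes the base predictions as its input features.
final_model = LogisticRegression().fit(meta_train, y_train)
final_predictions = final_model.predict(meta_test)
```

With an unconstrained decision tree, the training-set predictions in `meta_train` would be nearly perfect, so the final model would learn to trust them far more than they deserve on unseen data.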

So the question is: can I be smarter about how I use the training and test data, to reduce the danger of overfitting? That is what we will discuss in this article. I am going to cover one of the most popular ways stacking is used.

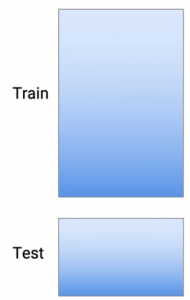

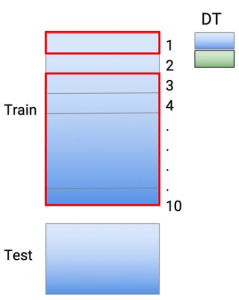

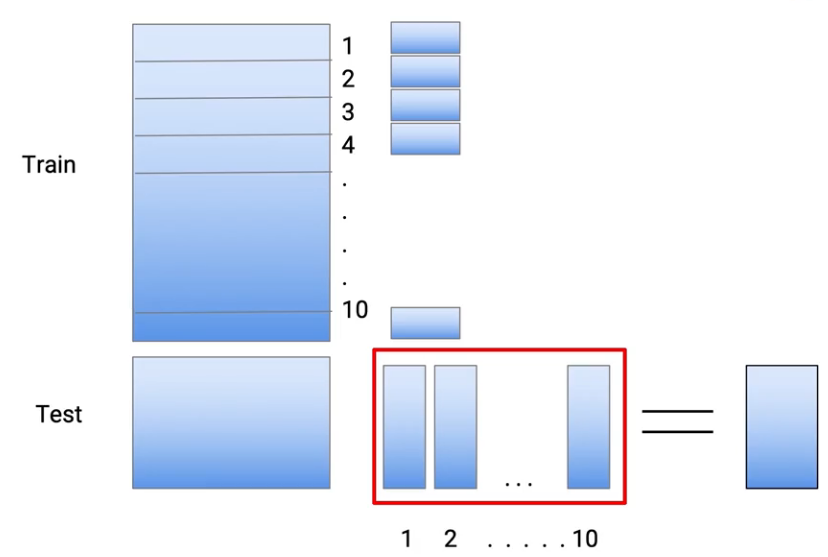

Let's say we have these training and test data sets:

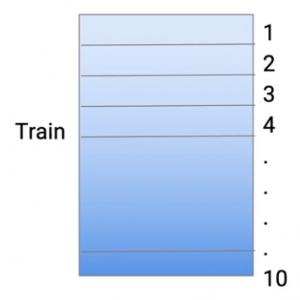

To reduce overfitting, I take my training data and divide it randomly into 10 parts. In other words, I turn the entire training set into 10 smaller data sets.
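The random split can be sketched with NumPy alone. The row count of 95 is a hypothetical value chosen to show that the parts do not need to be exactly equal in size.

```python
import numpy as np

n_rows = 95    # hypothetical number of training rows
n_parts = 10   # number of parts, as in the walkthrough

rng = np.random.default_rng(seed=0)
shuffled_rows = rng.permutation(n_rows)          # row indices in random order
parts = np.array_split(shuffled_rows, n_parts)   # 10 nearly equal parts

part_sizes = [len(part) for part in parts]
```

Every row index lands in exactly one part, and the part sizes differ by at most one row.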

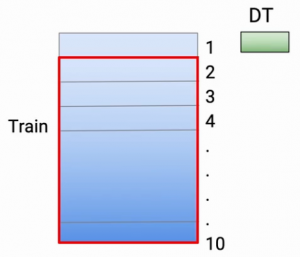

Now, to reduce overfitting, I train my model on 9 of these 10 parts and make my predictions on the tenth. In this particular case, I train on parts 2 through 10. Let's say I'm using a decision tree as my modeling technique: I train the model and make predictions for the cases in part 1.

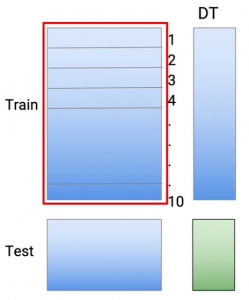

So part 1 now holds predictions. The green color represents the predictions I made for the data points in part 1. I repeat the same exercise for each of the other parts. For part 2, I retrain my model using part 1 and parts 3 through 10, and I make my predictions on part 2.

In this way, I make predictions for all 10 parts. In summary, each prediction came from a model that had not seen that data point during training. To get predictions for the test data set, I use all the training data: I train the model once more on the entire training set and make predictions on the test set.

If you think about it, we create 10 models to get the predictions on the training data and an eleventh model to get predictions on the test data, and all of them are decision tree models. This gives me one set of predictions, the equivalent of the predictions that came from model M1.
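The ten-plus-one procedure for a single base model might look like the sketch below, assuming scikit-learn, a synthetic data set, and a shallow decision tree. The variable names and hyperparameters are illustrative.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic data stands in for a real training and test set.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Random split of the training rows into 10 parts.
folds = KFold(n_splits=10, shuffle=True, random_state=0)

oof_pred = np.zeros(len(X_train))  # one prediction per training row
fold_models = []

for fit_idx, holdout_idx in folds.split(X_train):
    # Train on 9 parts, predict the part that was held out.
    tree = DecisionTreeClassifier(max_depth=3, random_state=0)
    tree.fit(X_train[fit_idx], y_train[fit_idx])
    oof_pred[holdout_idx] = tree.predict_proba(X_train[holdout_idx])[:, 1]
    fold_models.append(tree)

# Eleventh model: trained on all training rows, used only for the test set.
full_tree = DecisionTreeClassifier(max_depth=3, random_state=0)
full_tree.fit(X_train, y_train)
test_pred = full_tree.predict_proba(X_test)[:, 1]
```

`oof_pred` plays the role of the green column for the training data, and `test_pred` is the matching column for the test data.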

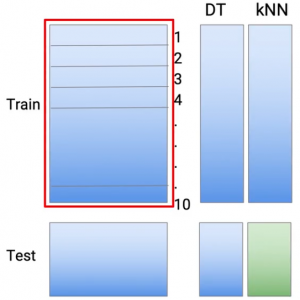

I do the same with a second modeling technique, let's say kNN. Again, I make predictions part by part, from part 1 to part 10, and to get predictions on the test data set I train an eleventh kNN model on the full training data.

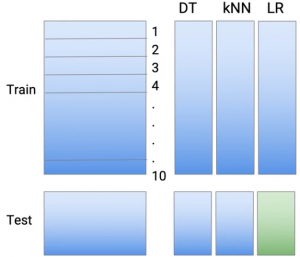

I do the same with a third modeling technique, which could be linear or logistic regression, depending on the type of problem you are handling.

So these are, in a sense, my new base learners. I now have predictions from three different modeling techniques, and I have reduced the danger of overfitting.
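Repeating the procedure for three base learners can be sketched more compactly with scikit-learn's `cross_val_predict`, which returns one held-out prediction per training row. The data set, model settings, and names such as `new_train` are assumptions made for illustration.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic data stands in for a real training and test set.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

folds = KFold(n_splits=10, shuffle=True, random_state=0)

base_models = {
    "tree": DecisionTreeClassifier(max_depth=3, random_state=0),
    "knn": KNeighborsClassifier(n_neighbors=5),
    "logreg": LogisticRegression(max_iter=1000),
}

train_columns, test_columns = [], []
for name, model in base_models.items():
    # Ten fold models: each training row is predicted by a model
    # that did not see that row during fitting.
    oof = cross_val_predict(
        model, X_train, y_train, cv=folds, method="predict_proba"
    )
    train_columns.append(oof[:, 1])

    # Eleventh model: fitted on all training rows, used for the test set.
    full_model = clone(model).fit(X_train, y_train)
    test_columns.append(full_model.predict_proba(X_test)[:, 1])

new_train = np.column_stack(train_columns)  # one column per base learner
new_test = np.column_stack(test_columns)
```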

Now you might ask: why am I using 10? What is sacrosanct about the number 10? The answer is that there is nothing sacrosanct about it. With only two or three parts, I don't get much benefit, and with more than, say, 15 or 20, the number of computations grows. So it is just a trade-off between reducing overfitting and not increasing complexity too much. You can also go with 7 or 8; there is nothing that ties you to 10.

So feel free to choose your own number. It could be seven, it could be eight; I usually see people use somewhere between five and 11 or 12, depending on the situation. You will see this again and again: there are guidelines, but at the end of the day you must decide based on how many resources you have, how much complexity is involved, what your production guidelines are, and what you can afford in production.

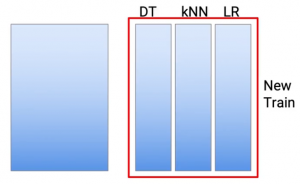

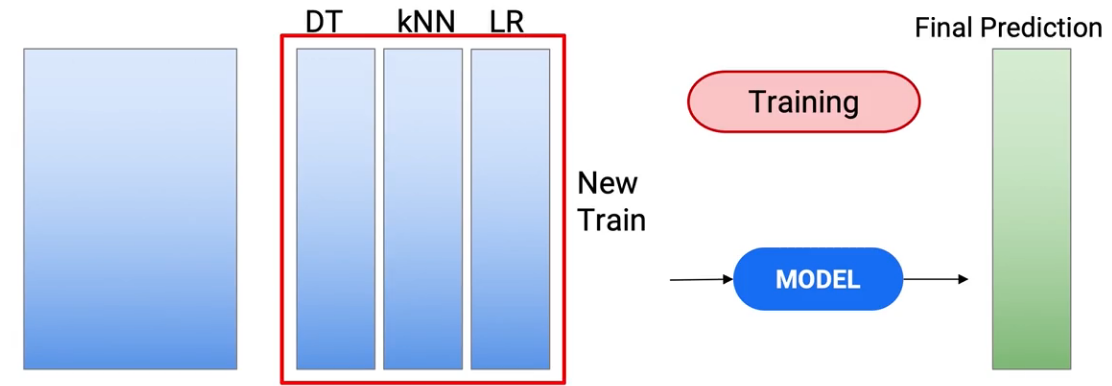

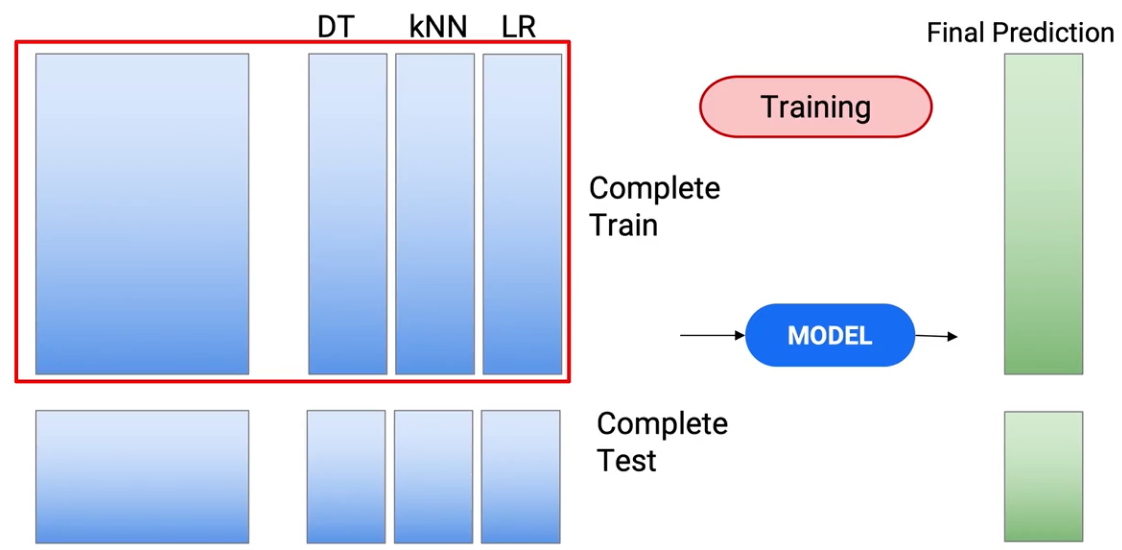

So I've taken 10 as an example, but you can use any other number. Returning to stacking: we had these predictions from three different types of models, and they become my new training data set.

The predictions I made on my test set become my new test data set. Now I build a model on these new training and test data sets to arrive at my final predictions.

That is, I train the final model on the new training set and apply it to the new test set to get my final test predictions.
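scikit-learn packages a very similar procedure in `StackingClassifier`: the final estimator is trained on cross-validated predictions of the base estimators, and the base estimators are refitted on the full training data for use at prediction time. The sketch below assumes a synthetic data set and illustrative model settings. One difference from the walkthrough is that an integer `cv` uses stratified folds for classifiers rather than a plain random split.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic data stands in for a real training and test set.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

stack = StackingClassifier(
    estimators=[
        ("tree", DecisionTreeClassifier(max_depth=3, random_state=0)),
        ("knn", KNeighborsClassifier(n_neighbors=5)),
        ("logreg", LogisticRegression(max_iter=1000)),
    ],
    final_estimator=LogisticRegression(),  # the model built on the new data set
    cv=10,                                 # number of parts
)

stack.fit(X_train, y_train)
final_predictions = stack.predict(X_test)
test_accuracy = stack.score(X_test, y_test)
```

For regression problems, `StackingRegressor` offers the same interface.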

This is the most popular variant of stacking used in the industry. Let's look at some more variations that can be used:

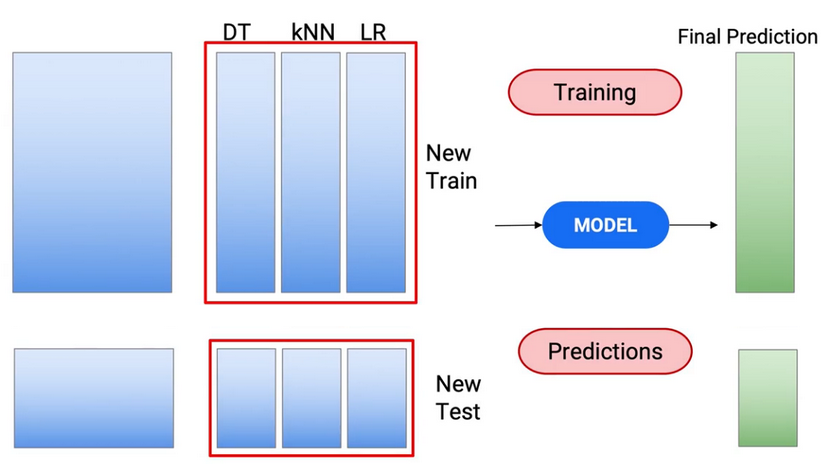

1. Use the original features together with the new predictions.

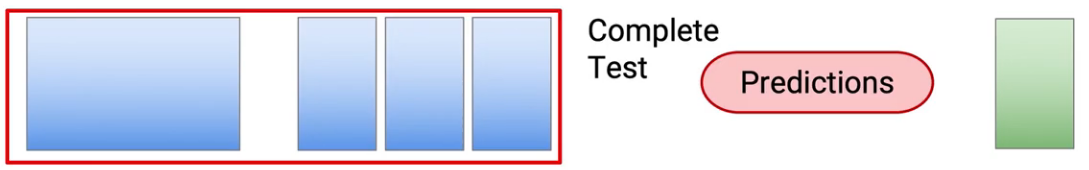

So far, if you think about it, we have only used the new predictions as features of our final model. What I can also do is include the original features along with the new ones. Instead of using only this red box to train and test, I can train my model on the full set of features: the ones that were there originally plus the predictions that came out of the base models. So I am widening my training data set to include more features.

I do the same with the test set, and this gives me a new set of predictions.

So that is another way stacking is implemented.
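A minimal sketch of this variant, assuming scikit-learn and a synthetic data set with 8 original features: the held-out predictions are appended as extra columns next to the original features before the final model is trained. Names such as `wide_train` are illustrative.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic data stands in for a real training and test set.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

folds = KFold(n_splits=10, shuffle=True, random_state=0)
base_models = [
    DecisionTreeClassifier(max_depth=3, random_state=0),
    KNeighborsClassifier(n_neighbors=5),
    LogisticRegression(max_iter=1000),
]

train_columns, test_columns = [], []
for model in base_models:
    oof = cross_val_predict(
        model, X_train, y_train, cv=folds, method="predict_proba"
    )
    train_columns.append(oof[:, 1])
    full_model = clone(model).fit(X_train, y_train)
    test_columns.append(full_model.predict_proba(X_test)[:, 1])

# Original features first, then one prediction column per base model.
wide_train = np.hstack([X_train, np.column_stack(train_columns)])
wide_test = np.hstack([X_test, np.column_stack(test_columns)])

final_model = LogisticRegression(max_iter=1000).fit(wide_train, y_train)
final_predictions = final_model.predict(wide_test)
```

`StackingClassifier` exposes the same idea through its `passthrough=True` option.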

2. Generate multiple predictions on the test set and aggregate them

The second way to implement stacking is to make multiple predictions on the test data set and aggregate them. If you remember what we did, we created predictions for each of the 10 parts of the training data, and we used one model trained on the full training set to create the predictions for the test data set. What you can do instead is predict on the test set with each of the 10 models that were created, and then aggregate those predictions rather than using the full model.

In other words, the same models I used to make the predictions for parts 1, 2, and so on are also used to create predictions for the test set. I then average them to get the test predictions that I will feed to the final model.
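For one base model, this variant might be sketched as follows, assuming scikit-learn, a synthetic data set, and a shallow decision tree. No eleventh model is trained: each of the 10 fold models predicts on the whole test set and the results are averaged.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic data stands in for a real training and test set.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

n_parts = 10
folds = KFold(n_splits=n_parts, shuffle=True, random_state=0)

oof_pred = np.zeros(len(X_train))
test_pred_sum = np.zeros(len(X_test))

for fit_idx, holdout_idx in folds.split(X_train):
    tree = DecisionTreeClassifier(max_depth=3, random_state=0)
    tree.fit(X_train[fit_idx], y_train[fit_idx])
    # Held-out predictions for the training rows, as before.
    oof_pred[holdout_idx] = tree.predict_proba(X_train[holdout_idx])[:, 1]
    # The same fold model also predicts on the full test set.
    test_pred_sum += tree.predict_proba(X_test)[:, 1]

# Average of the 10 fold models replaces the single full-data model.
avg_test_pred = test_pred_sum / n_parts
```

This saves one model fit per base learner, at the cost of each test prediction coming from models that saw only 9 of the 10 parts.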

Again, as I said, these are all different ways to implement stacking and ensembling. You have complete freedom to be creative and find new ways to reduce overfitting. The overall objectives are to ensure that:

- Our accuracy increases

- Complexity remains as low as possible

- And we avoid overfitting

As long as what you do achieves these three goals, it is a valid strategy, right?

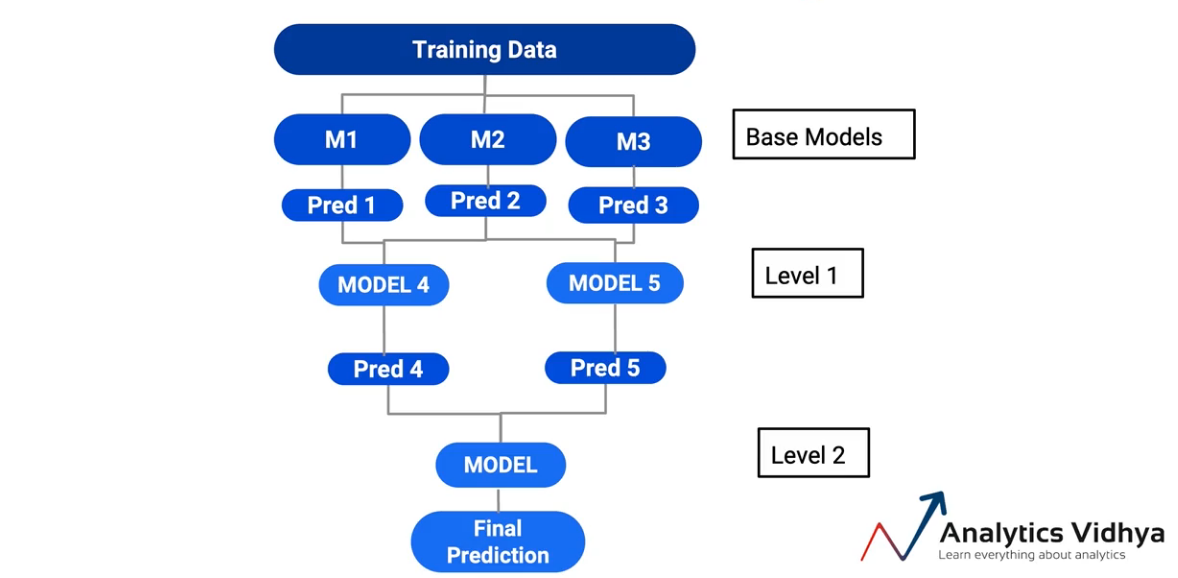

3. Increase the number of levels of stacked models.

The third stacking variant is where, instead of placing a single model on top of all the predictions, I create layers of models. For instance, in this particular case, I took the predictions from M1 and M2 and passed them to another model, M4. Similarly, I took the predictions from M2 and M3 and fed them to M5. The final model was then built on the predictions of M4 and M5. So I ended up creating two levels of models on top of my base models. Again, this is a valid way to stack, and depending on the situation you can choose any of these.
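The layered arrangement can be sketched as below, assuming scikit-learn and a synthetic data set. The helper `oof_and_test` is hypothetical, and so is the choice of model for each of M1 to M5. Using held-out predictions at the second level as well is an assumption made here to stay consistent with the earlier procedure; the walkthrough does not specify it.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Assumption: synthetic data stands in for a real training and test set.
X, y = make_classification(n_samples=400, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)
folds = KFold(n_splits=10, shuffle=True, random_state=0)


def oof_and_test(model, X_tr, y_tr, X_te):
    """Hypothetical helper: held-out train predictions plus test
    predictions from a copy of the model fitted on all of X_tr."""
    oof = cross_val_predict(model, X_tr, y_tr, cv=folds, method="predict_proba")
    full_model = clone(model).fit(X_tr, y_tr)
    return oof[:, 1], full_model.predict_proba(X_te)[:, 1]


# Base level: M1, M2, M3 see the original features.
p1, t1 = oof_and_test(
    DecisionTreeClassifier(max_depth=3, random_state=0), X_train, y_train, X_test
)
p2, t2 = oof_and_test(KNeighborsClassifier(n_neighbors=5), X_train, y_train, X_test)
p3, t3 = oof_and_test(LogisticRegression(max_iter=1000), X_train, y_train, X_test)

# Next level: M4 sees M1 and M2; M5 sees M2 and M3.
p4, t4 = oof_and_test(
    LogisticRegression(),
    np.column_stack([p1, p2]), y_train, np.column_stack([t1, t2]),
)
p5, t5 = oof_and_test(
    DecisionTreeClassifier(max_depth=2, random_state=0),
    np.column_stack([p2, p3]), y_train, np.column_stack([t2, t3]),
)

# Final model: built on the predictions of M4 and M5.
final_model = LogisticRegression().fit(np.column_stack([p4, p5]), y_train)
final_predictions = final_model.predict(np.column_stack([t4, t5]))
```

Each extra level multiplies the number of model fits, so this variant trades additional complexity for whatever accuracy it gains.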

So these were the stacking variants. As I said, any of them is fine as long as you make sure all three ensembling requirements are met: you don't overfit your models, you keep the models as simple as possible, and you increase your accuracy. You can be as creative as you like with stacking or any other ensemble modeling, provided you keep these three points in mind.

Final notes

I've covered a few variants of stacking, so feel free to use them. Within those three constraints, any variation you can think of would be a valid one.

If you have any questions, let me know in the comments section!