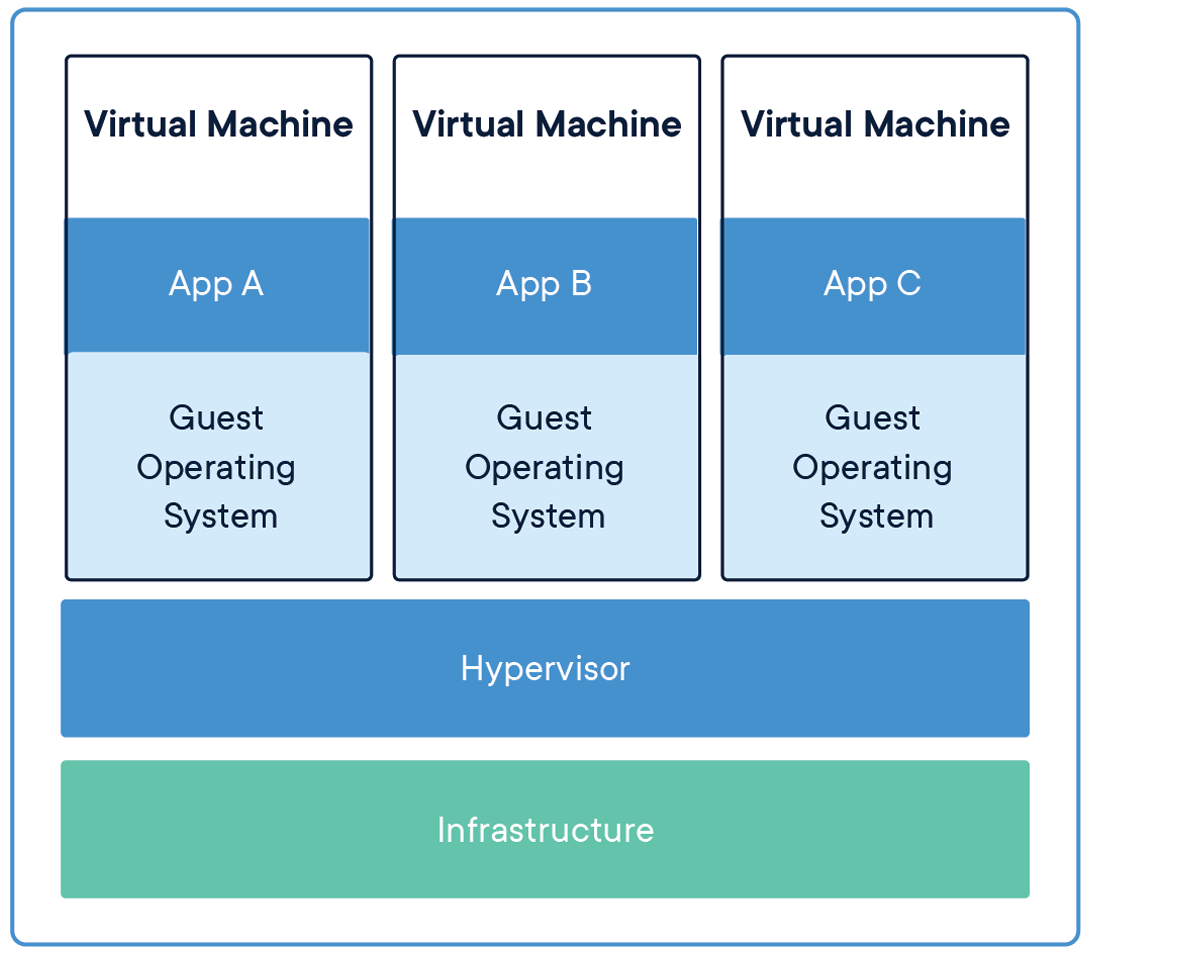

Years ago, virtual machines (VMs) were the main tool for hosting an application, because a VM encapsulates the code and configuration files along with the dependencies needed to run it, and provides the same functionality as a physical system.

To run multiple applications, we have to start multiple virtual machines, and to manage a set of virtual machines we need a hypervisor.

Source: Application sharing infrastructure

Moving from virtual machines to containers

The limitation of this approach is inefficiency: each virtual machine runs its own full guest operating system, so running multiple applications replicates the operating system many times over. This consumes a large amount of resources, and as the number of running applications grows, we need ever more capacity to allocate to them.

Another downside appears when we share our application with others: most of the time it does not run on their machines because of dependency issues, and all we can say is "it works on my laptop". For others to run the application, they must recreate on their own host the environment it originally ran in, which means a lot of manual configuration and component installation.

The solution to these limitations is a technology called Containers.

Building a machine learning model in a Jupyter Notebook is not the end point for any POC or draft; to solve real-life problems in real time, we need to bring it into production.

The first step is to package our application so that we can run it on any cloud platform and take advantage of managed services, autoscaling, reliability and more.

To package our application we need a tool like Docker. So let's get to work and see the magic.

Containers

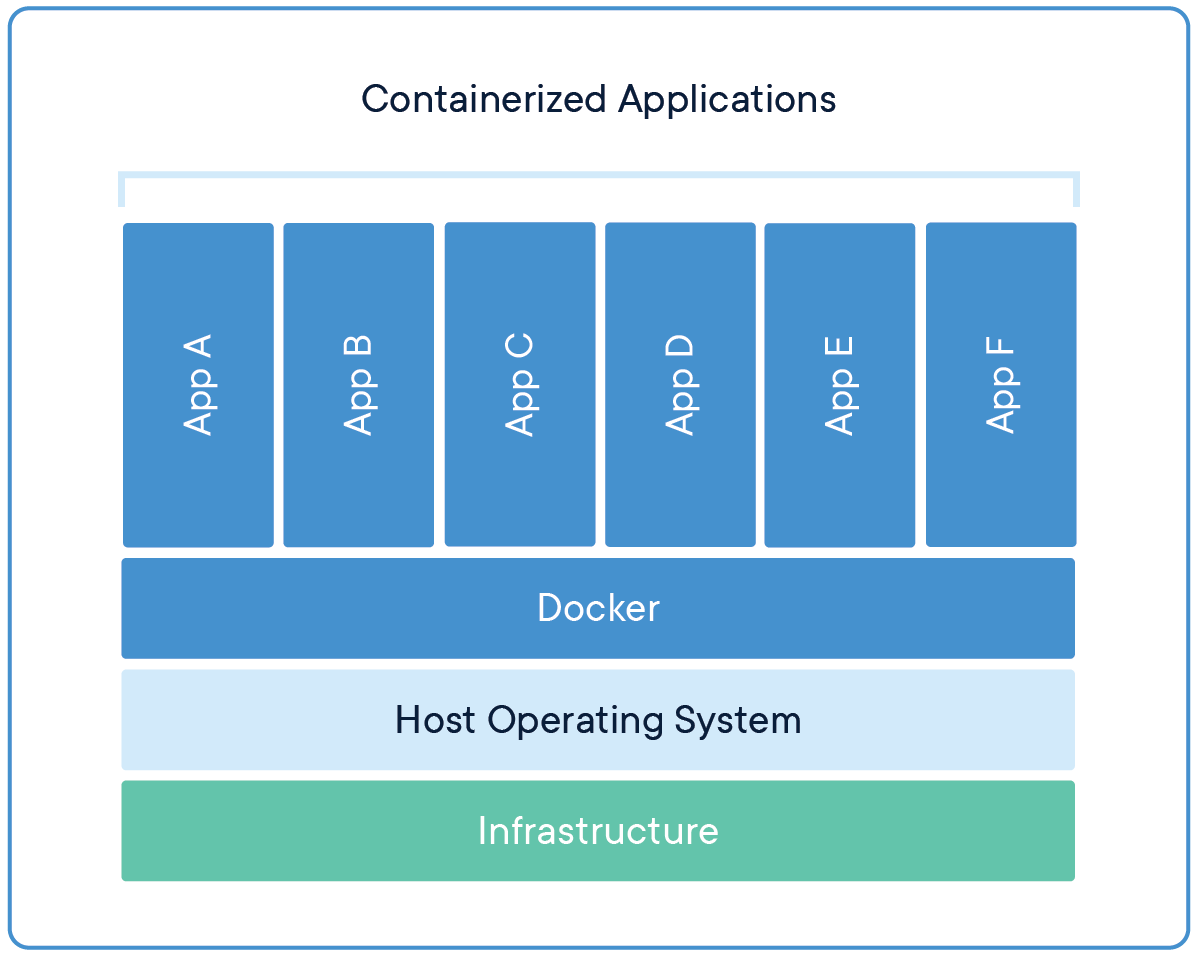

A container is a standard unit of software that packages the code and all its dependencies so that the application runs quickly and reliably from one computing environment to another.

With containers, multiple virtual machines are replaced by multiple containers running on a single host operating system. Applications running in containers are isolated from one another, and each has access to its own file system, operating system resources and packages. To create and run containers, we need a container management tool such as Docker.

Source: Applications that share the operating system

Docker

Docker is a container management tool that packages the application code, configuration and dependencies into a portable image that can be shared and run on any platform or system that runs Docker. With Docker, we can containerize multiple applications and run them on the same machine; since they all share the host operating system's kernel, they use fewer resources than virtual machines (VMs).

A Docker container image is a lightweight, standalone, executable software package that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

Container images become containers at runtime; in the case of Docker, an image becomes a container when it runs on Docker Engine.

Docker Engine

Docker Engine is the container runtime that runs on various Linux operating systems (CentOS, Debian, Fedora, Oracle Linux, RHEL, SUSE and Ubuntu) and on Windows Server.

Docker Engine enables containerized applications to run consistently on any infrastructure, solving "dependency hell" for developers and operations teams and eliminating the "it works on my laptop!" problem.

Docker containers that run on Docker Engine are:

- Standard: Docker created the industry standard for containers, so they can be portable anywhere.

- Lightweight: Containers share the host machine's operating system kernel and therefore do not require an operating system per application, resulting in higher server efficiency and lower server and licensing costs.

- Secure: Applications are safer in containers, and Docker advertises the industry's strongest default isolation capabilities.

Docker installation

Docker is an open platform for developing, shipping and running applications. Docker allows us to separate our applications from our infrastructure so that we can deliver software quickly.

We can download and install Docker on multiple platforms. Follow the installation instructions on the official Docker website for your local operating system.

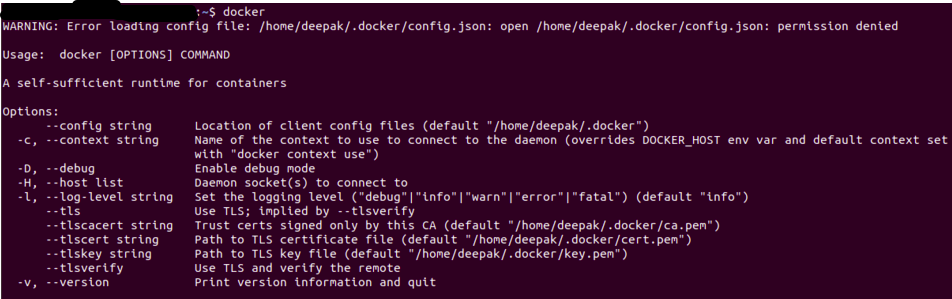

Once you have installed Docker, you can verify that the installation was successful by running the docker command in a terminal or command prompt.

The output will be similar to the following. If you get a permission error, try running it as the root user (on Linux, use sudo docker).

Source: Author
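If you would rather script this check, for example in a setup script, here is a minimal Python sketch. It assumes only that the docker CLI, if installed, is on the PATH; the function name `docker_cli_version` is our own. It uses `docker --version`, which prints the client version and does not need to contact the Docker daemon.

```python
import shutil
import subprocess
from typing import Optional


def docker_cli_version() -> Optional[str]:
    """Return the output of `docker --version`, or None if the CLI is unavailable."""
    # shutil.which searches PATH for the executable, like `which docker`
    if shutil.which("docker") is None:
        return None
    # `docker --version` reports the client version only; no daemon is needed
    result = subprocess.run(
        ["docker", "--version"], capture_output=True, text=True, check=False
    )
    if result.returncode != 0:
        return None
    return result.stdout.strip()


print(docker_cli_version() or "docker CLI not found on PATH")
```

Commands that talk to the daemon (such as docker run) are the ones that may need sudo on Linux.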

Dockerfile

A Dockerfile is a simple text file containing the instructions for building a Docker image. Every statement in a Dockerfile is a command or operation: for instance, which base operating system to use, which dependencies to install, or how to compile the code. Many of these instructions act as layers.

The best part is that layers are cached: if we modify an instruction in the Dockerfile, the next build reuses the cached layers that come before it and rebuilds only the modified layer and the layers that follow it.
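The caching rule can be illustrated with a toy model in Python. This is a simplified sketch, not Docker's actual implementation: here each layer's cache key is a hash of its parent's key plus the instruction text (real Docker also checksums the files used by COPY and ADD). Because every key depends on the one before it, changing one instruction changes the keys of all later instructions, so everything from the modified line down is rebuilt.

```python
import hashlib
from typing import Dict, List, Tuple


def layer_keys(instructions: List[str]) -> List[str]:
    """Chain a cache key for each instruction: key_i = sha256(key_{i-1} + instruction_i)."""
    keys = []
    parent = ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def build(instructions: List[str], cache: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Simulate a build: return (reused, rebuilt) instructions and update the cache."""
    reused, rebuilt = [], []
    for instruction, key in zip(instructions, layer_keys(instructions)):
        if key in cache:
            reused.append(instruction)
        else:
            cache[key] = instruction  # pretend we executed the step and stored its layer
            rebuilt.append(instruction)
    return reused, rebuilt


cache: Dict[str, str] = {}
v1 = ["FROM ubuntu:18.04", "WORKDIR /app", "COPY . /app",
      "RUN pip install -r requirements.txt", "EXPOSE 5000", "CMD python app.py"]
build(v1, cache)  # first build: every step runs

v2 = list(v1)
v2[3] = "RUN pip install --no-cache-dir -r requirements.txt"  # edit the 4th instruction
reused, rebuilt = build(v2, cache)
print(len(reused), len(rebuilt))  # 3 reused, 3 rebuilt
```

This is also why slow, rarely changing steps (such as installing dependencies) are best placed before steps that change often.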

A sample Dockerfile looks like this:

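```dockerfile
FROM ubuntu:18.04
# The ubuntu:18.04 base image ships without Python, so install Python 3 and pip first
RUN apt-get update && apt-get install -y python3 python3-pip
WORKDIR /app
COPY . /app
RUN pip3 install -r requirements.txt
EXPOSE 5000
CMD ["python3", "app.py"]
```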

Each instruction adds a step to the image build (only RUN, COPY and ADD add filesystem layers; the others record metadata):

- FROM sets the base image; here we use the ubuntu:18.04 Docker image

- WORKDIR sets the working directory inside the image

- COPY adds files from the build context (the current directory on the host) to the image; here we copy the current directory's files into the container's /app directory

- RUN executes commands inside the image at build time; here it runs pip to install the dependencies listed in requirements.txt

- EXPOSE documents the port the application listens on, here 5000 (the port still has to be published with docker run -p)

- CMD specifies the default command to run when the container starts
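To make the instruction-by-instruction structure concrete, here is a small Python sketch that splits a Dockerfile like the sample above into (instruction, arguments) pairs and marks which ones add filesystem layers. It is deliberately naive: it skips comments and blank lines but does not handle line continuations, parser directives or heredocs, so it is no substitute for Docker's own parser.

```python
from typing import List, Tuple

# Per Docker's documentation, only these instructions add filesystem layers;
# FROM starts from the base image's existing layers instead of adding a new one
FILESYSTEM_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def parse_dockerfile(text: str) -> List[Tuple[str, str, bool]]:
    """Return (instruction, arguments, adds_filesystem_layer) for each instruction line."""
    steps = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        instruction, _, args = line.partition(" ")
        instruction = instruction.upper()  # instructions are case-insensitive
        steps.append((instruction, args.strip(), instruction in FILESYSTEM_INSTRUCTIONS))
    return steps


SAMPLE = """\
FROM ubuntu:18.04
WORKDIR /app
COPY . /app
RUN pip install -r requirements.txt
EXPOSE 5000
CMD python app.py
"""

for instruction, args, is_layer in parse_dockerfile(SAMPLE):
    kind = "filesystem layer" if is_layer else "metadata only"
    print(f"{instruction:<8} {args:<35} {kind}")
```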

Docker Image

Once a Dockerfile is created, we can build a Docker image from it. A Docker image provides the runtime environment for an application, including all the code, the required configuration files and the dependencies.

A Docker image consists of read-only layers, each of which represents a Dockerfile instruction. The layers are stacked, and each one stores only the changes (the delta) relative to the layer below it.
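Here is a toy Python model of this stacking, as an illustration of the idea rather than of how Docker's storage drivers work. Each layer is a read-only dict of path-to-content changes, and a `DELETED` marker (our stand-in for the "whiteout" entries union filesystems use to record deletions) hides a file from the layers below. Looking up a path walks the stack from the top layer down.

```python
from types import MappingProxyType
from typing import Dict, List, Mapping, Optional

DELETED = object()  # stand-in for a whiteout entry marking a deleted file


def make_layer(changes: Dict[str, object]) -> Mapping[str, object]:
    """Freeze a layer's delta so it is read-only, like an image layer."""
    return MappingProxyType(dict(changes))


def read(layers: List[Mapping[str, object]], path: str) -> Optional[object]:
    """Resolve a path by searching from the topmost layer down to the base layer."""
    for layer in reversed(layers):
        if path in layer:
            value = layer[path]
            return None if value is DELETED else value
    return None


def merged_view(layers: List[Mapping[str, object]]) -> Dict[str, object]:
    """Flatten the stack into the file system a container would see."""
    view: Dict[str, object] = {}
    for layer in layers:  # apply deltas from bottom to top
        for path, value in layer.items():
            if value is DELETED:
                view.pop(path, None)
            else:
                view[path] = value
    return view


base = make_layer({"/etc/os-release": "Ubuntu 18.04", "/tmp/cache": "junk"})
copy_layer = make_layer({"/app/app.py": "print('hi')"})
cleanup_layer = make_layer({"/tmp/cache": DELETED})
image = [base, copy_layer, cleanup_layer]

print(read(image, "/app/app.py"))  # print('hi')
print(sorted(merged_view(image)))  # ['/app/app.py', '/etc/os-release']
```

Note that `/tmp/cache` still exists in the base layer even though the container no longer sees it; in a real image, a file deleted in a later layer still takes up space, which is why cleanup is usually done in the same RUN step that creates the files.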

We build a Docker image from a Dockerfile using the docker build command.

Once the Docker image is built, we can test it using the docker run command, which creates a container from the image and runs the application.
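The two commands can also be driven from Python. The sketch below only assembles the argument lists, so they can be inspected or tested without Docker, and runs them when asked. The image tag `sentiment-app` is a hypothetical name; `-t` names the image, `--rm` removes the container when it exits, and `-p 5000:5000` publishes the container's port 5000 on the host's port 5000.

```python
import subprocess
from typing import List

IMAGE_TAG = "sentiment-app"  # hypothetical image name


def build_cmd(tag: str, context: str = ".") -> List[str]:
    # `docker build -t <tag> <context>` builds an image from the Dockerfile in <context>
    return ["docker", "build", "-t", tag, context]


def run_cmd(tag: str, host_port: int = 5000, container_port: int = 5000) -> List[str]:
    # `--rm` removes the container on exit; `-p host:container` publishes the port
    return ["docker", "run", "--rm", "-p", f"{host_port}:{container_port}", tag]


def execute(cmd: List[str]) -> None:
    """Echo and run a command, raising CalledProcessError if it fails."""
    print("$", " ".join(cmd))
    subprocess.run(cmd, check=True)


print(" ".join(build_cmd(IMAGE_TAG)))
print(" ".join(run_cmd(IMAGE_TAG)))
```

Calling `execute(build_cmd(IMAGE_TAG))` and then `execute(run_cmd(IMAGE_TAG))` from the project directory would build the image and start the app, which should then answer at http://localhost:5000.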

Docker registry

Once the Docker image is built and tested, we can share it with others so they can use our app. For that, we need to push the Docker image to a public image registry such as Docker Hub, Google Container Registry (GCR) or any other registry.

We can also push our Docker images to private registries to restrict who can access them.
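Before pushing, the image is usually tagged following the registry's naming scheme, typically `[registry/]namespace/repository[:tag]`. If no registry host is given, Docker assumes Docker Hub (`docker.io`), and if no tag is given it assumes `latest`. The Python sketch below applies these defaults and builds the `docker tag` and `docker push` commands. It is a simplified parser (it ignores digests such as `@sha256:...`), and the Docker Hub username `your-username` is a placeholder.

```python
from typing import List, NamedTuple

DEFAULT_REGISTRY = "docker.io"
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository and tag (digests not handled)."""
    name, tag = ref, DEFAULT_TAG
    # A ':' after the last '/' separates the tag; an earlier ':' belongs to a host:port
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]
    first, _, rest = name.partition("/")
    # Simplified rule: the first component is a registry host if it contains '.' or ':'
    # or is 'localhost'
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = DEFAULT_REGISTRY, name
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = "library/" + repository  # official Docker Hub images live under library/
    return ImageRef(registry, repository, tag)


def push_cmds(local_tag: str, remote_ref: str) -> List[List[str]]:
    """Commands that give a local image a registry name and upload it."""
    return [["docker", "tag", local_tag, remote_ref], ["docker", "push", remote_ref]]


print(parse_image_ref("ubuntu:18.04"))
print(parse_image_ref("localhost:5000/sentiment-app"))
print(push_cmds("sentiment-app", "your-username/sentiment-app:v1"))
```

Pushing to Docker Hub also requires logging in first with docker login.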

Machine learning application

The machine learning application covers a complete workflow, from input processing and feature engineering to generating results. We will use a simple sentiment analysis application, containerize it with Docker, and push it to Docker Hub to make it available to others.

Sentiment analysis

We will not go into the details of the machine learning part, just give an overview: we will containerize a Twitter sentiment analysis app. The code and data files can be found on GitHub.

You can clone this application or containerize your own; the process is the same.

The Git repository contains the following files:

- app.py: Main application

- train.py: Script to train and save the trained model

- sentiment.tsv: data file

- requirements.txt: lists the required packages/dependencies

- Dockerfile: to create the docker image

- templates folder: contains the web pages (HTML templates) for the application

- model folder: contains our trained model

Here's what requirements.txt looks like (we could also pin a specific version for each library):

```text
numpy
pandas
scikit-learn
flask
nltk
regex
```
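Without pins, two builds of the same Dockerfile can install different library versions, for instance on another machine or after the pip layer's cache is invalidated. A small Python sketch that lists the requirements not pinned with `==`; it only understands simple one-requirement-per-line files and is not a full requirements-file parser.

```python
from typing import List


def unpinned(requirements_text: str) -> List[str]:
    """Return requirement lines that do not pin an exact version with '=='."""
    loose = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop inline comments and whitespace
        if not line:
            continue
        if "==" not in line:
            loose.append(line)
    return loose


REQUIREMENTS = "numpy\npandas\nscikit-learn\nflask\nnltk\nregex\n"
print(unpinned(REQUIREMENTS))  # every package in the sample file is unpinned
```

Running pip freeze in the environment where the model was trained prints every installed package as `name==version`, which is one way to obtain pins.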

In app.py, we load the trained model and apply the same preprocessing that we used during training.
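One way to guarantee that train.py and app.py preprocess text identically is to keep the steps in a single function that both scripts import. The sketch below mirrors the steps of the predict endpoint (strip @handles, split on whitespace, stem each token, rejoin). The function name `preprocess` is hypothetical, `re.sub` is our assumed equivalent of the app's `remove_pattern` helper, and the stemmer is passed in as a function so the sketch runs without NLTK.

```python
import re
from typing import Callable

HANDLE_PATTERN = r"@[\w]*"  # Twitter handles such as @user


def preprocess(text: str, stem: Callable[[str], str]) -> str:
    """Apply the training-time cleaning steps to one message."""
    # Assumed equivalent of remove_pattern(): delete every @handle
    cleaned = re.sub(HANDLE_PATTERN, "", text)
    tokens = cleaned.split()
    return " ".join(stem(token) for token in tokens)


def toy_stem(word: str) -> str:
    """Stand-in stemmer for illustration only: lowercase and strip a trailing 'ing'."""
    word = word.lower()
    return word[:-3] if word.endswith("ing") and len(word) > 5 else word


print(preprocess("@user Loving this amazing weather", toy_stem))
```

Assuming training used NLTK's Porter stemmer, both scripts would call something like `preprocess(message, PorterStemmer().stem)`.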

The Flask application serves two endpoints, home and predict:

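```python
from flask import render_template, request

# app, remove_pattern, stemmer, cv (the vectorizer) and clf (the classifier)
# are created or loaded earlier in app.py

@app.route('/')
def home():
    # Render the input form from templates/home.html
    return render_template('home.html')

@app.route('/predict', methods=['POST'])
def predict():
    if request.method == 'POST':
        message = request.form['message']
        # Remove Twitter handles (@user), as during training
        clean_test = remove_pattern(message, r"@[\w]*")
        tokenized_clean_test = clean_test.split()
        # Stem each token with the same stemmer used in training
        stem_tokenized_clean_test = [stemmer.stem(i) for i in tokenized_clean_test]
        message = " ".join(stem_tokenized_clean_test)
        # Vectorize the cleaned message and classify it
        data = [message]
        data = cv.transform(data)
        my_prediction = clf.predict(data)
    return render_template('result.html', prediction=my_prediction)
```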

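We also have to load the trained model, the vectorizer and the stemmer used in training, and we configure the app to receive requests on port 5000, bound to 0.0.0.0 (all network interfaces) rather than to localhost only; inside a container, this is what makes the app reachable through the published port.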
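A minimal sketch of that setup part of app.py, assuming train.py saved the classifier and the vectorizer with pickle under model/. The file names `model.pkl` and `vectorizer.pkl` and the helper names are hypothetical; use whatever train.py actually writes. The `serve` helper only shows the host and port configuration and is not called here.

```python
import os
import pickle
from typing import Any, Dict

MODEL_DIR = "model"
# Hypothetical file names for the artifacts written by train.py
ARTIFACT_FILES = {"clf": "model.pkl", "cv": "vectorizer.pkl"}
HOST, PORT = "0.0.0.0", 5000  # all interfaces, so the published container port reaches Flask


def load_artifacts(model_dir: str = MODEL_DIR) -> Dict[str, Any]:
    """Unpickle the trained classifier and vectorizer saved during training."""
    artifacts = {}
    for name, filename in ARTIFACT_FILES.items():
        # Only unpickle files you created yourself: pickle can execute arbitrary code
        with open(os.path.join(model_dir, filename), "rb") as f:
            artifacts[name] = pickle.load(f)
    return artifacts


def serve(app: Any) -> None:
    """Start the Flask development server on 0.0.0.0:5000."""
    app.run(host=HOST, port=PORT)
```

In app.py this could be used as `artifacts = load_artifacts()` at startup, with `serve(app)` placed under `if __name__ == "__main__":`. Since the Dockerfile's CMD runs app.py directly, that block executes when the container starts.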