Overview

- Here's a quick introduction to building ML pipelines with PySpark.

- The ability to build these machine learning pipelines is a must-have skill for any aspiring data scientist.

- This is a hands-on article with a structured, code-first approach to PySpark, so get your favorite Python IDE ready!

Introduction

Take a moment to reflect on this: what skills must an aspiring data scientist have to land a position in the industry?

A machine learning project has many moving components that need to come together before we can run it successfully. Knowing how to build an end-to-end machine learning pipeline is a valuable asset. As a data scientist (aspiring or established), you should know how these machine learning pipelines work.

This is, in a nutshell, the fusion of two disciplines: data science and software engineering. The two go hand in hand for a data scientist. It's not just about building models; we also need the software skills to build enterprise-grade systems.

So, in this article, we will focus on the basic idea behind building these machine learning pipelines using PySpark. This is a hands-on article, so fire up your favorite Python IDE and let's get going!

Note: This is part 2 of my PySpark series for beginners, following the introductory article.

Table of Contents

- Perform Basic Operations on a Spark Data Frame
  - Read a CSV file
  - Defining the schema
- Data exploration using PySpark
  - Check the dimensions of the data
  - Describe the data
  - Missing value count
  - Find count of unique values in a column
- Encode categorical variables using PySpark
  - String indexing
  - One-hot encoding
  - Vector Assembler
- Building Machine Learning Pipelines with PySpark
  - Transformers and estimators
  - Examples of pipelines

Perform Basic Operations on a Spark Data Frame

An essential (and first) step in any data science project is to understand the data before building any machine learning model. Most aspiring data scientists stumble here: they just don't spend enough time understanding what they're working with. There is a tendency to rush in and build models, a mistake you should avoid.

We will follow this principle in this article. I will take a structured approach throughout to ensure that we do not miss any critical steps.

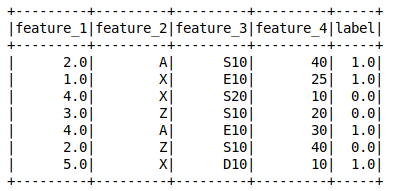

First, let's take a moment to understand each variable that we'll be working with here. We are going to use a dataset from an India vs Bangladesh cricket match. Let's look at the variables in the dataset:

- Batsman: Unique ID of the batter (integer)

- Batsman_Name: Batter's name (string)

- Bowler: Unique ID of the bowler (integer)

- Bowler_Name: Bowler's name (string)

- Comment: Description of the event as broadcast (string)

- Detail: Another string describing events such as wickets and extra deliveries (string)

- Dismissed: Unique ID of the batter if dismissed (string)

- ID: Unique row ID (string)

- Isball: Whether the delivery was legal or not (boolean)

- Isboundary: Whether the batter hit a boundary or not (binary)

- Iswicket: Whether the batter was dismissed or not (binary)

- Over: Over number (double)

- Runs: Runs scored on that particular delivery (integer)

- Timestamp: Time the data was recorded (timestamp)

So let's get started, shall we?

Read a CSV file

When we start Spark, the SparkSession variable is conveniently available under the name spark. We can use it to read various file types, such as CSV, JSON, and text. This lets us load the data as a Spark data frame.

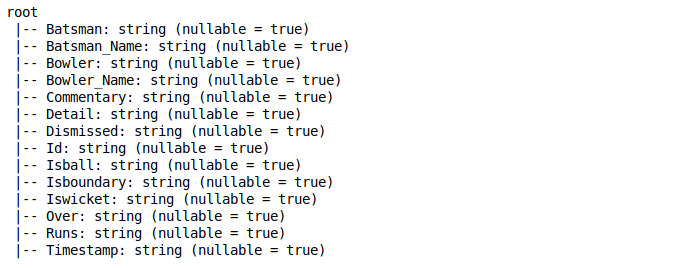

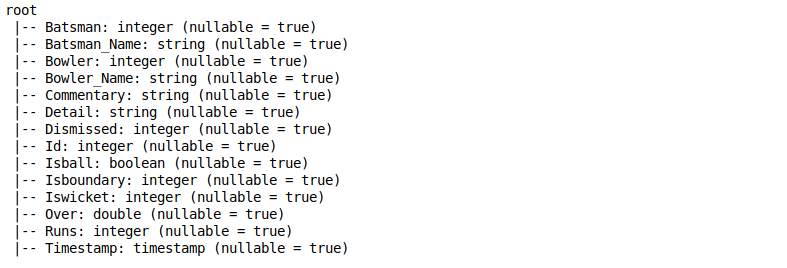

By default, Spark treats the data type of every column as a string. You can check the data types using the printSchema function on the data frame:

Defining the schema

Now, we don't want all columns in our dataset to be treated as strings. So what can we do about it?

We can define a custom schema for our data frame in Spark. For this, we need to create a StructType object that holds a list of StructField objects. Each StructField is defined with a column name, the data type of the column, and whether null values are allowed for that column.

Please refer to the following code snippet to understand how to create this custom schema:

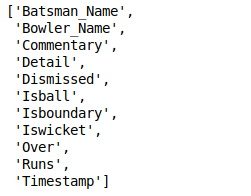

Remove columns from data

In any machine learning project, we always have some columns that are not needed to solve the problem. I'm sure you've faced this dilemma before too, whether in industry or in an online hackathon.

In our case, we can use the drop function to remove columns from the data. Use the asterisk (*) before a list of column names to drop multiple columns at once.


Data exploration using PySpark

Check the dimensions of the data

Unlike Pandas, Spark data frames do not have a shape attribute to check the dimensions of the data. Instead, we can count the rows and the columns separately to get the dimensions of the dataset:

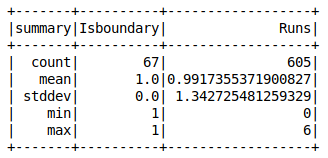

Describe the data

Spark's describe function gives us most of the statistical results, such as the mean, count, minimum, maximum, and standard deviation. You can also use the summary function, which additionally reports the quartiles of the numeric variables.


Missing value count

It's rare to get a dataset with no missing values. Can you remember the last time that happened?

It is important to check the number of missing values present in all columns. Knowing the count helps us deal with missing values before building any machine learning model on that data.


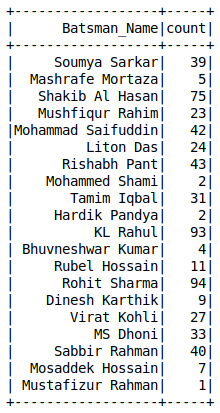

Value counts of a column

Unlike Pandas, Spark data frames do not have a value_counts() function. You can use the groupBy function to calculate the count of each unique value in a categorical variable.


Encode categorical variables using PySpark

Most machine learning algorithms accept data only in numerical form. Therefore, it is essential to convert any categorical variable present in our data set to numbers.

Remember that we cannot simply remove them from our dataset, as they may contain useful information. It would be a nightmare to lose that just because we don't want to figure out how to use them!

Let's look at some of the methods for encoding categorical variables using PySpark.

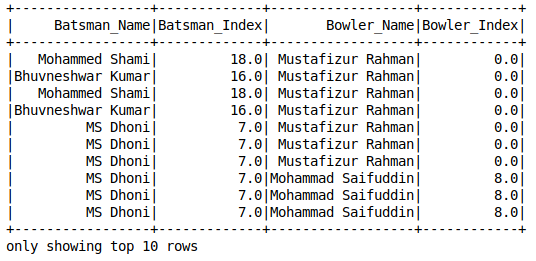

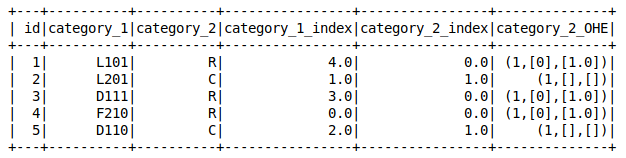

String indexing


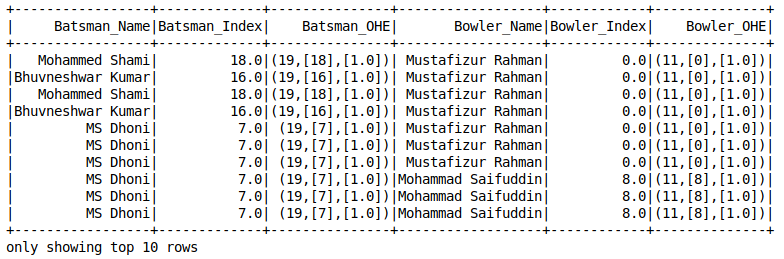

One-Hot Encoding

One-hot encoding is a concept every data scientist should know. I have relied on it several times when dealing with missing values. It's a lifesaver! Here is the caveat: Spark's OneHotEncoder does not directly encode the categorical variable. First, we need to use StringIndexer to convert the variable to numeric form, and then use OneHotEncoderEstimator (renamed to OneHotEncoder in Spark 3.0) to encode multiple columns of the dataset.


Vector Assembler

A vector assembler combines a given list of columns into a single vector column.

This is typically used at the end of the data exploration and preprocessing steps. At this stage, we usually have some raw or transformed features that can be used to train our model. The Vector Assembler converts them into a single feature column to train the machine learning model.


Building Machine Learning Pipelines with PySpark

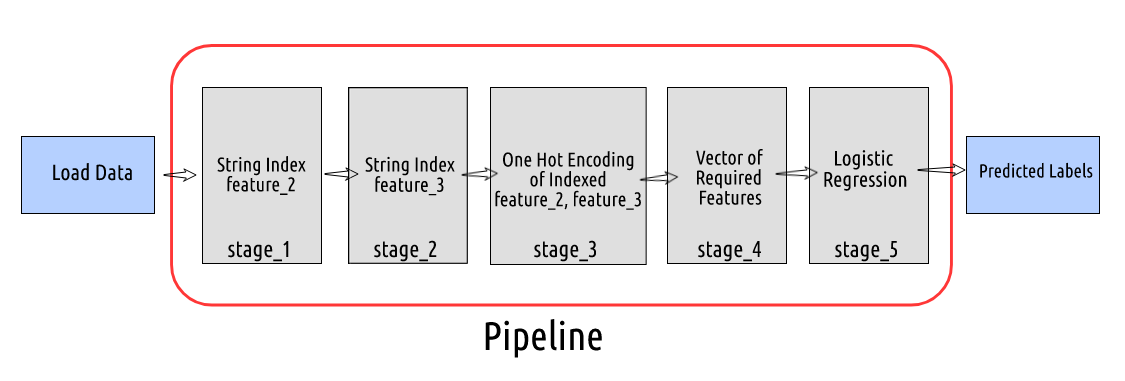

A machine learning project generally involves steps like data pre-processing, feature extraction, fitting the model and evaluating results. We need to perform many transformations on the data in sequence. As you can imagine, keeping track of them can become a tedious task.

This is where machine learning pipelines come in.

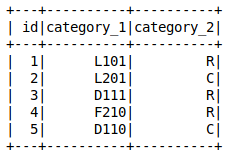

A pipeline allows us to maintain the data flow of all the relevant transformations that are required to reach the end result. We need to define the stages of the pipeline, which act as a chain of command for Spark to run. Here, each stage is either a Transformer or an Estimator.

Transformers and estimators

As the name suggests, Transformers convert one data frame into another, either by updating the current values of a particular column (such as converting categorical columns to numeric) or by mapping it to some other values using defined logic.

An Estimator implements the fit() method on a data frame and produces a model. For instance, LogisticRegression is an Estimator that trains a classification model when we call its fit() method.

Let's understand this with the help of some examples.

Examples of pipelines

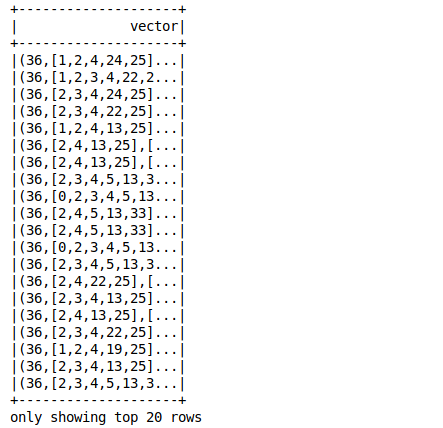


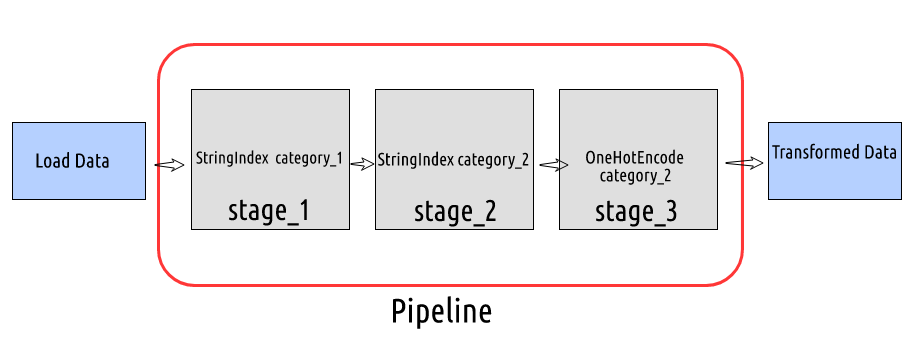

We have created the data frame. Suppose we have to transform the data in the following order:

- stage_1: Label encode or string index the column category_1

- stage_2: Label encode or string index the column category_2

- stage_3: One-hot encode the indexed column

At each stage, we pass the names of the input and output columns, and we set up the pipeline by passing the defined stages as a list to the Pipeline object.


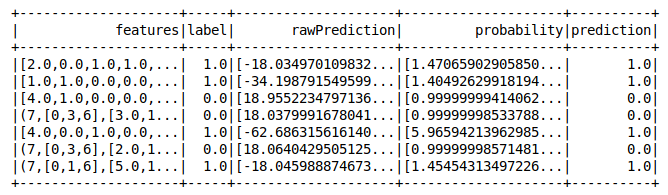

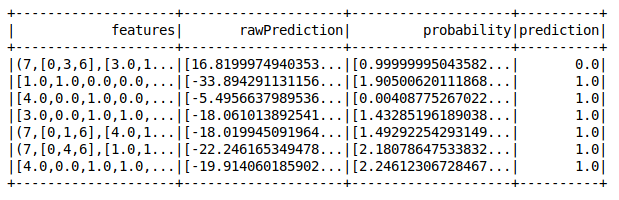

Now, let's take a more complex example of how to set up a pipeline. Here, we will transform the data and then build a logistic regression model.


Now, suppose this is the order of our pipeline:

- stage_1: Label encode or string index the column feature_2

- stage_2: Label encode or string index the column feature_3

- stage_3: One-hot encode the indexed columns of feature_2 and feature_3

- stage_4: Create a vector of all the features needed to train a logistic regression model


Perfect!

Final notes

This was a short but hands-on article on how to build machine learning pipelines using PySpark. I will say it again because it is so important: you need to know how these pipelines work. This is a big part of your role as a data scientist.

Have you worked on an end-to-end machine learning project before? Or have you been part of a team that built these pipelines in an industry setting? Let's connect in the comment section below and discuss.

See you in the next article in this PySpark series for beginners. Happy learning!
