Overview

- Feature engineering in NLP is about understanding the context of the text.

- In this blog, we will look at some of the common feature engineering techniques in NLP.

- We will compare the results of a classification task with and without performing feature engineering.

Table of Contents

- Introduction

- NLP Task Overview

- List of features with code

- Implementation

- Comparison of results with and without feature engineering

- Conclusion

Introduction

“If 80 percent of our work is data preparation, ensuring data quality is the important job of a machine learning team.” – Andrew Ng

Feature engineering is one of the most important steps in machine learning. It is the process of using domain knowledge of the data to create features that make machine learning algorithms work. Think of a machine learning algorithm as a child who is learning: the more accurate the information you provide, the better they will be able to interpret it. Focusing on our data first will give us better results than focusing only on models. Feature engineering helps us create better data, which helps the model understand it well and provide reasonable results.

NLP is a subfield of artificial intelligence that deals with the interaction between humans and machines using natural languages. To understand a natural language, we need to understand how we write a sentence and how we express our thoughts using different words, signs, special characters, etc. In short, we must understand the context of a sentence to interpret its meaning.

If we can use these contexts as features and feed them to our model, the model will be able to understand the sentence better. Some common features that we can extract from a sentence are the number of words, the number of capital words, the number of punctuation marks, the number of unique words, the number of stopwords, the average sentence length, etc. We can define these features based on the dataset we are using. In this blog, we will use a Twitter dataset, so we can add some other features like the number of hashtags, the number of mentions, etc. We will discuss them in detail in the next sections.

NLP Task Overview

To understand the task of feature engineering in NLP, we will implement it on a Twitter dataset. We will use the COVID-19 Fake News dataset. The task is to classify each tweet as Fake or True. The dataset is divided into train, validation, and test sets. The distribution is shown below:

| Split | True | Fake | Total |
|---|---|---|---|
| Train | 3360 | 3060 | 6420 |
| Validation | 1120 | 1020 | 2140 |
| Test | 1120 | 1020 | 2140 |

Feature list

I will list a total of 15 features that we can use for the above dataset. The number of features depends entirely on the type of dataset you are using.

1. Number of characters

Count the number of characters present in a tweet.

def count_chars(text):
    return len(text)

2. Number of words

Count the number of words present in a tweet.

def count_words(text):
    return len(text.split())

3. Number of capital characters

Count the number of uppercase characters present in a tweet.

def count_capital_chars(text):
    count = 0
    for i in text:
        if i.isupper():
            count += 1
    return count

4. Number of uppercase words

Count the number of uppercase words present in a tweet.

def count_capital_words(text):
    return sum(map(str.isupper, text.split()))

5. Number of punctuation marks

This function returns a dictionary with the count of each of the 32 punctuation marks. Each count can be used as a standalone feature, as discussed in the Implementation section.

def count_punctuations(text):
    # The 32 ASCII punctuation marks (the same characters as string.punctuation)
    punctuations = '!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~'
    d = dict()
    for i in punctuations:
        d[str(i) + ' count'] = text.count(i)
    return d
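As a quick illustration of what this dictionary looks like, here is a minimal sketch that rebuilds the counter on top of Python's `string.punctuation` constant (which holds the same 32 ASCII punctuation marks) and runs it on a made-up tweet. The sample text and the `nonzero` variable are assumptions added for demonstration only.

```python
import string


def count_punctuations(text):
    """Map '<mark> count' to the number of times each ASCII punctuation mark occurs."""
    return {f"{mark} count": text.count(mark) for mark in string.punctuation}


# Hypothetical tweet, used only to show the shape of the result.
sample = "Is this real?! #COVID19 cases are up 20%..."
counts = count_punctuations(sample)

# Keep only the marks that actually occur, to make the output easier to read.
nonzero = {name: value for name, value in counts.items() if value > 0}
print(len(counts))
print(nonzero)
```

Most of the 32 counts are zero for any single tweet, which is worth keeping in mind when these columns are later added to the feature matrix.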

6. Number of words in quotes

Count the number of words between single quotes and double quotes.

import re

def count_words_in_quotes(text):
    # Find every span wrapped in single or double quotes
    x = re.findall(r"'.+?'|\".+?\"", text)
    count = 0
    for i in x:
        t = i[1:-1]  # drop the surrounding quote characters
        count += count_words(t)
    return count

7. Number of sentences

Count the number of sentences in a tweet.

import nltk  # sent_tokenize needs the NLTK "punkt" tokenizer data

def count_sent(text):
    return len(nltk.sent_tokenize(text))

8. Number of unique words

Count the number of unique words in a tweet.

def count_unique_words(text):
    return len(set(text.split()))

9. Hashtag count

Since we are using a Twitter dataset, we can count the number of hashtags used in a tweet.

def count_htags(text):
    x = re.findall(r'(#\w[A-Za-z0-9]*)', text)
    return len(x)

10. Mention count

On Twitter, people often reply to or mention someone in their tweet, so the number of mentions can also be treated as a feature.

def count_mentions(text):
    x = re.findall(r'(@\w[A-Za-z0-9]*)', text)
    return len(x)
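To make the hashtag and mention counters concrete, the sketch below runs both on a made-up tweet. It assumes the patterns mean "`#` or `@`, followed by a word character and then optional letters or digits"; the constant names `HASHTAG_RE` and `MENTION_RE` and the sample text are hypothetical.

```python
import re

# Assumed patterns: '#' or '@', one word character, then optional ASCII letters/digits.
HASHTAG_RE = re.compile(r"#\w[A-Za-z0-9]*")
MENTION_RE = re.compile(r"@\w[A-Za-z0-9]*")


def count_htags(text):
    """Count hashtag-like tokens in the text."""
    return len(HASHTAG_RE.findall(text))


def count_mentions(text):
    """Count mention-like tokens in the text."""
    return len(MENTION_RE.findall(text))


sample = "RT @WHO: Wash your hands! #COVID19 #StaySafe cc @CDCgov"
print(count_htags(sample), count_mentions(sample))

# Caveat: an email address also contains '@' followed by word characters,
# so it is counted as a mention by this simple pattern.
print(count_mentions("mail me at user@example.com"))
```

If email addresses are common in your data, it may be worth stripping them before counting mentions.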

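11. Stopword count

Here we will count the number of stopwords used in a tweet.

from nltk.corpus import stopwords  # needs the NLTK "stopwords" corpus
from nltk.tokenize import word_tokenize  # needs the NLTK "punkt" tokenizer data

def count_stopwords(text):
    stop_words = set(stopwords.words('english'))
    word_tokens = word_tokenize(text)
    stopwords_x = [w for w in word_tokens if w in stop_words]
    return len(stopwords_x)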

12. Average word length

This can be calculated by dividing the number of characters by the number of words.

df['avg_wordlength'] = df['char_count']/df['word_count']

13. Average sentence length

This can be calculated by dividing the word count by the sentence count.

df['avg_sentlength'] = df['word_count']/df['sent_count']

14. Unique words vs. word count

This feature is the ratio of the number of unique words to the total number of words.

df['unique_vs_words'] = df['unique_word_count']/df['word_count']

15. Stopword count vs. word count

This feature is the ratio of the number of stopwords to the total number of words.

df['stopwords_vs_words'] = df['stopword_count']/df['word_count']
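One practical detail with these four ratios: a tweet with zero words or zero sentences leads to a division by zero, which pandas turns into `inf` or `NaN` rather than an error. The sketch below shows one possible guard in plain Python. The helper names `safe_ratio` and `ratio_features`, and the choice of `0.0` as the fallback value, are assumptions and not part of the original code.

```python
def safe_ratio(numerator, denominator, default=0.0):
    """Return numerator / denominator, or `default` when the denominator is zero."""
    if denominator == 0:
        return default
    return numerator / denominator


def ratio_features(char_count, word_count, sent_count, unique_word_count, stopword_count):
    """Compute the four ratio features from raw counts for a single tweet."""
    return {
        "avg_wordlength": safe_ratio(char_count, word_count),
        "avg_sentlength": safe_ratio(word_count, sent_count),
        "unique_vs_words": safe_ratio(unique_word_count, word_count),
        "stopwords_vs_words": safe_ratio(stopword_count, word_count),
    }


# A normal tweet and an empty one (all counts zero).
normal = ratio_features(char_count=22, word_count=4, sent_count=1,
                        unique_word_count=4, stopword_count=0)
empty = ratio_features(0, 0, 0, 0, 0)
print(normal)
print(empty)
```

Whether `0.0` is the right fallback depends on the model; dropping empty tweets before feature extraction is another reasonable option.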

Implementation

You can download the dataset from here. After downloading it, we can start applying all the functions we defined above. Since the focus is on feature engineering, we will keep the rest of the approach simple, using TF-IDF and basic preprocessing. All the code is available in my GitHub repository: https://github.com/ahmadkhan242/Feature-Engineering-in-NLP.

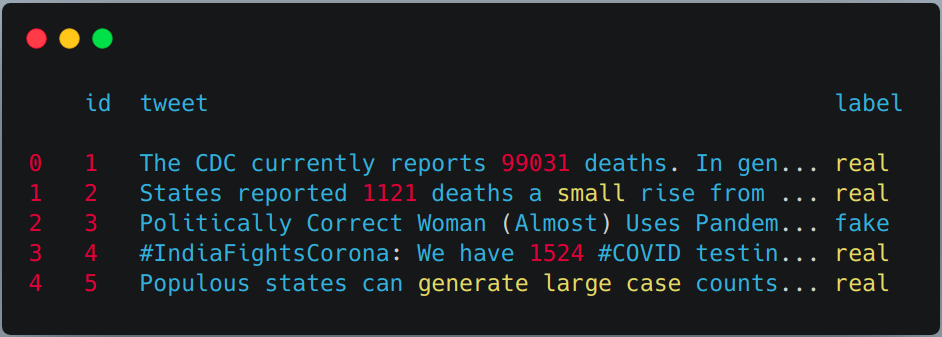

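- Read the train, validation, and test sets with pandas.

import pandas as pd

train = pd.read_csv("train.csv")
val = pd.read_csv("validation.csv")
test = pd.read_csv("testWithLabel.csv")

# For this task we will combine the train and validation datasets and then use
# a simple train/test split from sklearn.
# ignore_index=True gives the combined frame a fresh 0..n-1 index, so the
# index-based merges used later line up row by row.
df = pd.concat([train, val], ignore_index=True)
df.head()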

- Apply the previously defined feature extraction functions to the train and test sets.

df['char_count'] = df["tweet"].apply(lambda x: count_chars(x))
df['word_count'] = df["tweet"].apply(lambda x: count_words(x))
df['sent_count'] = df["tweet"].apply(lambda x: count_sent(x))
df['capital_char_count'] = df["tweet"].apply(lambda x: count_capital_chars(x))
df['capital_word_count'] = df["tweet"].apply(lambda x: count_capital_words(x))
df['quoted_word_count'] = df["tweet"].apply(lambda x: count_words_in_quotes(x))
df['stopword_count'] = df["tweet"].apply(lambda x: count_stopwords(x))
df['unique_word_count'] = df["tweet"].apply(lambda x: count_unique_words(x))
df['htag_count'] = df["tweet"].apply(lambda x: count_htags(x))
df['mention_count'] = df["tweet"].apply(lambda x: count_mentions(x))
df['punct_count'] = df["tweet"].apply(lambda x: count_punctuations(x))
df['avg_wordlength'] = df['char_count']/df['word_count']
df['avg_sentlength'] = df['word_count']/df['sent_count']
df['unique_vs_words'] = df['unique_word_count']/df['word_count']
df['stopwords_vs_words'] = df['stopword_count']/df['word_count']
# Similarly, you can apply them to the test set
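The same step can also be written as a loop over a mapping from column name to function, which makes it easy to apply an identical set of features to both the train and test frames. The following is a minimal sketch under that assumption: `FEATURE_FUNCS`, `add_features`, and the toy DataFrame are hypothetical names and data, and only three of the counting functions are included to keep it short.

```python
import pandas as pd


def count_chars(text):
    return len(text)


def count_words(text):
    return len(text.split())


def count_unique_words(text):
    return len(set(text.split()))


# Map each new column name to the function that computes it.
FEATURE_FUNCS = {
    "char_count": count_chars,
    "word_count": count_words,
    "unique_word_count": count_unique_words,
}


def add_features(frame, text_col="tweet"):
    """Return a copy of `frame` with one new column per entry in FEATURE_FUNCS."""
    out = frame.copy()
    for name, func in FEATURE_FUNCS.items():
        out[name] = out[text_col].apply(func)
    return out


# Toy data standing in for the real tweets.
toy = pd.DataFrame({"tweet": ["stay home stay safe", "masks work"]})
toy_features = add_features(toy)
print(toy_features)
```

Calling `add_features(df)` and `add_features(test)` would then guarantee that both frames receive the same columns in the same order.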

-

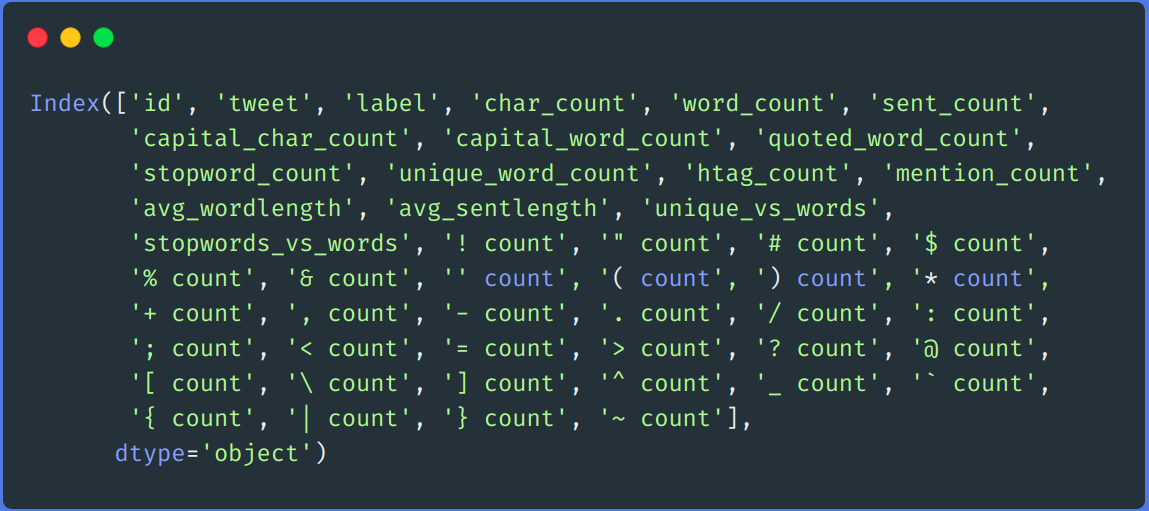

dding some additional features using score count

We will create a DataFrame from the dictionary returned by the "punct_count" function and then we will merge it with the main data set.

df_punct = pd.DataFrame(list(df.punct_count))
test_punct = pd.DataFrame(list(test.punct_count))

# Merge the punctuation DataFrames with the main DataFrames
df = pd.merge(df, df_punct, left_index=True, right_index=True)
test = pd.merge(test, test_punct, left_index=True, right_index=True)

# We can now drop the "punct_count" column from both df and test
df.drop(columns=['punct_count'], inplace=True)
test.drop(columns=['punct_count'], inplace=True)
df.columns
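The index-based merge only lines up correctly when the main DataFrame has a clean 0..n-1 index, which is not the case right after concatenating two frames without resetting the index. Here is a small sketch of the same expansion on toy data that resets the index first. The three-mark punctuation set, the toy tweets, and the variable name `punct` are assumptions made to keep the example short.

```python
import pandas as pd

# Toy frame with duplicate index labels, as produced by concatenating
# two frames without ignore_index=True.
df = pd.DataFrame(
    {"tweet": ["Hello!!", "Is it true?", "Wow..."]},
    index=[0, 1, 0],
)

# One dictionary of counts per tweet (only three marks, for brevity).
df["punct_count"] = df["tweet"].apply(
    lambda text: {mark + " count": text.count(mark) for mark in "!?."}
)

# Reset to a unique 0..n-1 index so rows align one-to-one.
df = df.reset_index(drop=True)

# Expand the dictionaries into columns and attach them to the main frame.
punct = pd.DataFrame(df["punct_count"].tolist(), index=df.index)
df = df.drop(columns=["punct_count"]).join(punct)
print(df)
```

Without the `reset_index` call, rows that share an index label would be matched against the wrong punctuation counts.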

- Preprocessing

We perform some simple preprocessing steps, such as removing links, usernames, numbers, double spaces, and punctuation, and converting the text to lowercase.

import re

def remove_links(tweet):
    '''Takes a string and removes web links from it'''
    tweet = re.sub(r'http\S+', '', tweet)  # remove http links
    tweet = re.sub(r'bit\.ly/\S+', '', tweet)  # remove bitly links
    tweet = tweet.replace('[link]', '')  # remove [link] placeholders
    return tweet

def remove_users(tweet):
    '''Takes a string and removes retweet and @user information'''
    tweet = re.sub(r'(RT\s@[A-Za-z]+[A-Za-z0-9_-]+)', '', tweet)  # remove retweet
    tweet = re.sub(r'(@[A-Za-z]+[A-Za-z0-9_-]+)', '', tweet)  # remove tweeted at
    return tweet

my_punctuation = '!"$%&\'()*+,-./:;<=>?[\\]^_`{|}~•@'

def preprocess(sent):
    sent = remove_users(sent)
    sent = remove_links(sent)
    sent = sent.lower()  # lower case
    sent = re.sub('[' + re.escape(my_punctuation) + ']+', ' ', sent)  # strip punctuation
    sent = re.sub(r'\s+', ' ', sent)  # remove double spacing
    sent = re.sub(r'([0-9]+)', '', sent)  # remove numbers
    sent_token_list = [word for word in sent.split(' ')]
    sent = " ".join(sent_token_list)
    return sent

df['tweet'] = df['tweet'].apply(lambda x: preprocess(x))
test['tweet'] = test['tweet'].apply(lambda x: preprocess(x))
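To see the effect of these steps on a single tweet, here is a condensed, self-contained variant of the pipeline. It is a sketch and not the exact code above: it assumes usernames match `\w+`, removes numbers before collapsing whitespace, and trims the result. The constant name `PUNCT` and the sample tweet are hypothetical.

```python
import re

# Punctuation to strip; '#' is deliberately absent, so hashtags keep their marker.
PUNCT = '!"$%&\'()*+,-./:;<=>?[\\]^_`{|}~•@'


def preprocess(sent):
    """Lowercase a tweet and strip retweet markers, mentions, links, punctuation and digits."""
    sent = re.sub(r'RT\s@\w+', '', sent)                      # retweet markers
    sent = re.sub(r'@\w+', '', sent)                          # remaining mentions
    sent = re.sub(r'http\S+', '', sent)                       # links
    sent = sent.lower()
    sent = re.sub('[' + re.escape(PUNCT) + ']+', ' ', sent)   # punctuation -> space
    sent = re.sub(r'[0-9]+', '', sent)                        # numbers
    sent = re.sub(r'\s+', ' ', sent)                          # collapse whitespace
    return sent.strip()


sample = "RT @WHO: Wash your hands! 20 seconds https://t.co/abc #COVID19"
cleaned = preprocess(sample)
print(cleaned)
```

Note that number removal also trims digits inside tokens, so a hashtag such as `#COVID19` loses its trailing digits.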

- Text encoding

We will encode our text data using TF-IDF. We first fit and transform the vectorizer on the tweet column of the training set, then use it to transform the test set, and finally merge the result with all the feature columns.

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer()
train_tf_idf_features = vectorizer.fit_transform(df['tweet']).toarray()
test_tf_idf_features = vectorizer.transform(test['tweet']).toarray()

# Convert the arrays above to DataFrames
train_tf_idf = pd.DataFrame(train_tf_idf_features)
test_tf_idf = pd.DataFrame(test_tf_idf_features)

# Separate the train and test labels from the features
train_Y = df['label']
test_Y = test['label']

# List all features
features = ['char_count', 'word_count', 'sent_count',
            'capital_char_count', 'capital_word_count', 'quoted_word_count',
            'stopword_count', 'unique_word_count', 'htag_count', 'mention_count',
            'avg_wordlength', 'avg_sentlength', 'unique_vs_words', 'stopwords_vs_words',
            '! count', '" count', '# count', '$ count', '% count', '& count', "' count",
            '( count', ') count', '* count', '+ count', ', count', '- count', '. count',
            '/ count', ': count', '; count', '< count', '= count', '> count', '? count',
            '@ count', '[ count', '\\ count', '] count', '^ count', '_ count', '` count',
            '{ count', '| count', '} count', '~ count']

# Finally, merge all features with the TF-IDF features above
train = pd.merge(train_tf_idf, df[features], left_index=True, right_index=True)
test = pd.merge(test_tf_idf, test[features], left_index=True, right_index=True)

# Recent scikit-learn versions expect column names of a single type,
# so cast the integer TF-IDF column names to strings.
train.columns = train.columns.astype(str)
test.columns = test.columns.astype(str)
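Converting the TF-IDF matrix to a dense array can use a lot of memory when the vocabulary is large. As an alternative to the DataFrame merge, the sketch below keeps the matrix sparse and appends the handcrafted columns with `scipy.sparse.hstack`. This is a swapped-in technique and not the approach used in this article; the toy tweets and the two handcrafted columns (character and word counts) are assumptions.

```python
import numpy as np
from scipy.sparse import csr_matrix, hstack
from sklearn.feature_extraction.text import TfidfVectorizer

# Toy tweets and two handcrafted features per tweet: char_count, word_count.
tweets = ["covid cases rise again", "vaccine is safe", "cases fall after vaccine"]
handcrafted = np.array([[22, 4], [15, 3], [24, 4]], dtype=float)

vectorizer = TfidfVectorizer()
tfidf = vectorizer.fit_transform(tweets)  # stays a SciPy sparse matrix

# Stack the handcrafted columns to the right of the TF-IDF columns.
combined = hstack([tfidf, csr_matrix(handcrafted)]).tocsr()
print(tfidf.shape, combined.shape)
```

scikit-learn's `RandomForestClassifier` accepts sparse input, so `combined` could be passed to `fit` directly.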

- Training

For training, we will use the random forest algorithm from the scikit-learn library.

from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier

X_train, X_test, y_train, y_test = train_test_split(train, train_Y, test_size=0.2, random_state=42)

# Random Forest Classifier
clf_model = RandomForestClassifier(n_estimators=1000, min_samples_split=15, random_state=42)
clf_model.fit(X_train, y_train)

# Predictions on the held-out split and on the test set
_RandomForestClassifier_prediction = clf_model.predict(X_test)
val_RandomForestClassifier_prediction = clf_model.predict(test)
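The results in this article are reported as F1 scores. For readers who want to see how that metric is derived from predictions like the ones above, here is a hand-rolled sketch in plain Python. The function names, the toy label values `"fake"` and `"true"`, and the use of macro averaging are assumptions; in practice `sklearn.metrics.f1_score` provides the same computation.

```python
def f1_for_label(y_true, y_pred, label):
    """F1 score for one class, treating `label` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_for_label(y_true, y_pred, lab) for lab in labels) / len(labels)


# Toy labels: one "fake" tweet is misclassified as "true".
y_true = ["fake", "fake", "true", "true"]
y_pred = ["fake", "true", "true", "true"]
print(round(f1_for_label(y_true, y_pred, "fake"), 3))
print(round(f1_for_label(y_true, y_pred, "true"), 3))
print(round(macro_f1(y_true, y_pred), 3))
```

Because F1 combines precision and recall, a single misclassified tweet lowers the score of both classes here, not just the one it belongs to.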

Results comparison

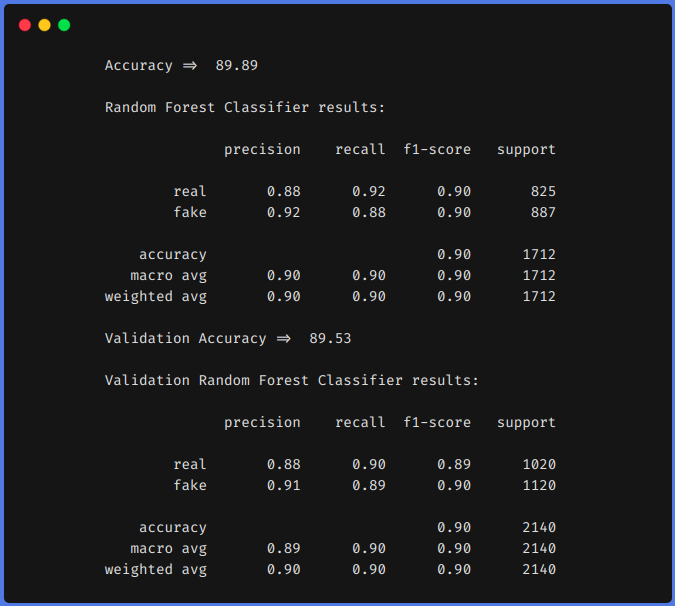

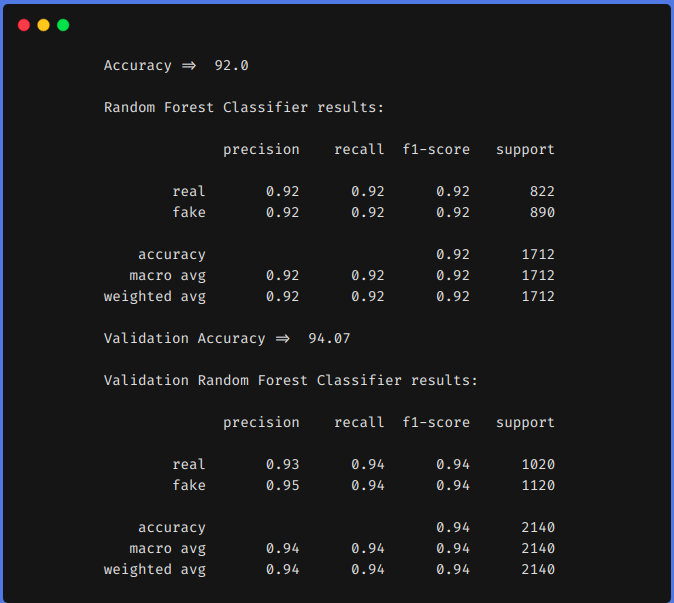

For comparison, we first train our model on the dataset above using feature engineering techniques and then without them. In both approaches, we preprocess the dataset using the same method described above and use TF-IDF to encode the text data. You can use any encoding technique you want, such as word2vec, GloVe, etc.

1. Without using feature engineering techniques

2. Using feature engineering techniques

From the results above, we can see that feature engineering techniques helped us increase our F1 score from 0.90 to 0.92 on the train set and from 0.90 to 0.94 on the test set.

Conclusion

The above results show that if we perform feature engineering, we can achieve better results using classical machine learning algorithms. Transformer-based models are time-consuming and resource-intensive. If we do feature engineering the right way, that is, after analyzing our dataset, we can get comparable results.

We can also engineer other features, such as the number of emojis used, the types of emojis used, the frequencies of unique words, etc. We can define our features by analyzing the dataset. I hope you have learned something from this blog; please share it with others. Check out my personal machine learning blog (https://code-ml.com/) for new and exciting content in different domains of ML and AI.
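As one example of such an extra feature, the sketch below counts emojis with a regular expression over a few Unicode blocks. The chosen code-point ranges are an assumption and do not cover every emoji; the names `EMOJI_PATTERN` and `count_emojis` are hypothetical, and a dedicated emoji library would be more thorough.

```python
import re

# Assumption: a handful of common emoji blocks, not an exhaustive list.
EMOJI_PATTERN = re.compile(
    "["
    "\U0001F300-\U0001F5FF"  # symbols and pictographs
    "\U0001F600-\U0001F64F"  # emoticons
    "\U0001F680-\U0001F6FF"  # transport and map symbols
    "\U0001F900-\U0001F9FF"  # supplemental symbols and pictographs
    "\u2600-\u26FF"          # miscellaneous symbols
    "\u2700-\u27BF"          # dingbats
    "]"
)


def count_emojis(text):
    """Count characters that fall inside the emoji ranges listed above."""
    return len(EMOJI_PATTERN.findall(text))


# Face with medical mask (U+1F637) and folded hands (U+1F64F).
sample = "Stay safe \U0001F637\U0001F64F #covid"
print(count_emojis(sample))
```

Like the other counts, this could be added as one more column with `df["tweet"].apply(count_emojis)` before the tweets are preprocessed.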

About the Author

Mohammad Ahmad (B.Tech)

- LinkedIn - https://www.linkedin.com/in/mohammad-ahmad-ai/

- Personal Blog - https://code-ml.com/

- GitHub - https://github.com/ahmadkhan242

- Twitter - https://twitter.com/ahmadkhan_242

The media shown in this article is not the property of DataPeaker and is used at the author's discretion.