Overview

- GANs are generative models: they create new data that resembles what you feed them.

- We cover four notable GAN libraries.

Introduction

Nowadays, GANs are considered one of the most interesting research areas in computer vision. Their image-synthesis results are remarkable, and as a data scientist, you would be missing out by not exploring them. Even eminent researchers like Yann LeCun have described GANs as “the most interesting idea in the last 10 years in machine learning”.

When I first worked with GANs, I developed one from scratch in PyTorch, and it was a tedious task. It becomes even more difficult when the results are unsatisfactory and you want to try another architecture, because you then have to rewrite the code. Fortunately, researchers at various tech giants have developed GAN libraries to help you explore and build GAN-based applications.

In this article, we will look at four interesting GAN libraries you should definitely know about. To get started, I will also give you a quick overview of GANs.


Table of Contents

- A quick overview of GANs

- GAN Libraries

- TF-GAN

- Torch-GAN

- Mimicry

- IBM GAN Toolkit

A quick overview of GANs

Ian Goodfellow introduced GANs in 2014, and they remain a state-of-the-art deep learning method. A GAN belongs to the family of generative models and is trained adversarially.

Generative modeling is a powerful approach in which the network learns the distribution of the input data and tries to generate new data points from a similar distribution. Examples of generative models include autoencoders, Boltzmann machines, generative adversarial networks, Bayesian networks, and so on.
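To make "learn the distribution, then sample from it" concrete, here is a deliberately tiny NumPy sketch. It is not a GAN: it assumes the data is one-dimensional and Gaussian, "learns" by estimating the mean and standard deviation, and "generates" by sampling from the fitted Gaussian. A GAN learns to produce samples without ever writing down an explicit density like this, but the goal is the same. The data parameters (mean 5, standard deviation 2) are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical training data: 1-D samples from a distribution we pretend not to know.
training_data = rng.normal(loc=5.0, scale=2.0, size=10_000)

# "Training": estimate the parameters of an assumed Gaussian model from the data.
mu_hat = training_data.mean()
sigma_hat = training_data.std()

# "Generation": draw brand-new data points from the learned distribution.
new_samples = rng.normal(loc=mu_hat, scale=sigma_hat, size=5)

print(f"learned mean={mu_hat:.2f}, std={sigma_hat:.2f}")
print("generated:", new_samples)
```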

GAN architecture

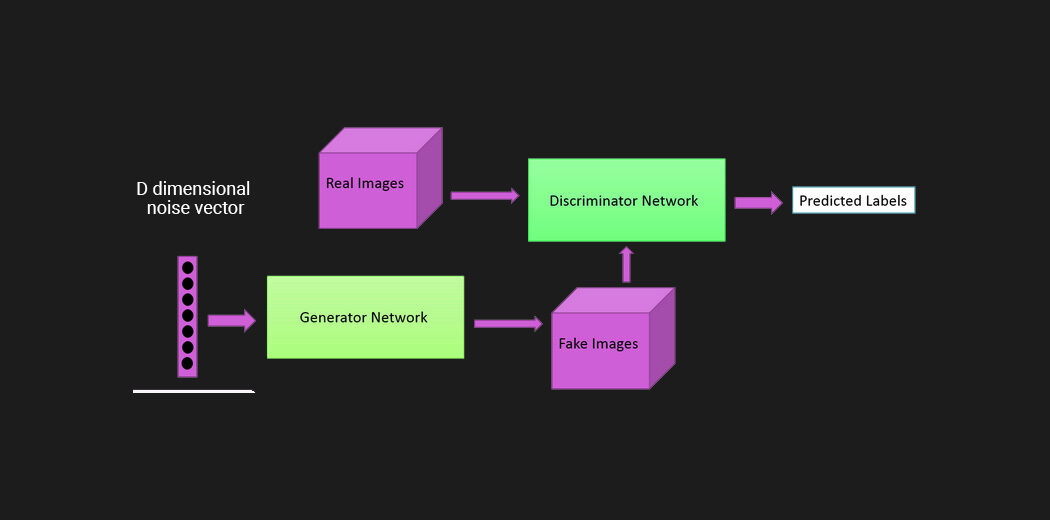

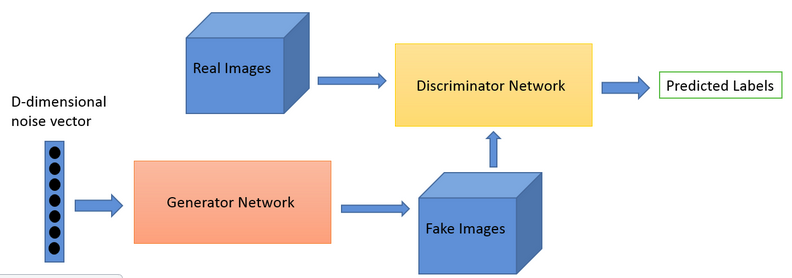

GANs consist of two neural networks, a generator G and a discriminator D. These two models play a zero-sum game during training.

The generator network learns the distribution of the training data. Given random noise as input, it generates synthetic data that tries to mimic the training samples.

Next comes the discriminator model (D). It assigns a label, real or fake, to the data it receives based on the data distribution; in other words, it decides whether an image comes from the training images or was artificially generated by G.

When D successfully recognizes generated images as fake, the generator's loss increases. Likewise, when G manages to produce good-quality images similar to the real ones and fools D, the discriminator's loss increases. The generator learns from this process and produces better, more realistic images in the next iteration.
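The loss behaviour described above can be checked with a few numbers. The plain-Python sketch below uses binary cross-entropy on single discriminator outputs, where D(x) is the probability D assigns to a sample being real. For the generator it uses the common "non-saturating" variant suggested by Goodfellow et al. (2014), -log D(G(z)). The probabilities are made-up values chosen for illustration, not outputs of a trained model.

```python
import math


def bce(prob_real: float, label: int) -> float:
    """Binary cross-entropy for one prediction; label 1 = real, 0 = fake."""
    return -math.log(prob_real) if label == 1 else -math.log(1.0 - prob_real)


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """D wants D(x) -> 1 on a real sample and D(G(z)) -> 0 on a generated one."""
    return bce(d_real, 1) + bce(d_fake, 0)


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: G wants D(G(z)) -> 1."""
    return bce(d_fake, 1)


# D confidently flags the generated sample as fake (D(G(z)) = 0.1):
print(round(generator_loss(0.1), 3))           # 2.303: the generator is penalised heavily
print(round(discriminator_loss(0.9, 0.1), 3))  # 0.211: the discriminator is doing well

# G fools D (D(G(z)) = 0.9):
print(round(generator_loss(0.9), 3))           # 0.105
print(round(discriminator_loss(0.9, 0.9), 3))  # 2.408: now the discriminator's loss is high
```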

Basically, training can be viewed as a two-player minimax game in which the performance of both networks improves over time. Both networks go through many training iterations, and after many updates to their parameters (weights and biases), they ideally reach a steady state known as the Nash equilibrium.
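Formally, Goodfellow et al. (2014) write this game as a single value function $V(D, G)$ that D tries to maximize and G tries to minimize. Here $p_{\text{data}}$ is the training-data distribution, $p_z$ is the noise distribution fed to the generator, and $D(\cdot)$ is the probability that its input is real:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The first term rewards D for recognizing real samples; the second rewards D for rejecting generated ones, and it is the only term G can influence.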

What is the Nash equilibrium?

The Nash equilibrium is a stable state of a system involving the interaction of different participants, in which no participant can gain by unilaterally changing strategy while the strategies of the others remain unchanged.
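For GANs, this equilibrium can be stated precisely (Goodfellow et al., 2014). Let $p_g$ be the distribution of the generator's outputs $G(z)$. For a fixed generator, the best possible discriminator is

$$
D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
$$

At the global optimum of the game, $p_g = p_{\text{data}}$, so $D^*_G(x) = \tfrac{1}{2}$ everywhere: the discriminator can do no better than a coin flip, and the original GAN objective $\mathbb{E}[\log D(x)] + \mathbb{E}[\log(1 - D(G(z)))]$ evaluates to $\log\tfrac{1}{2} + \log\tfrac{1}{2} = -\log 4$.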

Ultimately, through this zero-sum game, we can generate artificial images that look very much like the real training data.

Example

Let's see how useful GANs can be.

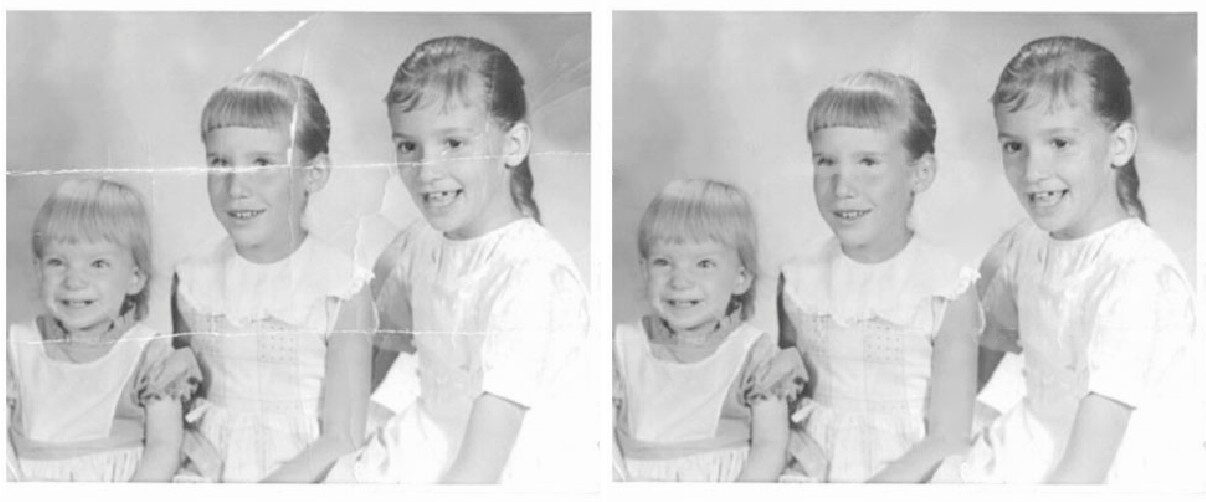

For instance, suppose that during lockdown you had a chance to look through your old photo album. In such a stressful time, reliving your memories is a welcome comfort. But because the album sat untouched in your closet for years, some photographs were damaged, which made you sad. This is precisely when you decide to use a GAN.

The image below shows a damaged photograph restored with a technique called image inpainting. This particular example comes from Bertalmío et al. (2000), which predates GANs, but inpainting is one of the tasks where GAN-based models are now widely used.

Original image vs. restored image

Image source: Bertalmío et al., 2000.

Image inpainting is the art of restoring damaged images by reconstructing the missing parts from the available background information. This technique is also used to remove unwanted objects from images.
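To show what "reconstructing the missing parts from the surrounding information" means mechanically, here is a minimal NumPy sketch of a much simpler, non-GAN technique: diffusion-style inpainting, which repeatedly replaces each missing pixel with the average of its four neighbours until the hole blends into its surroundings. It assumes a 2-D grayscale array and a boolean mask marking the damaged pixels; the function name `diffuse_inpaint` is our own. Unlike a GAN, it can only smooth values inward and cannot invent plausible texture or structure.

```python
import numpy as np


def diffuse_inpaint(image: np.ndarray, mask: np.ndarray, iterations: int = 500) -> np.ndarray:
    """Fill the pixels where `mask` is True using only the known pixels around them.

    image: 2-D grayscale array. mask: boolean array of the same shape (True = missing).
    """
    result = image.astype(float)  # astype returns a copy, so the input is not modified
    result[mask] = result[~mask].mean()  # crude initial guess for the hole
    for _ in range(iterations):
        padded = np.pad(result, 1, mode="edge")
        # Average of the up, down, left and right neighbours of every pixel.
        neighbour_avg = (
            padded[:-2, 1:-1] + padded[2:, 1:-1] + padded[1:-1, :-2] + padded[1:-1, 2:]
        ) / 4.0
        result[mask] = neighbour_avg[mask]  # only the missing pixels are updated
    return result


# Usage: a smooth horizontal gradient with a "scratch" punched into it.
clean = np.tile(np.arange(10, dtype=float), (10, 1))
damaged_mask = np.zeros_like(clean, dtype=bool)
damaged_mask[4:7, 4:7] = True
damaged = clean.copy()
damaged[damaged_mask] = 0.0

restored = diffuse_inpaint(damaged, damaged_mask)
print(np.abs(restored - clean).max())  # close to 0: the gradient is recovered
```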

This was just a quick review of the GAN. If you want to know more about it, I suggest you read the following articles.

Now we will see some interesting GAN libraries.

TF-GAN

TensorFlow GAN, also known as TF-GAN, is a lightweight, open-source Python library. It was developed by Google AI researchers for easy and effective GAN implementation.

TF-GAN provides a well-developed infrastructure to train and evaluate generative adversarial networks, along with well-tested loss functions and evaluation metrics. The library consists of several modules for implementing the model and provides simple function calls that you can apply to your own data without writing the code from scratch.

It is available as a PyPI package, so it is as easy to install and use as NumPy or pandas:

```shell
# Install the library
pip install tensorflow-gan
```

```python
# Import the library
import tensorflow_gan as tfgan
```

The following code trains a GAN to generate images from the MNIST dataset using TF-GAN:

```python
import tensorflow.compat.v1 as tf
import tensorflow_gan as tfgan

tf.disable_v2_behavior()  # this example uses the TF1-style graph API

# `mnist_data_provider`, `mnist`, `FLAGS`, `gen_lr` and `dis_lr` are assumed to be
# defined elsewhere in your script (data loading, networks, flags, learning rates).

# Set up the input.
images = mnist_data_provider.provide_data(FLAGS.batch_size)
noise = tf.random_normal([FLAGS.batch_size, FLAGS.noise_dims])

# Build the generator and discriminator.
gan_model = tfgan.gan_model(
    generator_fn=mnist.unconditional_generator,  # you define
    discriminator_fn=mnist.unconditional_discriminator,  # you define
    real_data=images,
    generator_inputs=noise)

# Build the GAN loss.
gan_loss = tfgan.gan_loss(
    gan_model,
    generator_loss_fn=tfgan.losses.wasserstein_generator_loss,
    discriminator_loss_fn=tfgan.losses.wasserstein_discriminator_loss)

# Create the train ops, which calculate gradients and apply updates to weights.
train_ops = tfgan.gan_train_ops(
    gan_model,
    gan_loss,
    generator_optimizer=tf.train.AdamOptimizer(gen_lr, 0.5),
    discriminator_optimizer=tf.train.AdamOptimizer(dis_lr, 0.5))

# Run the train ops in the alternating training scheme.
tfgan.gan_train(
    train_ops,
    hooks=[tf.train.StopAtStepHook(num_steps=FLAGS.max_number_of_steps)],
    logdir=FLAGS.train_log_dir)
```

What I like about the library

- One important thing about the library is that TF-GAN supports TensorFlow 2.x. It can also be used together with other frameworks.

- Training a generative adversarial model is a heavy processing task that used to take weeks. TF-GAN supports Cloud TPUs, which can bring training down to a few hours. To learn more about using TF-GAN on TPUs, see the library authors' tutorial.

- If you need to compare the results of multiple papers, TF-GAN provides standard evaluation metrics, such as Inception Score and Fréchet Inception Distance, that make it easy to compare different research papers consistently.
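One of the most widely used standard metrics for GANs is the Fréchet Inception Distance (FID). It summarizes real and generated images by the mean and covariance of their features from a pretrained Inception network, then measures the Fréchet distance between the two Gaussians: $\lVert\mu_r - \mu_g\rVert^2 + \operatorname{Tr}\big(\Sigma_r + \Sigma_g - 2(\Sigma_r\Sigma_g)^{1/2}\big)$. The NumPy sketch below computes only that final distance from feature statistics you already have; it is an illustration, not TF-GAN's implementation, and the random "features" in the usage lines are stand-ins for real Inception activations.

```python
import numpy as np


def _sqrtm_psd(mat: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    eigvals = np.clip(eigvals, 0.0, None)  # drop tiny negative values caused by round-off
    return (eigvecs * np.sqrt(eigvals)) @ eigvecs.T


def frechet_distance(mu1, sigma1, mu2, sigma2) -> float:
    """Fréchet distance between the Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    sqrt_sigma1 = _sqrtm_psd(sigma1)
    # Tr((sigma1 sigma2)^(1/2)) equals Tr((sigma1^(1/2) sigma2 sigma1^(1/2))^(1/2)),
    # and the latter matrix is symmetric PSD, so the eigh-based square root applies.
    cross = _sqrtm_psd(sqrt_sigma1 @ sigma2 @ sqrt_sigma1)
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * np.trace(cross))


def feature_stats(features: np.ndarray):
    """Mean vector and covariance matrix of a (num_images, num_features) array."""
    return features.mean(axis=0), np.cov(features, rowvar=False)


# Usage with stand-in feature vectors (rows = images, columns = feature dimensions).
rng = np.random.default_rng(0)
real_feats = rng.normal(0.0, 1.0, size=(2000, 8))
fake_feats = rng.normal(0.5, 1.0, size=(2000, 8))
print(frechet_distance(*feature_stats(real_feats), *feature_stats(fake_feats)))  # lower is better
```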

The following are some projects implemented with TF-GAN:

To learn more about this interesting GAN library used by Google researchers, read the official documentation.

Torch-GAN

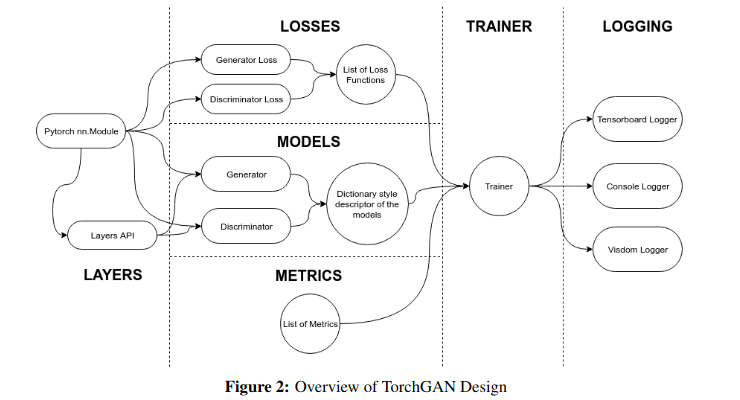

Torch-GAN is a PyTorch-based framework for writing short, easy-to-understand code to develop GANs. The package includes several generative adversarial networks together with the utilities needed to implement them.

Generally, GANs share a standard design with multiple components: the generator model, the discriminator model, the loss functions and the evaluation metrics. TorchGAN mirrors this design through a simple API and allows customizing components when required.

This GAN library facilitates interaction between GAN components through a highly versatile trainer that automatically adapts to user-defined models and losses.

Installing the library with pip is simple. Just run the command below and voilà:

```shell
pip3 install torchgan
```

Implementation of Torch-GAN models

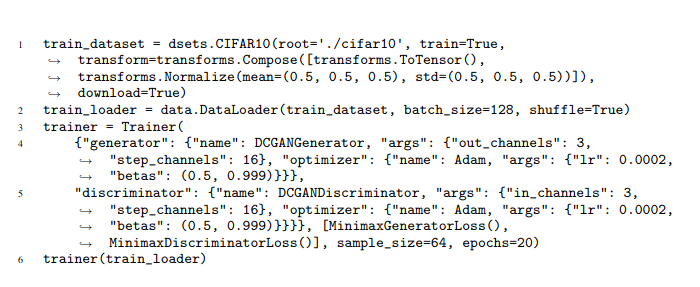

At the core of the design is a trainer module responsible for flexibility and ease of use. The user must provide the required specifications: the architectures of the generator and discriminator models along with their associated optimizers, as well as the loss functions and evaluation metrics.

The library offers the freedom to choose these specifications from the wide range available or to supply your own custom variants. In the following image, we can see the implementation of DC-GAN in only 10 lines of code. Isn't that impressive?

What do I like about this GAN library?


- The wide range of GAN architectures it supports. Name an architecture and you will likely find a TorchGAN implementation of it, for instance Vanilla GAN, DCGAN, CycleGAN, Conditional GAN, Generative Multi-Adversarial Network and many more.

- Another important feature of the framework is its extensibility and flexibility. TorchGAN is an easy-to-understand package, and we can use it efficiently with built-in or user-defined functionality.

- It also provides performance visualization through a Logger object, supporting console logging as well as viewing performance with TensorBoard and Visdom.

If you want to go deeper, do not forget to read the official TorchGAN documentation.

Mimicry

With increasing research in the field, we can see many different GAN implementations. It is difficult to compare implementations developed with different frameworks, trained under different conditions and evaluated with different metrics, yet this comparison is an unavoidable task for researchers. This was the main motivation behind the development of Mimicry.

Mimicry is a lightweight PyTorch library for GAN reproducibility. It provides the common functionality needed to train and evaluate a GAN model, which allows researchers to focus on implementing the model rather than writing the same boilerplate code over and over again.

This GAN library provides standard implementations of various GAN architectures, such as DCGAN, Wasserstein GAN with gradient penalty (WGAN-GP) and Self-supervised GAN (SSGAN), all with the same model sizes and trained under similar conditions.

Like the other two libraries, we can easily install Mimicry using pip and it is ready to use.

```shell
pip install torch-mimicry
```

Here is a quick implementation of SNGAN using Mimicry:

```python
import torch
import torch.optim as optim
import torch_mimicry as mmc
from torch_mimicry.nets import sngan

# Data handling objects
device = torch.device('cuda:0' if torch.cuda.is_available() else "cpu")
dataset = mmc.datasets.load_dataset(root='./datasets', name='cifar10')
dataloader = torch.utils.data.DataLoader(
    dataset, batch_size=64, shuffle=True, num_workers=4)

# Define models and optimizers
netG = sngan.SNGANGenerator32().to(device)
netD = sngan.SNGANDiscriminator32().to(device)
optD = optim.Adam(netD.parameters(), 2e-4, betas=(0.0, 0.9))
optG = optim.Adam(netG.parameters(), 2e-4, betas=(0.0, 0.9))

# Start training
trainer = mmc.training.Trainer(
    netD=netD,
    netG=netG,
    optD=optD,
    optG=optG,
    n_dis=5,
    num_steps=100000,
    lr_decay='linear',
    dataloader=dataloader,
    log_dir='./log/example',
    device=device)
trainer.train()
```
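Two arguments in that snippet shape the training schedule: `n_dis=5` is the number of discriminator updates per generator update, and `lr_decay='linear'` lowers the learning rate linearly as training progresses. The plain-Python sketch below shows what such a schedule conventionally looks like. It assumes the rate decays from its initial value to zero over `num_steps` generator steps; Mimicry's own implementation may differ in details (for example, when the decay starts), and the update lines are counters standing in for real optimizer calls.

```python
def simulate_schedule(num_steps: int, n_dis: int, base_lr: float):
    """Count optimizer updates and record the learning rate for each generator step.

    Assumes: every step runs n_dis discriminator updates followed by one generator
    update, and the learning rate decays linearly from base_lr towards 0.
    """
    d_updates = g_updates = 0
    lrs = []
    for step in range(num_steps):
        lr = base_lr * (1.0 - step / num_steps)  # linear decay
        for _ in range(n_dis):
            d_updates += 1  # stand-in for one discriminator optimizer step at rate `lr`
        g_updates += 1      # stand-in for one generator optimizer step at rate `lr`
        lrs.append(lr)
    return d_updates, g_updates, lrs


d_updates, g_updates, lrs = simulate_schedule(num_steps=10, n_dis=5, base_lr=2e-4)
print(d_updates, g_updates)  # 50 10
print(lrs[0], lrs[5])        # 0.0002 0.0001
```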

Another important feature of Mimicry is its TensorBoard support for performance visualization. You can plot loss and probability curves to monitor training, and display randomly generated images to check their variety.

Mimicry is an interesting development intended to help researchers. I personally suggest reading the Mimicry paper.

IBM GAN Toolkit

Up to now, we have seen some very efficient, cutting-edge GAN libraries. There are many more, such as Keras-GAN, PyTorch-GAN and PyGAN. Looking closely, these libraries have something in common: they are code-intensive. To use any of them, you must be well versed in:

- GAN concepts and implementation

- Python

- The particular framework

It is hard for a software programmer to master all of this. To solve the problem, there is an easy-to-use GAN tool: the IBM GAN Toolkit.

The GAN Toolkit provides a highly flexible, no-code way to implement GAN models, with a high level of abstraction. The user only needs to give the model details in a config file or as command-line arguments, and the framework takes care of everything else. I personally found it very interesting.

The following steps will help you with the installation:

- First, clone the repository:

```shell
$ git clone https://github.com/IBM/gan-toolkit
$ cd gan-toolkit
```

- Then install all the requirements:

```shell
$ pip install -r requirements.txt
```

The toolkit is now ready to use. Finally, to train a network, we have to give it a configuration file in JSON format, such as the following:

```json
{
  "generator": {
    "choice": "gan"
  },
  "discriminator": {
    "choice": "gan"
  },
  "data_path": "datasets/dataset1.p",
  "metric_evaluate": "MMD"
}
```
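If you prefer to generate this file from Python, for instance to try several variants in a loop, the standard-library sketch below writes exactly the configuration shown above to `my_gan.json`. Only the keys and values from that example are used; other accepted values are listed in the toolkit's documentation, which this sketch does not attempt to reproduce.

```python
import json
from pathlib import Path

# Same settings as the JSON example: vanilla GAN generator and discriminator,
# the dataset path, and MMD as the evaluation metric.
config = {
    "generator": {"choice": "gan"},
    "discriminator": {"choice": "gan"},
    "data_path": "datasets/dataset1.p",
    "metric_evaluate": "MMD",
}

config_path = Path("my_gan.json")
config_path.write_text(json.dumps(config, indent=2))
print(f"Wrote {config_path}; train with: python main.py --config {config_path}")
```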

Then start training:

```shell
$ python main.py --config my_gan.json
```
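The example configuration evaluates the generator with MMD (maximum mean discrepancy), which compares two sets of samples through a kernel: it is near zero when real and generated samples look alike and grows as they diverge. The NumPy sketch below is a generic biased MMD² estimate with a Gaussian (RBF) kernel; the kernel choice and the `bandwidth=1.0` default are assumptions, and the toolkit's own implementation may differ.

```python
import numpy as np


def rbf_kernel(a: np.ndarray, b: np.ndarray, bandwidth: float) -> np.ndarray:
    """Gaussian kernel matrix k(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 * bandwidth^2))."""
    sq_dists = (a**2).sum(axis=1)[:, None] + (b**2).sum(axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth**2))


def mmd_squared(x: np.ndarray, y: np.ndarray, bandwidth: float = 1.0) -> float:
    """Biased estimate of squared MMD between samples x (n, d) and y (m, d)."""
    k_xx = rbf_kernel(x, x, bandwidth).mean()
    k_yy = rbf_kernel(y, y, bandwidth).mean()
    k_xy = rbf_kernel(x, y, bandwidth).mean()
    return float(k_xx + k_yy - 2.0 * k_xy)


rng = np.random.default_rng(0)
real = rng.normal(0.0, 1.0, size=(500, 2))
similar = rng.normal(0.0, 1.0, size=(500, 2))    # same distribution as `real`
different = rng.normal(3.0, 1.0, size=(500, 2))  # shifted distribution
print(mmd_squared(real, similar))    # small
print(mmd_squared(real, different))  # much larger
```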

The toolkit implements multiple GAN architectures like vanilla GAN, DC-GAN, Conditional-GAN and more.

Advantages of the GAN Toolkit

- It provides a code-free way to implement cutting-edge computer vision technology. A simple JSON file is all that is required to define a GAN architecture; there is no need to write training code, because the framework takes care of it.

- It supports multiple libraries: PyTorch, Keras and TensorFlow.

- In the GAN Toolkit, we have the freedom to easily mix and match the components of different models. For instance, you can use a DC-GAN generator, a C-GAN discriminator and the vanilla GAN training process.

Now just read the documentation and play with GANs your way.

Final notes

GANs are an active field of research, and new GAN variants appear almost every week. You can check the work that researchers have done here.

To conclude, in this article we discussed four important GAN libraries that can easily be used from Python. Every day we see tremendous developments and new applications of GANs. The following article explains some of these amazing applications:

What GAN libraries do you use? Do you think I should have included some other library? Let us know in the comments below!