Introduction

I love working with C++, even after discovering Python for machine learning. C++ was the first programming language I learned, and I am delighted to use it in the machine learning space.

I wrote about building machine learning models in my previous post, and the community loved the idea. I received an overwhelming response, and one question (asked by several people) stood out for me: Are there C++ libraries for machine learning?

It's a fair question. Languages like Python and R have a large number of packages and libraries for different machine learning tasks. So, does C++ offer anything like this?

Yes, it does! In this post, I will highlight two of these C++ libraries, and we will also see them in action (with code). If you are new to C++ for machine learning, I again recommend reading the first post.

Table of Contents

- Why should we use machine learning libraries?

- Machine Learning Libraries in C++

- SHARK Library

- MLPACK Library

Why should we use machine learning libraries?

This is a question many newcomers have. What is the relevance of libraries in machine learning? Let me try to explain in this section.

Say seasoned professionals and industry veterans have worked hard and found a solution to a roadblock. Would you rather use that, or spend hours trying to recreate the same thing from scratch? Usually there is little point in the latter, especially when you are working or learning against deadlines.

The best thing about our machine learning community is that many solutions already exist in the form of libraries and packages. Someone else, from experts to enthusiasts, has already done the hard work and packaged the answer neatly in a library.

These machine learning libraries are efficient, optimized, and thoroughly tested across many use cases. Relying on them speeds up our learning and makes writing code, whether in C++ or Python, much easier and more intuitive.

Machine Learning Libraries in C++

In this section, we will look at two popular machine learning libraries in C++:

- SHARK Library

- MLPACK Library

Let's look at each one individually and see the C++ code in action.

1) SHARK C++ Library

Shark is a fast, modular library with extensive support for supervised and unsupervised learning algorithms such as linear regression, neural networks, clustering, k-means, etc. It also includes linear algebra and numerical optimization functionality. These are key mathematical areas that are very important when performing machine learning tasks.

We will first see how to install Shark and set up an environment. Then, we will implement linear regression with Shark.

Install Shark and set up the environment (I will do this for Linux)

- Shark depends on Boost and CMake. Fortunately, all the dependencies can be installed with the following command:

sudo apt-get install cmake cmake-curses-gui libatlas-base-dev libboost-all-dev

- To install Shark, run the following commands one by one in your terminal:

- git clone https://github.com/Shark-ML/Shark.git (you can also download the zip file and extract it)

- cd Shark

- mkdir build

- cd build

- cmake ..

- make

If you haven't seen this before, don't worry. It's pretty straightforward, and there's a lot of information online if you get into trouble. For Windows and other operating systems, a quick Google search on how to install Shark will help. Here is the reference site: Shark Installation Guide.

Compile programs with Shark

Implementing Linear Regression with Shark

My first post in this series introduced linear regression. I will use the same idea in this post, but this time with the Shark C++ library.

Initialization stage

We will start by including the libraries and header files for linear regression:

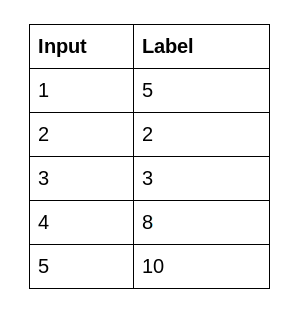

Next comes the dataset. I have created two CSV files: input.csv contains the x values and labels.csv contains the y values. Below is a snapshot of the data:

You can find both files here: Machine Learning with C++. First, we will create data containers to hold the values from the CSV files:

Then, we need to import them. Shark comes with a handy CSV import function: we specify the data container we want to initialize and the path to the CSV file:
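Shark's CSV import handles this step for us. As a rough illustration of what such an import does for a single-column file, here is a minimal standard C++ sketch that is not Shark's API: the helper name readColumn is hypothetical, and it assumes one numeric value per line with no header row.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper (not part of Shark): reads one numeric value per line
// from a single-column CSV stream. Blank lines are skipped; no header assumed.
std::vector<double> readColumn(std::istream& in) {
    assert(in && "input stream is not readable (e.g. file failed to open)");
    std::vector<double> values;
    std::string line;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();  // Windows line endings
        if (line.empty()) continue;                                 // skip blank lines
        // Keep only the text before the first comma, in case of extra columns.
        values.push_back(std::stod(line.substr(0, line.find(','))));
    }
    return values;
}

// Convenience overload for CSV text already held in memory.
std::vector<double> readColumn(const std::string& text) {
    std::istringstream in(text);
    return readColumn(in);
}
```

For example, `std::ifstream file("input.csv"); std::vector<double> x = readColumn(file);` would load the x values, assuming the file is laid out as described.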

Next, we need to instantiate a regression dataset. This is just a general-purpose object for regression, and in its constructor we pass both our inputs and our labels.

Then, we need to train the linear regression model. How do we do that? We instantiate a trainer and define a linear model:

Training stage

Then comes the key step where we actually train the model. The trainer has a member function called train, which fits the model and finds its parameters, which is exactly what we want.
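Shark's trainer does this fitting for us. For intuition about what it computes in our one-dimensional case, here is a minimal standard C++ sketch of ordinary least squares using the closed-form formulas. The names LineFit and fitLeastSquares are hypothetical, and this is not Shark's implementation, which handles the general multi-dimensional case.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Parameters of a one-dimensional linear model y = slope * x + intercept.
struct LineFit {
    double slope;
    double intercept;
};

// Ordinary least squares for a single input feature (closed form).
// Hypothetical helper for illustration; not Shark's trainer.
LineFit fitLeastSquares(const std::vector<double>& x, const std::vector<double>& y) {
    assert(x.size() == y.size() && x.size() >= 2);
    const double n = static_cast<double>(x.size());
    double meanX = 0.0, meanY = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        meanX += x[i];
        meanY += y[i];
    }
    meanX /= n;
    meanY /= n;
    // slope = covariance(x, y) / variance(x), both up to the same 1/n factor.
    double sxy = 0.0, sxx = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        sxy += (x[i] - meanX) * (y[i] - meanY);
        sxx += (x[i] - meanX) * (x[i] - meanX);
    }
    assert(sxx > 0.0);  // if all x values are identical, the slope is undefined
    const double slope = sxy / sxx;
    // The fitted line passes through the point (meanX, meanY).
    return {slope, meanY - slope * meanX};
}
```

For example, fitting x = {0, 1, 2, 3} against y = {1, 3, 5, 7} gives a slope of 2 and an intercept of 1.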

Prediction stage

Finally, let's output the model parameters:

Linear models have a member function called offset(), which returns the intercept of the line of best fit. The multiplier is returned by matrix() as a matrix rather than a single number, because the model generalizes beyond a single input and output.
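To see why a matrix makes sense here, the following standard C++ sketch evaluates a general linear model y = A x + b, where A has one row per output and one column per input. The names Matrix, Vector, and predictLinear are hypothetical and not Shark's API; in our one-dimensional example, A is 1×1 and b has a single entry.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major matrix A[r][c]. Hypothetical types for illustration only.
using Matrix = std::vector<std::vector<double>>;
using Vector = std::vector<double>;

// Computes y = A * x + b, with A of shape (outputs x inputs)
// and b holding one offset (intercept) per output.
Vector predictLinear(const Matrix& A, const Vector& b, const Vector& x) {
    assert(A.size() == b.size());
    Vector y(b);  // start from the offsets
    for (std::size_t r = 0; r < A.size(); ++r) {
        assert(A[r].size() == x.size());
        for (std::size_t c = 0; c < x.size(); ++c) {
            y[r] += A[r][c] * x[c];
        }
    }
    return y;
}
```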

We compute the line of best fit by least squares, in other words, by minimizing the squared loss.
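For a single input feature, writing a for the multiplier and b for the intercept, the least-squares problem and its standard closed-form solution are:

$$
\min_{a,\,b}\ \sum_{i=1}^{N}\bigl(y_i - (a\,x_i + b)\bigr)^2
$$

$$
a = \frac{\sum_{i=1}^{N}(x_i-\bar{x})(y_i-\bar{y})}{\sum_{i=1}^{N}(x_i-\bar{x})^2},
\qquad
b = \bar{y} - a\,\bar{x}
$$

where $\bar{x}$ and $\bar{y}$ are the means of the inputs and the labels.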

Next, we want to know how well the model fits. Fortunately, the Shark library makes it easy to get an indication of this:

First, we initialize a squared loss object and instantiate a data container for the predictions. Then, the predictions are computed from the inputs to the system, and finally we compute the loss by passing in the labels and the predicted values.
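As a rough illustration of the quantity being evaluated, here is a minimal standard C++ sketch of a squared loss averaged over the dataset (mean squared error). The helper name meanSquaredError is hypothetical, and we are not certain it matches Shark's exact normalization, so the loss Shark prints should be read according to Shark's own definition.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Mean squared error: (1/N) * sum_i (label_i - prediction_i)^2.
// Hypothetical helper; Shark's squared loss may normalize differently.
double meanSquaredError(const std::vector<double>& labels,
                        const std::vector<double>& predictions) {
    assert(labels.size() == predictions.size() && !labels.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < labels.size(); ++i) {
        const double diff = labels[i] - predictions[i];
        sum += diff * diff;
    }
    return sum / static_cast<double>(labels.size());
}
```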

Finally, we need to compile. In the terminal, type the following command (make sure you are in the right directory):

g++ -o lr linear_regression.cpp -std=c++11 -lboost_serialization -lshark -lcblas

Once compiled, this creates an executable called lr. Now just run the program. The output we get is:

b: [1](-0.749091)

A: [1,1]((2.00731))

Loss: 7.83109

The value of b is a bit far from 0, but that's due to the noise in the labels. The multiplier is close to 2, which matches the data quite well. And this is how you can use the Shark library in C++ to build a linear regression model!

2) MLPACK C++ Library

mlpack is a fast, flexible machine learning library written in C++. Its goal is to provide fast, extensible implementations of state-of-the-art machine learning algorithms. mlpack provides these algorithms as simple command-line programs, Python and Julia bindings, and C++ classes that can then be integrated into larger-scale machine learning solutions.

We will first see how to install mlpack and set up the environment. Then we will implement the k-means algorithm using mlpack.

Install mlpack and set up the environment (I will do this for Linux)

mlpack depends on the following libraries that need to be installed on the system and have headers present:

- Armadillo >= 8.400.0 (with LAPACK support)

- Boost (math_c99, program_options, serialization, unit_test_framework, heap, spirit) >= 1.49

- ensmallen >= 2.10.0

On Ubuntu and Debian, you can get these dependencies through apt:

sudo apt-get install libboost-math-dev libboost-program-options-dev libboost-test-dev libboost-serialization-dev binutils-dev python-pandas python-numpy cython python-setuptools

Now that all the dependencies are installed on your system, you can directly run the following commands to compile and install mlpack:

- wget

- tar -xvzpf mlpack-3.2.2.tar.gz

- mkdir mlpack-3.2.2/build && cd mlpack-3.2.2/build

- cmake ../

- make -j4 # The -j is the number of cores you want to use for a build

- sudo make install

On many Linux systems, mlpack will be installed to /usr/local/lib by default, and you might need to set the LD_LIBRARY_PATH environment variable:

export LD_LIBRARY_PATH=/usr/local/lib

The above instructions are the simplest way to download, compile, and install mlpack. If your Linux distribution provides binary packages, follow this site to install mlpack with a one-line command for your distribution: MLPACK Installation Instructions. The above method works for all distributions.

Compile programs with mlpack

- Include the relevant header files in your program (assuming a k-means implementation):

- #include

- #include

- #include

- To compile, we need the following flags and libraries:

- -std=c++11 -larmadillo -lmlpack -lboost_serialization

Implementing K-Means with mlpack

K-means is a centroid-based, or distance-based, algorithm in which we compute distances to assign each point to a cluster. In k-means, each cluster is associated with a centroid.

The main objective of the k-means algorithm is to minimize the sum of squared distances between the points and their respective cluster centroids.
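Written out, with k clusters $C_1, \dots, C_k$ and centroids $\mu_1, \dots, \mu_k$, the standard k-means objective sums squared Euclidean distances:

$$
J = \sum_{j=1}^{k} \sum_{x_i \in C_j} \lVert x_i - \mu_j \rVert^2
$$

Neither step of the iterative procedure (reassigning each point to its closest centroid, then recomputing each centroid as the mean of its points) can increase $J$.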

K-means is an iterative procedure that segments the data into a chosen number of clusters. First, we pick some initial centroids; these can be completely random. Then, for each data point, we find the closest centroid and assign the point to it, so each centroid represents a cluster. Once all the data points are assigned, we recompute each centroid as the mean of the points assigned to it, and we repeat the assignment and update steps until the assignments stop changing or we reach a maximum number of iterations.
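To make these steps concrete, here is a minimal standard C++ sketch of the classic iterative procedure (Lloyd's algorithm) on points stored as std::vector<double>. It is not mlpack's implementation, which is considerably more elaborate. The names kMeans and squaredDistance are hypothetical, the caller supplies the initial centroids, and an empty cluster simply keeps its previous centroid.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Point = std::vector<double>;

// Squared Euclidean distance between two points of equal dimension.
double squaredDistance(const Point& a, const Point& b) {
    double d = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double diff = a[i] - b[i];
        d += diff * diff;
    }
    return d;
}

// Plain Lloyd's k-means (illustrative only). `centroids` holds the initial
// centroids and is updated in place; returns each point's cluster index.
std::vector<std::size_t> kMeans(const std::vector<Point>& points,
                                std::vector<Point>& centroids,
                                std::size_t maxIterations) {
    assert(!centroids.empty());
    std::vector<std::size_t> assignment(points.size(), 0);
    for (std::size_t iter = 0; iter < maxIterations; ++iter) {
        // Assignment step: attach each point to its closest centroid.
        bool changed = false;
        for (std::size_t p = 0; p < points.size(); ++p) {
            std::size_t best = 0;
            double bestDist = std::numeric_limits<double>::max();
            for (std::size_t c = 0; c < centroids.size(); ++c) {
                const double d = squaredDistance(points[p], centroids[c]);
                if (d < bestDist) { bestDist = d; best = c; }
            }
            if (iter == 0 || assignment[p] != best) changed = true;
            assignment[p] = best;
        }
        if (!changed) break;  // assignments are stable: converged
        // Update step: move each centroid to the mean of its assigned points.
        const std::size_t dim = centroids[0].size();
        std::vector<Point> sums(centroids.size(), Point(dim, 0.0));
        std::vector<std::size_t> counts(centroids.size(), 0);
        for (std::size_t p = 0; p < points.size(); ++p) {
            for (std::size_t k = 0; k < dim; ++k) sums[assignment[p]][k] += points[p][k];
            ++counts[assignment[p]];
        }
        for (std::size_t c = 0; c < centroids.size(); ++c) {
            if (counts[c] == 0) continue;  // empty cluster: keep the old centroid
            for (std::size_t k = 0; k < dim; ++k)
                centroids[c][k] = sums[c][k] / static_cast<double>(counts[c]);
        }
    }
    return assignment;
}
```

With points (0, 0), (0, 1), (10, 10), (10, 11) and initial centroids (0, 0) and (10, 10), this sketch converges after one update to centroids (0, 0.5) and (10, 10.5).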

For a detailed understanding of the k-means algorithm, read this tutorial: The Most Comprehensive Guide to K-Means Clustering You'll Ever Need.

Here, we will implement k-means using the mlpack library in C++.

Initialization stage

We will start by including the libraries and header files for k-means:

Then, we will create some basic variables for the number of clusters, the dimensionality of the data, the number of samples, and the maximum number of iterations. Why the last one? Because k-means is an iterative procedure.

Next, we create the data. This is where we first use the Armadillo library. We create a mat object, which is effectively a data container:

There you go! This mat object has a dimensionality of two and holds 50 samples (in Armadillo and mlpack, each column is one sample), with all of its values initialized to 0.

Next, we fill it with some random data and then run k-means. I'm going to create 25 points around the position (1, 1), that is, x = 1 and y = 1, and then add some random noise to each of these 25 data points. Let's see this in action:

Here, for each i from 0 up to (but not including) 25, the i-th column is set to an arma::vec at position (1, 1) plus a two-dimensional random noise vector multiplied by 0.25, and that becomes our data column. Then we do exactly the same for the next 25 points around x = 2, y = 3.
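The same data generation can be sketched with the standard library's random facilities instead of Armadillo. This sketch rests on assumptions: points are stored as std::array values rather than columns of an arma::mat, the noise is assumed to be Gaussian, and the function name makeTwoBlobs and its seed parameter are hypothetical choices made for reproducibility.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

using Point2 = std::array<double, 2>;

// Hypothetical helper: returns 2 * perCluster points, the first half around
// (1, 1) and the second half around (2, 3). Each coordinate gets Gaussian
// noise (mean 0, standard deviation 1) multiplied by noiseScale.
std::vector<Point2> makeTwoBlobs(std::size_t perCluster, double noiseScale,
                                 unsigned seed) {
    assert(noiseScale >= 0.0);
    std::mt19937 rng(seed);  // fixed seed makes the data reproducible
    std::normal_distribution<double> noise(0.0, 1.0);
    const Point2 centers[] = {{1.0, 1.0}, {2.0, 3.0}};
    std::vector<Point2> data;
    data.reserve(2 * perCluster);
    for (const Point2& center : centers) {
        for (std::size_t i = 0; i < perCluster; ++i) {
            // Same idea as: center + noiseScale * (2-D random noise vector)
            data.push_back({center[0] + noiseScale * noise(rng),
                            center[1] + noiseScale * noise(rng)});
        }
    }
    return data;
}
```

Calling makeTwoBlobs(25, 0.25, seed) mirrors the 50-sample dataset described above, with each std::array playing the role of one Armadillo column.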

And our data is ready! Time to move on to the training stage.

Training stage

First, let's instantiate an arma::Row<size_t> to hold the cluster assignments, and then an arma::mat to hold the centroids:

Now, we need to create an instance of the KMeans class:

We have instantiated the KMeans class and passed the maximum number of iterations to its constructor. Now we can go ahead and cluster.

We call the Cluster member function of the KMeans class, passing it the data, the number of clusters, and then the clusters object and the centroids object.

This Cluster function runs k-means on the data with the specified number of clusters and fills in the two objects: clusters and centroids.

Generating results

We can simply display the results using the centroids.print() function, which gives the location of the centroids:

Then, we need to compile. In the terminal, type the following command (again, make sure you are in the right directory):

g++ k_means.cpp -o kmeans_test -O3 -std=c++11 -larmadillo -lmlpack -lboost_serialization && ./kmeans_test

Once compiled, this creates an executable called kmeans_test, which the command above also runs. The output we get is:

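Centroids:

0.9497 1.9625

0.9689 3.0652

And that's it! Each column of this matrix is one centroid, roughly (0.95, 0.97) and (1.96, 3.07), close to the points (1, 1) and (2, 3) we generated the data around.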

Final notes

In this post, we saw two popular machine learning libraries that help us implement machine learning models in C++. I love the extensive support available in the official documentation, so do check it out. If you need help, leave a comment below and I'll be happy to get back to you.

In the next post, we will implement some interesting machine learning models like decision trees and random forests. So stay tuned!