Overview:

- Learn what Big Data is and how it is relevant in today's world

- Know the characteristics of Big Data

Introduction

The term “Big Data” is something of a misnomer, as it implies that pre-existing data is somehow small (it is not) or that the only challenge is its large size (size is one challenge, but there are often more).

In summary, the term Big Data applies to information that cannot be processed or analyzed using traditional processes or tools.

Today's organizations are facing more and more Big Data challenges. They have access to a wealth of information, but they don't know how to get value out of it because it sits in its rawest form or in a semi-structured or unstructured format. As a result, they don't even know whether it's worth keeping (or even whether they are able to keep it).

In this article, we analyze the concept of big data and what it is about.

Table of Contents

- What is Big Data?

- What are the characteristics of Big Data?

  - Data volume

  - The variety of data

  - Data speed

What is Big Data?

We are part of it, every day!

An IBM survey found that more than half of today's business leaders realize they don't have access to the information they need to do their jobs. Businesses face this problem in a climate where they have the ability to store anything and are generating data like never before in history; combined, this presents a real information challenge.

It's a conundrum: today's businesses have more access to potential information than ever, yet as this potential gold mine of data accumulates, the percentage of data the business can process is shrinking rapidly. In a nutshell, the Big Data era is in full force today because the world is changing.

Through instrumentation, we can sense more things and, if we can sense something, we tend to try to store it (or at least part of it). Through advances in communications technology, people and things are increasingly interconnected, and not just some of the time, but all the time. This rate of interconnectivity is a runaway train. Generally referred to as machine-to-machine (M2M), interconnectivity is responsible for double-digit year-over-year (YoY) data growth rates.

Finally, because small integrated circuits are now so inexpensive, we can add intelligence to almost everything. Even something as mundane as a rail car has hundreds of sensors. These sensors track things such as the conditions experienced by the car, the state of individual parts, and GPS-based data for shipment tracking and logistics. After train derailments that caused great loss of life, governments introduced regulations requiring this type of data to be stored and analyzed to prevent future disasters.

Rail cars are getting smarter too: processors have been added to interpret sensor data from parts prone to wear, such as bearings, to identify parts that need repair before they fail and cause further damage or, even worse, a disaster. But it's not just the rail cars that are intelligent: the rails themselves have sensors every few feet. What's more, the data storage requirements cover the entire ecosystem: cars, rails, railroad crossing sensors, weather patterns that cause rail movements, and so on.

Now add to this the tracking of a rail car's load and its arrival and departure times, and you can very quickly see that you have a Big Data problem on your hands. Even if every bit of this data were relational (and it is not), it would all be raw and in very different formats, which makes processing it in a traditional relational system impractical or impossible. Rail cars are just one example, but everywhere we look, we see domains with speed, volume, and variety combining to create the Big Data problem.

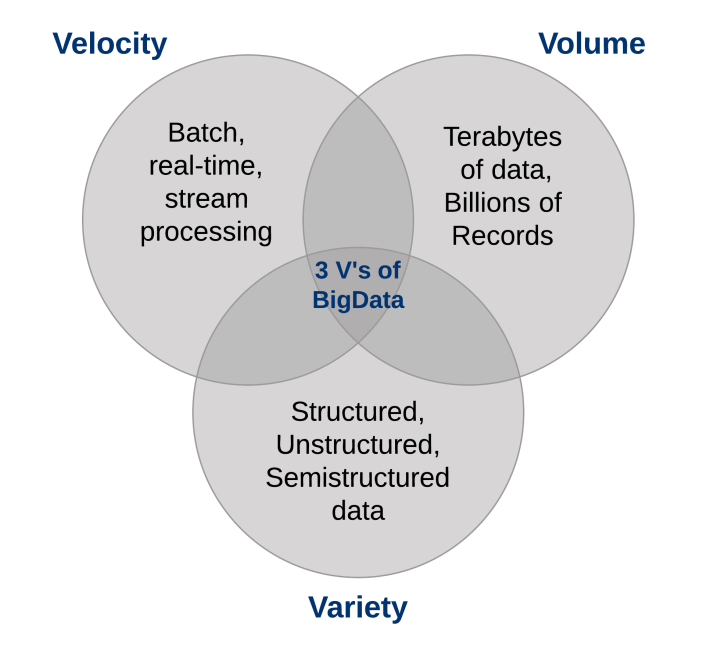

What are the characteristics of Big Data?

Three characteristics define Big Data: volume, variety and speed.

Together, these characteristics define “Big Data”. They have created the need for a new class of capabilities that augment the way things are done today, providing a better line of sight into, and control over, our existing knowledge domains, along with the ability to act on them.

1. Data volume

The sheer volume of data being stored today is skyrocketing. In the year 2000, 800,000 petabytes (PB) of data were stored in the world. This number is expected to reach 35 zettabytes (ZB) by 2020. Of course, a lot of the data being created today is not analyzed at all, and that is another issue that needs to be taken into account. Twitter alone generates more than 7 terabytes (TB) of data every day, Facebook 10 TB, and some companies generate terabytes of data every hour of every day of the year. It is no longer unusual for individual companies to have storage clusters that hold petabytes of data.
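To put these figures on a common scale, here is a minimal sketch of the unit arithmetic. It assumes decimal (SI) units, where 1 PB is 10^15 bytes and 1 ZB is 10^21 bytes; the helper name `convert` is just an illustrative choice.

```python
# Decimal (SI) storage units, expressed in bytes (an assumption; binary
# units such as PiB would give slightly different numbers).
TB = 10**12
PB = 10**15
ZB = 10**21


def convert(amount, from_unit, to_unit):
    """Convert an amount of data between two units expressed in bytes."""
    return amount * from_unit / to_unit


# The year-2000 figure quoted above, expressed in zettabytes.
stored_2000_zb = convert(800_000, PB, ZB)

# How many times larger the projected 35 ZB is than the 2000 figure.
growth_factor = 35 / stored_2000_zb

# Days needed to accumulate one petabyte at 7 TB per day.
days_to_one_pb = convert(1, PB, TB) / 7

print(f"{stored_2000_zb} ZB stored in 2000")
print(f"roughly {growth_factor:.0f}x growth to reach 35 ZB")
print(f"roughly {days_to_one_pb:.0f} days to reach 1 PB at 7 TB/day")
```

In other words, 800,000 PB is a little under one zettabyte, so the projection amounts to growth of more than forty-fold.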

When you stop and think about it, it's little wonder that we're drowning in data. We store everything: environmental data, financial data, medical data, surveillance data, and the list goes on and on. For instance, taking your smartphone out of its case generates an event; when the door of your commuter train opens for boarding, that is an event; checking in for a flight, badging into work, buying a song on iTunes, changing the TV channel, taking an electronic toll route: each of these actions generates data.

Okay, you get the point: there is more data than ever, and all you have to do is look at the terabyte penetration rate for home personal computers as a telltale sign. Almost a decade ago, we used to keep a list of all the data stores we knew of that exceeded a terabyte; suffice it to say, things have changed when it comes to volume.

As the term “Big Data” implies, organizations are faced with huge volumes of data. Organizations that don't know how to manage this data are overwhelmed by it. But the opportunity exists, with the right technology platform, to analyze almost all of the data (or at least more of it, by identifying the data that is useful to you) to gain a better understanding of your business, your customers, and the market. And this leads to the current conundrum facing today's businesses across all industries.

As the amount of data available to the business increases, the percentage of data it can process, understand and analyze is decreasing, thus creating the blind zone.

What's in that blind zone?

You don't know: it could be something great or maybe nothing at all, but the "I don't know" is the problem (or the opportunity, depending on how you look at it). The conversation about data volumes has shifted from terabytes to petabytes, with an inevitable shift to zettabytes, and all this data cannot be stored in your traditional systems.
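The blind zone described above can be made concrete with a little arithmetic. The sketch below uses purely hypothetical numbers (data that doubles every year, processing capacity that grows 20 percent a year) to show how the unanalyzed share widens even while capacity keeps growing; the function name `blind_zone_share` is an illustrative choice.

```python
def blind_zone_share(available_tb, processed_tb):
    """Fraction of the available data that is never analyzed."""
    if available_tb <= 0:
        raise ValueError("available_tb must be positive")
    # A business cannot process more data than it actually has.
    processed_tb = min(processed_tb, available_tb)
    return 1 - processed_tb / available_tb


# Hypothetical starting point and growth rates, chosen only for illustration.
available, capacity = 100.0, 80.0
shares = []
for year in range(5):
    shares.append(blind_zone_share(available, capacity))
    print(f"year {year}: {shares[-1]:.0%} of the data is in the blind zone")
    available *= 2.0   # data volume doubles each year
    capacity *= 1.2    # processing capacity grows 20% each year
```

The exact rates do not matter; whenever data grows faster than the ability to process it, the blind zone share rises every year.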

2. The variety of data

The volume associated with the Big Data phenomenon brings a new challenge for the data centers trying to deal with it: variety.

With the explosion of sensors and smart devices, as well as social collaboration technologies, data in a company has become complex, because it includes not only traditional relational data, but also raw, semi-structured, and unstructured data from web pages, weblog files (including clickstream data), search indexes, social media forums, email, documents, sensor data from active and passive systems, and so on.

What's more, traditional systems may struggle to store this data and perform the analysis needed to understand its content, because much of the information being generated does not lend itself to traditional database technologies. In my experience, although some companies are making progress, most are just beginning to understand the opportunities of Big Data.

In a nutshell, variety represents all data types: a fundamental shift in analysis requirements from traditional structured data to include raw, semi-structured, and unstructured data as part of the decision-making and insight process. Traditional analytics platforms can't handle variety. Nevertheless, an organization's success will depend on its ability to extract insight from the various types of data available, both traditional and non-traditional.

When we look back on our database careers, it is sometimes humbling to see that we spent most of our time on just 20 percent of the data: the relational kind that is neatly formatted and fits nicely into our strict schemas. But the truth of the matter is that 80 percent of the world's data (and more and more of this data is responsible for setting new speed and volume records) is unstructured or, at best, semi-structured. If you look at a Twitter feed, you will see structure in its JSON format, but the actual text is unstructured, and understanding it can be rewarding.

Video and images are not easily or efficiently stored in a relational database, certain event information can change dynamically (such as weather patterns), which is not well suited to strict schemas, and more. To capitalize on the Big Data opportunity, companies must be able to analyze all types of data, both relational and non-relational: text, sensor data, audio, video, transactional, and more.
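The Twitter example can be sketched in a few lines. The record below is made up, and its field names are illustrative assumptions rather than the actual Twitter API schema; the keyword match is a deliberately naive stand-in for real text analytics.

```python
import json

# A made-up record shaped loosely like a tweet (field names are hypothetical).
raw = '''{
  "id": 1,
  "created_at": "2012-01-15T08:30:00Z",
  "user": {"screen_name": "example_user"},
  "text": "Train delayed AGAIN at the crossing... third time this week :("
}'''

tweet = json.loads(raw)

# The structured part: fields can be addressed by name, much like columns.
author = tweet["user"]["screen_name"]
posted = tweet["created_at"]

# The unstructured part: free text has no schema, so even a crude analysis
# needs its own logic. Here we only check for a few negative keywords.
NEGATIVE_WORDS = {"delayed", "late", "cancelled", "broken"}
words = {w.strip(".,!?:()").lower() for w in tweet["text"].split()}
complaint = bool(words & NEGATIVE_WORDS)

print(author, posted, "complaint" if complaint else "no complaint")
```

The JSON envelope is easy to query, while the value inside `text` is where the harder (and often more rewarding) analysis lies.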

3. Data speed

Just as the volume and variety of the data we collect and store have changed, so has the speed at which it is generated and must be handled. A conventional understanding of speed typically considers how quickly data arrives and is stored, along with its associated retrieval rates. While managing all of that quickly is good, the volumes of data we are seeing are themselves a consequence of how quickly the data arrives.

To adapt to speed, a new way of thinking about the problem must begin at the point where the data originates. Instead of limiting the idea of speed to the growth rates associated with your data repositories, we suggest you apply this definition to data in motion: the speed at which data flows.

After all, we agree that today's businesses are dealing with petabytes of data rather than terabytes, and the rise of RFID sensors and other information streams has led to a constant flow of data at a pace that traditional systems cannot handle. Sometimes, gaining an edge over the competition may mean spotting a trend, problem, or opportunity just seconds, or even microseconds, before someone else.

What's more, more and more of the data produced today has a very short shelf life, so organizations must be able to analyze it in near real time if they expect to find valuable insights in it. In traditional processing, you can think of running queries against relatively static data: for instance, the query “Show me all the people who live in the ABC flood zone” would produce a single result set to be used as a warning list for an incoming weather pattern. With stream computing, you can run a process similar to a continuous query that identifies the people who are currently “in the ABC flood zones”, but you get continuously updated results because the location information from GPS data is refreshed in real time.

Dealing effectively with Big Data requires you to perform analysis against the volume and variety of data while it is still in motion, not only after it is at rest. Consider examples ranging from neonatal health tracking to financial markets; in every case, they require handling the volume and variety of data in new ways.
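The contrast between the two query styles can be sketched as follows. This is a simplified illustration, not how any particular streaming platform works: the flood zone is assumed to be a rectangular bounding box, and the names `ABC_ZONE`, `static_query`, and `continuous_query`, along with the sample people and coordinates, are all hypothetical.

```python
from typing import Dict, Iterable, Iterator, Set, Tuple

# Hypothetical flood zone as a bounding box:
# (min_lat, max_lat, min_lon, max_lon).
ABC_ZONE = (40.0, 41.0, -75.0, -74.0)


def in_zone(lat: float, lon: float, zone=ABC_ZONE) -> bool:
    """Return True if the coordinate falls inside the bounding box."""
    min_lat, max_lat, min_lon, max_lon = zone
    return min_lat <= lat <= max_lat and min_lon <= lon <= max_lon


def static_query(home_addresses: Dict[str, Tuple[float, float]]) -> Set[str]:
    """Traditional query: one pass over data at rest, one result set."""
    return {
        person
        for person, (lat, lon) in home_addresses.items()
        if in_zone(lat, lon)
    }


def continuous_query(
    gps_updates: Iterable[Tuple[str, float, float]]
) -> Iterator[Set[str]]:
    """Stream-style query: re-emit the result after every location update."""
    inside: Set[str] = set()
    for person, lat, lon in gps_updates:
        if in_zone(lat, lon):
            inside.add(person)
        else:
            inside.discard(person)
        yield set(inside)  # yield a copy so each result is a snapshot


# Data at rest: where people live.
homes = {"ana": (40.5, -74.5), "ben": (42.0, -73.0)}

# Data in motion: a sequence of GPS position updates.
updates = [("ana", 40.5, -74.5), ("ben", 40.2, -74.8), ("ana", 39.0, -74.5)]

print("lives in zone:", static_query(homes))
for snapshot in continuous_query(updates):
    print("currently in zone:", snapshot)
```

The static query answers once from stored addresses, while the continuous query produces a fresh answer every time a position changes, which is why a person who lives outside the zone can still appear on the warning list while travelling through it.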

Final notes

You cannot afford to examine all the data available to you with your traditional processes: it's too much data with too little known value and too high a potential cost. Big Data platforms give you a way to inexpensively store and process all that data and discover what is valuable and worth exploiting. What's more, since we are talking about analyzing both data at rest and data in motion, the data from which you can extract value is not only broader, but you can also use and analyze it more quickly, in real time.

Let us know your thoughts in the comments below.

Reference

Understanding Big Data: Analytics for enterprise-class Hadoop and streaming data.