The underfitting and overfitting challenge in machine learning

Inevitably, you will face this question in a data science interview:

Can you explain what underfitting and overfitting are in the context of machine learning? Describe them in a way that even a non-technical person can understand.

Your ability to explain this in a non-technical, easy-to-understand way could well make or break your suitability for the data science role!

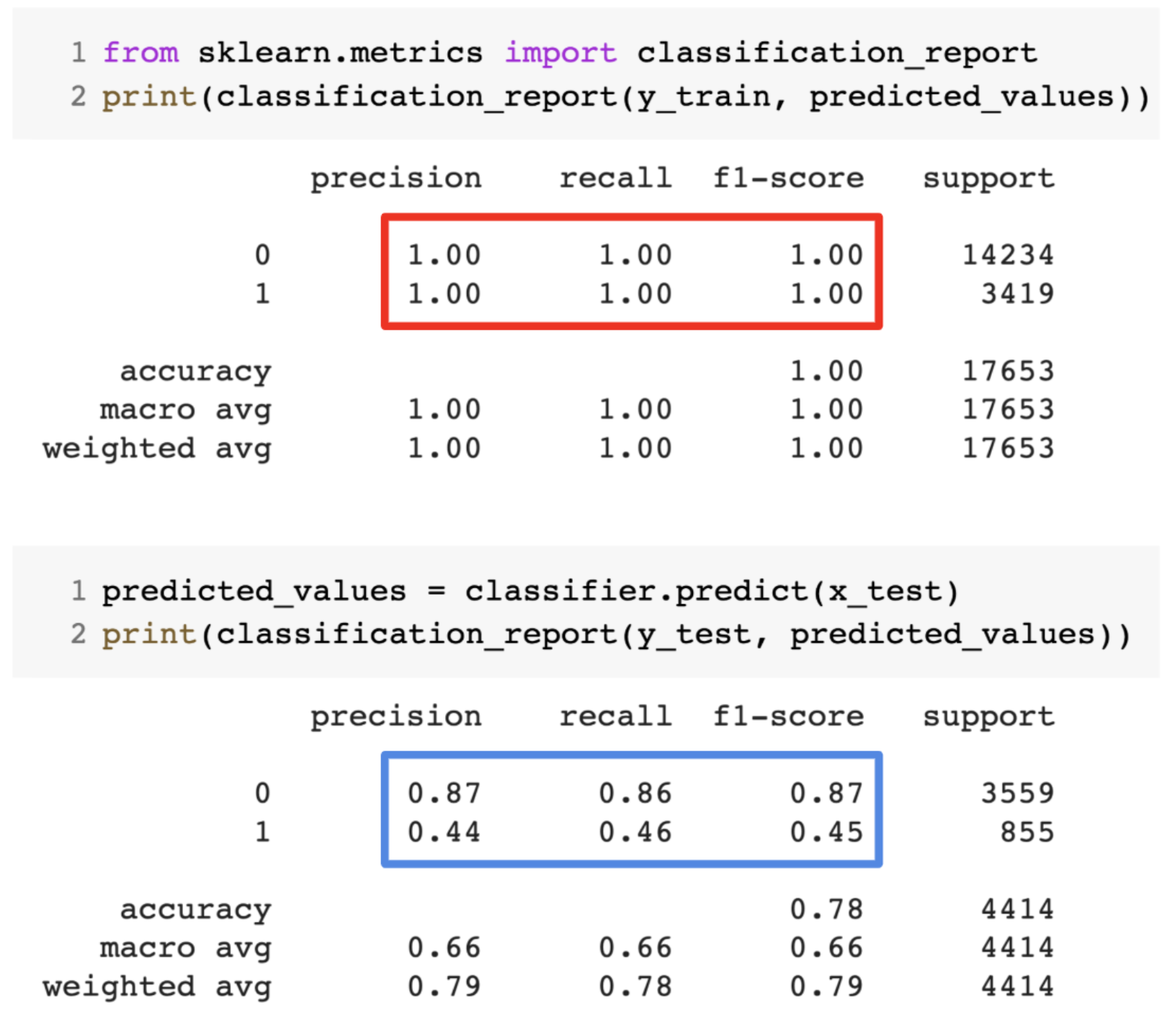

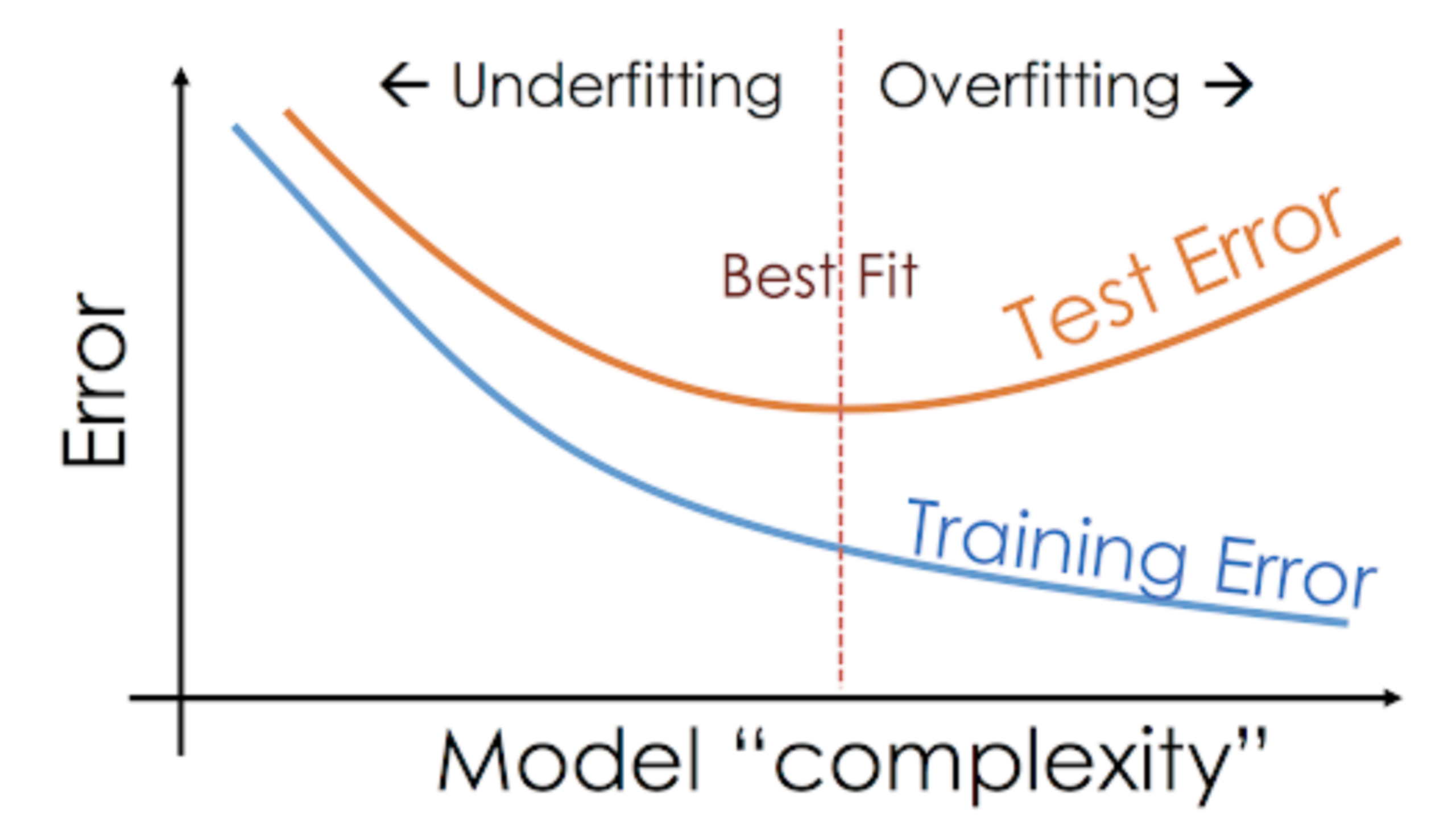

Even when we are working on a machine learning project, we often run into unexpected performance or a gap in error rate between the training set and the test set (as shown in the accompanying figures). How can a model perform so well on the training set and so poorly on the test set?

This happens very frequently whenever I work with tree-based predictive models. Because of the way these algorithms work, you can imagine how difficult it is to avoid falling into the overfitting trap!

At the same time, it can be quite overwhelming when we cannot find the underlying reason why our predictive model displays this anomalous behavior.

This is my personal experience: ask any seasoned data scientist about this and they usually start talking about a range of fancy terms like overfitting, underfitting, bias, and variance. But little is said about the intuition behind these machine learning concepts. Let's rectify that, shall we?

Let's take an example to understand underfitting vs overfitting

I want to explain these concepts using a real-world example. Many people talk about the theoretical angle, but I think that's not enough: we need to visualize how underfitting and overfitting actually work.

So, let's go back to our college days for this.

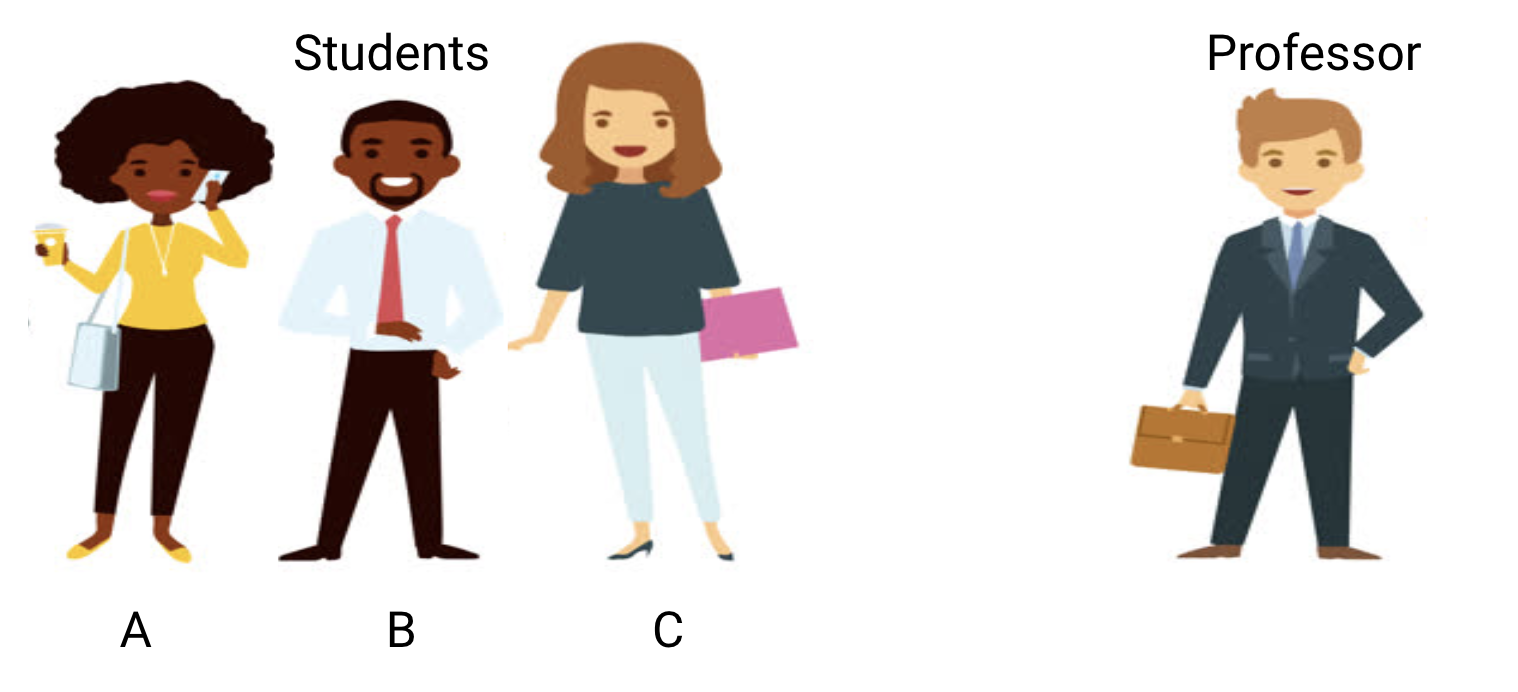

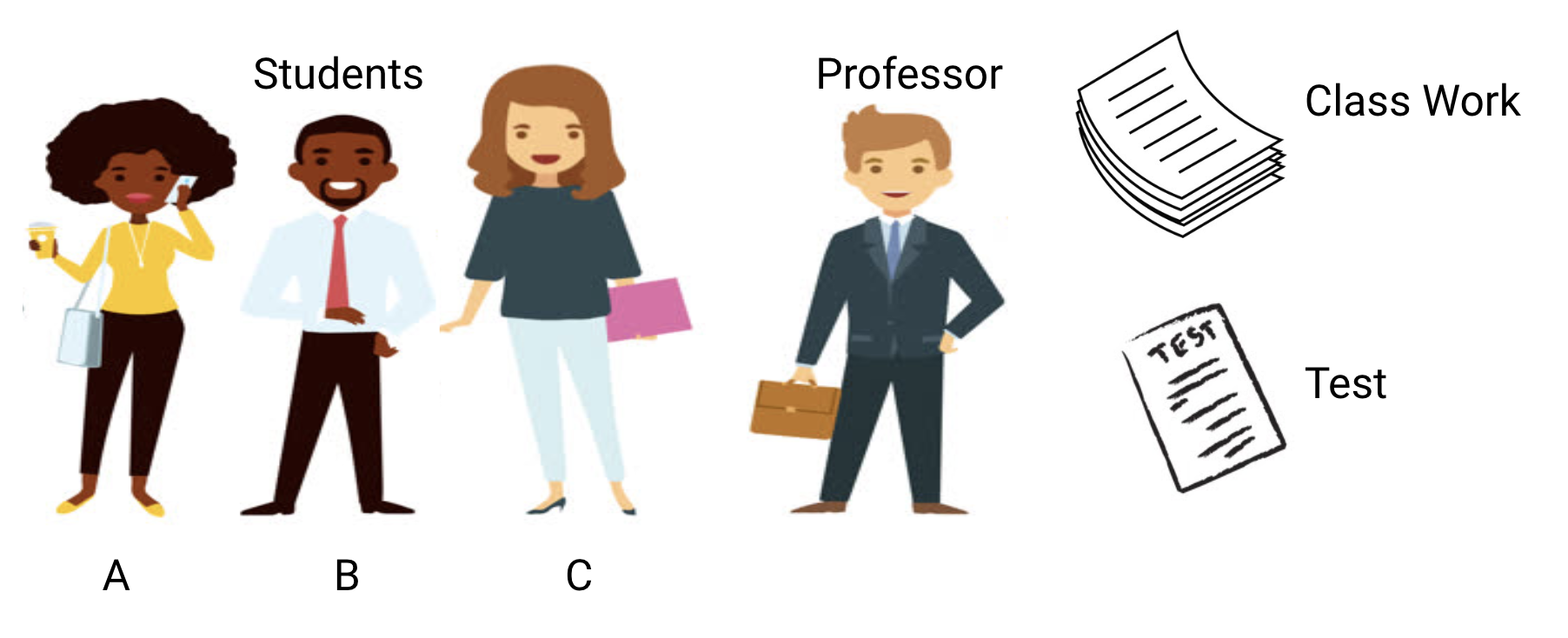

Consider a math class consisting of 3 students and a teacher.

Now, in any classroom, we can broadly divide the students into 3 categories. We will talk about them one by one.

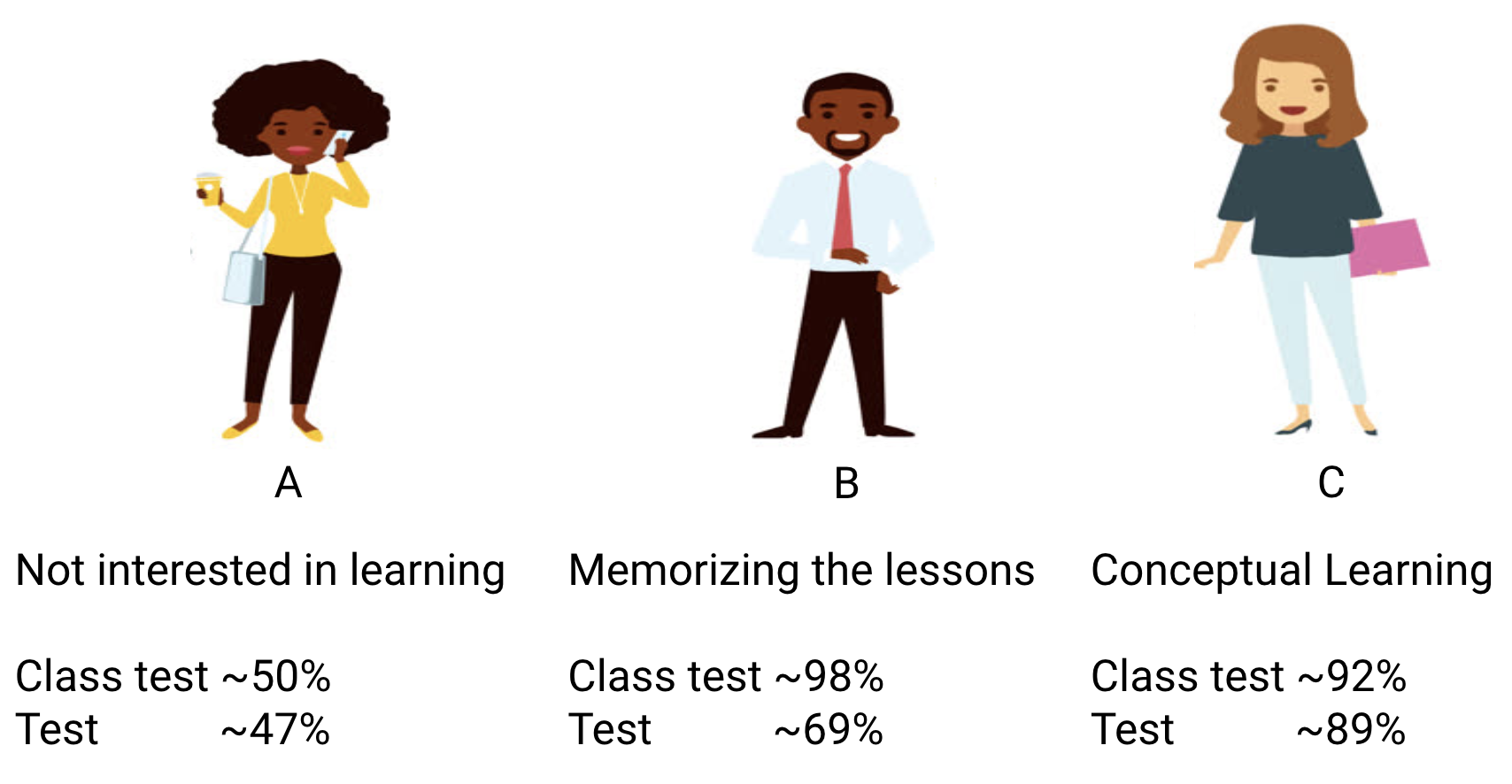

Let's say student A is the kind of student who doesn't like math. He is not interested in what is taught in class, so he does not pay much attention to the teacher or the content being taught.

Now consider student B. He is the most competitive student, who focuses on memorizing each and every question taught in class rather than on the key concepts. Simply put, he is not interested in learning the problem-solving approach.

Finally, we have the ideal student C. She is purely interested in learning the key concepts and the problem-solving approach in math class rather than just memorizing the solutions presented.

We all know from experience what happens in a classroom. The teacher first lectures and teaches the students about problems and how to solve them. At the end of the day, the teacher simply gives a test based on what was taught in class.

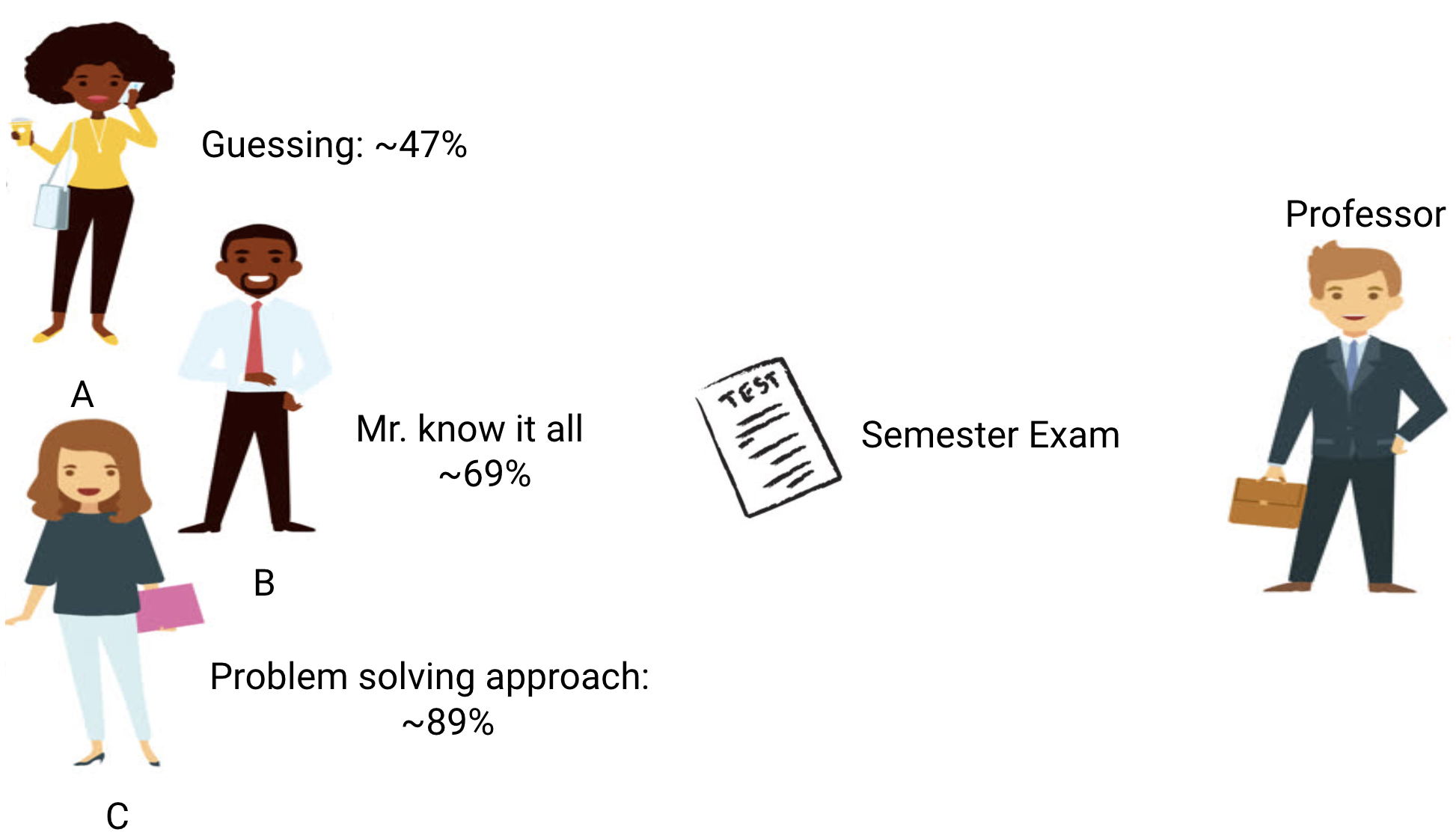

The hurdle comes in the semester tests that the school sets. This is where new questions (unseen data) come up. The students have not seen these questions before and they certainly have not solved them in the classroom. Sounds familiar?

So, let's discuss what happens when the teacher gives a test in the classroom at the end of the day:

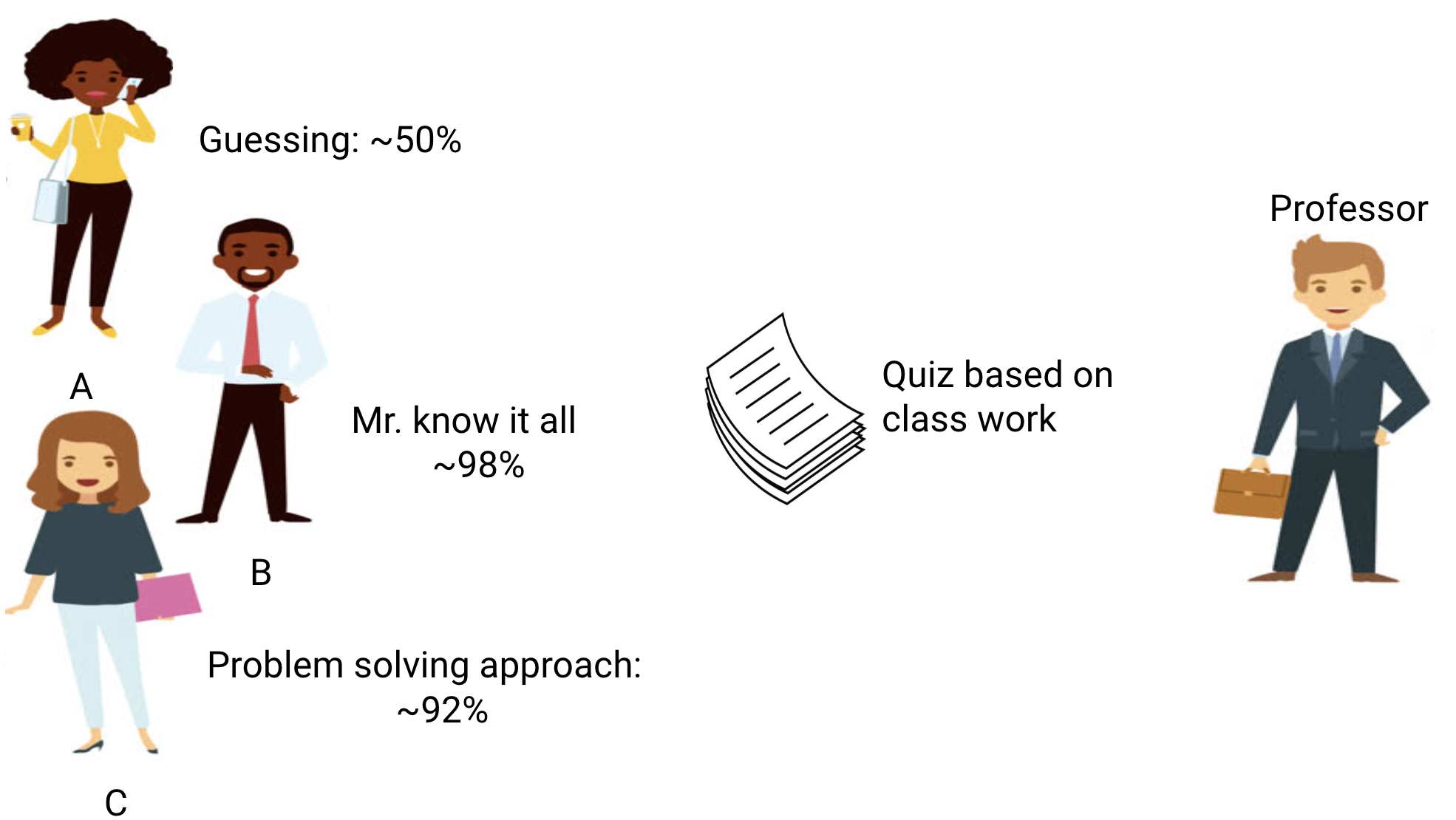

- Student A, who was distracted in his own world, simply guessed the answers and scored about 50% on the test.

- Student B, who memorized each and every question taught in class, was able to answer almost all the questions from memory and therefore scored 98% on the class test.

- Student C actually solved all the questions using the problem-solving approach she learned in class and scored 92%.

We can clearly infer that the student who simply memorizes everything scores better without much difficulty.

Now here's the twist. Let's also see what happens during the monthly test, when students have to face new, unknown questions that the teacher did not teach in class.

- In the case of student A, things didn't change much: he still answers questions correctly at random, about 50% of the time.

- In the case of student B, his score dropped significantly. Can you guess why? He always memorized the problems that were taught in class, but this monthly test contained questions he had never seen before. Therefore, his performance dropped significantly.

- In the case of student C, the score stayed about the same. She focused on learning the problem-solving approach and was therefore able to apply the concepts she learned to solve the unfamiliar questions.

How does this relate to underfitting and overfitting in machine learning?

You may be wondering how this example relates to the problem we encountered with the training and test scores of the decision tree classifier. Good question!

So, let's work on connecting this example with the results of the decision tree classifier that I showed you previously.

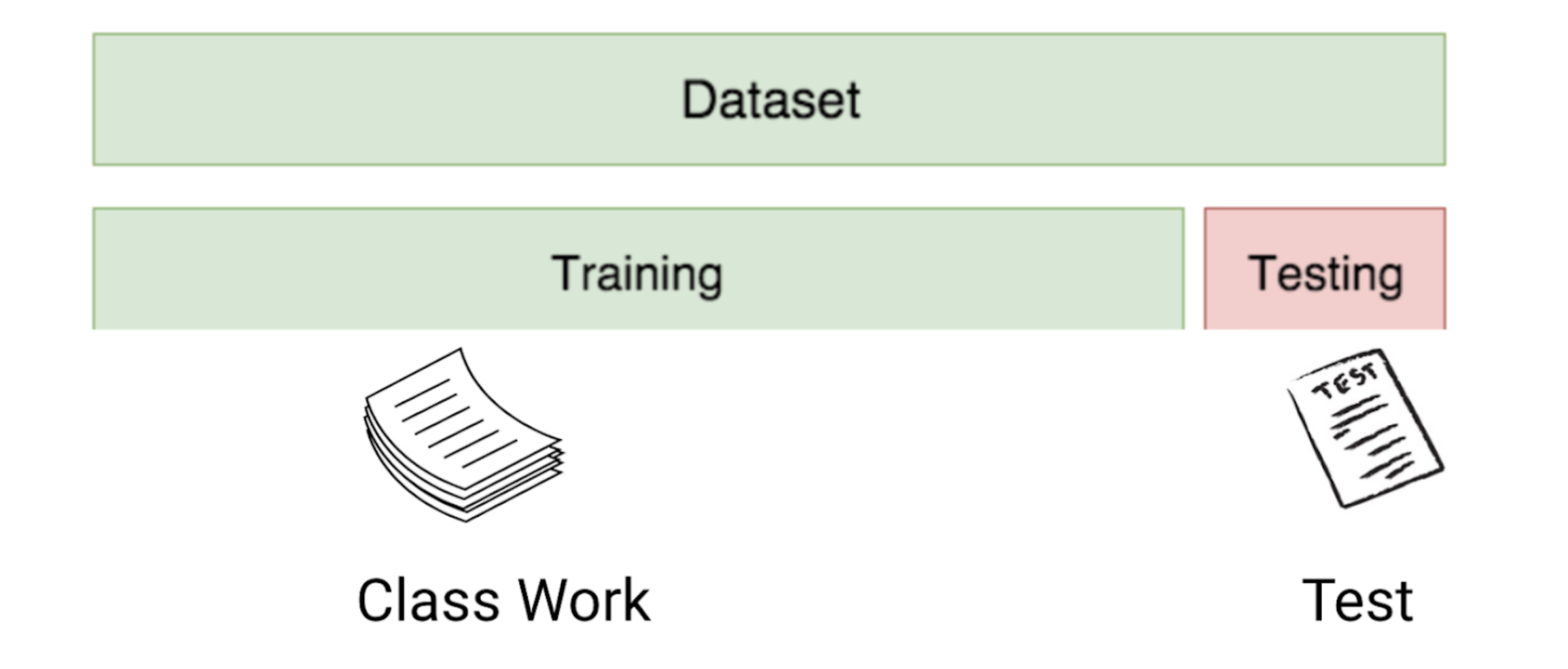

First, the classwork and the class test resemble the training data and the prediction on the training data itself, respectively. The semester test, on the other hand, represents the test set: the data we set aside before training our model (or unseen data in a real-world machine learning project).
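To make the "set aside before training" step concrete, here is a minimal sketch of a hold-out split in plain Python. The function name `holdout_split`, the 80/20 ratio, and the placeholder data are assumptions for illustration, not something taken from the original project.

```python
import random

def holdout_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of rows and split it into (train, test) lists."""
    shuffled = list(rows)                  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle for reproducibility
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical stand-in for 100 labelled examples.
questions = list(range(100))

# "classwork" plays the role of the training set,
# "semester_test" the role of the unseen test set.
classwork, semester_test = holdout_split(questions)
print(len(classwork), len(semester_test))
```

In a real project, a library helper such as scikit-learn's `train_test_split` would usually do this job. The key point is that the test rows are never shown to the model during training.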

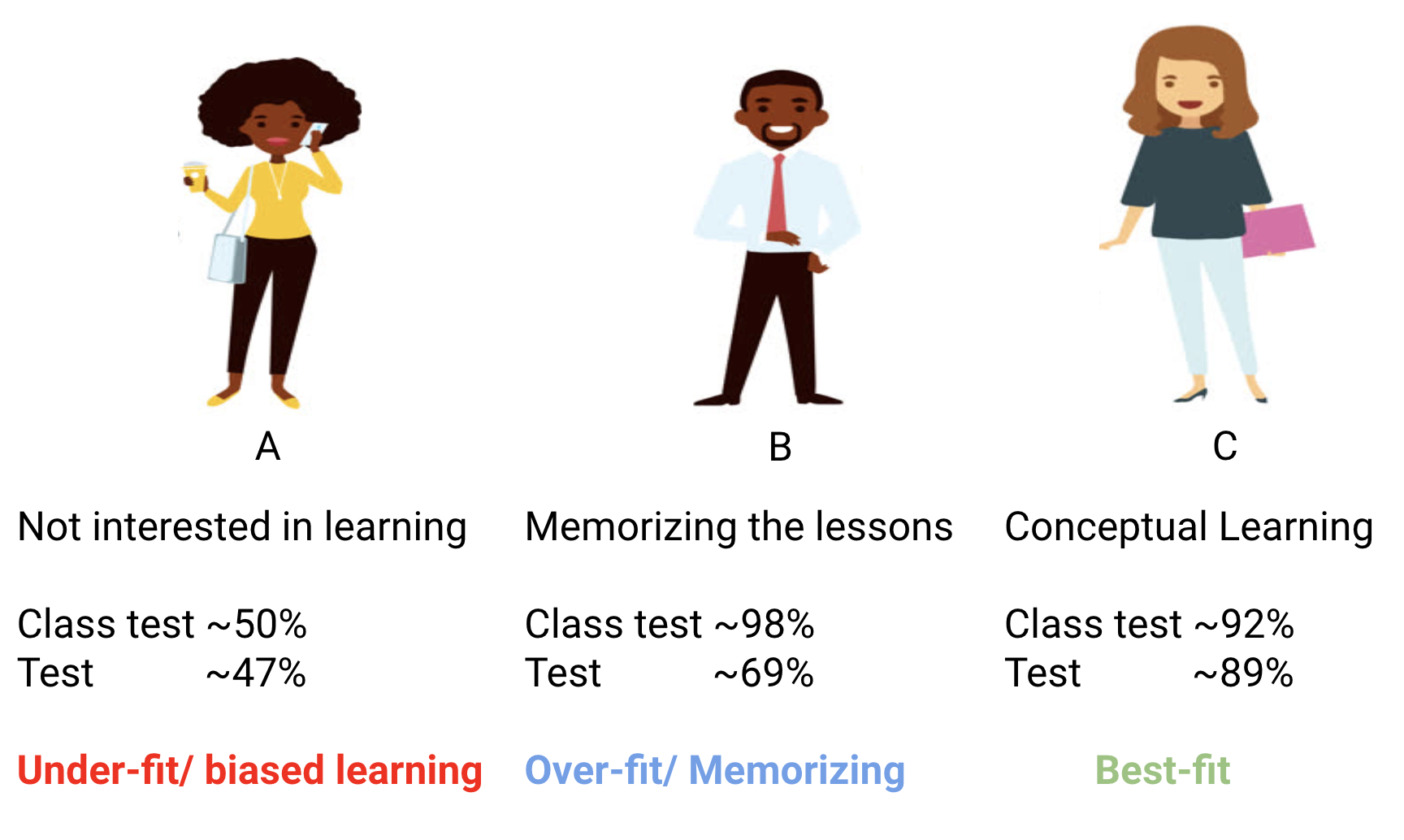

Now, remember the decision tree classifier I mentioned previously. It gave a perfect score on the training set but struggled on the test set. Comparing that to the student examples we just discussed, the classifier is analogous to student B, who tried to memorize each and every question in the training set.

Similarly, our decision tree classifier attempts to learn each and every point in the training data, but it suffers badly when it encounters a new data point in the test set. It is not able to generalize well.
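The memorizing behavior can be sketched in a few lines. This is not the decision tree from this article: as a stand-in, it uses a 1-nearest-neighbour rule, which simply returns the label of the closest training point. The synthetic data, the 30% label noise, and all function names are assumptions made for illustration.

```python
import random

rng = random.Random(42)

def make_data(n):
    """x in [0, 1); the true label is 1 when x > 0.5, but 30% of labels are flipped."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < 0.3:   # label noise the model should ideally ignore
            label = 1 - label
        data.append((x, label))
    return data

def predict_1nn(train, x):
    """Return the label of the training point closest to x (pure memorization)."""
    nearest = min(train, key=lambda point: abs(point[0] - x))
    return nearest[1]

def accuracy(train, data):
    """Fraction of points in data whose label the memorizer predicts correctly."""
    hits = sum(predict_1nn(train, x) == label for x, label in data)
    return hits / len(data)

train, test = make_data(200), make_data(200)
train_acc = accuracy(train, train)   # each point is its own nearest neighbour
test_acc = accuracy(train, test)     # unseen points expose the memorized noise
print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

On the training set every point finds itself as its nearest neighbour, so the score is perfect (assuming no two training points share the same x). On fresh points the memorized noise no longer helps, and the accuracy drops, just like student B's score.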

This situation, where a given model performs very well on the training data but its performance drops significantly on the test set, is called overfitting, and such a model is called an overfit model.

For example, non-parametric models such as KNN, decision trees, and other tree-based algorithms are very prone to overfitting. These models can learn very complex relationships, which can result in overfitting. The following graphic summarizes this concept:

On the other hand, if the model performs poorly on both the training and the test sets, we call it an underfit model. An example of this situation would be building a linear regression model on non-linear data.
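The linear-regression case can be sketched with a straight line fitted to data that follows a parabola. The data (y = x² on a symmetric grid) and the helper names are assumptions chosen to keep the example small. The fit is ordinary least squares written out by hand.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def r_squared(xs, ys, slope, intercept):
    """Coefficient of determination: 1 is a perfect fit, about 0 is no better than the mean."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

# Non-linear data: y = x^2 on [-1, 1]; test points sit between the training points.
train_x = [-1 + 0.1 * i for i in range(21)]
test_x = [-0.95 + 0.1 * i for i in range(20)]
train_y = [x ** 2 for x in train_x]
test_y = [x ** 2 for x in test_x]

slope, intercept = fit_line(train_x, train_y)
train_r2 = r_squared(train_x, train_y, slope, intercept)
test_r2 = r_squared(test_x, test_y, slope, intercept)
print(f"train R^2: {train_r2:.3f}, test R^2: {test_r2:.3f}")
```

Because the parabola is symmetric around zero, the best straight line is essentially flat, so the score is close to zero on both sets. That is the signature of underfitting: the model is too simple for the data, and it does poorly on the training data as well as the test data, much like student A.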

Final notes

I hope this brief insight has cleared up any doubts you may have had about underfit, overfit, and best-fit models, and how they work or behave under the hood.

Feel free to send me any questions or comments below.