Introduction

A common challenge I ran into while learning natural language processing (NLP): can we build models for languages other than English? For quite some time, the answer was no. Each language has its own grammatical patterns and linguistic nuances, and there just aren't many datasets available in other languages.

That's where Stanford's latest NLP library comes in: StanfordNLP.

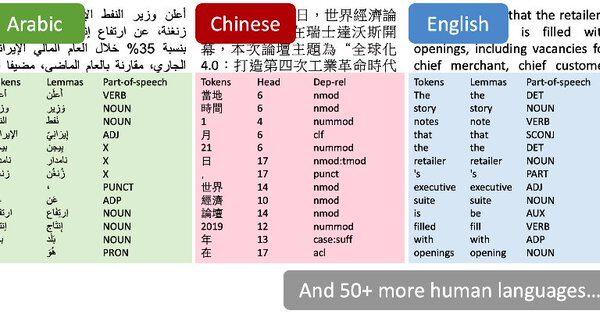

I could barely contain my excitement when I read the news last week. The authors stated that StanfordNLP could support more than 53 human languages. Yes, I had to double check that number.

I decided to check it out myself. There is no official tutorial for the library yet, so I got the chance to experiment and play around with it. And I found that it opens up a world of endless possibilities. StanfordNLP contains pre-trained models for rare Asian languages like Hindi, Chinese and Japanese in their original scripts.

The ability to work with multiple languages is a wonder that all NLP enthusiasts crave. In this article, we will look at what StanfordNLP is and why it is so important, and then fire up Python to see it live in action. We will also take up a case study in Hindi to show how StanfordNLP works. You won't want to miss that!

Table of Contents

- What is StanfordNLP and why should I use it?

- StanfordNLP Configuration in Python

- Using StanfordNLP to perform basic NLP tasks

- StanfordNLP implementation in Hindi

- Using the CoreNLP API for text analysis

What is StanfordNLP and why should I use it?

Here is the description of StanfordNLP by the authors themselves:

StanfordNLP is the combination of the software package used by the Stanford team in the CoNLL 2018 Shared Task on Universal Dependency Parsing, and the group's official Python interface to the Stanford CoreNLP software.

That's too much information in one go! Let's break it down:

- CoNLL is an annual conference on natural language learning. Teams representing research institutes from around the world try to solve an NLP-based task

- One of last year's tasks was “Multilingual Parsing from Raw Text to Universal Dependencies”. In simple terms, it means parsing unstructured text data from multiple languages into useful annotations from Universal Dependencies

- Universal Dependencies is a framework that maintains consistency in annotations. These annotations are generated for the text regardless of the language being parsed

- Stanford's submission ranked first in 2017. They missed out on the first position in 2018 due to a software bug (and finished in fourth place)

StanfordNLP is a collection of pre-trained state-of-the-art models. These models were used by the researchers in the CoNLL 2017 and 2018 competitions. All the models are built on PyTorch and can be trained and evaluated on your own annotated data. Impressive!

Here are a few more reasons why you should check out this library:

- Native Python implementation that requires minimal effort to configure

- Complete neural network pipeline for robust text analysis, which includes:

  - Tokenization

  - Multi-word token (MWT) expansion

  - Lemmatization

  - Parts of speech (POS) and morphological feature tagging

  - Dependency parsing

- Pre-trained neural models that support 53 (human) languages featured in 73 treebanks

- A stable and officially maintained Python interface for CoreNLP

What more could an NLP enthusiast ask for? Now that we have an idea of what this library does, let's take it for a spin in Python!

StanfordNLP Configuration in Python

There are some peculiar things about the library that puzzled me at first. For instance, you need Python 3.6.8/3.7.2 or later to use StanfordNLP. To be safe, I set up a separate environment in Anaconda for Python 3.7.1. Here is how you can do it:

1. Open conda prompt and type this:

conda create -n stanfordnlp python=3.7.1

2. Now activate the environment:

source activate stanfordnlp

3. Install the StanfordNLP library:

pip install stanfordnlp

4. We need to download a language's specific model before we can work with it. Launch a Python shell and import StanfordNLP:

import stanfordnlp

Then download the language model for English (“en”):

stanfordnlp.download('en')

This may take a while depending on your internet connection. These language models are quite large (the English one is 1.96 GB).

A couple of important notes

- StanfordNLP is built on PyTorch 1.0.0. It might crash if you have an older version. Here is how you can check the version installed on your machine:

pip freeze | grep torch

which should give an output like torch==1.0.0

- I tried using the library without a GPU on my Lenovo Thinkpad E470 (8GB RAM, Intel Graphics) and got a memory error in Python pretty quickly. I therefore switched to a GPU-enabled machine and would advise you to do the same. You can try Google Colab, which comes with free GPU support
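If you would rather do the PyTorch version check from Python than by eye, here is a minimal sketch. It only parses a line in the torch==X.Y.Z format shown above; the helper names (parse_version, torch_is_recent_enough) are my own and not part of StanfordNLP or PyTorch.

```python
import re


def parse_version(text):
    """Turn a version string such as '1.0.0' into a tuple of its first three numbers."""
    return tuple(int(number) for number in re.findall(r"\d+", text)[:3])


def torch_is_recent_enough(freeze_line, minimum="1.0.0"):
    """Check a 'torch==X.Y.Z' line from pip freeze against a minimum version."""
    name, _, version = freeze_line.strip().partition("==")
    if name.lower() != "torch" or not version:
        raise ValueError("expected a line like 'torch==1.0.0'")
    return parse_version(version) >= parse_version(minimum)


print(torch_is_recent_enough("torch==1.0.0"))
print(torch_is_recent_enough("torch==0.4.1"))
```

The first call reports that the version is fine, the second that it is too old.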

That's all! Let's dive into some basic NLP processing right away.

Using StanfordNLP to perform basic NLP tasks

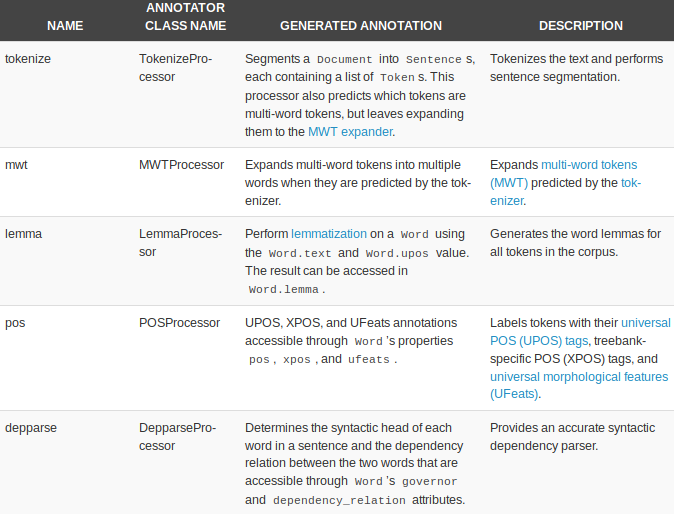

StanfordNLP comes with built-in processors to perform five basic NLP tasks:

- Tokenization

- Multi-word token expansion

- Lemmatization

- Parts of speech tagging

- Dependency parsing

Let's start by creating a text pipeline:

nlp = stanfordnlp.Pipeline(processors = "tokenize,mwt,lemma,pos")

doc = nlp("""The prospects for Britain’s orderly withdrawal from the European Union on March 29 have receded further, even as MPs rallied to stop a no-deal scenario. An amendment to the draft bill on the termination of London’s membership of the bloc obliges Prime Minister Theresa May to renegotiate her withdrawal agreement with Brussels. A Tory backbencher’s proposal calls on the government to come up with alternatives to the Irish backstop, a central tenet of the deal Britain agreed with the rest of the EU.""")

The processors argument specifies which tasks to run. If no argument is passed, all five processors (tokenize, mwt, pos, lemma and depparse) are used by default.

Let's see each of them in action.

Tokenization

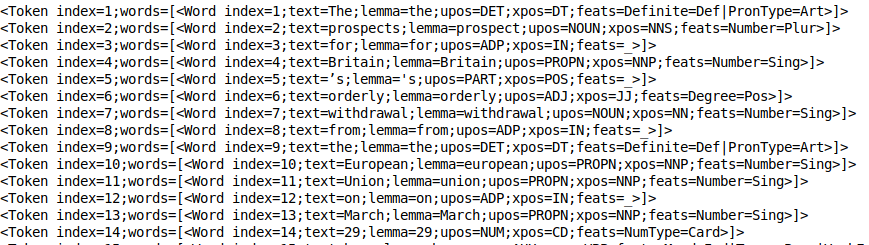

This process happens implicitly once the tokenize processor has run, and it is actually pretty quick. You can take a look at the tokens using print_tokens():

doc.sentences[0].print_tokens()

The token object contains the index of the token in the sentence and a list of word objects (more than one in the case of a multi-word token). Each word object contains useful information, such as the index of the word, its text, its lemma, its pos (parts of speech) tag and its feats (morphological features) tag.

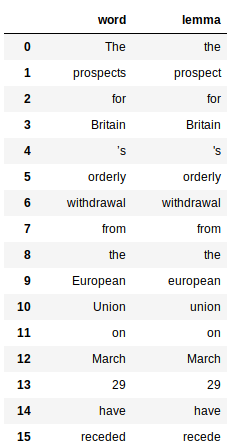
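To make that structure concrete, here is a small sketch that walks the tokens of a sentence and collects the fields of each word. The attribute names (tokens, words, index, text, lemma, pos, feats) follow the description above as I understand the library's objects, so treat them as assumptions. The stand-in sentence and its values are made up so that the snippet runs without downloading any model; with the real library you would pass doc.sentences[0] instead.

```python
from types import SimpleNamespace


def describe_tokens(sentence):
    """Return one row per word: token index, word index, text, lemma, pos, feats."""
    rows = []
    for token in sentence.tokens:
        for word in token.words:  # more than one word only for multi-word tokens
            rows.append((token.index, word.index, word.text,
                         word.lemma, word.pos, word.feats))
    return rows


# Made-up stand-in for a parsed sentence (not real library output).
demo_word = SimpleNamespace(index="2", text="prospects", lemma="prospect",
                            pos="NNS", feats="Number=Plur")
demo_sentence = SimpleNamespace(
    tokens=[SimpleNamespace(index="2", words=[demo_word])])

for row in describe_tokens(demo_sentence):
    print(row)
```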

Lemmatization

This involves using the “lemma” property of the words generated by the lemma processor. Here is the code to get the lemma of all the words:
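A minimal sketch of such a helper, assuming each sentence exposes a words list whose items carry text and lemma attributes. The function names lemma_rows and extract_lemma are my own choices, and the stand-in document at the bottom is made up so the snippet runs without the models; with the real pipeline you would call extract_lemma(doc).

```python
from types import SimpleNamespace


def lemma_rows(doc):
    """Collect one (word, lemma) pair for every word in the document."""
    rows = []
    for sentence in doc.sentences:
        for word in sentence.words:
            rows.append((word.text, word.lemma))
    return rows


def extract_lemma(doc):
    """Return the same pairs as a pandas data frame with columns word and lemma."""
    import pandas as pd  # imported here so lemma_rows also works without pandas
    return pd.DataFrame(lemma_rows(doc), columns=["word", "lemma"])


# Made-up stand-in for a parsed document (not real library output).
demo_doc = SimpleNamespace(sentences=[SimpleNamespace(words=[
    SimpleNamespace(text="prospects", lemma="prospect"),
    SimpleNamespace(text="receded", lemma="recede"),
])])
print(lemma_rows(demo_doc))
```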

This returns a pandas data frame with each word and its respective lemma:

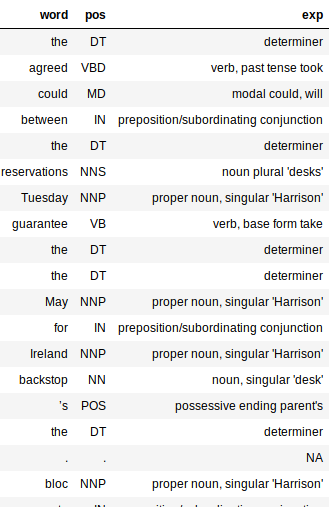

Parts of speech (PoS) tagging

The PoS tagger is quite fast and works really well across languages. Like the lemmas, PoS tags are also easy to extract:
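One way to write that helper, sketched under the assumption that every word carries text and pos attributes. The dictionary below is a deliberately abbreviated mapping of a few Penn Treebank tags to their meaning (a complete one would be much longer), and pos_rows is a name I made up; extract_pos matches the call used later in this article. The stand-in document is invented so the snippet runs without the models.

```python
from types import SimpleNamespace

# Abbreviated tag-to-meaning mapping (Penn Treebank tags); extend as needed.
pos_dict = {
    "DT": "determiner",
    "IN": "preposition or subordinating conjunction",
    "JJ": "adjective",
    "NN": "noun, singular or mass",
    "NNS": "noun, plural",
    "NNP": "proper noun, singular",
    "PRP": "personal pronoun",
    "VBD": "verb, past tense",
    "VBZ": "verb, 3rd person singular present",
}


def pos_rows(doc):
    """Collect (word, pos tag, explanation) for every word; 'NA' if the tag is unmapped."""
    rows = []
    for sentence in doc.sentences:
        for word in sentence.words:
            rows.append((word.text, word.pos, pos_dict.get(word.pos, "NA")))
    return rows


def extract_pos(doc):
    """Return the rows as a pandas data frame with columns word, pos and exp."""
    import pandas as pd  # imported here so pos_rows also works without pandas
    return pd.DataFrame(pos_rows(doc), columns=["word", "pos", "exp"])


# Made-up stand-in for a parsed document (not real library output).
demo_doc = SimpleNamespace(sentences=[SimpleNamespace(words=[
    SimpleNamespace(text="prospects", pos="NNS"),
    SimpleNamespace(text="receded", pos="VBN"),
])])
print(pos_rows(demo_doc))
```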

Notice the big dictionary in the code above? It's just a mapping between PoS tags and their meaning. This helps to better understand the syntactic structure of our document.

The output would be a data frame with three columns: word, pos and exp (explanation). The explanation column gives us the most information about the text (and is thus quite useful).

Adding the explanation column makes it much easier to assess how accurate our processor is. I like the fact that the tagger is on point for the majority of the words. It even picks up the tense of a word and whether it is in base or plural form.

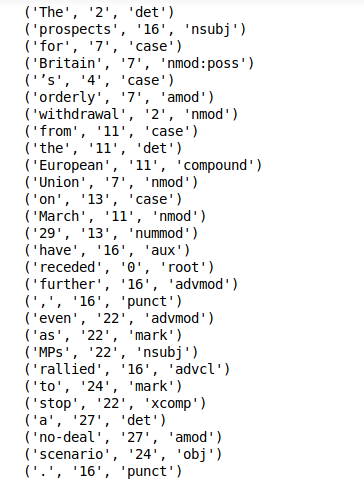

Dependency extraction

Dependency extraction is another out-of-the-box feature of StanfordNLP. You can simply call print_dependencies() on a sentence to get the dependency relations for all of its words (the pipeline needs to include the depparse processor for this):

doc.sentences[0].print_dependencies()
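If you want the relations as data rather than printed output, the sketch below rebuilds them from the words of a sentence. It assumes each word has a governor attribute (the 1-based position of its head, with 0 meaning the root) and a dependency_relation attribute, which is how I understand the library's word objects. The function name dependency_rows and the stand-in sentence are made up for illustration.

```python
from types import SimpleNamespace


def dependency_rows(sentence):
    """Return (dependent, relation, head) triples for every word in a sentence."""
    rows = []
    for word in sentence.words:
        if word.governor == 0:
            head_text = "ROOT"  # the word has no head inside the sentence
        else:
            head_text = sentence.words[word.governor - 1].text
        rows.append((word.text, word.dependency_relation, head_text))
    return rows


# Made-up stand-in for a parsed sentence (not real library output).
demo_sentence = SimpleNamespace(words=[
    SimpleNamespace(text="MPs", governor=2, dependency_relation="nsubj"),
    SimpleNamespace(text="rallied", governor=0, dependency_relation="root"),
])
for row in dependency_rows(demo_sentence):
    print(row)
```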

The library computes all of the above during a single run of the pipeline. This will only take a few minutes on a GPU-enabled machine.

We have now figured out a way to perform basic text processing with StanfordNLP. It's time to take advantage of the fact that we can do the same for 51 other languages!

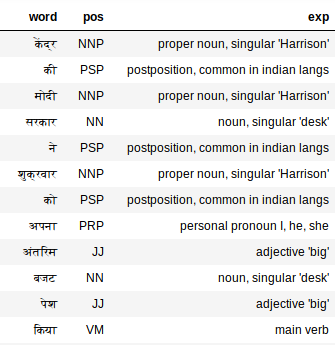

StanfordNLP implementation in Hindi

StanfordNLP really stands out for its performance and its multilingual text parsing support. Let's dig deeper into the latter.

Processing Hindi text (Devanagari script)

First, we have to download the Hindi language model (comparatively smaller!):

stanfordnlp.download('hi')

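Now, take a snippet of Hindi text as our text document. Note that nlp has to be a pipeline built for Hindi at this point, for example nlp = stanfordnlp.Pipeline(lang='hi'), otherwise the English models are applied to the Hindi text: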

hindi_doc = nlp("""The Modi government at the Center presented its interim budget on Friday.. Acting Finance Minister Piyush Goyal in his budget, Labour, taxpayer, Bumpers announced for everyone including women. Although, Even after the budget, there was a lot of confusion about the tax.. What was special about this interim budget of the central government and who got what, understand here in easy language""")

This should be enough to generate all the tags. Let's check the tags for Hindi:

extract_pos(hindi_doc)

The PoS tagger works surprisingly well on Hindi text too. Look at “My”, for instance. The PoS tagger labels it as a pronoun (I, he, she), which is accurate.

Using the CoreNLP API for text analysis

CoreNLP is a time-tested, industrial-grade NLP toolkit that is known for its performance and accuracy. StanfordNLP has been declared an official Python interface to CoreNLP. That's a BIG win for this library.

There have been efforts before to create Python wrapper packages for CoreNLP, but nothing beats an official implementation from the authors themselves. This means that the library will see regular updates and improvements.

StanfordNLP takes three lines of code to start using CoreNLP's sophisticated API. Literally, just three lines of code to set it up!

1. Download the CoreNLP package. Open your Linux terminal and type the following command:

wget http://nlp.stanford.edu/software/stanford-corenlp-full-2018-10-05.zip

2. Unzip the downloaded package:

unzip stanford-corenlp-full-2018-10-05.zip

3. Start the CoreNLP server:

java -mx4g -cp "*" edu.stanford.nlp.pipeline.StanfordCoreNLPServer -port 9000 -timeout 15000

Note: CoreNLP requires Java 8 to run. Make sure you have JDK and JRE 1.8.x installed.

Now, make sure StanfordNLP knows where CoreNLP is located. For that, you have to export $CORENLP_HOME as the location of your folder. In my case, the folder was in my home directory itself, so my path looks like this:

export CORENLP_HOME=stanford-corenlp-full-2018-10-05/
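A quick sanity check from Python can save some head-scratching if the variable was exported in a different shell. The sketch below only uses the standard library; the helper name corenlp_home_problem and its messages are my own, and looking for .jar files is just a rough heuristic for "this looks like the unzipped CoreNLP folder".

```python
import os
import tempfile


def corenlp_home_problem(environ=None):
    """Return a short description of what is wrong with CORENLP_HOME, or None if it looks fine."""
    environ = os.environ if environ is None else environ
    home = environ.get("CORENLP_HOME")
    if not home:
        return "CORENLP_HOME is not set"
    if not os.path.isdir(home):
        return "CORENLP_HOME does not point to a directory"
    if not any(name.endswith(".jar") for name in os.listdir(home)):
        return "no .jar files found in CORENLP_HOME"
    return None


# Demonstration on a temporary folder instead of a real CoreNLP download.
with tempfile.TemporaryDirectory() as folder:
    empty_result = corenlp_home_problem({"CORENLP_HOME": folder})
    open(os.path.join(folder, "demo.jar"), "w").close()
    filled_result = corenlp_home_problem({"CORENLP_HOME": folder})

print(empty_result)
print(filled_result)
```

Calling corenlp_home_problem() without arguments checks the real environment of the current process.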

Once the above steps have been taken, you can start the server and make requests from Python code. Below is a complete example of how to start a server, make requests and access the data in the returned object.

a. CoreNLPClient Configuration
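A minimal sketch of the client setup, assuming the CoreNLPClient class from stanfordnlp.server with its annotators, timeout and memory options. As far as I know the client launches its own CoreNLP server by default, so a server that is already running on the same port may get in the way. The annotate_text wrapper, the client_factory parameter and the EchoClient stand-in are my own additions so that the snippet can run without Java; leave client_factory out to talk to the real thing.

```python
ANNOTATORS = ["tokenize", "ssplit", "pos", "lemma", "ner", "depparse", "coref"]


def annotate_text(text, annotators=ANNOTATORS, client_factory=None):
    """Annotate text with CoreNLP and return the annotation object."""
    if client_factory is None:
        # Needs the stanfordnlp package, Java and CORENLP_HOME to be set.
        from stanfordnlp.server import CoreNLPClient
        client_factory = CoreNLPClient
    # The context manager shuts the client down again when the block ends.
    with client_factory(annotators=annotators, timeout=30000, memory="4G") as client:
        return client.annotate(text)


class EchoClient:
    """Stand-in client that just echoes its input (not part of StanfordNLP)."""

    def __init__(self, **options):
        self.options = options

    def __enter__(self):
        return self

    def __exit__(self, *exc_info):
        return False

    def annotate(self, text):
        return {"text": text, "annotators": self.options["annotators"]}


demo_result = annotate_text("Chris wrote a simple sentence.", client_factory=EchoClient)
print(demo_result["annotators"])
```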

b. Dependency parsing and POS
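The annotation returned by the client is a protobuf document. The sketch below reads PoS tags and dependency edges from it, assuming the field names of CoreNLP's protobuf schema as I understand them: sentence, token, word, pos, and basicDependencies.edge with 1-based source (head) and target (dependent) token positions. The helper names and the stand-in annotation are made up; with a running server you would pass the object returned by the client's annotate call.

```python
from types import SimpleNamespace


def pos_tags(ann):
    """Return (word, pos tag) pairs for every token in the annotation."""
    return [(token.word, token.pos)
            for sentence in ann.sentence
            for token in sentence.token]


def dependency_edges(sentence):
    """Return (head, relation, dependent) triples from the basic dependency graph."""
    tokens = sentence.token
    return [(tokens[edge.source - 1].word, edge.dep, tokens[edge.target - 1].word)
            for edge in sentence.basicDependencies.edge]


# Made-up stand-in for an annotated document (not real CoreNLP output).
demo_sentence = SimpleNamespace(
    token=[SimpleNamespace(word="MPs", pos="NNS"),
           SimpleNamespace(word="rallied", pos="VBD")],
    basicDependencies=SimpleNamespace(
        edge=[SimpleNamespace(source=2, target=1, dep="nsubj")]),
)
demo_ann = SimpleNamespace(sentence=[demo_sentence])

print(pos_tags(demo_ann))
print(dependency_edges(demo_ann.sentence[0]))
```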

c. Named entity recognition and co-reference chains

The above examples barely scratch the surface of what CoreNLP can do, and yet it is very interesting: we were able to accomplish everything from basic NLP tasks like part-of-speech tagging to things like named entity recognition, co-reference chain extraction and finding who wrote what in a sentence, in just a few lines of Python code.
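Named entities and co-reference chains can be read from the same annotation object. This sketch assumes the protobuf fields ner on each token and corefChain on the document, where every mention stores a sentenceIndex plus beginIndex and endIndex token offsets (end exclusive); that is my reading of CoreNLP's schema, so double check it against your version. Helper names and the stand-in annotation are invented for illustration.

```python
from types import SimpleNamespace


def named_entities(ann):
    """Return (word, entity type) pairs for tokens that belong to a named entity."""
    return [(token.word, token.ner)
            for sentence in ann.sentence
            for token in sentence.token
            if token.ner != "O"]  # "O" marks tokens outside any entity


def coref_chains(ann):
    """Return each co-reference chain as a list of mention strings."""
    chains = []
    for chain in ann.corefChain:
        mentions = []
        for mention in chain.mention:
            tokens = ann.sentence[mention.sentenceIndex].token
            span = tokens[mention.beginIndex:mention.endIndex]
            mentions.append(" ".join(token.word for token in span))
        chains.append(mentions)
    return chains


def _tok(word, ner="O"):
    return SimpleNamespace(word=word, ner=ner)


# Made-up stand-in for an annotated document (not real CoreNLP output).
demo_ann = SimpleNamespace(
    sentence=[
        SimpleNamespace(token=[_tok("Theresa", "PERSON"), _tok("May", "PERSON"), _tok("spoke")]),
        SimpleNamespace(token=[_tok("She"), _tok("left")]),
    ],
    corefChain=[SimpleNamespace(mention=[
        SimpleNamespace(sentenceIndex=0, beginIndex=0, endIndex=2),
        SimpleNamespace(sentenceIndex=1, beginIndex=0, endIndex=1),
    ])],
)

print(named_entities(demo_ann))
print(coref_chains(demo_ann))
```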

What I like the most here is the ease of use and the increased accessibility this brings when it comes to using CoreNLP in Python.

My thoughts on using StanfordNLP – Pros and cons

Exploring a newly launched library was certainly a challenge. There is hardly any documentation on StanfordNLP! Nevertheless, it was quite an enjoyable learning experience.

Some things that excite me regarding the future of StanfordNLP:

- Its out-of-the-box support for multiple languages

- The fact that it is going to be an official Python interface for CoreNLP. This means its functionality and ease of use will only improve in the future

- It's pretty fast (barring the huge memory footprint)

- Simple configuration in Python

Nevertheless, there are a few kinks to iron out. Below are my thoughts on where StanfordNLP could improve:

- The language models are too large (English is 1.9 GB, Chinese ~1.8 GB)

- The library requires a lot of code to churn out features. Compare that to NLTK, where you can quickly script a prototype; this might not be possible with StanfordNLP

- Visualization features are currently missing. They are useful to have for functions like dependency parsing. StanfordNLP falls short here compared to libraries like spaCy

Be sure to check out the official StanfordNLP documentation.

Be sure to check Official StanfordNLP documentation.

Final notes

There is still one feature that I haven't tried out yet. StanfordNLP allows you to train models on your own annotated data using Word2Vec/FastText embeddings. I would like to explore it in the future and see how effective that functionality is. I'll update the article when the library matures a bit.

Clearly, StanfordNLP is in the beta stage. It will only get better from here, so this is a good time to start using it and get an edge over everyone else.

For now, the fact that amazing toolkits like CoreNLP are coming to the Python ecosystem, and that research giants like Stanford are making an effort to open source their software, makes me optimistic about the future.