Overview

- Do you want to get started with natural language processing (NLP)? This is the perfect first step

- Learn how to tokenize, a key aspect of preparing your data to create NLP models

- We present 6 different ways to tokenize text data

Introduction

Are you fascinated by the amount of text data available on the Internet? Are you looking for ways to work with this text data but not sure where to start? After all, machines recognize numbers, not the letters of our language. And that can be a tricky landscape to navigate in machine learning.

So, how can we manipulate and clean this text data to build a model? The answer lies in the wonderful world of Natural Language Processing (NLP).

Solving an NLP problem is a multi-stage process. We need to clean up the unstructured text data first before we can think about getting to the modeling stage. Data cleansing consists of a few key steps:

- Word tokenization

- Predict parts of speech for each token

- Text stemming

- Identify and remove stop words and much more.

In this article, we will talk about the first step: tokenization. First, we will see what tokenization is and why it is necessary in NLP. Then, we will look at six unique ways to perform tokenization in Python.

This article has no prerequisites. Anyone interested in NLP or data science will be able to follow it. If you are looking for a comprehensive resource for learning NLP, you should consult our comprehensive course:

Table of Contents

- What is tokenization in NLP?

- Why is tokenization required?

- Different methods to perform tokenization in Python

- Tokenization using Python's split() function

- Tokenization using regular expressions

- Tokenization using NLTK

- Tokenization using spaCy

- Tokenization using Keras

- Tokenization using Gensim

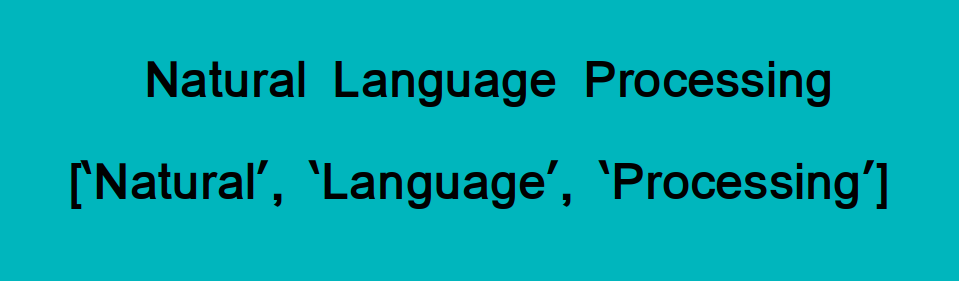

What is tokenization in NLP?

Tokenization is one of the most common tasks when it comes to working with text data. But what does the term 'tokenization' really mean?

Tokenization essentially consists of splitting a phrase, sentence, paragraph, or an entire text document into smaller units, such as individual words or terms. Each of these smaller units is called a token.

Look at the image below to visualize this definition:

Tokens can be words, numbers or punctuation marks. In tokenization, smaller units are created by locating word boundaries. Wait, what are word boundaries?

They are the ending point of one word and the beginning of the next. These tokens are considered a first step for stemming and lemmatization (the next stage in text preprocessing, which we'll cover in the next article).

Sounds hard? Do not worry! The 21st century has made learning and knowledge far more accessible. Any natural language processing course can be used to learn these concepts easily.

Why is tokenization required in NLP?

I want you to think about the English language here. Pick any sentence that comes to mind and keep it in mind as you read this section. This will help you understand the importance of tokenization much more easily.

Before processing a natural language, we need to identify the words that constitute a string of characters. That is why tokenization is the most basic step in working with NLP (text data). This is important because the meaning of the text can easily be interpreted by analyzing the words present in the text.

Let's take an example. Consider the following string:

“This is a cat.”

What do you think will happen after we tokenize this string? We obtain ['This', 'is', 'a', 'cat'].

There are numerous uses for doing this. We can use this tokenized form to:

- Count the number of words in the text.

- Count the frequency of a word, that is, the number of times a particular word is present.

And so on. We can extract much more information, which we will discuss in detail in future articles. For now, it's time to delve into the nitty-gritty of this article: the different methods to perform tokenization in NLP.
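The two uses listed above can be sketched with Python's standard library. This is only an illustration: the token list is a made-up example, and lowercasing before counting is our own choice so that 'This' and 'this' are counted together.

```python
from collections import Counter

# Hypothetical token list, e.g. the result of tokenizing a short text
tokens = ["This", "is", "a", "cat", "and", "this", "is", "a", "dog"]

# Number of words in the text
word_count = len(tokens)

# Frequency of each word, ignoring case
frequencies = Counter(token.lower() for token in tokens)

print(word_count)
print(frequencies.most_common(3))
```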

Methods to perform tokenization in Python

We'll look at six unique ways we can tokenize text data. I have provided the Python code for each method so that you can follow it on your own machine.

1. Tokenization using Python's split() function

Let's start with the split() method since it is the most basic one. It returns a list of strings after breaking the given string by the specified separator. By default, split() breaks a string at whitespace. We can change the separator to anything. Let's check it out.

Word tokenization
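A minimal sketch of this step, assuming the sample text is the SpaceX passage that appears in the output (the variable names are our own choice):

```python
# Sample text assumed from the output; it contains typographic apostrophes
# and two embedded newlines
text = (
    "Founded in 2002, SpaceX’s mission is to enable humans to become a "
    "spacefaring civilization and a multi-planet \nspecies by building a "
    "self-sustaining city on Mars. In 2008, SpaceX’s Falcon 1 became the "
    "first privately developed \nliquid-fuel launch vehicle to orbit the Earth."
)

# With no argument, split() breaks the string on any run of whitespace
tokens = text.split()
print(tokens)
```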

Output : ['Founded', 'in', '2002,', 'SpaceX’s', 'mission', 'is', 'to', 'enable', 'humans',

'to', 'become', 'a', 'spacefaring', 'civilization', 'and', 'a', 'multi-planet',

'species', 'by', 'building', 'a', 'self-sustaining', 'city', 'on', 'Mars.', 'In',

'2008,', 'SpaceX’s', 'Falcon', '1', 'became', 'the', 'first', 'privately',

'developed', 'liquid-fuel', 'launch', 'vehicle', 'to', 'orbit', 'the', 'Earth.']

Sentence tokenization

This is similar to word tokenization. Here, we look at the structure of the sentences in the text. A sentence usually ends with a period (.), so we can use "." as a separator to split the string:
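A minimal sketch, again assuming the SpaceX passage as the sample text. The separator used here is a period followed by a space, which is an assumption on our part; it is consistent with an output where the first sentence loses its period and the last one keeps it.

```python
# Sample text assumed from the output
text = (
    "Founded in 2002, SpaceX’s mission is to enable humans to become a "
    "spacefaring civilization and a multi-planet \nspecies by building a "
    "self-sustaining city on Mars. In 2008, SpaceX’s Falcon 1 became the "
    "first privately developed \nliquid-fuel launch vehicle to orbit the Earth."
)

# Split wherever a period is followed by a space; the separator is discarded
sentences = text.split(". ")
print(sentences)
```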

Output : ['Founded in 2002, SpaceX’s mission is to enable humans to become a spacefaring

civilization and a multi-planet \nspecies by building a self-sustaining city on

Mars',

'In 2008, SpaceX’s Falcon 1 became the first privately developed \nliquid-fuel

launch vehicle to orbit the Earth.']

A major drawback of using Python's split() method is that we can use only one separator at a time. Another thing to keep in mind: in word tokenization, split() did not treat punctuation as a separate token.

2. Tokenization using regular expressions (RegEx)

First, let's understand what a regular expression is. Basically, it is a special character sequence that helps you match or find other strings or sets of strings using that sequence as a pattern.

We can use Python's re library to work with regular expressions. It is part of the standard library, so it comes with every Python installation.

Now, let's do word tokenization and sentence tokenization taking regex into account.

Word tokenization
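A minimal sketch of this step, assuming the SpaceX passage as the sample text and the pattern [\w']+ that is explained below the output:

```python
import re

# Sample text assumed from the output
text = (
    "Founded in 2002, SpaceX’s mission is to enable humans to become a "
    "spacefaring civilization and a multi-planet \nspecies by building a "
    "self-sustaining city on Mars. In 2008, SpaceX’s Falcon 1 became the "
    "first privately developed \nliquid-fuel launch vehicle to orbit the Earth."
)

# Match runs of word characters and straight apostrophes; everything else
# (spaces, commas, hyphens, the typographic apostrophe) acts as a boundary
tokens = re.findall(r"[\w']+", text)
print(tokens)
```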

Output : ['Founded', 'in', '2002', 'SpaceX', 's', 'mission', 'is', 'to', 'enable',

'humans', 'to', 'become', 'a', 'spacefaring', 'civilization', 'and', 'a',

'multi', 'planet', 'species', 'by', 'building', 'a', 'self', 'sustaining',

'city', 'on', 'Mars', 'In', '2008', 'SpaceX', 's', 'Falcon', '1', 'became',

'the', 'first', 'privately', 'developed', 'liquid', 'fuel', 'launch', 'vehicle',

'to', 'orbit', 'the', 'Earth']

The re.findall() function finds all the words that match the pattern passed to it and stores them in a list.

The "\w" represents "any word character", which usually means alphanumeric characters (letters, numbers) and the underscore (_). "+" means one or more times. So [\w']+ indicates that the code should collect word characters and apostrophes until any other character is encountered.

Sentence tokenization

To perform sentence tokenization, we can use the re.split() function. This will split the text into sentences according to the pattern we pass to it.
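A minimal sketch, assuming the SpaceX passage as the sample text. The lookbehind in the pattern is our own assumption: it splits on the whitespace that follows '.', '?' or '!' so the punctuation stays attached to each sentence, whereas splitting directly on [.?!] would drop it.

```python
import re

# Sample text assumed from the output
text = (
    "Founded in 2002, SpaceX’s mission is to enable humans to become a "
    "spacefaring civilization and a multi-planet \nspecies by building a "
    "self-sustaining city on Mars. In 2008, SpaceX’s Falcon 1 became the "
    "first privately developed \nliquid-fuel launch vehicle to orbit the Earth."
)

# Split on whitespace that is preceded by a sentence-ending character
sentence_boundary = re.compile(r"(?<=[.?!])\s+")
sentences = sentence_boundary.split(text)
print(sentences)
```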

Output : ['Founded in 2002, SpaceX’s mission is to enable humans to become a spacefaring

civilization and a multi-planet \nspecies by building a self-sustaining city on

Mars.',

'In 2008, SpaceX’s Falcon 1 became the first privately developed \nliquid-fuel

launch vehicle to orbit the Earth.']

Here, we have an advantage over the split() method since we can pass multiple separators at the same time. In the above code, we use the re.compile() function, to which we pass [.?!]. This means that the sentences will be split as soon as any of these characters is encountered.

Are you interested in reading more about regular expressions? The following resources will help you get started with regular expressions in NLP:

3. Tokenization using NLTK

Now, this is a library that you will appreciate more the more you work with text data. NLTK, short for Natural Language Toolkit, is a library written in Python for symbolic and statistical natural language processing.

You can install NLTK using the following code:

pip install --user -U nltk

NLTK contains a module called tokenize, which covers two sub-categories:

- Word tokenization: We use the word_tokenize() method to split a sentence into tokens or words

- Sentence tokenization: We use the sent_tokenize() method to split a document or paragraph into sentences

Let's see both one by one.

Word tokenization

Output: ['Founded', 'in', '2002', ',', 'SpaceX', '’', 's', 'mission', 'is', 'to', 'enable',

'humans', 'to', 'become', 'a', 'spacefaring', 'civilization', 'and', 'a',

'multi-planet', 'species', 'by', 'building', 'a', 'self-sustaining', 'city', 'on',

'Mars', '.', 'In', '2008', ',', 'SpaceX', '’', 's', 'Falcon', '1', 'became',

'the', 'first', 'privately', 'developed', 'liquid-fuel', 'launch', 'vehicle',

'to', 'orbit', 'the', 'Earth', '.']

Sentence tokenization

Output: ['Founded in 2002, SpaceX’s mission is to enable humans to become a spacefaring

civilization and a multi-planet \nspecies by building a self-sustaining city on

Mars.',

'In 2008, SpaceX’s Falcon 1 became the first privately developed \nliquid-fuel

launch vehicle to orbit the Earth.']

4. Tokenization using the spaCy library

I love the spaCy library. I can't remember the last time I didn't use it when I was working on an NLP project. It's that useful.

spaCy is an open-source library for advanced Natural Language Processing (NLP). It supports more than 49 languages and provides state-of-the-art computation speed.

To install spaCy on Linux:

pip install -U spacy

python -m spacy download en

To install it on other operating systems, go to this link.

So, let's see how we can use the awesomeness of spaCy to perform tokenization. We will use spacy.lang.en, which supports the English language.

Word tokenization
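A minimal sketch of this step, assuming the SpaceX passage as the sample text. It uses the blank English pipeline from spacy.lang.en, which only needs the spaCy package itself.

```python
from spacy.lang.en import English

# Blank English pipeline: tokenizer only, no trained model required
nlp = English()

# Sample text assumed from the output
text = (
    "Founded in 2002, SpaceX’s mission is to enable humans to become a "
    "spacefaring civilization and a multi-planet \nspecies by building a "
    "self-sustaining city on Mars. In 2008, SpaceX’s Falcon 1 became the "
    "first privately developed \nliquid-fuel launch vehicle to orbit the Earth."
)

# Calling the pipeline returns a Doc; each Token exposes its text
doc = nlp(text)
tokens = [token.text for token in doc]
print(tokens)
```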

Output : ['Founded', 'in', '2002', ',', 'SpaceX', '’s', 'mission', 'is', 'to', 'enable',

'humans', 'to', 'become', 'a', 'spacefaring', 'civilization', 'and', 'a',

'multi', '-', 'planet', '\n', 'species', 'by', 'building', 'a', 'self', '-',

'sustaining', 'city', 'on', 'Mars', '.', 'In', '2008', ',', 'SpaceX', '’s',

'Falcon', '1', 'became', 'the', 'first', 'privately', 'developed', '\n',

'liquid', '-', 'fuel', 'launch', 'vehicle', 'to', 'orbit', 'the', 'Earth', '.']

Sentence tokenization
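A minimal sketch, assuming the SpaceX passage as the sample text and the spaCy v3 way of adding the rule-based sentencizer by name (older versions add the component differently). Stripping the trailing whitespace of each sentence is our own choice.

```python
from spacy.lang.en import English

# Blank English pipeline plus the rule-based sentence splitter
nlp = English()
nlp.add_pipe("sentencizer")  # assumes spaCy v3 or later

# Sample text assumed from the output
text = (
    "Founded in 2002, SpaceX’s mission is to enable humans to become a "
    "spacefaring civilization and a multi-planet \nspecies by building a "
    "self-sustaining city on Mars. In 2008, SpaceX’s Falcon 1 became the "
    "first privately developed \nliquid-fuel launch vehicle to orbit the Earth."
)

# doc.sents yields one Span per detected sentence
doc = nlp(text)
sentences = [sent.text.strip() for sent in doc.sents]
print(sentences)
```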

Output : ['Founded in 2002, SpaceX’s mission is to enable humans to become a spacefaring

civilization and a multi-planet \nspecies by building a self-sustaining city on

Mars.',

'In 2008, SpaceX’s Falcon 1 became the first privately developed \nliquid-fuel

launch vehicle to orbit the Earth.']

spaCy is quite fast compared to other libraries when performing NLP tasks (yes, even NLTK). I encourage you to listen to the following DataHack Radio podcast to learn the story behind how spaCy was created and where you can use it:

And here is a detailed tutorial to get started with spaCy:

5. Tokenization using Keras

Keras! It is one of the most popular deep learning frameworks in the industry right now. It is an open-source neural network library for Python. Keras is very easy to use and can also run on top of TensorFlow.

In the context of NLP, we can use Keras to clean up the unstructured text data that we normally collect.

You can install Keras on your machine using just one line of code:

pip install Keras

Let's get to work. To perform word tokenization using Keras, we use the text_to_word_sequence method from the keras.preprocessing.text module.

Let's see Keras in action.

Word tokenization

Output : ['founded', 'in', '2002', 'spacex’s', 'mission', 'is', 'to', 'enable', 'humans',

'to', 'become', 'a', 'spacefaring', 'civilization', 'and', 'a', 'multi',

'planet', 'species', 'by', 'building', 'a', 'self', 'sustaining', 'city', 'on',

'mars', 'in', '2008', 'spacex’s', 'falcon', '1', 'became', 'the', 'first',

'privately', 'developed', 'liquid', 'fuel', 'launch', 'vehicle', 'to', 'orbit',

'the', 'earth']

Keras lowercases all the letters before tokenizing them. That saves us quite a bit of time, as you can imagine!
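To make this behaviour concrete, here is a plain-Python approximation of the steps involved: lowercase the text, replace punctuation with spaces, then split. This is not the Keras implementation; the helper name simple_word_sequence is hypothetical, and the filter string is assumed to mirror the Keras default.

```python
# Characters to strip out; assumed to mirror the default Keras filter string
FILTERS = '!"#$%&()*+,-./:;<=>?@[\\]^_`{|}~\t\n'


def simple_word_sequence(text, filters=FILTERS, lower=True, split=" "):
    """Hypothetical helper: lowercase, blank out filtered characters, split."""
    if lower:
        text = text.lower()
    # Map every filtered character to the separator
    table = str.maketrans({ch: split for ch in filters})
    # Drop the empty strings left by consecutive separators
    return [tok for tok in text.translate(table).split(split) if tok]


# Sample text assumed from the output
text = (
    "Founded in 2002, SpaceX’s mission is to enable humans to become a "
    "spacefaring civilization and a multi-planet \nspecies by building a "
    "self-sustaining city on Mars. In 2008, SpaceX’s Falcon 1 became the "
    "first privately developed \nliquid-fuel launch vehicle to orbit the Earth."
)

tokens = simple_word_sequence(text)
print(tokens)
```

Note that the typographic apostrophe is not in the filter string, which is why 'spacex’s' stays in one piece.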

6. Tokenization using Gensim

The final tokenization method that we will cover here is the use of the Gensim library. It is an open-source library for unsupervised topic modeling and natural language processing, and it is designed to automatically extract semantic topics from a given document.

This is how you can install Gensim:

pip install gensim

We can import the tokenize method from the gensim.utils module to perform word tokenization.

Word tokenization

Output : ['Founded', 'in', 'SpaceX', 's', 'mission', 'is', 'to', 'enable', 'humans', 'to',

'become', 'a', 'spacefaring', 'civilization', 'and', 'a', 'multi', 'planet',

'species', 'by', 'building', 'a', 'self', 'sustaining', 'city', 'on', 'Mars',

'In', 'SpaceX', 's', 'Falcon', 'became', 'the', 'first', 'privately',

'developed', 'liquid', 'fuel', 'launch', 'vehicle', 'to', 'orbit', 'the',

'Earth']

Sentence tokenization

To perform sentence tokenization, we use the split_sentences method from the gensim.summarization.textcleaner module (the summarization package was removed in Gensim 4.0, so this requires an earlier version):

Output : ['Founded in 2002, SpaceX’s mission is to enable humans to become a spacefaring

civilization and a multi-planet ',

'species by building a self-sustaining city on Mars.',

'In 2008, SpaceX’s Falcon 1 became the first privately developed ',

'liquid-fuel launch vehicle to orbit the Earth.']

You may have noticed that Gensim is quite strict about punctuation. It splits whenever a punctuation mark is encountered. In sentence splitting as well, Gensim split the text on encountering "\n", while the other libraries ignored it.

Final notes

Tokenization is a critical step in the overall NLP pipeline. We can't just jump to the model-building part without first cleaning up the text.

In this article, we saw six different methods of tokenization (word as well as sentence) for a given text. There are other ways too, but these are good enough to get you started.

I'll cover other text cleaning steps like removing stop words, part-of-speech tagging and recognizing named entities in my future posts. Until then, keep learning!