Photo by Daddy Mohlala on Unsplash

"Data is water; purifying it to make it drinkable is the job of the data analyst." - Kashish Rastogi

Table of Contents:

- Problem Statement

- Description of data

- Text cleaning with NLP

- Find if the text has: with spaCy

- Text cleanup with preprocessor library

- Sentiment analysis of the data

- Data visualization

I am using the Twitter data that is available on the DataPeaker platform.

Importing libraries

import pandas as pd
import re
import plotly.express as px
import nltk
import spacy

I am loading the small spaCy model. spaCy models can be downloaded in three sizes (small, medium and large), depending on your requirements.

nlp = spacy.load('en_core_web_sm')

The data looks like this

df = pd.read_csv(r'location of file')
# Drop the id column, which is not needed for the analysis
del df['id']
df.head(5)

Problem Statement

The goal is to find the sentiment of the text data. The text comes from customers of various tech firms that make phones, laptops, gadgets, etc. The task is to identify whether each tweet expresses a negative, positive or neutral sentiment towards the company.

Description of data

Label: The label column has 2 unique values, 0 and 1.

Tweet: The tweet column has the text provided by the customers.

Data manipulation

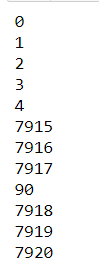

Find the shape of the data

There are 2 columns and 7920 rows.

df.shape

Tweets classification

fig = px.pie(df, names=df.label, hole=0.7, title="Tweets Classification",
             height=250, color_discrete_sequence=px.colors.qualitative.T10)
fig.update_layout(margin=dict(t=100, b=40, l=60, r=40),
                  plot_bgcolor="#2d3035", paper_bgcolor="#2d3035",
                  title_font=dict(size=25, color="#a5a7ab", family="Lato, sans-serif"),
                  font=dict(color="#8a8d93"),
                  )

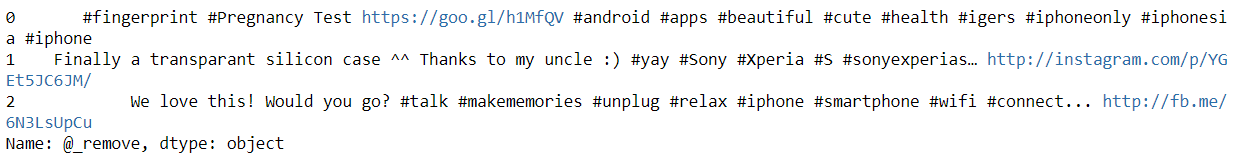

Take a moment and look at the data. What do you see?

Image source: https://unsplash.com/photos/LDcC7aCWVlo

- There is no markup to parse; it is plain text (yay!).

- There are many things to filter out, like:

  - Twitter handles, which are masked (@Username) and of no use to us

  - Hashtags

  - Links

  - Special characters

- The text contains numerical values.

- There are many typos and contractions in the text.

- There are many company names (Sony, Apple).

- The text is not in lowercase.

Let's clean up the text

Find if the text has:

- Twitter usernames

- Hashtags

- Numerical values

- Links

Remove from the text:

- Twitter usernames, because they provide no additional information here; for privacy reasons, the usernames were replaced with dummy names.

- Hashtag words, because they add no useful meaning to the text for sentiment analysis.

- URL links, because they add no information to the text either.

Also removing:

- Punctuation, for clean text

- Words shorter than 3 characters, which are safe to remove because they are words like "am" or "is" that carry no specific meaning or function in the text

- Stopwords, which are usually good candidates for removal

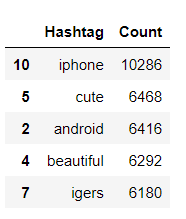

Removing usernames from the text

Write a function that removes usernames from the text using the re.findall() function, selecting the words that start with '@'.

def remove_pattern(input_txt):
    # Find every @username (the "@" plus the word characters after it)
    r = re.findall(r"@\w+", input_txt)
    for i in r:
        # Escape the match so it is treated as literal text, not as a pattern
        input_txt = re.sub(re.escape(i), '', input_txt)
    return input_txt

df['@_remove'] = df['tweet'].apply(remove_pattern)
df['@_remove'][:3]

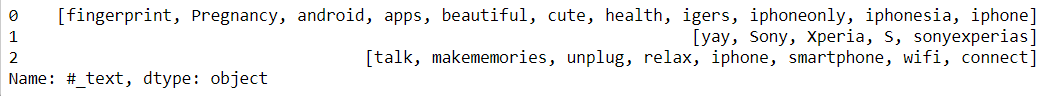
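As a minimal, standalone sketch of the same idea, the snippet below removes mentions from a single string with one regular expression. The sample tweet and the helper name remove_mentions are made up for illustration, and collapsing the leftover whitespace is an extra step that the article does not perform.

```python
import re

# "@" followed by one or more word characters (letters, digits, underscore)
MENTION_RE = re.compile(r"@\w+")


def remove_mentions(text: str) -> str:
    """Remove @mentions and collapse the whitespace they leave behind."""
    without_mentions = MENTION_RE.sub("", text)
    return " ".join(without_mentions.split())


sample = "@user1 my new phone is great, thanks @user2!"  # invented example tweet
cleaned = remove_mentions(sample)
print(cleaned)
```

Note that this pattern would also strip the domain part of an e-mail address, so it is only a reasonable choice when the text is known to be tweets.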

Finding hashtags in the text

Write a function that extracts hashtags from the text using the re.findall() function, selecting the words that start with '#' so that they can later be stored in a data frame.

def hashtag_extract(x):
    # Start a fresh list on every call so results from separate calls do not mix
    hashtags = []
    # Loop over the tweets
    for i in x:
        ht = re.findall(r"#(\w+)", i)
        hashtags.append(ht)
    return hashtags

After passing the tweets through the function and extracting the hashtags, we can visualize how many hashtags there are in positive and negative tweets.

# extracting hashtags from neg/pos tweets
dff_0 = hashtag_extract(df['tweet'][df['label'] == 0])
dff_1 = hashtag_extract(df['tweet'][df['label'] == 1])
# extracting hashtags from all tweets
dff_all = hashtag_extract(df['tweet'])
# unnesting list
dff_0 = sum(dff_0, [])
dff_1 = sum(dff_1, [])
dff_all = sum(dff_all, [])
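The extraction step can also be sketched without pandas, which makes the grouping by label easier to see. The rows below are invented (label, tweet) pairs, and hashtags_by_label is a hypothetical helper, not part of the article's code.

```python
import re
from collections import defaultdict

# "#" followed by word characters; the group captures the tag without the "#"
HASHTAG_RE = re.compile(r"#(\w+)")

# Invented (label, tweet) pairs standing in for the real data
rows = [
    (0, "Unboxing today #iphone #apple"),
    (1, "Screen update #samsung"),
    (0, "Camera test #iphone"),
]


def hashtags_by_label(pairs):
    """Collect a flat list of hashtags for each label."""
    grouped = defaultdict(list)
    for label, tweet in pairs:
        grouped[label].extend(HASHTAG_RE.findall(tweet))
    return dict(grouped)


tags = hashtags_by_label(rows)
print(tags)
```

Using extend() keeps each list flat from the start, so no separate unnesting step is needed.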

Counting the most frequent hashtags used when label = 0. FreqDist tells us how many times each word appeared in the whole document.

data_0 = nltk.FreqDist(dff_0)

data_0 = pd.DataFrame({'Hashtag': list(data_0.keys()),

'Count': list(data_0.values())}).sort_values(by='Count', ascending=False)

data_0[:5]
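To see what a frequency distribution produces, here is a small sketch using Python's collections.Counter, the standard-library class that nltk.FreqDist builds on. The hashtag list is made up for illustration.

```python
from collections import Counter

# Invented hashtags, as they might look after extraction and unnesting
tags = ["iphone", "apple", "iphone", "sony", "iphone", "apple"]

counts = Counter(tags)            # maps each hashtag to its number of occurrences
top_two = counts.most_common(2)   # (hashtag, count) pairs, most frequent first
print(top_two)
```

The (hashtag, count) pairs returned by most_common() have the same shape as the Hashtag and Count columns built above.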

If you want to know more about Plotly and how to use it, visit this blog. Each chart is well explained, along with the different parameters you need to keep in mind when plotting.

fig = px.bar(data_0[:30], x='Hashtag', y='Count', height=250,
             title="Top 30 hashtags",
             color_discrete_sequence=px.colors.qualitative.T10)
fig.update_yaxes(showgrid=False)
fig.update_xaxes(categoryorder="total descending")
fig.update_traces(hovertemplate=None)
fig.update_layout(margin=dict(t=100, b=0, l=60, r=40),
                  hovermode="x unified",
                  xaxis_tickangle=300,
                  xaxis_title=" ", yaxis_title=" ",
                  plot_bgcolor="#2d3035", paper_bgcolor="#2d3035",
                  title_font=dict(size=25, color="#a5a7ab", family="Lato, sans-serif"),
                  font=dict(color="#8a8d93")
                  )

Passing the text to spaCy

sentences = nlp(str(text))

Finding numerical values in the text

spaCy provides the token attribute like_num, which indicates whether a token resembles a number.

for token in sentences:
    if token.like_num:
        text_num = token.text
        print(text_num)

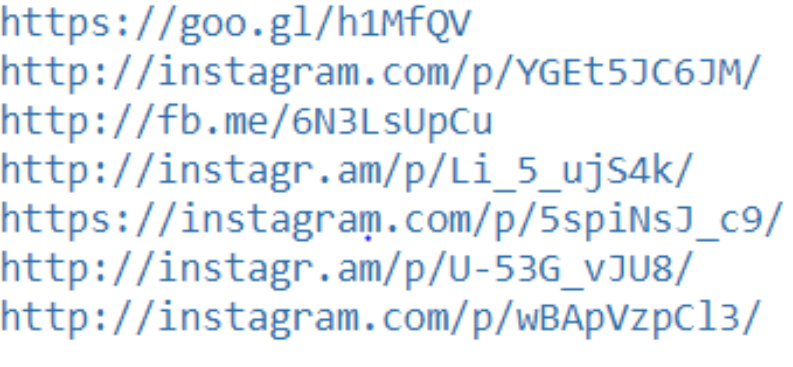

Finding URL links in the text

spaCy provides the token attribute like_url, which indicates whether a token resembles a URL.

# find links
for token in sentences:
    if token.like_url:
        text_links = token.text
        print(text_links)
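For readers who want to try the idea without loading a spaCy model, the following is a rough regex-based stand-in. It is not spaCy's implementation: the two patterns and the helper find_numbers_and_links are assumptions made for this sketch, and they cover fewer cases than like_num and like_url (for example, spelled-out numbers are not detected).

```python
import re

# Digits, optionally grouped with "." or "," (e.g. 2, 3.5, 1,299.50)
NUMBER_RE = re.compile(r"^\d+([.,]\d+)*$")
# Tokens that start with http://, https:// or www.
URL_RE = re.compile(r"^(https?://|www\.)\S+$", re.IGNORECASE)


def find_numbers_and_links(text):
    """Split on whitespace and sort tokens into numbers and links."""
    numbers, links = [], []
    for token in text.split():
        if URL_RE.match(token):
            links.append(token)
        elif NUMBER_RE.match(token):
            numbers.append(token)
    return numbers, links


sample = "Got 2 phones for 1,299.50 see https://example.com/deal now"  # invented text
numbers, links = find_numbers_and_links(sample)
print(numbers, links)
```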

There is a Python library, tweet-preprocessor, that helps to clean up tweet text; its documentation describes the available options.

Currently, this library supports cleaning, tokenizing and parsing:

- URLs

- Hashtags

- Mentions

- Reserved words (RT, FAV)

- Emojis

- Emoticons

Importing the library

!pip install tweet-preprocessor
import preprocessor as p

Define a function to clean up the text

def preprocess_tweet(row):
    text = row['tweet']
    # p.clean() strips the elements listed above from the tweet
    text = p.clean(text)
    return text

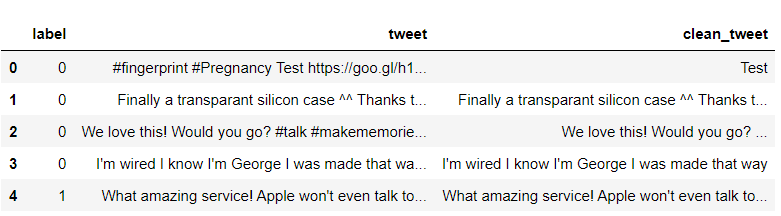

df['clean_tweet'] = df.apply(preprocess_tweet, axis=1)
df[:6]

As we can see, the clean_tweet column contains only text; all usernames, hashtags and URL links have been removed.

Some cleaning steps still remain:

- Lowercasing all the text

- Removing punctuation

- Removing numbers

Code:

def preprocessing_text(text):
    # Make lowercase
    text = text.str.lower()
    # Remove punctuation (anything that is not a word character or whitespace)
    text = text.str.replace(r'[^\w\s]', '', regex=True)
    # Remove digits
    text = text.str.replace(r'\d+', '', regex=True)
    return text

pd.set_option('display.max_colwidth', 500)

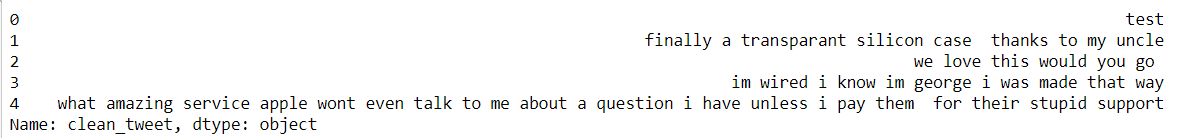

df['clean_tweet'] = preprocessing_text(df['clean_tweet'])

df['clean_tweet'][:5]
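The same three steps can be checked on a single string with the re module. This is only a sketch: the function name normalize and the sample sentence are made up, and the final whitespace collapse is an extra step not present in the pandas version above.

```python
import re


def normalize(text: str) -> str:
    """Lowercase the text, then strip punctuation and digits."""
    text = text.lower()
    text = re.sub(r"[^\w\s]", "", text)  # drop anything that is not a word character or whitespace
    text = re.sub(r"\d+", "", text)      # drop runs of digits
    return " ".join(text.split())        # collapse the gaps left behind


result = normalize("My NEW phone cost $999!!!  Worth it?")
print(result)
```

Because the underscore counts as a word character, the punctuation pattern leaves underscores in place.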

Our text is clean; now let's remove the stopwords.

What are stopwords? Do you always need to remove them?

Stopwords are the most common words in any natural language. Stopwords such as I, am, your, when, etc. do not add any additional information to the text.

There is no need to remove stopwords every time; it depends on the case study. Here we are finding the sentiment of the text, so we don't need the stopwords.

from nltk.corpus import stopwords

# Remove stop words

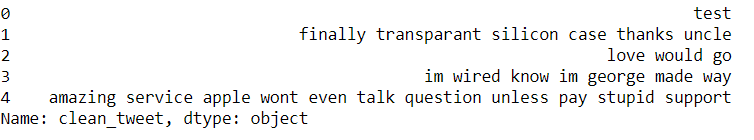

stop = stopwords.words('english')

df['clean_tweet'] = df['clean_tweet'].apply(lambda x: ' '.join([word for word in x.split() if word not in (stop)]))

df['clean_tweet'][:5]
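Whether stopword removal helps really does depend on the task. The sketch below uses a tiny, hypothetical stopword set to show one side effect worth keeping in mind: NLTK's English list also contains negations such as "not" and "no", so removing stopwords can drop words that carry sentiment.

```python
# Hypothetical mini stopword list for illustration only; NLTK's English list is
# much longer and likewise includes negations such as "not" and "no".
STOPWORDS = {"i", "am", "the", "is", "this", "not", "with"}


def remove_stopwords(text, stopwords=STOPWORDS):
    """Keep only the words that are not in the stopword set."""
    return " ".join(word for word in text.split() if word not in stopwords)


filtered = remove_stopwords("i am not happy with this phone")
print(filtered)
```

Here the negation disappears along with the other stopwords, which is why some sentiment pipelines keep words like "not" in the text.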

After implementing all the steps, we have our clean text. Now, what can we do with it?

- Can we find out what words are used frequently?

- What words are used most negatively / positively in the text?

Tokenize words and calculate the frequency and word count and store them in a data frame.

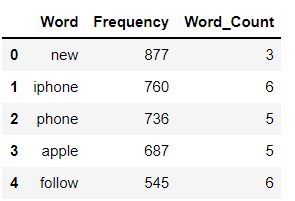

A frequency distribution records the number of times each word has occurred. For instance, the word "new" is used most frequently in the full data, followed by other words such as iPhone, phone, etc.

a = df['clean_tweet'].str.cat(sep=' ')

words = nltk.tokenize.word_tokenize(a)

word_dist = nltk.FreqDist(words)

dff = pd.DataFrame(word_dist.most_common(),

columns=['Word', 'Frequency'])

dff['Word_Count'] = dff.Word.apply(len)

dff[:5]

fig = px.histogram(dff[:20], x='Word', y='Frequency', height=300,
                   title="Most common 20 words in tweets",
                   color_discrete_sequence=px.colors.qualitative.T10)
fig.update_yaxes(showgrid=False)
fig.update_xaxes(categoryorder="total descending")
fig.update_traces(hovertemplate=None)
fig.update_layout(margin=dict(t=100, b=0, l=70, r=40),
                  hovermode="x unified",
                  xaxis_tickangle=360,
                  xaxis_title=" ", yaxis_title=" ",
                  plot_bgcolor="#2d3035", paper_bgcolor="#2d3035",
                  title_font=dict(size=25, color="#a5a7ab", family="Lato, sans-serif"),
                  font=dict(color="#8a8d93"),
                  )

fig = px.bar(dff.tail(10), x='Word', y='Frequency', height=300,
             title="Least common 10 words in tweets",
             color_discrete_sequence=px.colors.qualitative.T10)
fig.update_yaxes(showgrid=False)
fig.update_xaxes(categoryorder="total descending")
fig.update_traces(hovertemplate=None)
fig.update_layout(margin=dict(t=100, b=0, l=70, r=40),
                  hovermode="x unified",
                  xaxis_title=" ", yaxis_title=" ",
                  plot_bgcolor="#2d3035", paper_bgcolor="#2d3035",
                  title_font=dict(size=25, color="#a5a7ab", family="Lato, sans-serif"),
                  font=dict(color="#8a8d93"),
                  )

# Assumption: plot how many words have each length (the Word_Count column).
# The variable `a` defined earlier is one long string, which px.bar cannot plot.
a = dff['Word_Count'].value_counts()
fig = px.bar(a, height=300, title="Frequency of words in tweets",
             color_discrete_sequence=px.colors.qualitative.T10)
fig.update_yaxes(showgrid=False)
fig.update_xaxes(categoryorder="total descending")
fig.update_traces(hovertemplate=None)
fig.update_layout(margin=dict(t=100, b=0, l=70, r=40), showlegend=False,
                  hovermode="x unified",
                  xaxis_tickangle=360,
                  xaxis_title=" ", yaxis_title=" ",
                  plot_bgcolor="#2d3035", paper_bgcolor="#2d3035",
                  title_font=dict(size=25, color="#a5a7ab", family="Lato, sans-serif"),
                  font=dict(color="#8a8d93"),
                  )

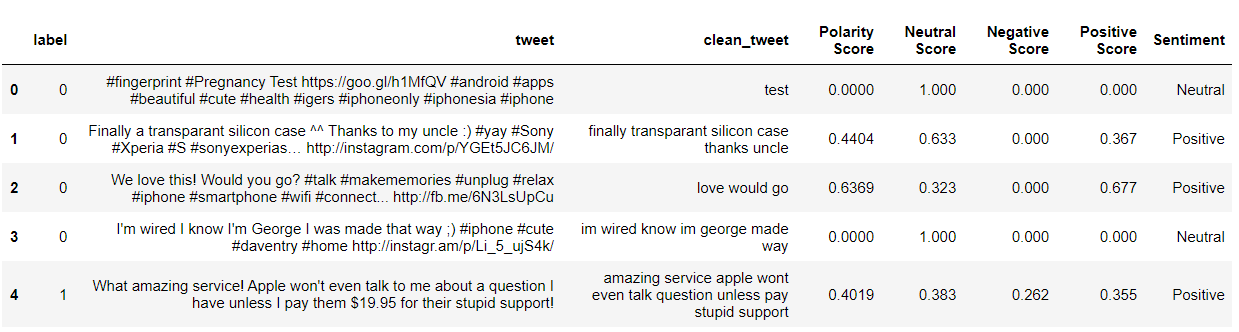

Sentiment analysis

from nltk.sentiment.vader import SentimentIntensityAnalyzer

# VADER needs its lexicon; download it once
nltk.download('vader_lexicon')

# Sentiment Analysis
SIA = SentimentIntensityAnalyzer()
df["clean_tweet"] = df["clean_tweet"].astype(str)

# Applying Model, Variable Creation
df['Polarity Score'] = df["clean_tweet"].apply(lambda x: SIA.polarity_scores(x)['compound'])
df['Neutral Score'] = df["clean_tweet"].apply(lambda x: SIA.polarity_scores(x)['neu'])
df['Negative Score'] = df["clean_tweet"].apply(lambda x: SIA.polarity_scores(x)['neg'])
df['Positive Score'] = df["clean_tweet"].apply(lambda x: SIA.polarity_scores(x)['pos'])

# Converting the -1 to 1 compound score to a categorical variable
df['Sentiment'] = ''
df.loc[df['Polarity Score'] > 0, 'Sentiment'] = 'Positive'
df.loc[df['Polarity Score'] == 0, 'Sentiment'] = 'Neutral'
df.loc[df['Polarity Score'] < 0, 'Sentiment'] = 'Negative'
df[:5]
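The last step, turning the compound score into a category, can be sketched as a small standalone function. The name label_sentiment and the threshold parameter are assumptions added for illustration; a threshold of 0 reproduces the rule used above, while the VADER documentation suggests 0.05 as a typical cut-off for treating weak scores as neutral.

```python
def label_sentiment(compound: float, threshold: float = 0.0) -> str:
    """Map a compound score in [-1, 1] to Positive, Negative or Neutral."""
    if compound > threshold:
        return "Positive"
    if compound < -threshold:
        return "Negative"
    return "Neutral"


scores = [0.62, 0.0, -0.31]  # invented compound scores
labels = [label_sentiment(score) for score in scores]
print(labels)
```

With the default threshold only a score of exactly 0 is neutral, so even a very weak score such as 0.03 is labelled Positive unless a wider band is chosen.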

Classification of tweets based on sentiment

fig_pie = px.pie(df, names="Sentiment", title="Tweets Classification", height=250,
                 hole=0.7, color_discrete_sequence=px.colors.qualitative.T10)
fig_pie.update_traces(textfont=dict(color="#fff"))
fig_pie.update_layout(margin=dict(t=80, b=30, l=70, r=40),
                      plot_bgcolor="#2d3035", paper_bgcolor="#2d3035",
                      title_font=dict(size=25, color="#a5a7ab", family="Lato, sans-serif"),
                      font=dict(color="#8a8d93"),
                      legend=dict(orientation="h", yanchor="bottom", y=1, xanchor="right", x=0.8)
                      )

Conclusion:

We saw how to clean text data that contains Twitter usernames, hashtags, URL links and digits, and how to run sentiment analysis on it.

We also saw how to find out whether the text contains URL links or digits with the help of spaCy.

About the Author:

You can connect with me

The media shown in this article is not the property of DataPeaker and is used at the author's discretion.