This article was published as part of the Data Science Blogathon

Introduction

In natural language processing, feature extraction is one of the essential steps for better understanding the context of what we are dealing with. After cleaning and normalizing the initial text, we must transform it into features that can be used for modeling. We use a particular method to assign weights to specific words within our document before modeling them. We opt for a numerical representation of individual words, since it is easy for a computer to process numbers; in such cases, we turn to word embeddings.

Source: https://www.analyticsvidhya.com/blog/2020/06/nlp-project-information-extraction/

In this article, we will discuss the various methods of word embedding and feature extraction that are practiced in natural language processing.

Feature extraction:

Bag of words:

In this method, we treat each document as a collection, or bag, that contains all of its words. The idea is to analyze the documents. A document here refers to a unit of text: if we want to find all the negative tweets posted during the pandemic, every tweet is a document. To obtain the bag of words, we always carry out the previous steps first, such as cleaning, stemming, lemmatization, etc. Then we generate the set of all the words that remain before sending it to the model.

"Entrance is the best part of football" -> {'entrance', 'best', 'part', 'football'}
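The step from a raw document to its bag can be sketched in a few lines of Python. This is only a minimal illustration: the sample tweet and the tiny stop-word list are made up for the example, and stemming and lemmatization are skipped to keep it short.

```python
import re

# Hypothetical stop-word list, kept tiny for illustration only.
STOP_WORDS = {"is", "the", "of", "a", "an", "and", "this"}


def bag_of_words(document):
    """Lowercase the text, split it into words, and drop stop words.

    Returns the set of remaining words, so repeats are collapsed.
    """
    tokens = re.findall(r"[a-z']+", document.lower())
    return {token for token in tokens if token not in STOP_WORDS}


# Hypothetical tweet used as a single document.
bag = bag_of_words("The lockdown is the worst part of this year")
print(sorted(bag))
```

Because the result is a set, it records only which words occur, not how often they occur.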

Words can be repeated within our document. A better representation is a vector form that tells us how many times each word appears in a document. This is called the document-term matrix and is shown below:

Source: https://qphs.fs.quoracdn.net/main-qimg-27639a9e2f88baab88a2c575a1de2005

It tells us about the relationship between a document and the terms. Each value in the table is a term frequency. To find the similarity between documents, we use the cosine similarity measure.
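As a rough sketch of how such a document-term matrix could be built, the snippet below counts terms with the standard library only. The three documents are hypothetical and assumed to be already cleaned.

```python
from collections import Counter

# Hypothetical, already-cleaned documents (one string per document).
documents = [
    "messi scores goal",
    "messi wins cup",
    "fans celebrate cup cup",
]

# Vocabulary: every distinct term, in a fixed (sorted) column order.
vocabulary = sorted({term for doc in documents for term in doc.split()})

# One row per document, one column per term; each cell is a term count.
matrix = []
for doc in documents:
    counts = Counter(doc.split())
    matrix.append([counts[term] for term in vocabulary])

print(vocabulary)
for row in matrix:
    print(row)
```

Each row is the vector form of one document, and a repeated word such as "cup" in the last document shows up as a count greater than 1.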

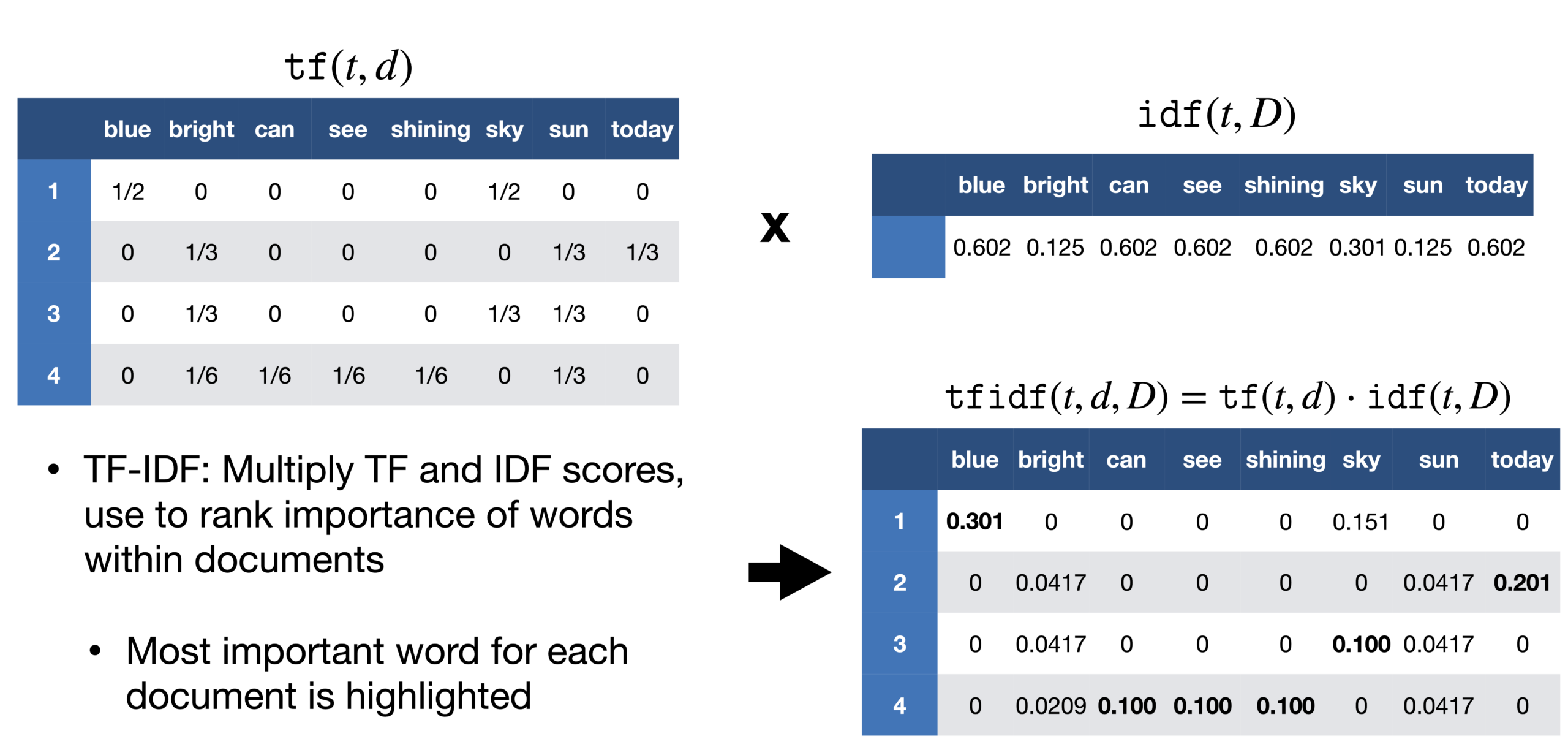
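Cosine similarity between two rows of a document-term matrix can be computed as the dot product divided by the product of the vector lengths. The following is a minimal sketch; the three count vectors are hypothetical rows, not data from this article.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length count vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention chosen here for an empty document
    return dot / (norm_u * norm_v)


# Hypothetical rows of a document-term matrix over a 4-term vocabulary.
doc_a = [1, 1, 1, 0]
doc_b = [1, 1, 0, 1]
doc_c = [0, 0, 0, 2]

print(cosine_similarity(doc_a, doc_b))  # two of three terms shared
print(cosine_similarity(doc_a, doc_c))  # no terms shared
```

Documents that share no terms get a similarity of 0, while a document compared with itself gets 1, regardless of document length.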

TF-IDF:

One problem with the bag of words approach is that it treats all words equally, yet in any document certain words are likely to be repeated more frequently than others. In a report on Messi's victory in the Copa América, the word Messi would be repeated often. We cannot give Messi the same weight as any other word in that document. If we take each sentence of the report as a document, we can count the number of documents in which Messi appears. This count is called the document frequency.

Then we divide the term frequency by the document frequency of that word. The resulting weight grows with how often the term appears in that document and shrinks with the number of documents in which it appears. This gives us TF-IDF (term frequency-inverse document frequency). The idea is to assign weights to words that tell us how important they are in the document.
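To make the weighting concrete, here is a small sketch of one common TF-IDF formulation. Note the assumptions: the corpus is invented, the term frequency is normalized by document length, and the inverse document frequency is log-scaled as log(N / df), which is a widely used variant of the plain ratio described above rather than the only possible definition.

```python
import math
from collections import Counter

# Hypothetical corpus: each sentence of a report is treated as one document.
documents = [
    "messi lifts the trophy",
    "messi scores the winning goal",
    "the crowd cheers",
]
tokenized = [doc.split() for doc in documents]
n_docs = len(tokenized)

# Document frequency: the number of documents that contain each term.
doc_freq = Counter(term for tokens in tokenized for term in set(tokens))


def tf_idf(term, tokens):
    """Length-normalized term frequency times log-scaled inverse document frequency.

    Assumes the term occurs somewhere in the corpus, so doc_freq[term] > 0.
    """
    tf = tokens.count(term) / len(tokens)
    idf = math.log(n_docs / doc_freq[term])
    return tf * idf


for term in ("the", "messi", "trophy"):
    print(term, round(tf_idf(term, tokenized[0]), 3))
```

In this toy corpus "the" appears in every document, so its weight drops to zero, while "trophy", which appears in only one document, is weighted higher than "messi".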

Source: https://sci2lab.github.io/ml_tutorial/tfidf/

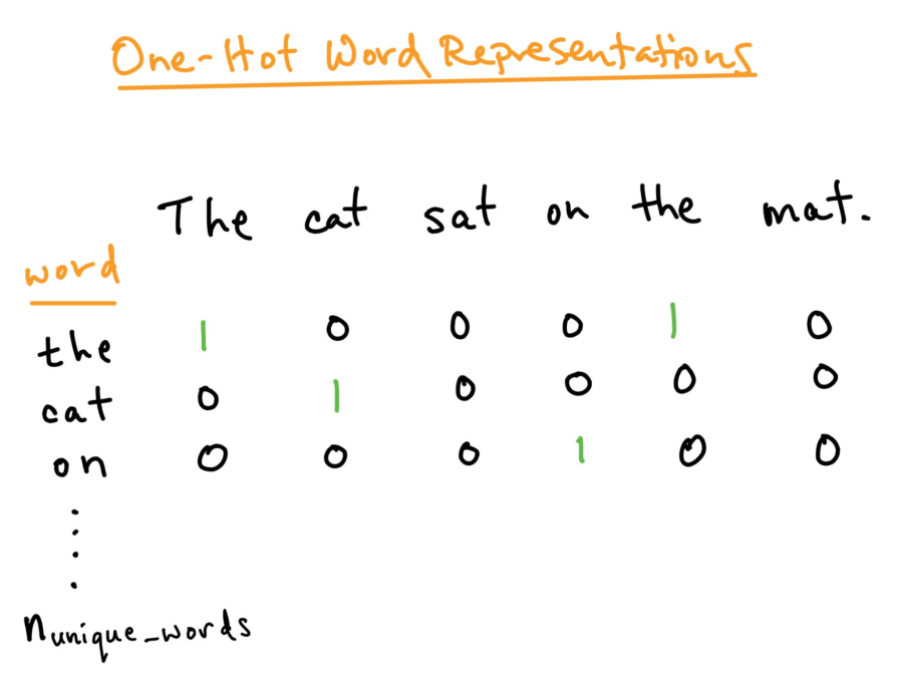

One-hot encoding:

For a better analysis of the text that we want to process, we must create a numerical representation of each word. This can be done using the one-hot encoding method. Here we treat each word as a class: in a document, wherever the word occurs, we assign 1 to it in the table, and all other words in that document get 0. This is similar to the bag of words, but here each bag holds just a single word.
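A one-hot vector can be produced with nothing more than an index lookup. The sketch below assumes a hypothetical, already-tokenized sentence and a vocabulary sorted alphabetically; the column order is an arbitrary choice.

```python
# Hypothetical cleaned and tokenized sentence.
tokens = ["messi", "wins", "the", "cup"]

# Fixed column order for the vocabulary (sorted alphabetically here).
vocabulary = sorted(set(tokens))
index = {word: position for position, word in enumerate(vocabulary)}


def one_hot(word):
    """Return a vector with 1 at the word's position and 0 everywhere else."""
    vector = [0] * len(vocabulary)
    vector[index[word]] = 1
    return vector


for token in tokens:
    print(token, one_hot(token))
```

Every vector is as long as the vocabulary and contains a single 1, which is why this representation becomes unwieldy as the vocabulary grows.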

Source: https://towardsdatascience.com/word-embedding-in-nlp-one-hot-encoding-and-skip-gram-neural-network-81b424da58f2

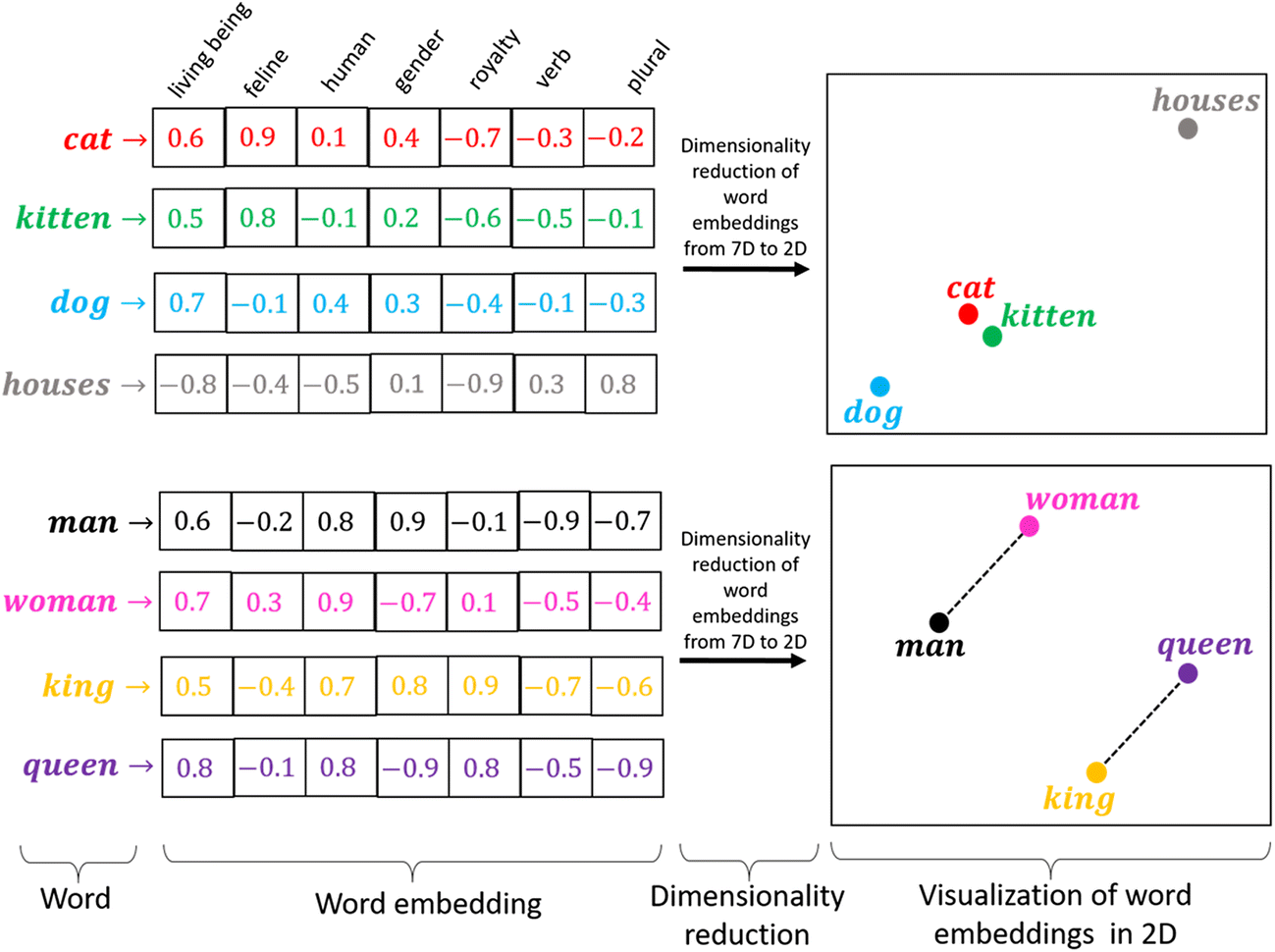

Word embedding:

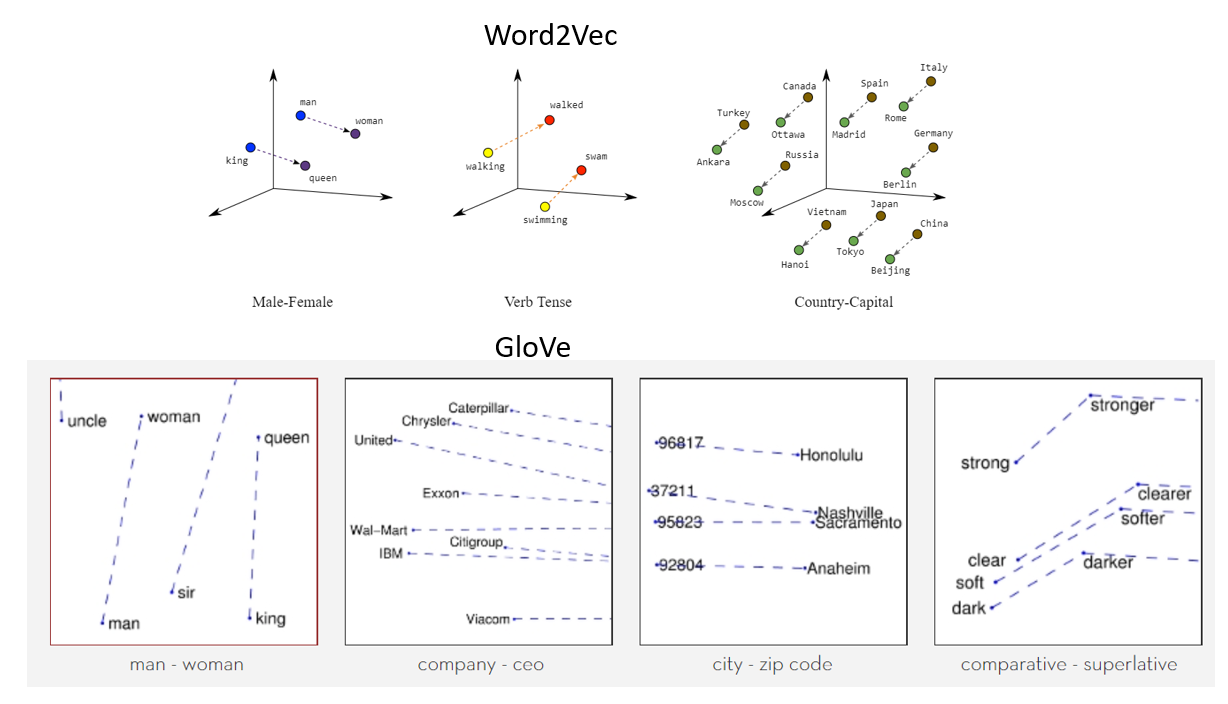

One-hot encoding works fine when we have a small data set. When the vocabulary is huge, we cannot encode it using this method, because the complexity increases a lot. We need a method that can control the size of the word representations. We do this by limiting each one to a fixed-size vector; that is, we want to find an embedding for each word. We want these embeddings to exhibit certain properties. For instance, if two words are similar, they must be closer to each other in the representation, and for pairs of opposite words, where such pairs exist, each pair must be separated by the same distance. These properties help us find synonyms, analogies, etc.
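These two properties can be illustrated with a handful of toy vectors. The numbers below are hand-picked for the example and are not learned from any data; real embeddings have many more dimensions and only approximately satisfy these relations.

```python
import math

# Hand-picked 2-dimensional toy vectors, NOT learned embeddings.
embeddings = {
    "man": [1.0, 0.0],
    "woman": [1.0, 1.0],
    "king": [3.0, 0.0],
    "queen": [3.0, 1.0],
    "apple": [0.0, 4.0],
}


def offset(a, b):
    """Vector that leads from word a to word b."""
    return [y - x for x, y in zip(embeddings[a], embeddings[b])]


def analogy(a, b, c):
    """Word whose vector is nearest to b - a + c, excluding the three inputs."""
    target = [
        vb - va + vc
        for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])
    ]
    candidates = [word for word in embeddings if word not in (a, b, c)]
    return min(candidates, key=lambda word: math.dist(embeddings[word], target))


# Both pairs are separated by the same offset.
print(offset("man", "woman"), offset("king", "queen"))

# "man" is to "woman" what "king" is to ...
print(analogy("man", "woman", "king"))
```

With these toy values the two pairs share the same offset, so adding that offset to "king" lands exactly on "queen".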

Source: https://miro.medium.com/max/1400/1*sAJdxEsDjsPMioHyzlN3_A.png

Word2Vec:

Word2Vec is widely used in NLP models. It transforms words into vectors. Word2vec is a two-layer neural network that processes text by vectorizing words. Its input is a text corpus and its output is a set of vectors: feature vectors that represent the words in that corpus. While Word2vec is not a deep neural network, it converts text into a numerical form that deep neural networks can understand. The purpose and benefit of Word2vec is to group the vectors of similar words together in vector space. That is, it detects similarities mathematically. Word2vec creates vectors that are distributed numerical representations of word features, such as the context of individual words. It does so without human intervention.
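The two-layer structure can be sketched as a single forward pass. This is only an illustration under several assumptions: the vocabulary and vector size are hypothetical, the weights are random and untrained, and a full softmax is used for simplicity, whereas practical implementations usually rely on cheaper approximations such as negative sampling.

```python
import math
import random

random.seed(0)  # fixed seed so the untrained weights are reproducible

vocabulary = ["messi", "wins", "the", "cup"]  # hypothetical vocabulary
embedding_dim = 3                             # hypothetical vector size

# Layer 1: one row per word; after training, these rows are the word vectors.
w_in = [
    [random.uniform(-0.5, 0.5) for _ in range(embedding_dim)]
    for _ in vocabulary
]
# Layer 2: maps the hidden vector back to one score per vocabulary word.
w_out = [
    [random.uniform(-0.5, 0.5) for _ in vocabulary]
    for _ in range(embedding_dim)
]


def predict_context(word):
    """Forward pass: look up the word's vector, score every word, apply softmax."""
    hidden = w_in[vocabulary.index(word)]
    scores = [
        sum(h * w_out[d][j] for d, h in enumerate(hidden))
        for j in range(len(vocabulary))
    ]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - top) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


probs = predict_context("messi")
for word, prob in zip(vocabulary, probs):
    print(word, round(prob, 3))
```

Training would adjust both weight matrices so that words seen near the input word receive higher probabilities; the rows of the first matrix are then kept as the word vectors.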

With enough data, usage and contexts, Word2vec can make highly accurate guesses about the meaning of a word based on its past appearances. Those guesses can be used to establish a word's associations with other words (for instance, "large" is to "big" what "tiny" is to "small"), or to cluster documents and classify them by topic. Those clusters can form the basis for search, sentiment analysis and recommendations in diverse fields such as scientific research, legal discovery, e-commerce and customer relationship management. The output of the Word2vec network is a vocabulary in which each item has a vector attached, which can be fed into a deep learning network or simply queried to find relationships between words.

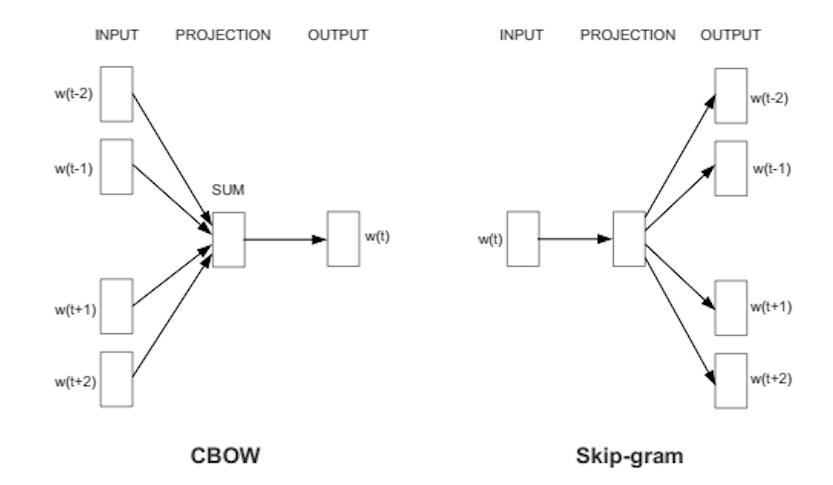

Word2Vec can capture the contextual meaning of words very well. There are two flavors. In one, called continuous bag of words (CBoW), we are given the neighboring words and we predict the middle word. In the other, called skip-gram, we are given the middle word and we predict the neighboring words. Once we have a set of pre-trained weights, we can save them and use them later for word vectorization without the need to train again. We store them in a lookup table.
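The difference between the two flavors is easiest to see in the training examples each one generates. The sketch below assumes a hypothetical tokenized sentence and a context window of one word on each side; the helper name `training_pairs` is made up for this example.

```python
def training_pairs(tokens, window=2):
    """Yield (context_words, middle_word) for every position in the token list."""
    for i, middle in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        yield left + right, middle


# Hypothetical tokenized sentence.
tokens = "the quick brown fox jumps".split()

# CBoW: the neighboring words are the input, the middle word is the target.
cbow = [(context, middle) for context, middle in training_pairs(tokens, window=1)]

# Skip-gram: the middle word is the input, each neighbor is a separate target.
skipgram = [
    (middle, neighbor)
    for context, middle in training_pairs(tokens, window=1)
    for neighbor in context
]

print(cbow[1])       # input context and target for the word "quick"
print(skipgram[:3])  # first few (input, target) pairs
```

Both flavors read the same sliding window over the text; they only swap which side of the pair is the input and which is the prediction target.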

Source: https://wiki.pathmind.com/word2vec

GloVe:

GloVe stands for Global Vectors for Word Representation. It is an unsupervised learning algorithm from Stanford that generates word embeddings by aggregating a global word-word co-occurrence matrix from the corpus. The resulting embeddings show interesting linear substructures of the words in vector space. The GloVe model is trained on the non-zero entries of the global co-occurrence matrix, which records how often words occur together in a given corpus. Populating this matrix requires a single pass through the entire corpus to collect the statistics. For a large corpus, this pass can be computationally expensive, but it is a one-time, up-front cost. Subsequent training iterations are much faster because the number of non-zero matrix entries is usually much smaller than the total number of words in the corpus.
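The single pass that collects the co-occurrence statistics can be sketched as follows. This is a simplified illustration, not the GloVe implementation: the corpus is invented, the window size is an arbitrary choice, and plain counts are used without any distance-based weighting. Only the counting step is shown; fitting the vectors to these counts is not.

```python
from collections import Counter


def cooccurrence_counts(sentences, window=2):
    """Count, in one pass, how often each ordered word pair occurs within the window."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            start = max(0, i - window)
            stop = min(len(tokens), i + window + 1)
            for j in range(start, stop):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts


# Hypothetical, already-cleaned corpus.
corpus = ["ice is cold", "steam is hot", "ice is solid"]
counts = cooccurrence_counts(corpus, window=1)

vocabulary = sorted({word for sentence in corpus for word in sentence.split()})
print(counts[("ice", "is")])
print(len(counts), "non-zero entries out of", len(vocabulary) ** 2, "cells")
```

Only pairs that actually co-occur are stored, which reflects why training on the non-zero entries alone is cheaper than touching every cell of the full matrix.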

The following is a visual representation of word embeddings:

Source: https://miro.medium.com/max/1400/1*gcC7b_v7OKWutYN1NAHyMQ.png

References:

1. Image – https://www.develandoo.com/blog/do-robots-read/

2. https://nlp.stanford.edu/projects/glove/

3. https://wiki.pathmind.com/word2vec

4. https://www.udacity.com/course/natural-language-processing-nanodegree--nd892

Conclusion:

Source: https://medium.com/datatobiz/the-past-present-and-the-future-of-natural-language-processing-9f207821cbf6

About me: I am a research student interested in the field of deep learning and natural language processing and I am currently doing a postgraduate degree in Artificial Intelligence.

Feel free to connect with me at:

1. Linkedin: https://www.linkedin.com/in/siddharth-m-426a9614a/

2. Github: https://github.com/Siddharth1698

The media shown in this article is not the property of DataPeaker and is used at the author's discretion.