Objective

- LSTM is a special type of recurrent neural network that is able to handle long-term dependencies.

- Understand the architecture and operation of an LSTM network

Introduction

A Long Short-Term Memory (LSTM) network is an advanced RNN, a sequential network, that allows information to persist. It is able to handle the vanishing gradient problem faced by RNNs. A recurrent neural network, also known as an RNN, is used for persistent memory.

Let's say that while watching a video you remember the previous scene, or while reading a book you know what happened in the previous chapter. RNNs work similarly: they remember the previous information and use it to process the current input. The shortcoming of RNNs is that they cannot remember long-term dependencies because of the vanishing gradient. LSTMs are explicitly designed to avoid long-term dependency problems.
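To make the vanishing gradient concrete, here is a minimal sketch, assuming a scalar RNN of the form h_t = tanh(w * h_(t-1) + u * x_t). The function name, the weight value and the pre-activation values are hypothetical and chosen only for illustration. The gradient that flows back through each step is scaled by w * (1 - tanh(a)^2), so over many steps the product shrinks toward zero.

```python
import math

def gradient_through_time(w, pre_activations):
    """Multiply the per-step factors w * (1 - tanh(a)^2) of a scalar tanh RNN."""
    grad = 1.0
    for a in pre_activations:
        grad *= w * (1.0 - math.tanh(a) ** 2)
    return grad

# Hypothetical values: recurrent weight 0.9, pre-activation 0.5 at every step.
short_range = gradient_through_time(0.9, [0.5] * 5)   # gradient after 5 steps
long_range = gradient_through_time(0.9, [0.5] * 50)   # gradient after 50 steps

print(short_range, long_range)
```

With these numbers the gradient after 50 steps is many orders of magnitude smaller than after 5 steps, which is why a plain RNN struggles to learn from distant context.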


LSTM architecture

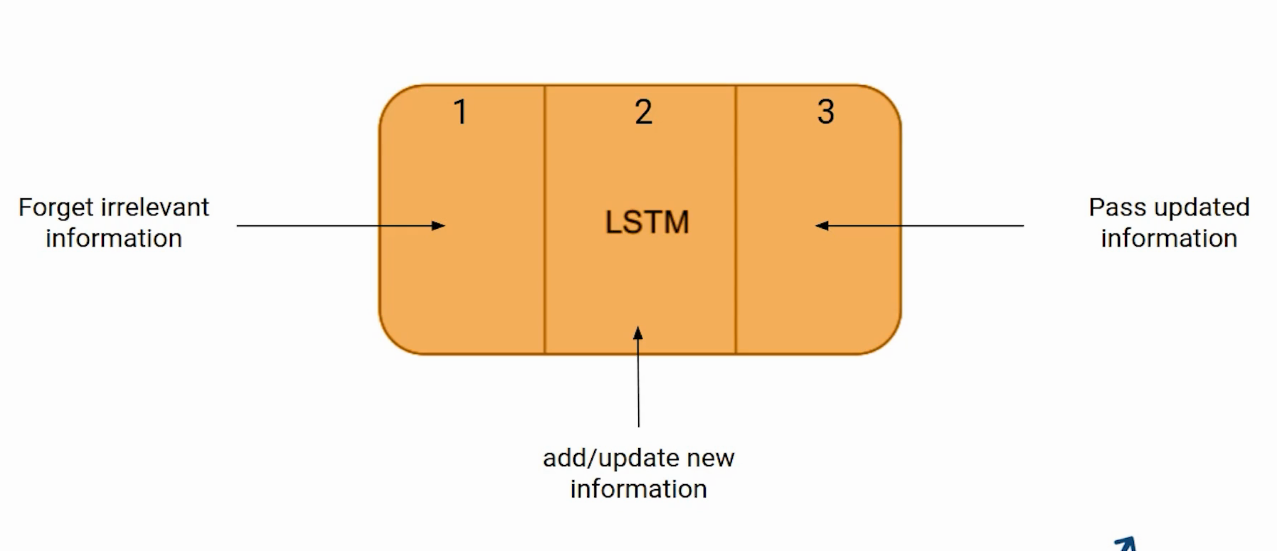

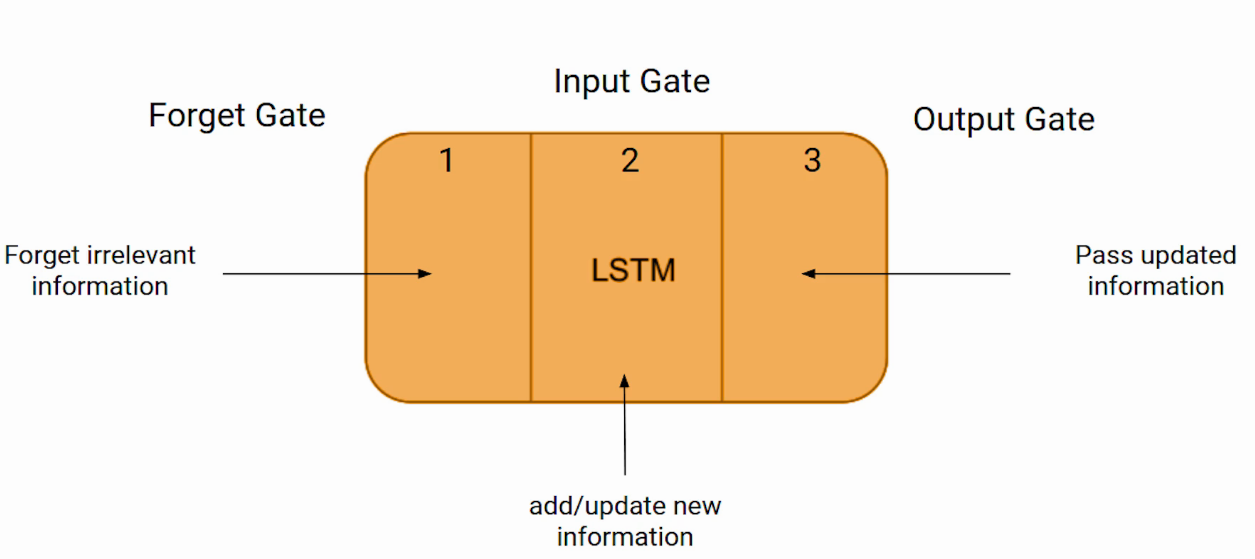

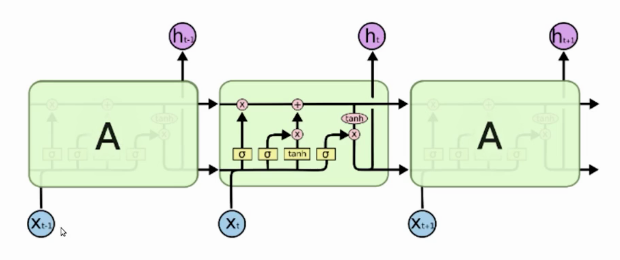

At a high level, an LSTM works much like an RNN cell. Here are the inner workings of the LSTM network. The LSTM consists of three parts, as shown in the image below, and each part performs an individual function.

The first part chooses whether the information coming from the previous timestamp should be remembered or is irrelevant and can be forgotten. In the second part, the cell tries to learn new information from the input to this cell. Finally, in the third part, the cell passes the updated information from the current timestamp to the next.

These three parts of an LSTM cell are known as gates. The first part is called the forget gate, the second part is known as the input gate, and the last one is the output gate.

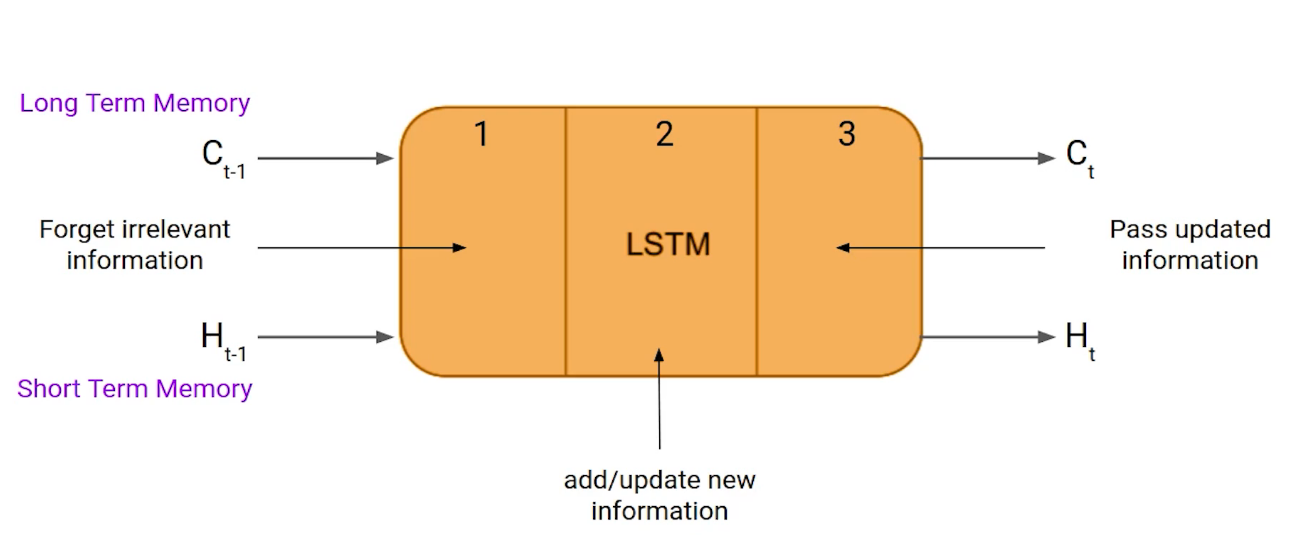

Like a simple RNN, an LSTM also has a hidden state, where H(t-1) represents the hidden state of the previous timestamp and H(t) is the hidden state of the current timestamp. In addition, an LSTM also has a cell state, represented by C(t-1) and C(t) for the previous and current timestamps respectively.

Here, the hidden state is known as short term memory and the state of the cell is known as long term memory. Please refer to the following picture.

It is interesting to note that the cell state carries information across all the timestamps.

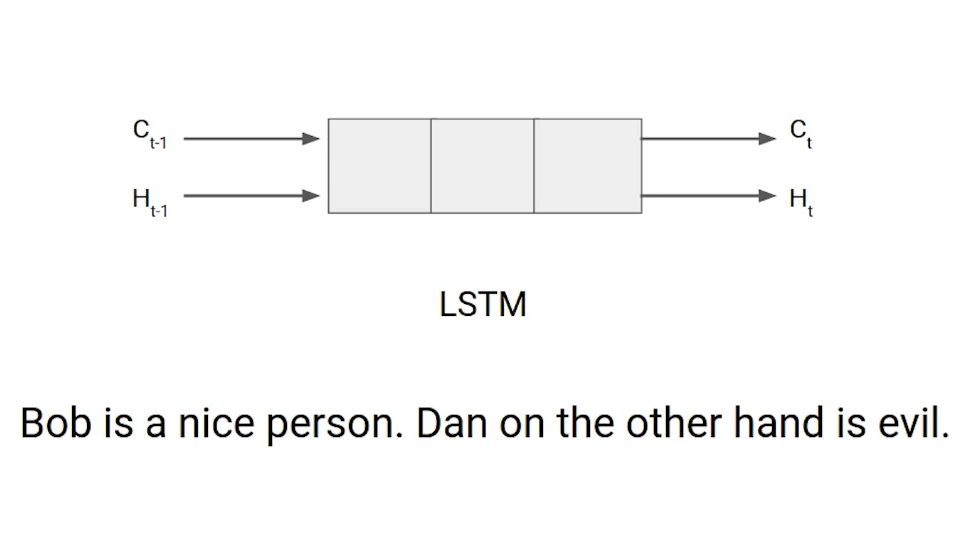

Let's take an example to understand how an LSTM works. Here we have two sentences separated by a full stop. The first sentence is “Bob is a good person” and the second sentence is “Dan, on the other hand, is evil”. It is very clear that in the first sentence we are talking about Bob, and as soon as we encounter the full stop (.) we start talking about Dan.

As we move from the first sentence to the second, our network must realize that we are no longer talking about Bob. Now our subject is Dan. Here, the forget gate of the network allows it to forget about Bob. Let's understand the roles these gates play in the LSTM architecture.

Forget gate

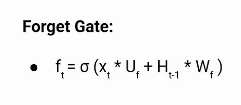

In a cell of the LSTM network, the first step is to decide whether we should keep the information from the previous timestamp or forget it. Here is the equation for the forget gate:

$$f_t = \sigma(X_t \cdot U_f + H_{t-1} \cdot W_f)$$

Let's try to understand the equation. Here:

- Xt: the input at the current timestamp

- Uf: the weight matrix associated with the input

- Ht-1: the hidden state of the previous timestamp

- Wf: the weight matrix associated with the hidden state

A sigmoid function is then applied to this sum, which makes ft a number between 0 and 1. This ft is later multiplied element-wise by the cell state of the previous timestamp:

$$C_{t-1} \odot f_t$$

If ft is 0, the network will forget everything, and if ft is 1, it will forget nothing. Let's go back to our example. The first sentence was talking about Bob, and after the full stop the network encounters Dan. In an ideal case, the network should forget about Bob.
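The forget gate described above can be sketched as follows. This is a minimal scalar illustration, assuming one input feature and one hidden unit so that the weight matrices reduce to single numbers. The weight values are hypothetical and picked only to push the gate toward 1 or toward 0.

```python
import math

def sigmoid(z):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forget_gate(x_t, h_prev, U_f, W_f):
    """f_t = sigmoid(x_t * U_f + h_prev * W_f), scalar case."""
    return sigmoid(x_t * U_f + h_prev * W_f)

c_prev = 2.0  # cell state of the previous timestamp (hypothetical value)

# Large positive weights push the gate toward 1: keep almost everything.
f_keep = forget_gate(x_t=1.0, h_prev=1.0, U_f=5.0, W_f=5.0)
# Large negative weights push the gate toward 0: forget almost everything.
f_drop = forget_gate(x_t=1.0, h_prev=1.0, U_f=-5.0, W_f=-5.0)

kept = f_keep * c_prev
dropped = f_drop * c_prev
print(kept, dropped)
```

In the Bob and Dan example, a well-trained network would produce a gate value near 0 for the parts of the cell state that encode Bob once the second sentence begins.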

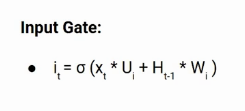

Input gate

Let's take another example:

“Bob knows how to swim. He told me on the phone that he had served in the Navy for four long years.”

In these two sentences, we are talking about Bob. However, they give different kinds of information about him. The first sentence tells us that he knows how to swim, while the second says that he used the phone and served in the Navy for four years.

Now think about it: given the context of the first sentence, which piece of information in the second sentence is critical? Is it that he used the phone to tell me, or that he served in the Navy? In this context, it does not matter whether he used the phone or any other means of communication to pass on the information. The fact that he was in the Navy is the important information, and this is what we want our model to remember. This is the task of the input gate.

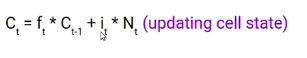

The input gate is used to quantify the importance of the new information carried by the input. Here is the equation for the input gate:

$$i_t = \sigma(X_t \cdot U_i + H_{t-1} \cdot W_i)$$

- Xt: the input at the current timestamp t

- Ui: the weight matrix associated with the input

- Ht-1: the hidden state at the previous timestamp

- Wi: the weight matrix associated with the hidden state

Again we have applied the sigmoid function. As a result, the value of I at timestamp t will be between 0 and 1.
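Here is a minimal sketch of the input gate in vector form, assuming two input features and two hidden units. The helper `vec_mat` and all numeric values are hypothetical and exist only for this illustration. Each hidden unit gets its own gate value between 0 and 1.

```python
import math

def sigmoid(z):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def vec_mat(v, M):
    """Row vector v (length n) times matrix M (n rows, m columns)."""
    return [sum(v[k] * M[k][j] for k in range(len(v))) for j in range(len(M[0]))]

def input_gate(x_t, h_prev, U_i, W_i):
    """i_t = sigmoid(x_t . U_i + h_prev . W_i), applied per hidden unit."""
    from_input = vec_mat(x_t, U_i)
    from_hidden = vec_mat(h_prev, W_i)
    return [sigmoid(a + b) for a, b in zip(from_input, from_hidden)]

x_t = [1.0, 0.0]                    # two input features
h_prev = [0.0, 0.0]                 # two hidden units, empty history
U_i = [[2.0, -2.0], [0.0, 0.0]]     # hypothetical input weights
W_i = [[0.0, 0.0], [0.0, 0.0]]      # hypothetical hidden-state weights

i_t = input_gate(x_t, h_prev, U_i, W_i)
print(i_t)
```

The first unit receives a pre-activation of 2 and lets most of the new information through, while the second receives -2 and blocks most of it.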

New information

Now, the new information that needs to be passed to the cell state is a function of the hidden state at the previous timestamp t-1 and the input x at timestamp t. The activation function here is tanh:

$$N_t = \tanh(X_t \cdot U_c + H_{t-1} \cdot W_c)$$

Here Uc and Wc denote the weight matrices for this step, named by analogy with the gate weights. Because of the tanh function, the value of the new information Nt will be between -1 and 1. If the value of Nt is negative, the information is subtracted from the cell state, and if the value is positive, the information is added to the cell state at the current timestamp.

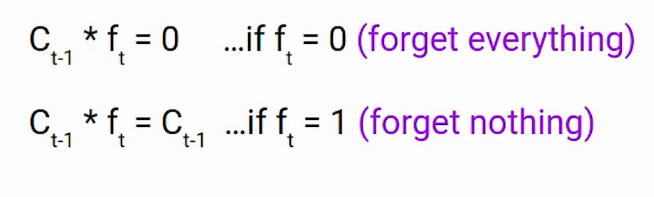

However, Nt is not added directly to the cell state. Here is the update equation:

$$C_t = f_t \odot C_{t-1} + i_t \odot N_t$$

Here, Ct-1 is the cell state at the previous timestamp, Ct is the cell state at the current timestamp, and the other terms are the values we calculated previously. The products are element-wise.
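The cell state update can be sketched in the scalar case as follows. The weight names `U_c` and `W_c` and every numeric value are assumptions made for this illustration, and the gate values are passed in directly rather than computed.

```python
import math

def new_information(x_t, h_prev, U_c, W_c):
    """Candidate value N_t = tanh(x_t * U_c + h_prev * W_c), in (-1, 1)."""
    return math.tanh(x_t * U_c + h_prev * W_c)

def update_cell_state(c_prev, f_t, i_t, n_t):
    """C_t = f_t * C_(t-1) + i_t * N_t: forget part of the old state, add gated new information."""
    return f_t * c_prev + i_t * n_t

# Hypothetical values: the pre-activation is 1.0 * -1.0 + 0.5 * -2.0 = -2.0.
n_t = new_information(x_t=1.0, h_prev=0.5, U_c=-1.0, W_c=-2.0)

# Keep half of the old cell state and let the new information through fully.
c_t = update_cell_state(c_prev=1.0, f_t=0.5, i_t=1.0, n_t=n_t)
print(n_t, c_t)
```

Because the candidate value is negative here, the update lowers the cell state, which matches the description of negative new information being subtracted.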

Output gate

Now consider this sentence:

“Bob fought the enemy alone and died for his country. For his contributions, brave ________.”

In this task, we have to complete the second sentence. The moment we see the word brave, we know we are talking about a person. In the sentence, only Bob is brave; we cannot say that the enemy is brave or that the country is brave. So, based on the current expectation, we have to give a relevant word to fill in the blank. That word is our output, and producing it is the function of the output gate.

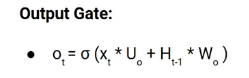

Here is the equation for the output gate, which is quite similar to those of the previous two gates:

$$o_t = \sigma(X_t \cdot U_o + H_{t-1} \cdot W_o)$$

Its value will also be between 0 and 1 because of the sigmoid function. Now, to calculate the current hidden state, we multiply Ot element-wise by the tanh of the updated cell state:

$$H_t = o_t \odot \tanh(C_t)$$

It turns out that the hidden state is a function of the long-term memory (Ct) and the current output. If you need the output at the current timestamp, just apply the softmax activation to the hidden state Ht:

$$\text{Output} = \text{Softmax}(H_t)$$

Here, the token with the highest score in the output is the prediction.
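The output step can be sketched as below. This is only an illustration under a strong assumption: the hidden state has exactly one unit per candidate word, so softmax can be applied to it directly as the text describes. The three-word vocabulary, the cell state and the gate pre-activations are all hypothetical.

```python
import math

def sigmoid(z):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def hidden_state(o_t, c_t):
    """H_t = o_t * tanh(C_t), element-wise."""
    return [o * math.tanh(c) for o, c in zip(o_t, c_t)]

def softmax(scores):
    """Turn scores into probabilities; subtracting the max keeps exp() stable."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

vocab = ["Bob", "enemy", "country"]                 # hypothetical candidates
c_t = [2.0, -1.0, 0.1]                              # hypothetical updated cell state
o_t = [sigmoid(3.0), sigmoid(0.0), sigmoid(-3.0)]   # hypothetical gate values

h_t = hidden_state(o_t, c_t)
probs = softmax(h_t)
prediction = vocab[probs.index(max(probs))]
print(prediction, probs)
```

The word whose unit has the highest probability is taken as the prediction.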

This is the most intuitive diagram of the LSTM network.

This diagram is taken from an interesting blog. I urge everyone to check it out.
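Putting the three gates together, one full LSTM step can be sketched as follows. This is a scalar illustration under stated assumptions, not a reference implementation: the parameter names follow the U and W convention used in this article (with `U_c`, `W_c`, `U_o` and `W_o` assumed by analogy), all weights are set to a hypothetical 0.5, and bias terms are left out because the article does not use them.

```python
import math

def sigmoid(z):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step for a single input feature and a single hidden unit."""
    f_t = sigmoid(x_t * p["U_f"] + h_prev * p["W_f"])    # forget gate
    i_t = sigmoid(x_t * p["U_i"] + h_prev * p["W_i"])    # input gate
    n_t = math.tanh(x_t * p["U_c"] + h_prev * p["W_c"])  # new information
    o_t = sigmoid(x_t * p["U_o"] + h_prev * p["W_o"])    # output gate
    c_t = f_t * c_prev + i_t * n_t                       # long-term memory
    h_t = o_t * math.tanh(c_t)                           # short-term memory
    return h_t, c_t

names = ("U_f", "W_f", "U_i", "W_i", "U_c", "W_c", "U_o", "W_o")
params = {name: 0.5 for name in names}  # hypothetical weights

h, c = 0.0, 0.0  # both memories start empty
history = []
for x in [1.0, -1.0, 0.5]:  # a short hypothetical input sequence
    h, c = lstm_step(x, h, c, params)
    history.append((h, c))

print(history)
```

The loop shows how both the hidden state and the cell state are passed from one timestamp to the next.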

Final notes

In summary, in this article we looked in detail at the architecture of the LSTM sequential model and how it works.


If you have any questions, let me know in the comment section!