This article was published as part of the Data Science Blogathon

Data science, machine learning, MLOps and data engineering are all advancing quickly. Much of that future is being shaped by large firms such as Microsoft, Amazon, Databricks and Google, which drive innovation in the field. Given the pace of change, it makes sense to get certified with one of these big players and learn its product offering. These platforms also provide end-to-end solutions, from scalable data lakes to scalable clusters for both testing and production, which makes life easier for data professionals. From a business perspective, all the infrastructure sits under one roof, in the cloud and on demand, and more and more companies are leaning towards the cloud, or are being forced to move to it, because of the ongoing pandemic.

How does DP-100 (Designing and Implementing a Data Science Solution on Azure) help a data scientist or anyone who works with data?

In short, companies collect data from many sources (mobile apps, POS systems, internal tools, machines and so on), and it is scattered across departments and databases; this is especially true of large legacy companies. One of the main hurdles for data scientists is getting the relevant data under one roof so they can build models for use in production. In Azure, all this data is moved into a data lake, where data manipulation can be done using SQL or Spark pools. Azure then covers data cleaning, preprocessing, model building on test clusters (low cost), model monitoring, model fairness, data drift and deployment on scalable clusters (higher cost). The data scientist can focus on solving problems and let Azure do the heavy lifting.

Another use case is model tracking with MLflow (an open-source project from Databricks). Anyone who has participated in a DS hackathon knows that tracking models, logging metrics and comparing models is tedious if you haven't set up a pipeline. In Azure, all of this is handled through what are called experiments: models are registered, and metrics and artifacts are logged, each with as little as a single line of code.
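As a rough sketch of what that tracking looks like, the snippet below logs parameters and metrics for two candidate models and then picks the better one. The three tracker calls it uses (`start_run`, `log_param`, `log_metric`) are the ones the `mlflow` module exposes; everything else, including the `track_run` and `best_run` helpers, the `PrintTracker` stand-in and the metric values, is hypothetical and only there so the example runs without MLflow installed.

```python
from contextlib import contextmanager


def track_run(tracker, run_name, params, metrics):
    """Log one training run's parameters and metrics with an MLflow-style tracker."""
    with tracker.start_run(run_name=run_name):
        for key, value in params.items():
            tracker.log_param(key, value)
        for key, value in metrics.items():
            tracker.log_metric(key, value)


def best_run(results, metric):
    """Return the name of the run with the highest value of `metric`."""
    return max(results, key=lambda name: results[name][metric])


class PrintTracker:
    """Stand-in that prints instead of logging, for trying the sketch locally."""

    @contextmanager
    def start_run(self, run_name=None):
        print(f"run: {run_name}")
        yield

    def log_param(self, key, value):
        print(f"  param  {key} = {value}")

    def log_metric(self, key, value):
        print(f"  metric {key} = {value}")


# Made-up evaluation results for two candidate models.
results = {
    "logreg": {"accuracy": 0.81, "auc": 0.86},
    "random_forest": {"accuracy": 0.84, "auc": 0.90},
}

tracker = PrintTracker()  # with MLflow installed: `import mlflow; tracker = mlflow`
for name, metrics in results.items():
    track_run(tracker, name, {"model": name}, metrics)

print("best by auc:", best_run(results, "auc"))
```

Passing the tracker in as an argument keeps the logging code identical whether it talks to MLflow or to the stand-in.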

About Azure DP-100

Azure DP-100 (Designing and Implementing a Data Science Solution on Azure) is Microsoft's data science certification for all data enthusiasts. It's a self-paced learning experience, with freedom and flexibility. After completing it, you can work in Azure comfortably and build models, track experiments, build pipelines and tune hyperparameters the Azure way.

Requirements

- Basic knowledge of Python. Having worked with it for at least 3-6 months makes it easier to prepare for the exam.

- Basic knowledge of machine learning. This helps you understand the code and answer questions about ML during the exam.

- Experience with Jupyter Notebook or JupyterLab. This is not mandatory, but since all the labs are in Jupyter notebooks, it makes them easier to work with.

- Knowledge of Databricks and MLflow can help you score better. As of July 2021, these concepts are included in DP-100.

- Rs. 4,500 exam fee.

- Sign up for a free Azure account; you will receive credits worth Rs. 13,000 with which you can explore Azure ML. This is more than enough, but Azure ML is free only for the first 30 days, so make good use of the subscription.

- Most importantly, schedule your exam for a date within the next 30 days and pay for it; this serves as a good motivator.

DP-100 exam page

Is it worth it?

The exam costs approximately Rs. 4,500, and not many companies expect a certification during recruitment. It is good to have, but many recruiters neither demand it nor know about it, so the question arises: is it worth paying for? Is it worth my weekends? The answer is yes. One could be a machine learning master or a Python expert, but the inner workings of Azure are specific to Azure, and many methods are Azure-specific ways of improving performance. You can't just dump Python code into it and expect optimal performance. Many processes are automated in Azure; for instance, the AutoML module creates models with just one line of code, and hyperparameter tuning requires one line of code. No-code ML is another option, a drag-and-drop tool that makes model building child's play. Containers, storage, key vaults, workspaces and experiments are all Azure-specific tools and classes. Creating compute instances and working with pipelines and MLflow also helps you understand MLOps concepts. The certification is definitely an advantage if you work in Azure and want to explore the nitty-gritty. Overall, the rewards outweigh the effort.

Preparation

- The exam is MCQ-based, with around 60 to 80 questions and 180 minutes to answer them. This is more than enough time to complete and review all the questions.

- Two lab questions or case study type questions are asked and these are required questions and cannot be skipped.

- It is a proctored exam, so be sure to prepare for it properly.

- Microsoft changes the pattern about twice a year, so it is better to check the updated exam pattern.

- Preparation is easier if it is divided into 2 steps: theory and labs.

- The theory is quite detailed and needs at least 1-2 weeks of preparation and review. All theory questions can be studied from the Microsoft docs; a detailed study of these documents will suffice.

- The section that accounts for the largest number of questions is "Create and operate machine learning solutions with Azure Machine Learning".

- Labs are important too. Although no hands-on lab questions will be asked, the labs help you understand Azure-specific classes and methods, and these make up the majority of questions.

- No questions about machine learning itself will be asked; for instance, the exam won't ask what the R2 score is. What it may ask is how to log the R2 score for an experiment. So the focus should be on applying ML on Azure.

- Microsoft also provides a paid, instructor-led course for DP-100. I don't see the need to take it, since everything is provided in the MS docs.

- There are about 14 practice labs; work through each at least once to become familiar with the Azure workspace.

- Review the theory before taking the exam, so as not to get confused during it.
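To make the R2 point above concrete, here is a minimal sketch of logging that score against an experiment run. It relies on the `log(name, value)` method of the Azure ML SDK's `Run` class; in a real training script the run would typically come from `Run.get_context()` in `azureml.core`. The `FakeRun` class, the `log_r2` helper and the sample numbers are assumptions added so the example runs without an Azure workspace.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def log_r2(run, y_true, y_pred):
    """Compute R2 and record it on the run under the metric name 'R2'."""
    score = r2_score(y_true, y_pred)
    run.log("R2", score)  # same call shape as azureml.core.Run.log(name, value)
    return score


class FakeRun:
    """Stand-in for an Azure ML run; it only remembers what was logged."""

    def __init__(self):
        self.metrics = {}

    def log(self, name, value):
        self.metrics[name] = value


# Hypothetical targets and predictions from a regression model.
run = FakeRun()
score = log_r2(run, [3.0, 5.0, 7.0, 9.0], [2.5, 5.5, 7.0, 9.5])
print(run.metrics)
```

The exam-relevant part is the single `run.log(...)` line; how the score is computed is ordinary Python.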

Skills measured:

- Set up an Azure Machine Learning workspace

- Run experiments and train models

- Optimize and manage models

- Deploy and consume models

Clone the repository to practice the Azure labs:

git clone https://github.com/microsoftdocs/ml-basics

Some important Azure classes and methods:

    from azureml.core import Model, Workspace
    from azureml.pipeline.steps import (ParallelRunConfig, ParallelRunStep,
                                        PythonScriptStep)

    # Connect to an existing workspace
    ws = Workspace.get(name="aml-workspace",
                       subscription_id='1234567-abcde-890-fgh...',
                       resource_group='aml-resources')

    # Register a model
    model = Model.register(workspace=ws,
                           model_name="classification_model",
                           model_path="model.pkl",  # local path
                           description='A classification model',
                           tags={'data-format': 'CSV'},
                           model_framework=Model.Framework.SCIKITLEARN,
                           model_framework_version='0.20.3')

    # Run a .py file as a step in a pipeline
    step2 = PythonScriptStep(name="train model",
                             source_directory='scripts',
                             script_name="train_model.py",
                             compute_target="aml-cluster")

    # Define the parallel run step configuration
    # (batch_env and aml_cluster are an environment and a compute cluster
    # that must already have been created)
    parallel_run_config = ParallelRunConfig(
        source_directory='batch_scripts',
        entry_script="batch_scoring_script.py",
        mini_batch_size="5",
        error_threshold=10,
        output_action="append_row",
        environment=batch_env,
        compute_target=aml_cluster,
        node_count=4)

    # Create the parallel run step
    # (batch_data_set is an existing dataset; output_dir is the step's
    # output location, e.g. a PipelineData object)
    parallelrun_step = ParallelRunStep(
        name="batch-score",
        parallel_run_config=parallel_run_config,
        inputs=[batch_data_set.as_named_input('batch_data')],
        output=output_dir,
        arguments=[],
        allow_reuse=True
    )

Some important concepts (not an exhaustive list):

- Create compute clusters for testing and production

- Create pipeline steps

- Connect the Databricks cluster to the Azure ML workspace

- Hyperparameter tuning method

- Work with data: datasets and datastores

- Model drift

- Differential privacy

- Detect model unfairness (MCQ questions)

- Model explanations using the SHAP explainer

Methods to remember:

- ScriptRunConfig

- PipelineData

- ParallelRunConfig

- PipelineEndpoint

- RunConfiguration

- init() and run()

- PublishedPipeline

- ComputeTarget.attach

- Dataset / Datastore methods
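The `init()` and `run()` pair in the list above are the two functions an entry script, such as the `batch_scoring_script.py` passed to `ParallelRunConfig`, is expected to define: `init()` runs once to load the model, and `run(mini_batch)` is called for each mini-batch and returns one result per item. The sketch below assumes a file-based dataset, so each mini-batch is a list of file paths. The stand-in model, the file names and the local dry run at the end are assumptions for illustration; a real script would load the registered model instead.

```python
import os
import tempfile

model = None  # populated once by init(), then reused by every run() call


def init():
    """Runs once per worker process before any scoring: load the model here."""
    global model
    # Stand-in model (an assumption for this sketch): predicts 1 when the
    # feature values sum to more than 10.
    model = lambda features: int(sum(features) > 10)


def run(mini_batch):
    """Runs once per mini-batch; here mini_batch is a list of file paths."""
    results = []
    for path in mini_batch:
        with open(path) as f:
            features = [float(x) for x in f.read().strip().split(",")]
        results.append(f"{os.path.basename(path)}: {model(features)}")
    return results  # one entry per input file


# Local dry run with two throwaway files, mimicking one mini-batch.
with tempfile.TemporaryDirectory() as tmp:
    paths = []
    for name, row in [("a.csv", "1,2,3"), ("b.csv", "4,5,6")]:
        path = os.path.join(tmp, name)
        with open(path, "w") as f:
            f.write(row)
        paths.append(path)
    init()
    predictions = run(paths)

print(predictions)
```

With `output_action="append_row"`, each returned entry becomes one row of the combined output file.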

Azure DP-100 exam prep session

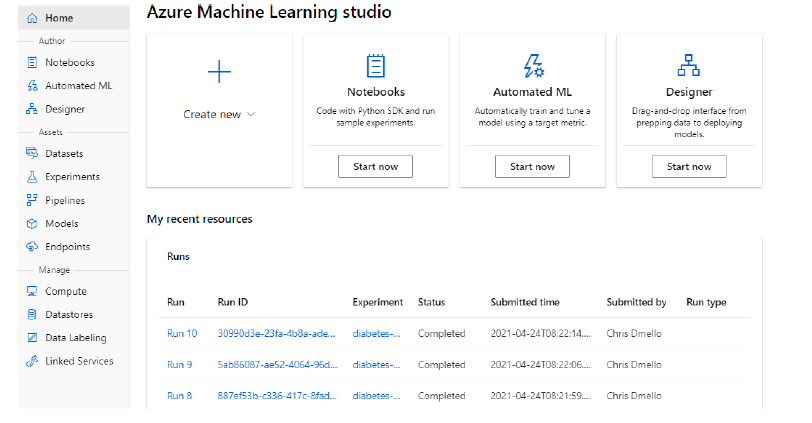

Azure Machine Learning workspace:

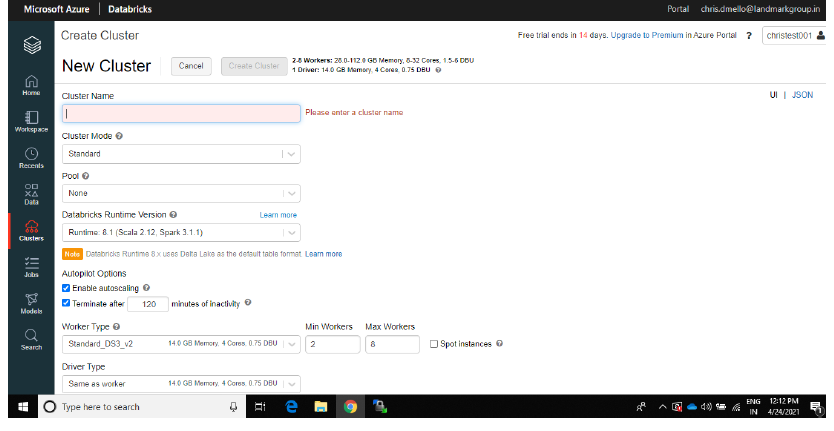

Creating a cluster in Azure Databricks:

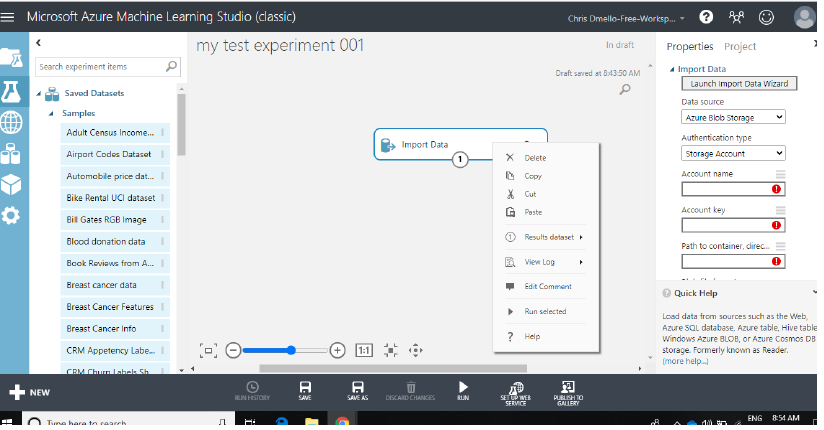

Azure Designer:

Exam day

- Make sure to test your system the day before. Work laptops sometimes cause problems, so it is better to use a personal laptop.

- Books, papers, pens and other stationery are not allowed.

- The proctor performs the initial basic checks and allows you to start the exam.

- Once the exam is submitted, scores are provided on screen and then in an email. So don't forget to check your mail.

- Certification is valid only for 2 years.

Good luck! Your next goal should be DP-203 (Data Engineering on Microsoft Azure).
