Introduction

Today, organizations handle a large amount and a wide variety of data: customer calls, emails, tweets, mobile app data and more. It takes a lot of time and effort to make this data useful. One of the core skills for extracting information from text data is natural language processing (NLP).

Natural language processing (NLP) is the art and science of extracting information from text and using it in our computations and algorithms. Given the growth of content on the Internet and social media, it is a must-have skill for every data scientist.

Whether you know NLP or not, this guide should serve as a ready reference for you. In this guide, I have provided resources and code for the most common tasks in NLP.

Once you have read this guide, feel free to take a look at our video course on natural language processing (NLP).

Why did I create this guide?

After working on NLP problems for some time, I often found myself consulting hundreds of different sources (research papers, blogs and competitions) to keep up with the latest developments for some of the common NLP tasks.

So I decided to gather all these resources in one place and make this a one-stop solution for the latest and most important resources for these common NLP tasks. Below is the list of tasks covered in this article, along with their relevant resources. Let's begin.

Table of Contents

- Stemming

- Lemmatization

- Word embeddings

- Part-of-speech tagging

- Named entity disambiguation

- Named entity recognition

- Sentiment analysis

- Semantic text similarity

- Language identification

- Text summarization

1. Stemming

What is stemming?: Stemming is the process of reducing words (generally inflected or derived forms) to their word stem or root. The objective of stemming is to reduce related words to the same stem, even if the stem is not a dictionary word. For example, in English:

- beautiful and beautifully are stemmed to beauti

- good, better and best are stemmed to good, better and best respectively

Paper: The original paper by Martin Porter on the Porter algorithm for stemming.

Algorithm: Here is the Python implementation of the Porter2 stemming algorithm.

Implementation: This is how you can stem a word using the Porter2 algorithm from the stemming library.
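Here is a deliberately tiny, dependency-free suffix-stripping sketch that makes the idea concrete. It is not Porter2, which applies several ordered steps of conditional rules. The suffix list and the minimum stem length of 3 are arbitrary assumptions chosen for illustration.

```python
# A toy suffix-stripping stemmer. This is NOT Porter2: the suffix list and the
# minimum stem length are hypothetical choices made only for illustration.
SUFFIXES = ["fully", "ful", "ing", "ly", "ed", "s"]
MIN_STEM_LENGTH = 3


def toy_stem(word: str) -> str:
    """Strip the longest matching suffix, keeping at least MIN_STEM_LENGTH characters."""
    w = word.lower()
    # Try longer suffixes first so "beautifully" loses "fully", not just "ly".
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if w.endswith(suffix) and len(w) - len(suffix) >= MIN_STEM_LENGTH:
            return w[: -len(suffix)]
    return w


for w in ["beautiful", "beautifully", "jumping", "good", "better", "best"]:
    print(w, "->", toy_stem(w))
```

Both forms of beautiful collapse to the non-dictionary stem beauti, while good, better and best stay distinct, as in the examples above. A real stemmer adds conditions on what remains of the word so that it does not over-strip short or irregular words.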

2. Lemmatization

What is lemmatization?: Lemmatization is the process of reducing a group of words to their lemma, or dictionary form. It takes into account things like POS (parts of speech), the meaning of the word in the sentence, the meaning of the word in nearby sentences, etc. before reducing the word to its lemma. For example, in English:

- beautiful and beautifully are lemmatized to beautiful and beautifully respectively.

- good, better and best are lemmatized to good, good and good respectively.
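A lemmatizer needs the part of speech, so a minimal way to picture it is a dictionary keyed on (word, POS) pairs. The table below is hypothetical and tiny. The tag names follow the Universal POS tag set that spaCy reports. Real lemmatizers combine large morphological dictionaries with rules for unseen words.

```python
# A toy lookup-based lemmatizer keyed on (word, part of speech).
# The table is hypothetical and only covers the words used in this example.
LEMMA_TABLE = {
    ("good", "ADJ"): "good",
    ("better", "ADJ"): "good",
    ("best", "ADJ"): "good",
    ("meeting", "NOUN"): "meeting",
    ("meeting", "VERB"): "meet",
}


def toy_lemmatize(word: str, pos: str) -> str:
    """Return the lemma for (word, pos), falling back to the lowercased word."""
    w = word.lower()
    return LEMMA_TABLE.get((w, pos), w)


print(toy_lemmatize("better", "ADJ"))    # good
print(toy_lemmatize("meeting", "NOUN"))  # meeting
print(toy_lemmatize("meeting", "VERB"))  # meet
```

The same surface form "meeting" gets different lemmas depending on its part of speech. This is why lemmatizers such as spaCy's run a tagger first.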

Paper 1: This paper discusses the different methods of lemmatization in great detail. A must-read if you want to know how traditional lemmatizers work.

Paper 2: This is an excellent paper that addresses the lemmatization problem for variation-rich languages using deep learning.

Dataset: This is the link to the Treebank-3 dataset, which you can use if you want to create your own lemmatizer.

Implementation: Below is an implementation of an English lemmatizer using spaCy.

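```python
#!pip install spacy
#!python -m spacy download en_core_web_sm
import spacy

# The "en" shortcut was removed in spaCy v3; load the small English model by name
nlp = spacy.load("en_core_web_sm")
doc = "good better best"

for token in nlp(doc):
    print(token, token.lemma_)
```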

3. Word embeddings

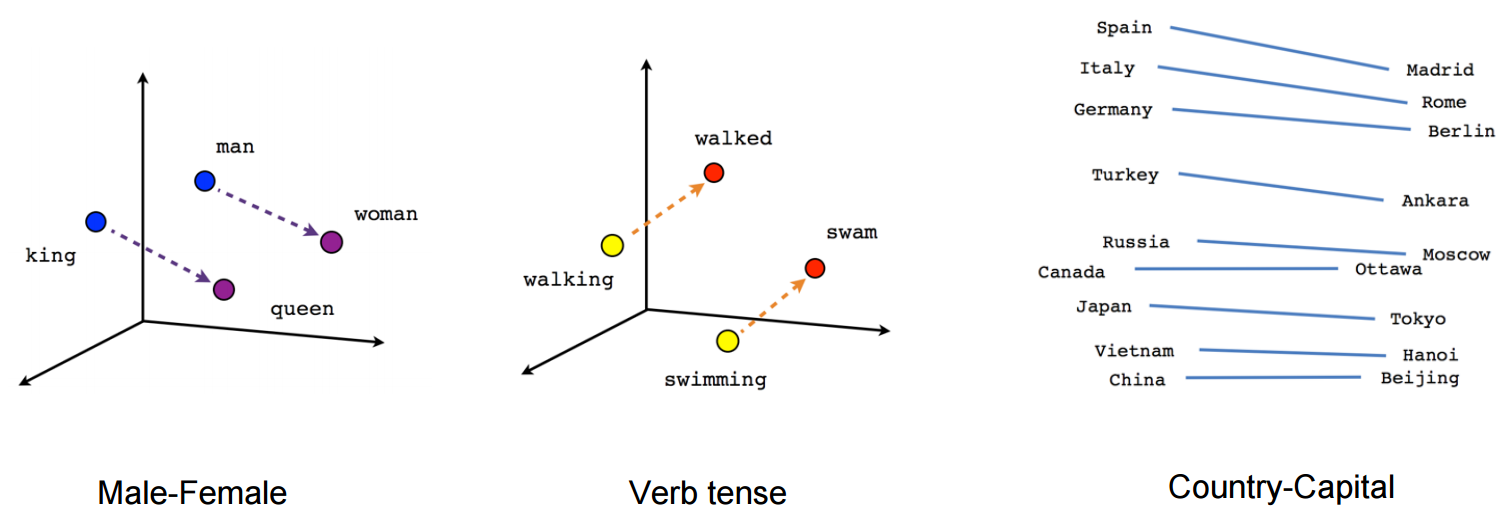

What are word embeddings?: Word embeddings is the name of a set of techniques used to represent natural language as vectors of real numbers. They are useful because computers cannot process natural language directly. Word embeddings capture the essence of words and the relationships between them using real numbers. In a word embedding, a word or phrase is represented as a fixed-length vector of, say, 100 dimensions.

For example, the word “man” can be represented as a vector of 5 dimensions, where each number is the magnitude of the word along a particular direction.
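With vectors like these, closeness in meaning is usually measured with cosine similarity. The sketch below uses made-up 5-dimensional vectors, with no trained model involved, purely to show the computation.

```python
import math
from typing import Sequence

# Hypothetical 5-dimensional vectors; the numbers are invented for illustration
# and do not come from any trained model.
vectors = {
    "man":   [0.8, 0.1, 0.3, 0.0, 0.5],
    "woman": [0.7, 0.2, 0.3, 0.1, 0.6],
    "car":   [0.0, 0.9, 0.1, 0.8, 0.1],
}


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine_similarity(vectors["man"], vectors["woman"]))
print(cosine_similarity(vectors["man"], vectors["car"]))
```

With trained vectors, gensim's `KeyedVectors.similarity` returns this same cosine similarity between two words.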

Blog: Here is an article that explains word embeddings in great detail.

Paper: A very good paper that explains word vectors in detail. A must-read for a deep understanding of word vectors.

Tool: A browser-based tool to visualize word vectors.

Pre-trained word vectors: Here is an exhaustive list of pre-trained word vectors in 294 languages by Facebook.

Implementation: This is how you can get the pre-trained vector for a word using the gensim package.

Download the word vectors pre-trained on Google News from here.

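```python
#!pip install gensim
from gensim.models.keyedvectors import KeyedVectors

# Load the 300-dimensional vectors pre-trained on Google News (binary word2vec format)
word_vectors = KeyedVectors.load_word2vec_format('GoogleNews-vectors-negative300.bin', binary=True)
word_vectors['human']
```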

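Implementation: This is how you can train your own word vectors using gensim:

```python
import gensim

sentence = [['first', 'sentence'], ['second', 'sentence']]
# gensim >= 4.0 names the dimensionality parameter vector_size (it was size in 3.x)
model = gensim.models.Word2Vec(sentence, min_count=1, vector_size=300, workers=4)
```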
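Word2Vec learns from (target word, context word) pairs taken from a sliding window over each sentence. The sketch below generates those pairs as the skip-gram variant uses them. In gensim that variant is `sg=1`; the default, `sg=0`, is CBOW, which predicts the target from its context instead. This is a simplified illustration: gensim also subsamples frequent words and randomly shrinks the window. The window size of 2 here is an arbitrary choice.

```python
def skipgram_pairs(sentences, window=2):
    """Return (target, context) pairs for every word within `window` positions."""
    pairs = []
    for sentence in sentences:
        for i, target in enumerate(sentence):
            start, end = max(0, i - window), min(len(sentence), i + window + 1)
            for j in range(start, end):
                if j != i:  # a word is not its own context
                    pairs.append((target, sentence[j]))
    return pairs


sentence = [['first', 'sentence'], ['second', 'sentence']]
print(skipgram_pairs(sentence))
```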

4. Part-of-speech tagging

What is part-of-speech tagging?: In simple terms, part-of-speech tagging is the process of marking words in a sentence as nouns, verbs, adjectives, adverbs, etc. For example, in the sentence:

“Ashok killed the snake with a stick.”

The parts of speech are identified as:

Ashok PROPN

killed VERB

the DET

snake NOUN

with ADP

a DET

stick NOUN

. PUNCT

Paper 1: This aptly titled paper by Choi, “Dynamic Feature Induction: The Last Gist to the State-of-the-Art”, introduces a novel method called Dynamic Feature Induction that achieves state-of-the-art results on the POS tagging task.

Paper 2: This paper introduces unsupervised POS tagging using Anchor Hidden Markov Models.

Implementation: This is how we can perform POS tagging using spaCy.

```python
#!pip install spacy
#!python -m spacy download en_core_web_sm
import spacy

nlp = spacy.load('en_core_web_sm')
sentence = "Ashok killed the snake with a stick."
for token in nlp(sentence):
    print(token, token.pos_)
```
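Trained taggers such as spaCy's use the context around each word. The simplest baseline, which assigns each word the tag it most often had in training data, already shows the basic idea. The tiny hand-tagged corpus below is made up, and tagging unseen words as NOUN is an assumption of this sketch.

```python
from collections import Counter, defaultdict

# A tiny hand-tagged training corpus (hypothetical), using Universal POS tags.
TRAINING_DATA = [
    [("Ashok", "PROPN"), ("killed", "VERB"), ("the", "DET"), ("snake", "NOUN")],
    [("the", "DET"), ("snake", "NOUN"), ("bit", "VERB"), ("a", "DET"), ("man", "NOUN")],
    [("a", "DET"), ("man", "NOUN"), ("with", "ADP"), ("a", "DET"), ("stick", "NOUN")],
]


def train_most_frequent_tagger(corpus):
    """Count how often each word carries each tag and keep the most frequent one."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}


def tag(sentence, model, default="NOUN"):
    """Tag whitespace-separated tokens; unknown words get the default tag."""
    return [(tok, model.get(tok.lower(), default)) for tok in sentence.split()]


model = train_most_frequent_tagger(TRAINING_DATA)
print(tag("Ashok killed the snake with a stick", model))
```

This baseline cannot tell "book" the noun from "book" the verb. Context-aware models (HMMs, CRFs, neural networks) are designed to resolve that kind of ambiguity.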

5. Named entity disambiguation

What is named entity disambiguation?: Named entity disambiguation is the process of determining which real-world entity each entity mention in a sentence refers to. For example, in the sentence:

“Apple earned revenue of 200 billion dollars in 2016”

it is the task of named entity disambiguation to infer that Apple in the sentence refers to the company Apple and not the fruit.

Named entity disambiguation generally requires a knowledge base of entities, which is used to link the entity mentions in the sentence to entries in that knowledge base.
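A crude way to see how a knowledge base helps is to give each candidate entity a set of descriptive words. You then choose the candidate whose description overlaps most with the sentence, a simplified Lesk-style overlap heuristic. The knowledge base and keywords below are hypothetical. The papers in this section use learned neural models instead.

```python
import re

# A hypothetical mini knowledge base: each candidate entity for the surface
# form "Apple" comes with a few descriptive keywords.
KNOWLEDGE_BASE = {
    "Apple": {
        "Apple Inc. (company)": {"company", "revenue", "iphone", "billion", "dollars", "shares"},
        "apple (fruit)": {"fruit", "tree", "eat", "juice", "pie", "orchard"},
    }
}


def disambiguate(mention: str, sentence: str) -> str:
    """Pick the candidate whose keywords overlap most with the sentence's words."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    candidates = KNOWLEDGE_BASE[mention]
    return max(candidates, key=lambda name: len(candidates[name] & context))


print(disambiguate("Apple", "Apple earned revenue of 200 billion dollars in 2016"))
print(disambiguate("Apple", "I ate an apple pie made with fruit from our orchard"))
```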

Paper 1: This paper by Huang uses deep semantic relatedness models based on deep neural networks, together with a knowledge base, to achieve a state-of-the-art result in named entity disambiguation.

Paper 2: This paper by Ganea and Hofmann uses local neural attention along with word embeddings, without any manually crafted features.

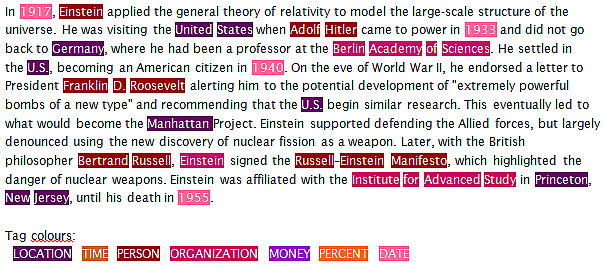

6. Named entity recognition

What is named entity recognition?: Named entity recognition is the task of identifying entities in a sentence and classifying them into categories such as person, organization, date, location, time, etc. For example, an NER system would take a sentence like:

“Ram of Apple Inc. traveled to Sydney on 5th October 2017”

and return something like:

Ram

of

Apple ORG

Inc. ORG

traveled

to

Sydney GPE

on

5th DATE

October DATE

2017 DATE

Here, ORG stands for organization, GPE stands for geopolitical entity (countries, cities, states) and DATE marks dates.

The problem with current NER systems is that even state-of-the-art ones tend to perform poorly on data from a domain different from the one they were trained on.

Paper: This excellent paper uses bi-directional LSTMs and combines supervised and unsupervised learning methods to achieve a state-of-the-art result in named entity recognition in four languages.

Implementation: Below is how you can perform named entity recognition using spaCy.

```python
#!pip install spacy
#!python -m spacy download en_core_web_sm
import spacy

nlp = spacy.load('en_core_web_sm')
sentence = "Ram of Apple Inc. travelled to Sydney on 5th October 2017"
for token in nlp(sentence):
    print(token, token.ent_type_)
```
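Per-token labels like those above are usually merged into multi-word entities. Many NER systems, spaCy included (via `token.ent_iob_`), also expose the IOB scheme, which marks where each entity begins. The sketch below does not call spaCy. It hard-codes a hypothetical tagger output for the example sentence and merges it into spans. In spaCy itself, `doc.ents` already gives you the merged spans.

```python
# Hypothetical tagger output in the IOB scheme: B- begins an entity,
# I- continues it, and O marks tokens outside any entity.
tagged_tokens = [
    ("Ram", "O"), ("of", "O"), ("Apple", "B-ORG"), ("Inc.", "I-ORG"),
    ("travelled", "O"), ("to", "O"), ("Sydney", "B-GPE"), ("on", "O"),
    ("5th", "B-DATE"), ("October", "I-DATE"), ("2017", "I-DATE"),
]


def iob_to_spans(tokens):
    """Merge IOB-tagged tokens into (entity text, entity type) spans."""
    spans = []
    current_words, current_type = [], None
    for word, tag in tokens:
        if tag.startswith("I-") and current_type == tag[2:]:
            current_words.append(word)  # continue the open entity
            continue
        if current_words:  # close any open entity
            spans.append((" ".join(current_words), current_type))
            current_words, current_type = [], None
        if tag != "O":  # a B- tag (or a stray I- tag) starts a new entity
            current_words, current_type = [word], tag[2:]
    if current_words:
        spans.append((" ".join(current_words), current_type))
    return spans


print(iob_to_spans(tagged_tokens))
```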

7. Sentiment analysis

What is sentiment analysis?: Sentiment analysis is a broad range of subjective analysis that uses natural language processing techniques to perform tasks such as identifying the sentiment of a customer review, detecting positive or negative feeling in a sentence, or judging mood through voice or written text analysis. For example:

"I didn't like the chocolate ice cream": a negative experience of the ice cream.

“I did not hate chocolate ice cream”: can be considered a neutral experience.

There is a wide range of methods used to perform sentiment analysis, from counting negative and positive words in a sentence to using LSTMs with word embeddings.
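The simplest end of that range, counting positive and negative words, fits in a few lines. The following are all assumptions of this sketch, not a standard algorithm: the word lists, the two-token negation window, and the rule that a negated negative word counts as neutral. They were chosen so that the two ice cream examples above come out as described.

```python
import re

# Hypothetical mini lexicon; real sentiment lexicons contain thousands of scored words.
POSITIVE = {"like", "love", "great", "good", "enjoyed"}
NEGATIVE = {"hate", "dislike", "bad", "awful", "terrible"}
NEGATORS = {"not", "no", "never", "didn't", "don't", "isn't"}


def lexicon_sentiment(text: str) -> str:
    """Count positive and negative words, with a crude negation rule."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        # A word counts as negated if one of the two preceding tokens is a negator.
        negated = any(t in NEGATORS for t in tokens[max(0, i - 2):i])
        if tok in POSITIVE:
            score += -1 if negated else 1
        elif tok in NEGATIVE:
            # Assumption: "not hate" is treated as neutral rather than positive.
            score += 0 if negated else -1
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(lexicon_sentiment("I didn't like the chocolate ice cream"))  # negative
print(lexicon_sentiment("I did not hate chocolate ice cream"))     # neutral
print(lexicon_sentiment("I love chocolate ice cream"))             # positive
```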

Blog 1: This article focuses on performing sentiment analysis on movie tweets.

Blog 2: This article focuses on conducting sentiment analysis of tweets during the Chennai flood.

Paper 1: This paper takes a supervised learning approach with the Naive Bayes method to classify IMDB reviews.

Paper 2: This paper uses an unsupervised learning method with LDA to identify aspects and sentiments in user-generated reviews. This paper stands out because it addresses the shortage of annotated reviews.

Repository: This is an awesome repository of research papers and implementations of sentiment analysis in various languages.

Dataset 1: Multi-domain sentiment dataset, version 2.0

Dataset 2: Twitter sentiment analysis dataset

Try Twitter sentiment analysis yourself.

8. Semantic text similarity

What is semantic text similarity?: Semantic text similarity is the process of analyzing how similar two pieces of text are with respect to their meaning and essence, rather than their syntax. Note also that similarity is different from relatedness.

For example, car and bus are similar, but car and fuel are related.
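One common baseline for semantic text similarity averages the word embeddings of each text and compares the averages with cosine similarity. Texts with different words but similar meanings can therefore still score high. The 3-dimensional vectors below are invented for illustration; in practice you would use pre-trained embeddings.

```python
import math
import re
from typing import Optional, Sequence

# Hypothetical 3-dimensional word vectors, made up for this illustration.
WORD_VECTORS = {
    "car":   [0.90, 0.10, 0.00],
    "bus":   [0.85, 0.15, 0.05],
    "fuel":  [0.20, 0.90, 0.10],
    "fast":  [0.10, 0.20, 0.90],
    "quick": [0.12, 0.18, 0.88],
}


def sentence_vector(text: str) -> Optional[list]:
    """Average the vectors of known words; unknown words are ignored."""
    vecs = [WORD_VECTORS[w] for w in re.findall(r"[a-z]+", text.lower()) if w in WORD_VECTORS]
    if not vecs:
        return None
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def semantic_similarity(text_a: str, text_b: str) -> float:
    """Cosine similarity of averaged word vectors (0.0 if a text has no known words)."""
    va, vb = sentence_vector(text_a), sentence_vector(text_b)
    if va is None or vb is None:
        return 0.0
    return cosine(va, vb)


print(semantic_similarity("The car is fast", "The bus is quick"))
print(semantic_similarity("The car is fast", "The car needs fuel"))
```

The first pair shares no content words yet scores high, because car/bus and fast/quick have nearby (made-up) vectors. Averaging ignores word order entirely, which is one limitation that the CNN and Tree-LSTM approaches in this section address.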

Paper 1: This paper presents the different approaches to measuring text similarity in detail. A must-read to learn about the existing approaches in one place.

Paper 2: This paper presents a CNN for classifying pairs of short texts.

Paper 3: This paper uses Tree-LSTMs to achieve a state-of-the-art result on the semantic relatedness of texts and on sentiment classification.

9. Language identification

What is language identification?: Language identification is the task of identifying the language in which a piece of content is written. It makes use of the statistical and syntactic properties of languages to perform this task. It can also be considered a special case of text classification.
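Character n-gram statistics are a classic way to exploit those statistical properties. You build a frequency profile of character trigrams for each language and assign new text to the most similar profile. The sketch below compares trigram counts with cosine similarity and uses two tiny, made-up training snippets. It is a simplified illustration, not the method used by the tools and papers listed in this section.

```python
import math
from collections import Counter

# Hypothetical training snippets; a real system uses far more text per language.
TRAINING_TEXT = {
    "en": "the quick brown fox jumps over the lazy dog and the cat is sleeping in the house",
    "es": "el rápido zorro marrón salta sobre el perro perezoso y el gato está durmiendo en la casa",
}


def char_trigrams(text: str) -> Counter:
    """Count character trigrams after lowercasing and keeping only letters."""
    cleaned = "".join(ch if ch.isalpha() else " " for ch in text.lower())
    padded = " " + " ".join(cleaned.split()) + " "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


PROFILES = {lang: char_trigrams(text) for lang, text in TRAINING_TEXT.items()}


def identify_language(text: str) -> str:
    """Return the language whose trigram profile is most similar to the text."""
    grams = char_trigrams(text)
    return max(PROFILES, key=lambda lang: cosine(grams, PROFILES[lang]))


print(identify_language("the dog is in the house"))   # en
print(identify_language("el perro está en la casa"))  # es
```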

Blog: In this fastText blog post, the authors introduce a new tool that can identify 170 languages using 1 MB of memory.

Paper 1: This paper analyzes 7 language identification methods on 285 languages.

Paper 2: This paper describes how deep neural networks can be used to achieve state-of-the-art results in automatic language identification.

10. Text summarization

What is text summarization?: Text summarization is the process of shortening a text by identifying its important points and creating a summary from them. The objective of text summarization is to retain as much information as possible while shortening the text as much as possible, without altering its meaning.
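A minimal extractive approach selects existing sentences, as opposed to the abstractive models in the papers of this section. It scores each sentence by how frequent its words are in the whole text and keeps the top-scoring ones. The stop-word list and the per-sentence averaging are assumptions of this sketch. gensim's `summarize` ranks sentences differently, with a TextRank-based graph algorithm.

```python
import re
from collections import Counter

# Hypothetical stop-word list; real systems use a much longer one.
STOP_WORDS = {"the", "a", "an", "of", "to", "and", "in", "is", "are", "by", "with", "that", "it"}


def summarize_extractive(text: str, num_sentences: int = 2) -> str:
    """Frequency-based extractive summary: keep the highest-scoring sentences."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]
    freq = Counter(words)

    def score(sentence: str) -> float:
        tokens = [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOP_WORDS]
        # Average frequency, so long sentences are not automatically favoured.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    # Emit the chosen sentences in their original order.
    return " ".join(sentences[i] for i in sorted(ranked[:num_sentences]))


text = ("Cats are small pets. Cats like to sleep. "
        "Dogs bark loudly at night. Many cats sleep all day.")
print(summarize_extractive(text, num_sentences=2))
```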

Paper 1: This paper describes a neural attention model-based approach to abstractive sentence summarization.

Paper 2: This paper describes how sequence-to-sequence RNNs can be used to achieve state-of-the-art results in text summarization.

Repository: This repository from the Google Brain team has the code for a customized sequence-to-sequence model for text summarization. The model is trained on the Gigaword dataset.

Application: The autotldr bot on Reddit uses text summarization to summarize articles in the comments of a post. This feature turned out to be very popular among Reddit users.

Implementation: This is how you can quickly summarize your text using the gensim package. Note that the gensim.summarization module was removed in gensim 4.0, so this requires gensim 3.x.

```python
#!pip install "gensim<4.0"
from gensim.summarization import summarize

sentence = (
    "Automatic summarization is the process of shortening a text document with software, in order to create a summary with the major points of the original document. Technologies that can make a coherent summary take into account variables such as length, writing style and syntax. "
    "Automatic data summarization is part of machine learning and data mining. The main idea of summarization is to find a subset of data which contains the information of the entire set. Such techniques are widely used in industry today. Search engines are an example; others include summarization of documents, image collections and videos. Document summarization tries to create a representative summary or abstract of the entire document, by finding the most informative sentences, while in image summarization the system finds the most representative and important (i.e. salient) images. For surveillance videos, one might want to extract the important events from the uneventful context. "
    "There are two general approaches to automatic summarization: extraction and abstraction. Extractive methods work by selecting a subset of existing words, phrases, or sentences in the original text to form the summary. In contrast, abstractive methods build an internal semantic representation and then use natural language generation techniques to create a summary that is closer to what a human might express. Such a summary might include verbal innovations. Research to date has focused primarily on extractive methods, which are appropriate for image collection summarization and video summarization."
)
print(summarize(sentence))
```

Final notes

So those were the most common NLP tasks, along with relevant resources in the form of blogs, research papers, repositories, applications, etc. If you think there is a great resource on any of these 10 tasks that I have missed, or you want to suggest adding another task, feel free to comment with your suggestions and feedback.

We also have a great course, NLP using Python, for you if you want to become an NLP practitioner.

Happy learning!