Introduction

It's no secret that I'm a big fan of R. I have relied on it since I learned statistics in college. In fact, R is still my preferred language for machine learning projects.

Three things mainly attracted me to R:

- Easy to understand and use syntax

- The amazing RStudio tool

- R packages!

R offers a large number of packages for machine learning tasks, including 'dplyr' for data manipulation, 'ggplot2' for data visualization, 'caret' for building ML models, and more.

There are even R packages for specific tasks, including credit risk scoring, scraping data from websites, econometrics, and so on. There's a reason R is beloved among statisticians around the world: the sheer number of R packages available makes life much easier.

In this article, I will showcase eight R packages that have gone unnoticed among data scientists but are incredibly useful for specific machine learning tasks. To get you started, I have included an example, along with code, for each package.

Trust me, your love for R is about to undergo another revolution!

The R packages we'll cover in this article

I have broadly divided these R packages into three categories, plus a bonus section:

- Data visualization

- Machine learning

- Other miscellaneous R packages

- Bonus: More R packages!

Data visualization

R is an amazing tool for visualizing data. The ease with which we can generate all kinds of graphics with just one or two lines of code? Truly a time saver.

R provides seemingly countless ways to visualize your data. Even when I am using Python for a certain task, I go back to R to explore and visualize my data. I'm sure most R users feel the same way!

Let's check out some awesome but lesser-known R packages for exploratory data analysis.

DataExplorer is my go-to package for exploratory data analysis. From plotting the structure of the data to QQ plots and even creating reports for your dataset, this package does it all.

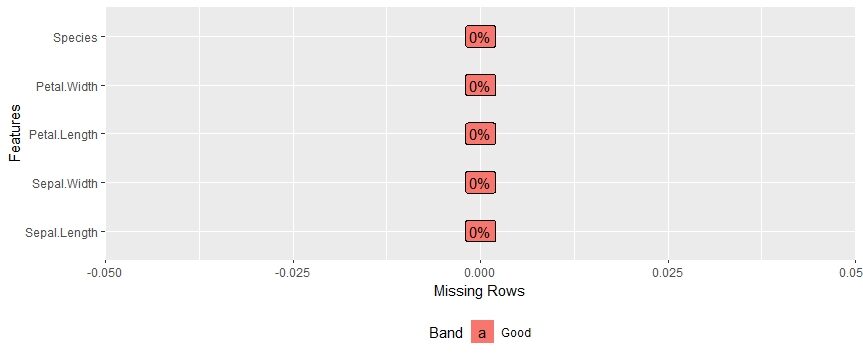

Let's see what DataExplorer can do with an example. Assume we have stored our data in a variable (the code below uses the built-in iris dataset). Now, we want to find out the percentage of missing values in each feature. This is extremely useful when working with massive datasets, where tallying the missing values by hand can be time consuming.

You can install DataExplorer using the following code:

install.packages("DataExplorer")

Now let's see what DataExplorer can do for us:

library(DataExplorer)

data(iris)

plot_missing(iris)

We get a really intuitive graph for the missing values:

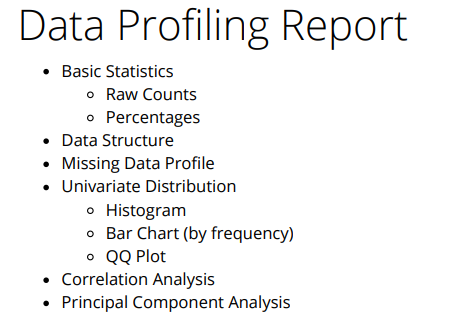
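The built-in iris data has no missing values, so the plot is not very dramatic. To see what the numbers behind such a plot look like, here is a minimal base R sketch. It assumes the built-in airquality dataset (my choice, not part of the original example) because it does contain NAs. This is just the underlying calculation, not how DataExplorer works internally.

```r
# Percentage of missing values per column, using base R only.
# airquality ships with R and contains NAs in some columns.
data(airquality)

# is.na() gives a TRUE/FALSE matrix; the column mean is the share of NAs
missing_pct <- colMeans(is.na(airquality)) * 100

# Show the result, highest share of missing values first
round(sort(missing_pct, decreasing = TRUE), 1)
```

On your own data, swap airquality for your data frame and compare the numbers with what plot_missing() draws.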

One of my favorite aspects of DataExplorer is the full report that we can generate using just one line of code:

create_report(iris)

Below are the different types of factors we get in this report:

You can access the full report through this link. A VERY useful package.

How about a 'drag and drop' add-in to generate plots in R? That's right: esquisse is a package that lets you create plots without having to code them.

esquisse is built on top of the ggplot2 package. That means you can interactively explore your data in the esquisse environment by generating ggplot2 graphs.

Use the following code to install and load esquisse on your machine:

# From CRAN

install.packages("esquisse")

#Load the package in R

library(esquisse)

esquisse::esquisser() #launches the add-in

You can also start the esquisse add-in through the RStudio menu. The esquisse user interface looks like this:

Pretty cool, right? Go ahead and play around with different types of plots: it's an eye-opening experience.

Machine learning

Ah, building machine learning models in R. The holy grail we data scientists strive for when we take up new machine learning projects. You may have used the 'caret' package to build models before.

Now, let me introduce you to some R packages that can change the way you approach the model building process.

One of the main reasons Python got ahead of R was its machine learning focused libraries (like scikit-learn). For a long time, R lacked this ability. Sure, you could use different packages for different ML tasks, but there was no single package that could do it all. We had to call three different libraries to build three different models.

Not ideal.

And then the mlr package arrived. It's an amazing package that lets us perform all kinds of machine learning tasks. mlr includes all the popular machine learning algorithms we use in our projects.

I highly recommend reading the following article to delve into MLR:

Let's see how to install mlr and build a random forest model on the iris dataset:

install.packages("mlr")

library(mlr)

# Load the dataset

data(iris)

# create task

task = makeClassifTask(id = "iris", iris, target = "Species")

# create learner (requires the randomForest package to be installed)

learner = makeLearner("classif.randomForest")

# build model and evaluate

holdout(learner, task)

# measure accuracy

holdout(learner, task, measures = acc)

Output:

Resample Result

Task: iris

Learner: classif.randomForest

Aggr perf: acc.test.mean=0.9200000 # 92% accuracy - not bad!

Runtime: 0.0239332

A common problem with different functions in R that do the same thing is that they can have different interfaces and arguments. Take the random forest algorithm, for instance. The code you would use with the randomForest package differs from the code you would use with the caret package, right?

Like mlr, parsnip removes the need to reference multiple packages for a given machine learning algorithm. It successfully mimics Python's scikit-learn package in R.

Let's look at the following simple example to give you an idea of how parsnip works for a linear regression problem:

install.packages("parsnip")

library(parsnip)

#Load the dataset

data(mtcars)

#Build a linear regression model

fit <- linear_reg("regression") %>%

  set_engine("lm") %>%

  fit(mpg ~ ., data = mtcars)

fit #prints the fitted model, including the coefficient values

Output:

parsnip model object

Call:

stats::lm(formula = formula, data = data)

Coefficients:

(Intercept) cyl disp hp drat wt qsec

12.30337 -0.11144 0.01334 -0.02148 0.78711 -3.71530 0.82104

vs am gear carb

0.31776 2.52023 0.65541 -0.19942
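To come back to the random forest case mentioned earlier, here is a small sketch of what the unified interface buys you. It assumes both the ranger and randomForest packages are installed alongside parsnip, and the variable names are mine. The number of trees is written once as trees, even though ranger calls that argument num.trees and randomForest calls it ntree.

```r
library(parsnip)

# One model specification, written once with parsnip's argument names
rf_spec <- rand_forest(mode = "classification", trees = 100, mtry = 3)

# Same specification, two different engines
# (assumes the ranger and randomForest packages are installed)
fit_ranger <- rf_spec %>%
  set_engine("ranger") %>%
  fit(Species ~ ., data = iris)

fit_rf <- rf_spec %>%
  set_engine("randomForest") %>%
  fit(Species ~ ., data = iris)

# Predictions come back in the same format regardless of the engine
head(predict(fit_ranger, new_data = iris))
head(predict(fit_rf, new_data = iris))
```

Switching the underlying implementation is then a one-word change in set_engine(), which is handy when comparing engines.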

ranger is one of my favorite R packages. I regularly use random forests to create baseline models, especially when I participate in data science hackathons.

Here is a question: how many times have you run into a slow random forest computation on large datasets in R? It happens too often on my old machine.

Packages like caret, randomForest and rf take a long time to compute results. The 'ranger' package speeds up the modeling process for the random forest algorithm. It helps you build a large number of trees in less time.

Let's code a random forest model using ranger:

install.packages("ranger")

#Load the ranger package

library(ranger)

ranger(Species ~ ., data = iris, num.trees = 100, mtry = 3)

#Split the data into training and test sets

train.idx <- sample(nrow(iris), 2/3 * nrow(iris))

iris.train <- iris[train.idx, ]

iris.test <- iris[-train.idx, ]

#Fit on the training set and predict on the test set

rg.iris <- ranger(Species ~ ., data = iris.train)

pred.iris <- predict(rg.iris, data = iris.test)

#Build a confusion matrix

table(iris.test$Species, pred.iris$predictions)

Output:

setosa versicolor virginica

setosa 16 0 0

versicolor 0 16 2

virginica 0 0 16

Quite an impressive performance. You should try ranger on more complex datasets and see how much faster your computations become.
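If you want a single number out of that confusion matrix, here is a minimal base R sketch. I have typed in the counts from the output above by hand (rows are the actual species, columns the predictions). In your own session you would use the result of table() directly, and your counts will differ because the train/test split is random.

```r
# Confusion matrix copied from the output above
# (rows = actual species, columns = predicted species)
species <- c("setosa", "versicolor", "virginica")
cm <- matrix(c(16,  0,  0,
                0, 16,  2,
                0,  0, 16),
             nrow = 3, byrow = TRUE,
             dimnames = list(actual = species, predicted = species))

# Overall accuracy: correctly classified cases (the diagonal) over all cases
accuracy <- sum(diag(cm)) / sum(cm)
accuracy

# Per-class recall: correct predictions divided by the actual count per species
recall <- diag(cm) / rowSums(cm)
round(recall, 3)
```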

Tired of running your linear regression model on different pieces of data and calculating the evaluation metrics for each model? The purrr package comes to your rescue.

You can also build generalized linear models (glm) for different pieces of data and calculate p-values for each feature, all as a list. The advantages of purrr are endless!

Let's look at an example to understand how it works. We will build a linear regression model here and extract the R-squared values:

library(purrr)

#First, load the mtcars data

data(mtcars)

mtcars %>%

  split(.$cyl) %>% #split on cyl to create three sets of data

  map(~ lm(mpg ~ wt, data = .)) %>%

  map(summary) %>%

  map_dbl("r.squared")

Output:

4 6 8

0.5086326 0.4645102 0.4229655

So, did you notice? This example uses purrr to solve a fairly realistic problem:

- Split a data frame into pieces

- Fit a model to each piece

- Calculate summary

- Finally, extract the R-squared values

It saves us a lot of time, right? Instead of fitting three different models and running three commands to pull out the R-squared values, we use a single pipeline.
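For comparison, here are the same four steps spelled out in base R, one step per line. This is only a sketch to show what the pipeline is doing under the hood. The intermediate variable names are mine.

```r
data(mtcars)

# 1. Split the data frame into pieces, one per cyl value
pieces <- split(mtcars, mtcars$cyl)

# 2. Fit a model to each piece
models <- lapply(pieces, function(d) lm(mpg ~ wt, data = d))

# 3. Calculate the summary of each model
summaries <- lapply(models, summary)

# 4. Extract the R-squared value from each summary
r_squared <- sapply(summaries, function(s) s$r.squared)
r_squared
```

The result is a named numeric vector, with one R-squared value per cylinder group, just like the purrr version returns.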

Utilities: Other awesome R packages

Let's look at some other packages that don't necessarily fall under the umbrella of 'machine learning'. I have found them useful in terms of working with R in general.

Sentiment analysis is one of the most popular applications of machine learning. It is an inescapable reality in today's digital world. And Twitter is a prime target for extracting tweets and building models to understand and predict sentiment.

Now, there are several R packages for extracting or scraping tweets and performing sentiment analysis. The 'rtweet' package does the same. So, how does it differ from the other packages out there?

'rtweet' also lets you check tweet trends from within R itself. Impressive!

# install rtweet from CRAN

install.packages("rtweet")

# load rtweet package

library(rtweet)

All users must be authorized to interact with the Twitter API. To obtain authorization, follow the instructions below:

1. Create a Twitter app

2. Create and save your access token

For a detailed step-by-step procedure to get Twitter authentication, follow this link here.

You can search for tweets with certain hashtags using the single line of code below. Let's try searching for all tweets with the hashtag #avengers, since Infinity War is all set for release.

#1000 tweets with hashtag avengers

tweets <- search_tweets("#avengers", n = 1000, include_rts = FALSE)

You can even access the user IDs of people who follow a certain page. Let's see an example:

## get user IDs of accounts following marvel

marvel_flw <- get_followers("marvel", n = 20000)

You can do much more with this package. Give it a try and don't forget to update the community if you find something exciting.

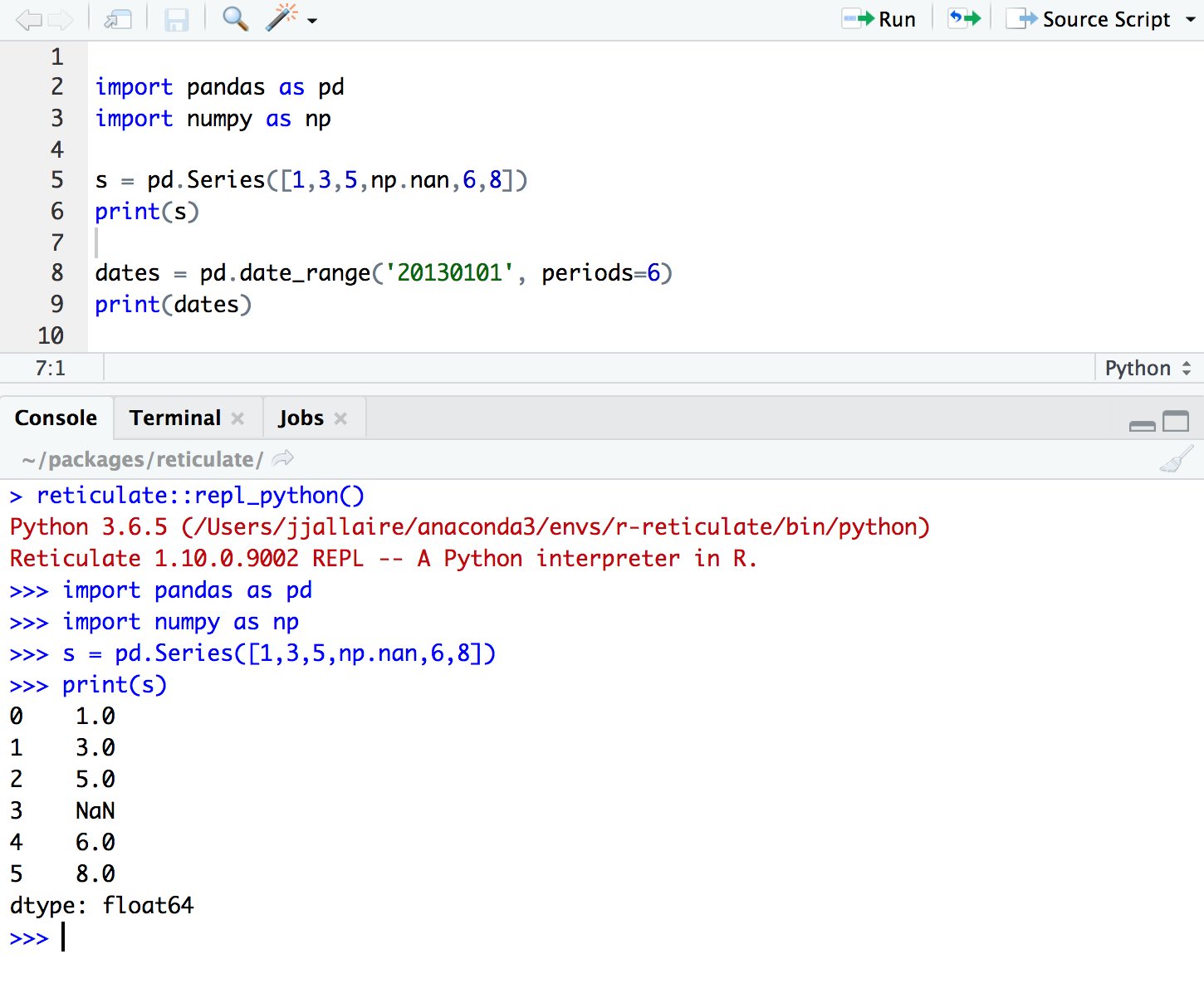

Do you love coding in both R and Python, but want to stick with RStudio? reticulate is the answer! The package solves this important problem by providing a Python interface in R. You can easily use major Python libraries like numpy, pandas and matplotlib inside R!

You can also transfer your progress with data easily from Python to R and from R to Python with just one line of code. Isn't it amazing? See the block of code below to see how easy it is to run Python in R.

Before proceeding with the direct installation of reticulate in R, you will first need to install TensorFlow and Keras.

install.packages("tensorflow")

install.packages("keras")

library(tensorflow)

library(keras)

install_keras()

install.packages("reticulate")

library(reticulate)

And you're ready to go! Run the commands I provided above in the screenshot and test your data science projects similarly.
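As a minimal sketch of what running Python in R looks like, here is a numpy call made from R. It assumes reticulate can find a Python installation that has numpy, so I have wrapped it in a check that skips the code otherwise. The variable names are mine.

```r
# Sketch: call numpy from R through reticulate.
# Runs only if reticulate and a Python installation with numpy are available.
if (requireNamespace("reticulate", quietly = TRUE) &&
    reticulate::py_module_available("numpy")) {

  # Import the Python module; its functions are accessed with $
  np <- reticulate::import("numpy")

  # The R vector is converted to a numpy array on the way in,
  # and the result is converted back to an R numeric on the way out
  avg <- np$mean(c(1, 2, 3, 4))
  print(avg)
}
```

The same import() pattern works for other Python modules such as pandas, as long as they are installed in the Python environment reticulate is using.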

Bonus: More R packages!

Here are two other R utility packages for all you programming nerds!

Do you update your R packages individually? It can be a tedious task, especially when there are multiple packages in play.

The 'installr' package lets you update R and all its packages using a single command! Instead of checking the latest version of each package, we can use installr to update all packages in one go.

# installing/loading the package:

if(!require(installr)) {

install.packages("installr"); require(installr)} #load / install+load installr

# using the package:

updateR() # this will start the updating process of your R installation.

# It will check for newer versions, and if one is available, will guide you through the decisions you'd need to make

What package do you use to install libraries from GitHub? Most of us have relied on the 'devtools' package for a long time. It seemed to be the only way. But there was a catch: we needed to remember the developer's name to install a package.
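To make that concrete, here is a small sketch of the devtools way. devtools::install_github() expects the repository as "developer/package". The account name twitter for the AnomalyDetection package is my assumption for illustration. The install call itself is left commented out because it needs devtools and a network connection.

```r
# devtools needs the repository in "developer/package" form
developer <- "twitter"            # GitHub account that owns the repo (assumed here)
package   <- "AnomalyDetection"   # package name

repo <- paste(developer, package, sep = "/")
repo

# Uncomment to actually install (requires devtools and internet access):
# devtools::install_github(repo)
```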

With the 'githubinstall' package, the developer name is no longer required.

install.packages("githubinstall")

#Install any GitHub package by supplying the name

githubinstall("PackageName")

#githubinstall("AnomalyDetection")

Final notes

This is by no means an exhaustive list. There are many other R packages with useful features that most people have overlooked.

Do you know of any packages that I missed in this article? Or have you used any of the above in your projects? I'd love to hear from you! Connect with me in the comments section below and let's talk about R!