Introduction

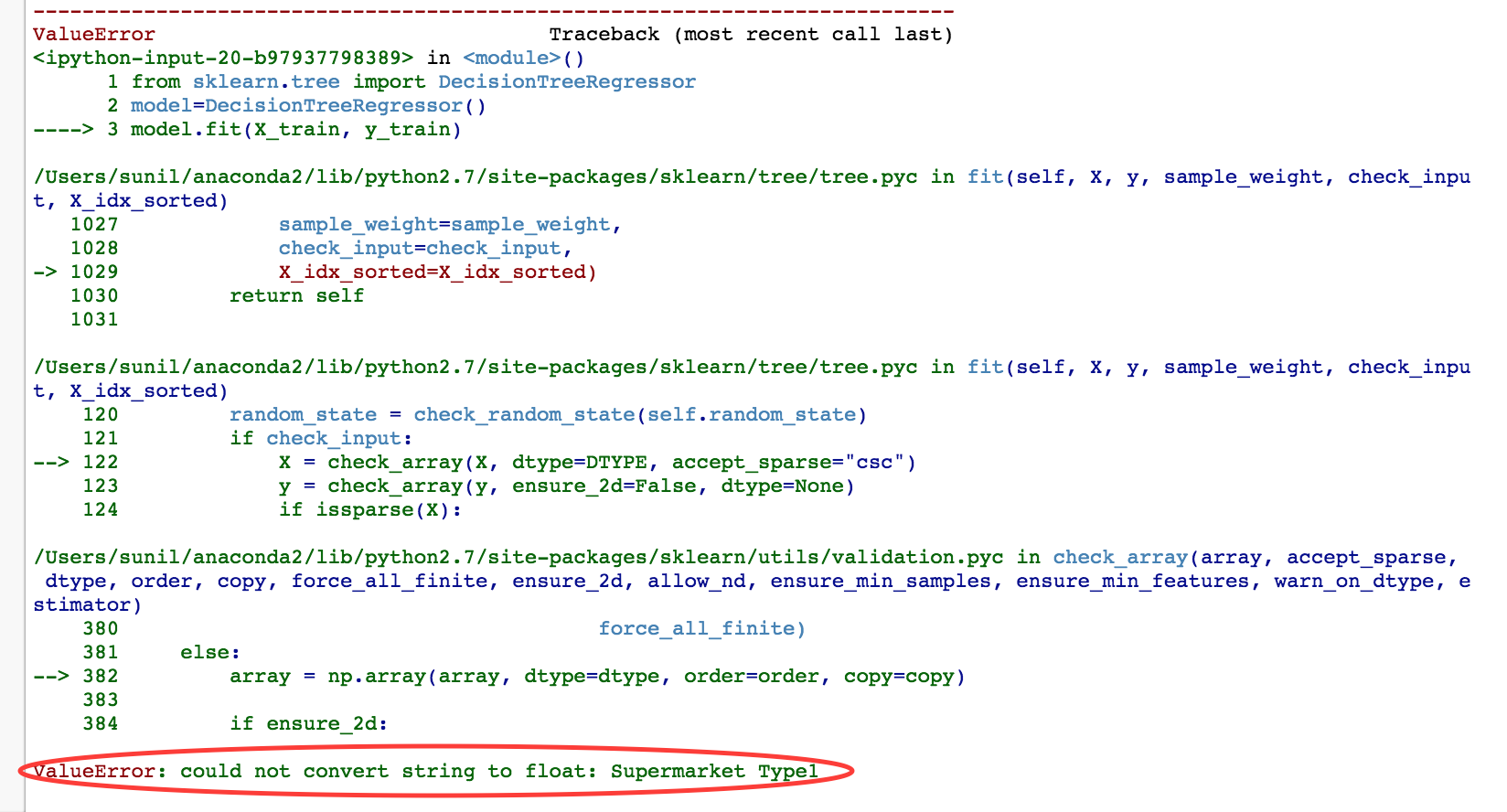

How many of you have seen this error when creating your machine learning models with “sklearn”?

I bet most of us have, at least in our first days.

This error occurs when dealing with categorical variables (strings). And of course, you must convert these categories to a numeric format.

To perform this conversion, we use various preprocessing methods like “label encoding”, “one-hot encoding” and others.
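The two encodings named above can be sketched in a few lines of pandas. This is a minimal illustration on a hypothetical toy column: `pd.factorize` assigns one integer code per category (label encoding), and `pd.get_dummies` creates one binary column per category (one-hot encoding).

```python
import pandas as pd

# A toy categorical column (hypothetical data for illustration)
colors = pd.Series(["red", "green", "red", "blue"], name="color")

# Label encoding: each category gets an integer code, in order of first appearance
codes, uniques = pd.factorize(colors)
print(codes.tolist())   # [0, 1, 0, 2]
print(list(uniques))    # ['red', 'green', 'blue']

# One-hot encoding: one indicator column per category (sorted by category name)
one_hot = pd.get_dummies(colors, prefix="color")
print(list(one_hot.columns))  # ['color_blue', 'color_green', 'color_red']
```

Label encoding keeps a single column but implies an order between categories; one-hot encoding avoids that at the cost of one column per category.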

In this article, I will talk about “CatBoost”, a recently open-sourced library developed and contributed by Yandex. CatBoost can use categorical features directly and is scalable in nature.

“This is the first Russian machine learning technology that is open source,” said Mikhail Bilenko, Yandex's head of machine intelligence and research.

P.S. You can also read my earlier article, “How to deal with categorical variables?”.

Table of Contents

- What is CatBoost?

- Advantages of the CatBoost Library

- CatBoost compared to other boost algorithms

- CatBoost Installation

- Solving the ML challenge using CatBoost

- Final notes

1. What is CatBoost?

CatBoost is an open source machine learning algorithm from Yandex. It can easily be integrated with deep learning frameworks like Google's TensorFlow and Apple's Core ML. It can work with diverse data types to help solve a wide range of problems that businesses face today. On top of that, it provides best-in-class accuracy.

It is especially powerful in two ways:

- Produces state-of-the-art results without the extensive data training typically required by other machine learning methods, and

- Provides powerful out-of-the-box support for the more descriptive data formats that accompany many business problems.

The name “CatBoost” comes from two words: “Category” and “Boosting”.

As discussed, the library works well with multiple categories of data, such as audio, text, and images, including historical data.

“Boost” comes from the gradient boosting machine learning algorithm, as this library is based on gradient boosting. Gradient boosting is a powerful machine learning algorithm that is widely applied to many types of business challenges, such as fraud detection, recommendation and forecasting, and it performs well on them. It can also return very good results with relatively little data, unlike deep learning models that need to learn from a large amount of data.
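To make the idea of gradient boosting concrete, here is a minimal sketch of plain gradient boosting for squared error, written from scratch with NumPy. It is a generic illustration, not CatBoost's algorithm (CatBoost uses its own tree type and ordered boosting): each round fits a one-split "stump" to the current residuals, which are the negative gradient of the squared loss, and adds a shrunken copy of it to the ensemble. The function names `fit_stump` and `gradient_boost` are hypothetical.

```python
import numpy as np


def fit_stump(x, residuals):
    """Return the one-split regression stump on x that best fits the
    residuals in the least-squares sense."""
    best = None
    for threshold in np.unique(x)[:-1]:  # skip the max so both sides are non-empty
        left, right = residuals[x <= threshold], residuals[x > threshold]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, threshold, left.mean(), right.mean())
    _, threshold, left_value, right_value = best
    return lambda z: np.where(z <= threshold, left_value, right_value)


def gradient_boost(x, y, n_rounds=100, learning_rate=0.1):
    """Gradient boosting for squared error. For L = 0.5 * (y - F)^2 the negative
    gradient with respect to F is the residual y - F, so each stump fits residuals."""
    base = float(y.mean())
    pred = np.full(y.shape, base)
    stumps = []
    for _ in range(n_rounds):
        residuals = y - pred                    # negative gradient of the loss
        stump = fit_stump(x, residuals)         # weak learner fitted to it
        pred = pred + learning_rate * stump(x)  # shrunken additive update
        stumps.append(stump)
    return lambda z: base + learning_rate * sum(s(z) for s in stumps)


# Usage on synthetic 1-D data (hypothetical example)
rng = np.random.default_rng(0)
x = np.linspace(0, 10, 200)
y = np.sin(x) + rng.normal(scale=0.1, size=x.size)
model = gradient_boost(x, y)
train_mse = float(np.mean((y - model(x)) ** 2))
print(f"variance of y: {np.var(y):.3f}, training MSE: {train_mse:.3f}")
```

The small learning rate means each weak learner only nudges the prediction, which is the same role `learning_rate` plays in CatBoost's own parameters.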

Here is a video message from Mikhail Bilenko, Yandex's head of machine intelligence and research, and Anna Veronika Dorogush, Director of Machine Learning Systems at Yandex.

2. Advantages of CatBoost Library

- Performance: CatBoost provides state-of-the-art results and is competitive with any leading machine learning algorithm on the performance front.

- Automatic handling of categorical features: We can use CatBoost without any explicit preprocessing to convert categories into numbers. CatBoost converts categorical values into numbers using various statistics on combinations of categorical features and combinations of categorical and numerical features. You can read more about this here.

- Robust: It reduces the need for extensive hyperparameter tuning and lowers the chances of overfitting, which also leads to more generalized models. Still, CatBoost has multiple parameters to tune, such as the number of trees, learning rate, regularization, tree depth, fold size, bagging temperature and others. You can read about all these parameters here.

- Easy to use: You can use CatBoost from the command line or through an easy-to-use API for both Python and R.
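One of the statistics behind the automatic categorical handling described above is a smoothed target mean per category. The CatBoost paper describes computing it in an "ordered" way: each row is encoded using only the rows before it, so a row's own label never leaks into its encoding. The sketch below is a simplified, hypothetical version of that idea with a single fixed row order and one prior; the real library additionally uses random permutations and feature combinations, which are omitted here.

```python
def ordered_target_statistics(categories, targets, prior=0.5, prior_weight=1.0):
    """Encode each row from the rows *before* it that share its category,
    smoothed toward a prior:

        encoding_i = (sum of earlier targets in category + prior_weight * prior)
                     / (count of earlier rows in category + prior_weight)
    """
    sums, counts = {}, {}
    encoded = []
    for category, target in zip(categories, targets):
        s, c = sums.get(category, 0.0), counts.get(category, 0)
        encoded.append((s + prior_weight * prior) / (c + prior_weight))
        # Update running statistics only after encoding the current row
        sums[category] = s + target
        counts[category] = c + 1
    return encoded


# Hypothetical example: a binary target and one categorical column
enc = ordered_target_statistics(["a", "b", "a", "a", "b"], [1, 0, 1, 0, 1])
print([round(v, 3) for v in enc])  # [0.5, 0.5, 0.75, 0.833, 0.25]
```

The first occurrence of each category falls back to the prior, and later rows move toward that category's observed target rate.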

3. CatBoost: comparison to other boost libraries

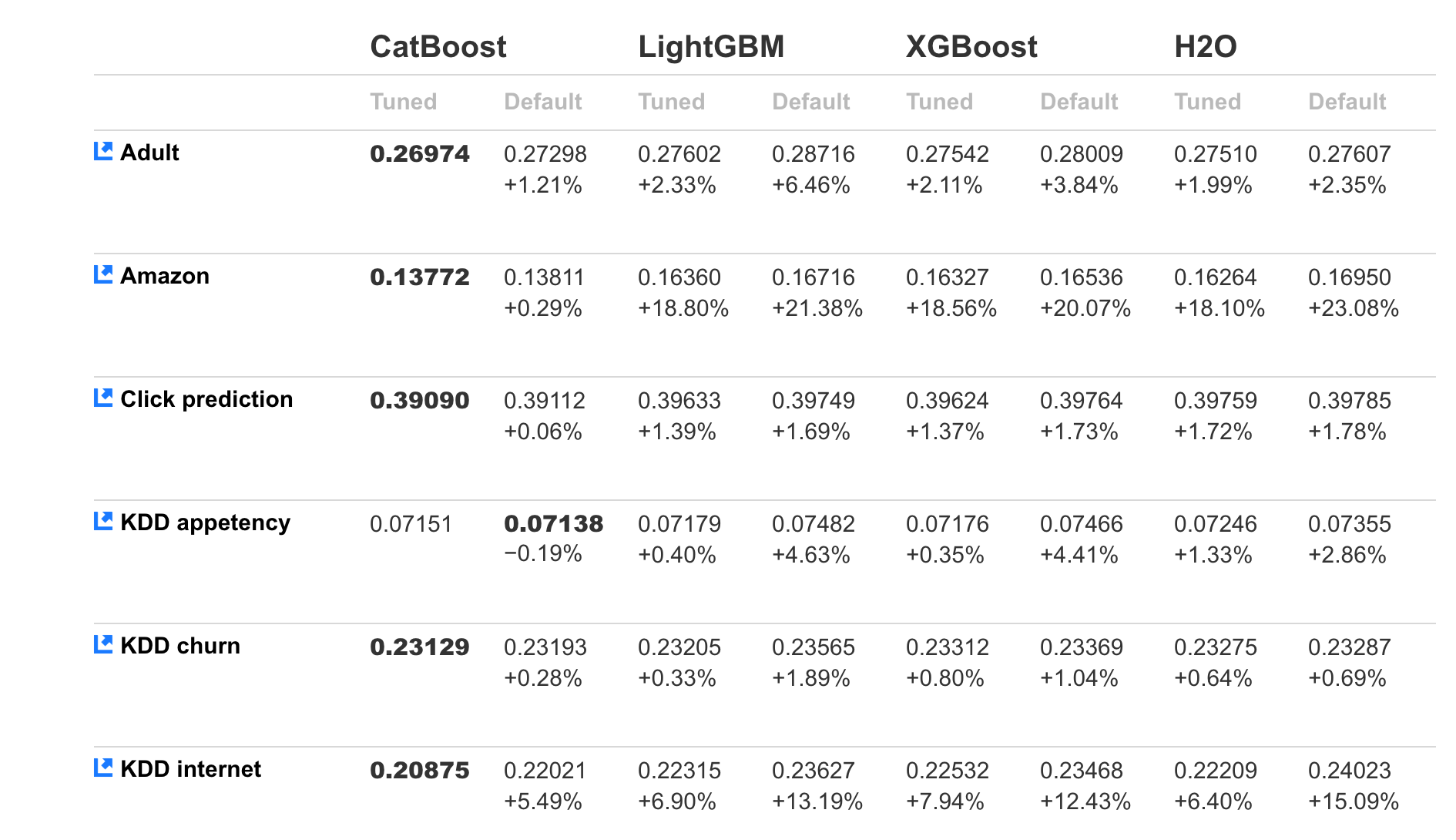

We have multiple boosting libraries like XGBoost, H2O and LightGBM, and all of them perform well on a variety of problems. The CatBoost developers have benchmarked its performance against the competition on standard ML datasets:

The comparison above shows the log loss value on the test data, and it is lowest for CatBoost in most cases. This suggests that CatBoost performs best in most cases, for both tuned and default models.
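For readers unfamiliar with the metric in the benchmark, here is a minimal sketch of binary log loss in NumPy, assuming a two-class problem. It averages the negative log of the probability assigned to the true class, so confident correct predictions score low and hesitant or wrong ones score high. The function name `log_loss` and the sample values are illustrative.

```python
import numpy as np


def log_loss(y_true, p_pred, eps=1e-15):
    """Binary log loss: -mean(y * log(p) + (1 - y) * log(1 - p)); lower is better."""
    y = np.asarray(y_true, dtype=float)
    # Clip probabilities so log(0) never occurs
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


y_true = [1, 0, 1, 1]
confident = [0.9, 0.1, 0.8, 0.95]  # confident and correct
hedging = [0.6, 0.4, 0.6, 0.6]     # correct but unsure
print(log_loss(y_true, confident), log_loss(y_true, hedging))
```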

Besides this, CatBoost does not require converting the dataset to any specific format, unlike XGBoost and LightGBM.

4. CatBoost Installation

CatBoost is easy to install for both Python and R. You need 64-bit versions of Python and R.
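Before installing, you can confirm that your Python interpreter is 64-bit by checking the size of a C pointer, as in the small sketch below (the helper name `python_bitness` is hypothetical). In R, `.Machine$sizeof.pointer` returns 8 on a 64-bit build.

```python
import struct
import sys


def python_bitness() -> int:
    """Return 64 on a 64-bit interpreter, 32 on a 32-bit one (pointer size in bits)."""
    return struct.calcsize("P") * 8


print(f"Python {sys.version.split()[0]} is {python_bitness()}-bit")
```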

Below are the installation steps for Python and R:

4.1 Python installation:

```shell
pip install catboost
```

4.2 R installation:

```r
install.packages('devtools')
devtools::install_github('catboost/catboost', subdir = "catboost/R-package")
```

5. Solving the ML challenge using CatBoost

The CatBoost library can be used to solve both classification and regression challenges. For classification, you can use “CatBoostClassifier” and for regression, “CatBoostRegressor”.


In this article, I am solving the “Big Mart Sales” practice problem with CatBoost. It is a regression challenge, so we will use CatBoostRegressor. First, I will go through the basic steps (I will not perform any feature engineering; I will just build a basic model).

```python
import pandas as pd
import numpy as np
from catboost import CatBoostRegressor

# Read training and testing files
train = pd.read_csv("train.csv")
test = pd.read_csv("test.csv")
```

#Identify the datatype of variables

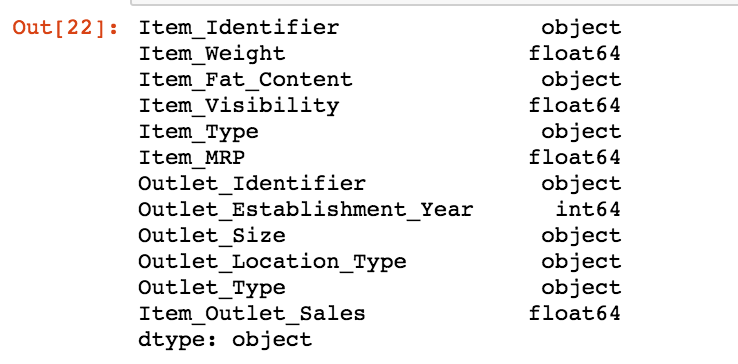

train.dtypes

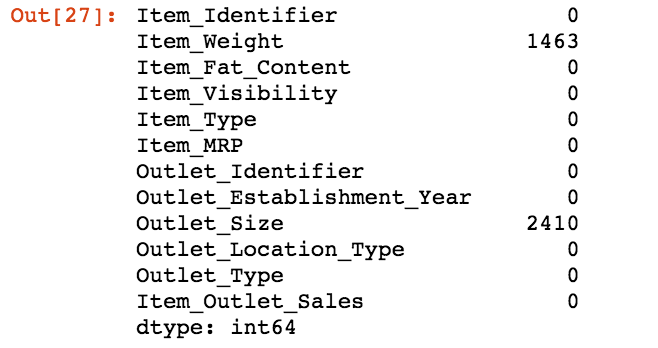

```python
# Find the number of missing values in each column
train.isnull().sum()
```

```python
# Impute missing values in both train and test
train.fillna(-999, inplace=True)
test.fillna(-999, inplace=True)
```

```python
from sklearn.model_selection import train_test_split

# Create a training set for modeling and a validation set to check model performance
X = train.drop(['Item_Outlet_Sales'], axis=1)
y = train.Item_Outlet_Sales
X_train, X_validation, y_train, y_validation = train_test_split(X, y, train_size=0.7, random_state=1234)
```

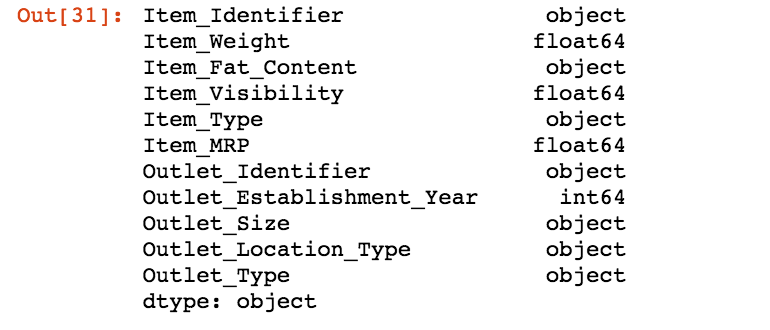

```python
# Look at the data type of each variable
X.dtypes
```

Next, we only need to identify the categorical variables. We will not perform any preprocessing steps on them:

```python
# Treat every column whose dtype is not float as categorical
categorical_features_indices = np.where(X.dtypes != float)[0]
```
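The dtype rule above marks every non-float column as categorical, which includes integer columns such as a year. The sketch below shows this on a hypothetical mini-frame that mirrors a few Big Mart column types, together with an alternative that selects only non-numeric columns by name (CatBoost's `cat_features` also accepts column names when the data is a DataFrame). Which rule fits better depends on whether you want integer columns treated as categories.

```python
import numpy as np
import pandas as pd

# Hypothetical mini-frame mirroring a few Big Mart column types
X = pd.DataFrame({
    "Item_Weight": [9.3, 5.92, 17.5],
    "Item_Fat_Content": ["Low Fat", "Regular", "Low Fat"],
    "Outlet_Establishment_Year": [1999, 2009, 1999],
    "Item_MRP": [249.8, 48.3, 141.6],
})

# Same rule as above: every column that is not float is treated as categorical
indices = np.where(X.dtypes != float)[0]
print(indices.tolist())  # [1, 2]: the integer year column is picked up too

# Alternative: only non-numeric columns, selected by name
names = X.select_dtypes(exclude="number").columns.tolist()
print(names)  # ['Item_Fat_Content']
```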

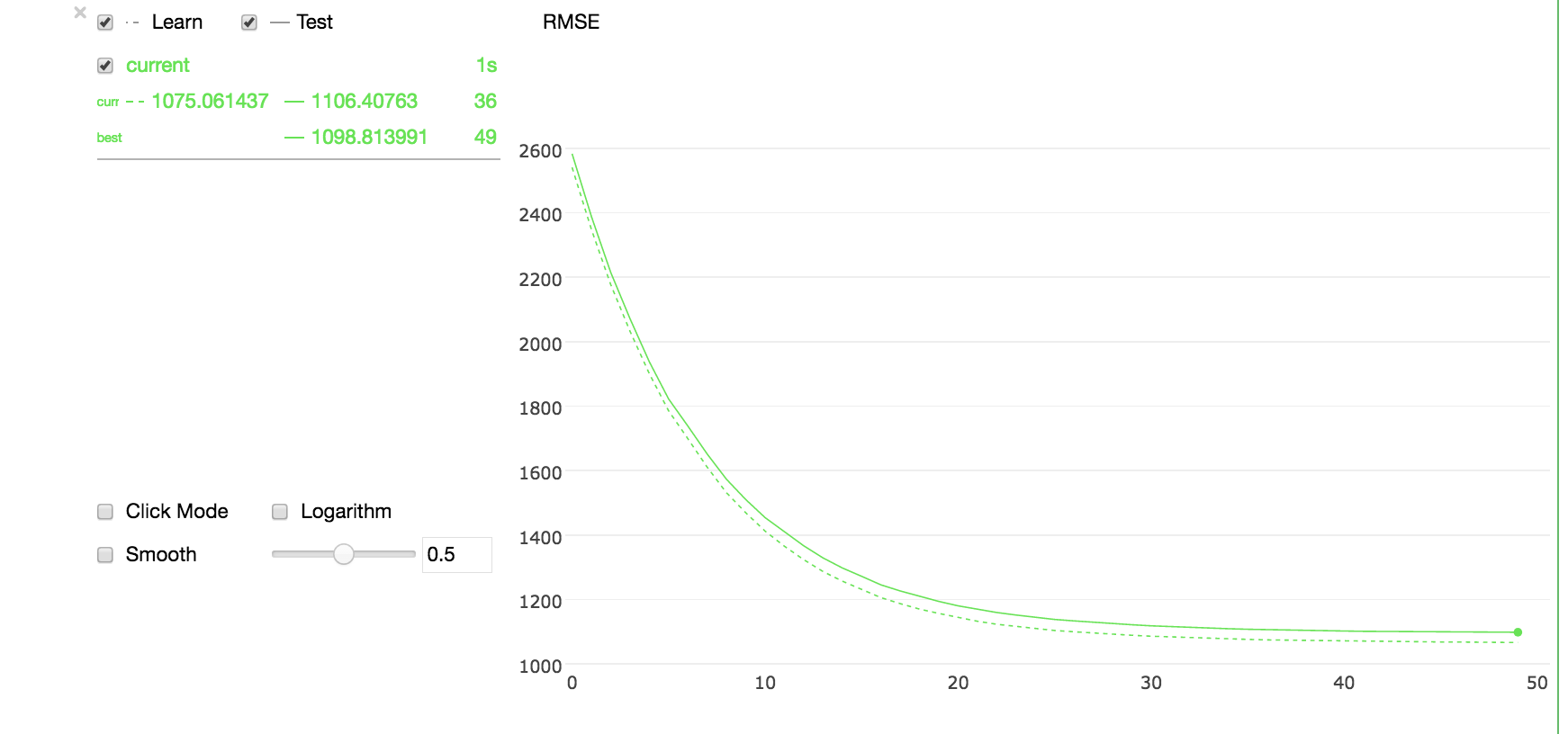

```python
# Import the library and build the model
from catboost import CatBoostRegressor

model = CatBoostRegressor(iterations=50, depth=3, learning_rate=0.1, loss_function='RMSE')
# plot=True draws an interactive training chart when run in a Jupyter notebook
model.fit(X_train, y_train, cat_features=categorical_features_indices,
          eval_set=(X_validation, y_validation), plot=True)
```

As you can see, the basic model gives a reasonable solution, and the training and validation errors move in step. You can tune the model parameters and engineer features to improve the solution.

The next task is to predict the target for the test data set.

```python
# Build the submission file from the test-set predictions
submission = pd.DataFrame()
submission['Item_Identifier'] = test['Item_Identifier']
submission['Outlet_Identifier'] = test['Outlet_Identifier']
submission['Item_Outlet_Sales'] = model.predict(test)
submission.to_csv("Submission.csv", index=False)
```

That's it! We have built our first model with CatBoost.

6. Final notes

In this article, we looked at “CatBoost”, a recently open-sourced boosting library from Yandex that can provide state-of-the-art solutions to a variety of business problems.

One of the key features that excites me about this library is the automatic handling of categorical values using various statistical methods.

We covered the basics of this library and solved a regression challenge in this article. I would also recommend using this library on a real business problem and comparing its performance with other state-of-the-art models.