This article was published as part of the Data Science Blogathon

Introduction

Data cleansing is the process of analyzing data to find incorrect values, corrupt and missing and remove them to be suitable for input to data analysis and various machine learning algorithms.

It is the main and fundamental step that is performed before any analysis of the data can be performed.. There are no set rules to follow for data cleansing. It totally depends on the quality of the data set and the level of precision to be achieved.

Reasons for data corruption:

- Data is collected from various structured and unstructured sources and then combined, leading to duplicate and mislabeled values.

- Different data dictionary definitions for data stored in multiple locations.

- Manual input error / typographical errors.

- Wrong capitalization.

- Category: / mislabeled classes.

Data quality

Data quality is of utmost importance for analysis. There are several quality criteria that need to be checked:

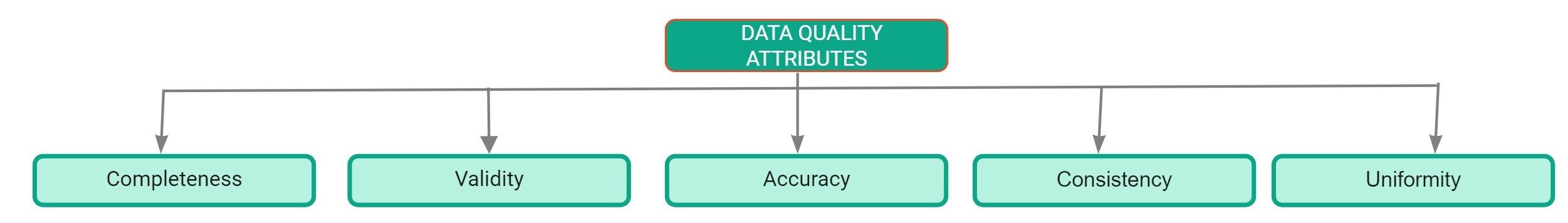

Data quality attributes

- I complete it: Defined as the percentage of entries that are completed in the data set. The percentage of missing values in the data set is a good indicator of the quality of the data set..

- Precision: It is defined as the extent to which the inputs in the data set are close to their actual values.

- Uniformity: Defined as the extent to which data is specified using the same unit of measure.

- Consistency: It is defined as the extent to which data is consistent within the same dataset and across multiple datasets.

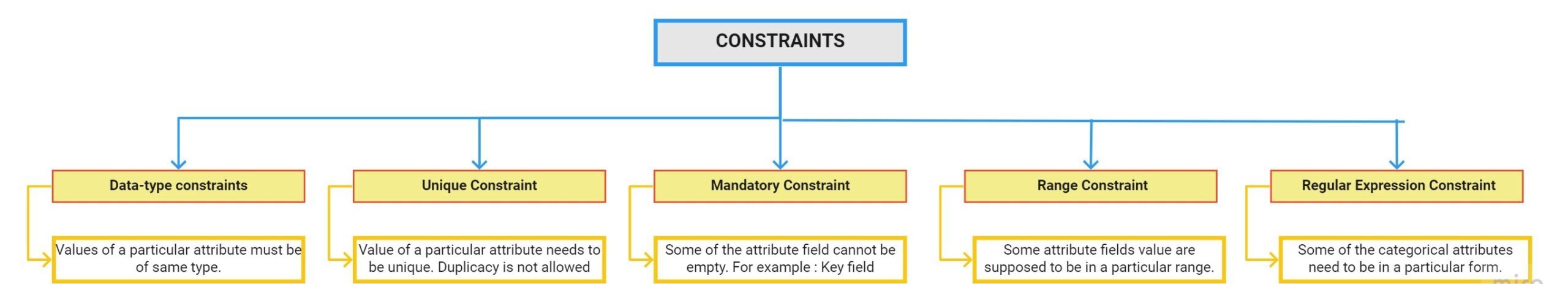

- Validity: It is defined as the extent to which the data conforms to the restrictions applied by business rules. There are several limitations:

Data Profile Report

Data profiling is the process of exploring our data and finding information from it. Pandas Profiling Report is the fastest way to extract comprehensive information about your dataset. The first step to data cleansing is to perform exploratory data analysis.

How to use pandas profiling:

Paso 1: The first step is to install the pandas profiling package using the pip command:

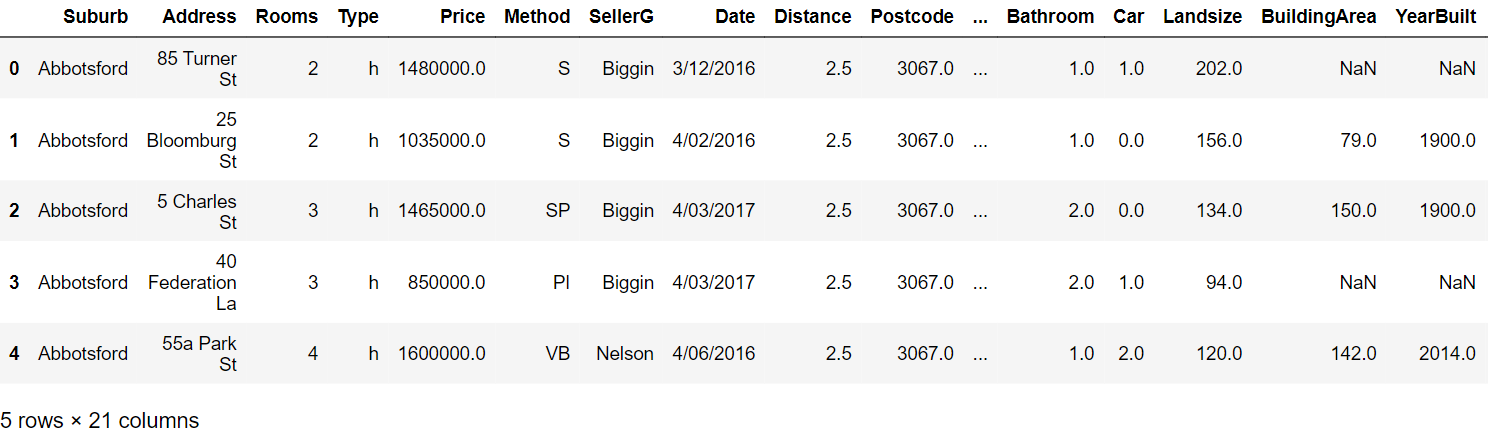

pip install pandas-profilingPaso 2: Load the dataset using pandas:

import pandas as pddf = pd.read_csv(r"C:UsersDellDesktopDatasethousing.csv")

Paso 3: Read the first five rows:

Paso 4: Generate the profiling report with the following commands:

from pandas_profiling import ProfileReportprof = ProfileReport(df)prof.to_file(output_file="output.html")

Profiling report:

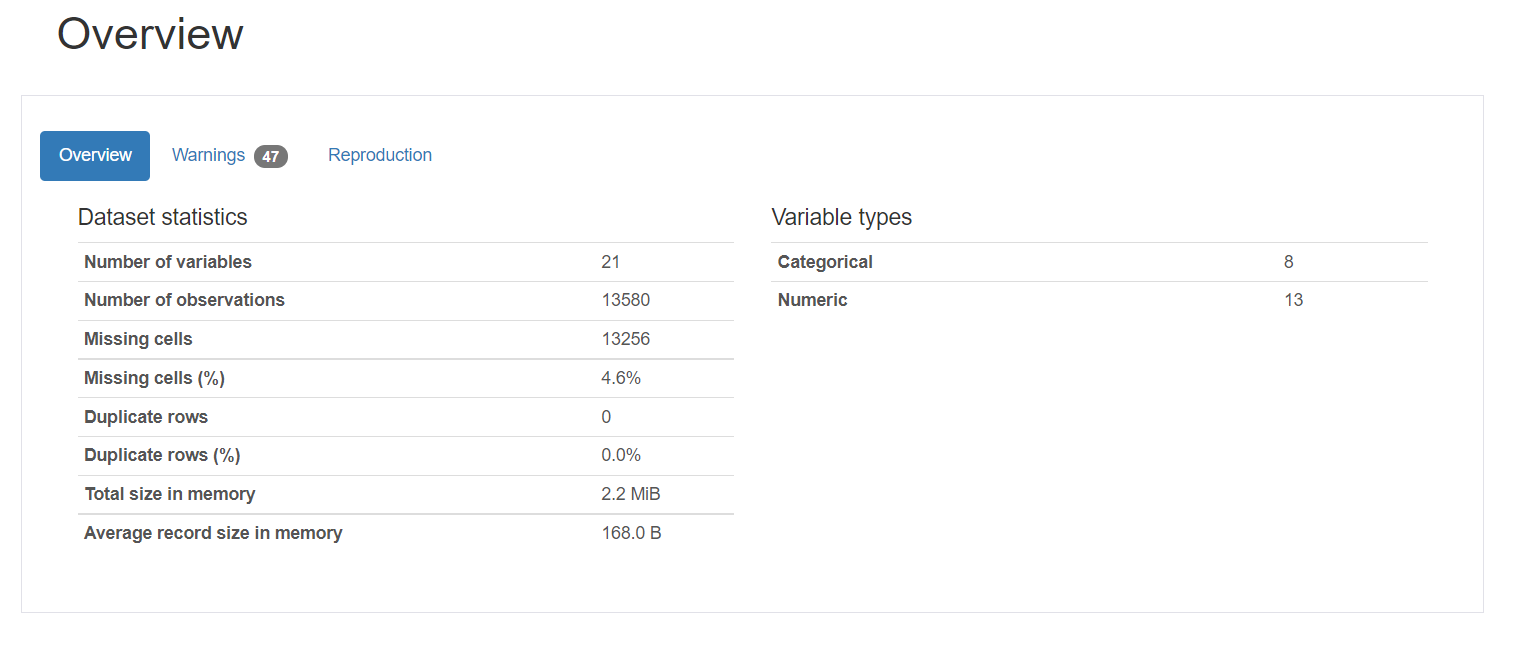

The profiling report consists of five parts: general description, variables, interactions, correlation and missing values.

1. Overview provides general statistics on the number of variables, the number of observations, the missing values, duplicates and the number of categorical and numeric variables.

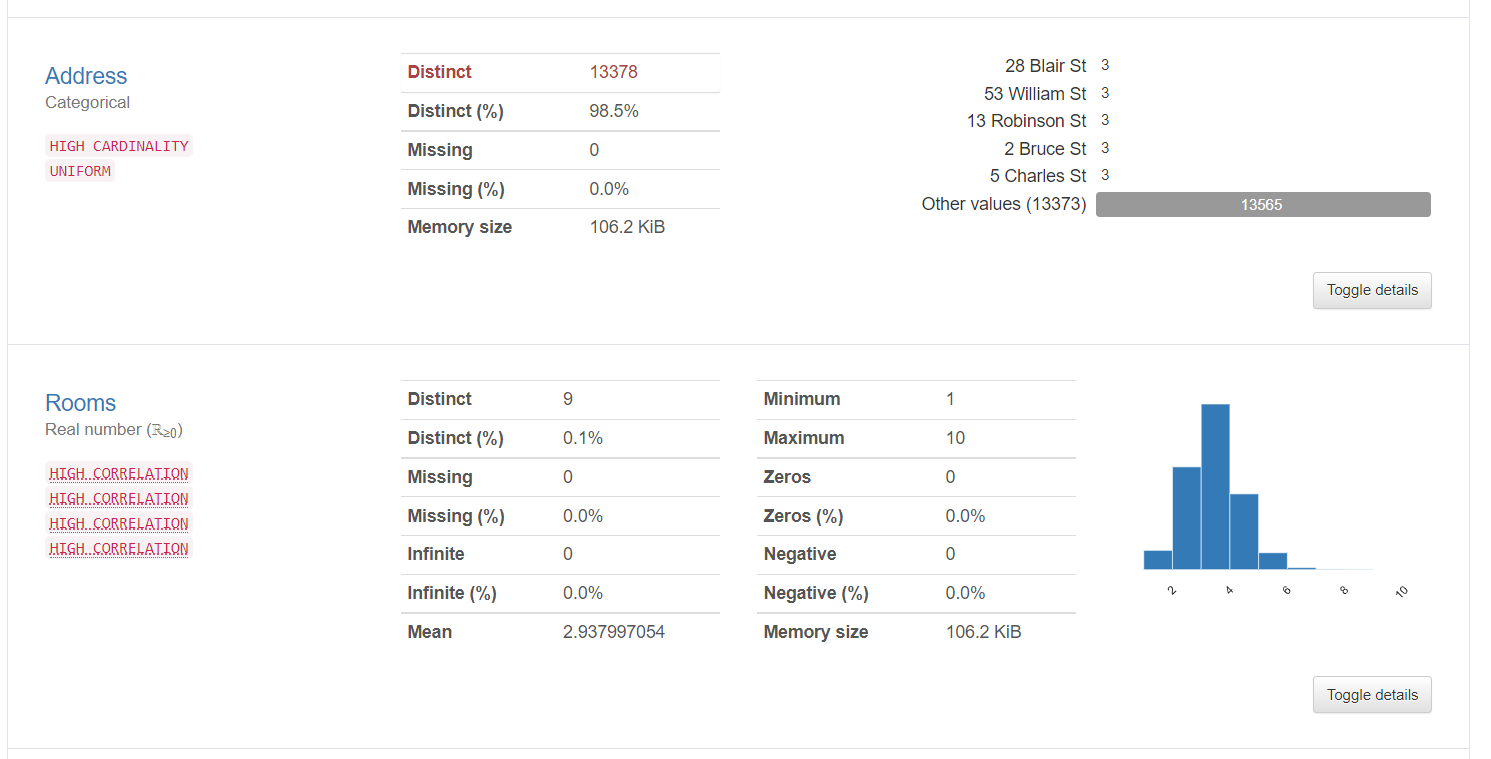

2. Variable information provides detailed information about distinct values, the missing values, average, the median, etc. Here are the statistics about a categorical variable and a numeric variable:

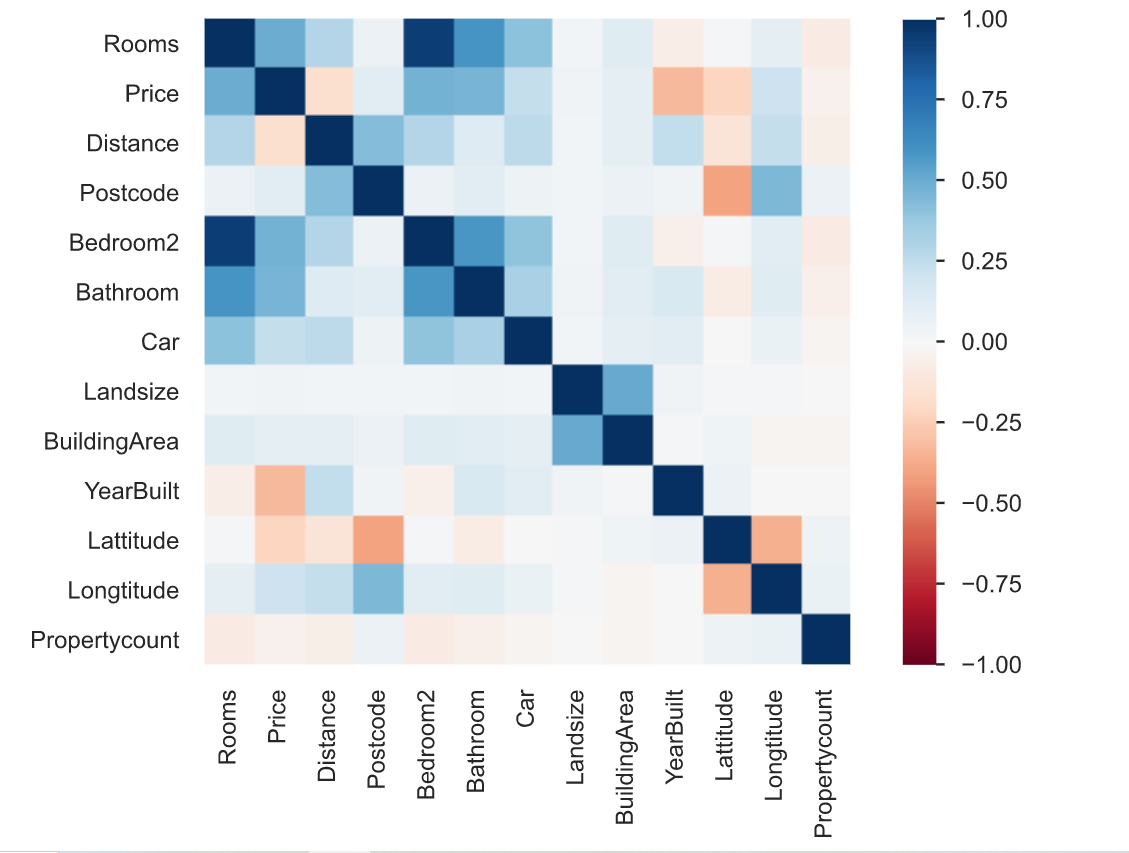

3. Correlation is defined as the degree to which two variables are related to each other. The profiling report describes the correlation of different variables with each other in the form of a heat map.

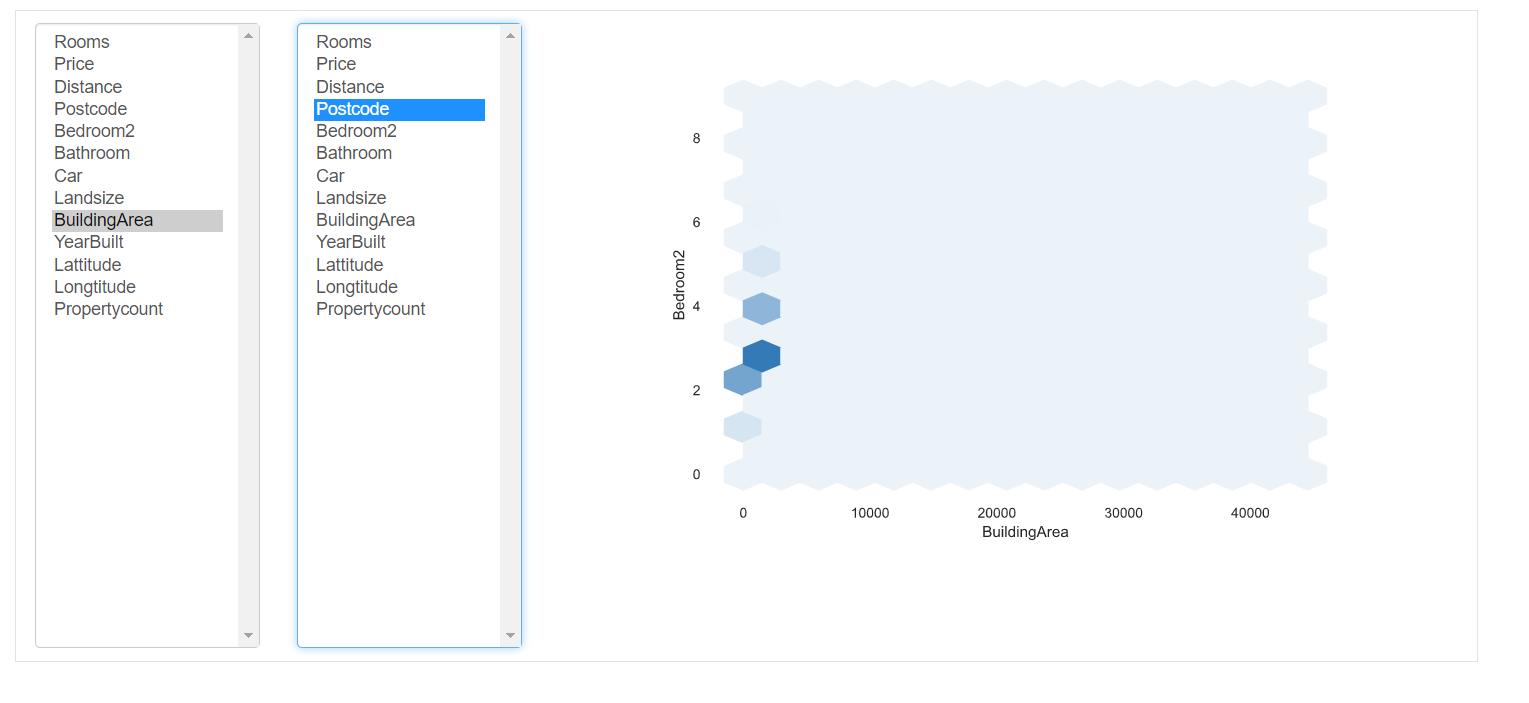

Interactions: this part of the report shows the interactions of the variables with each other. You can select any variable on the respective axes.

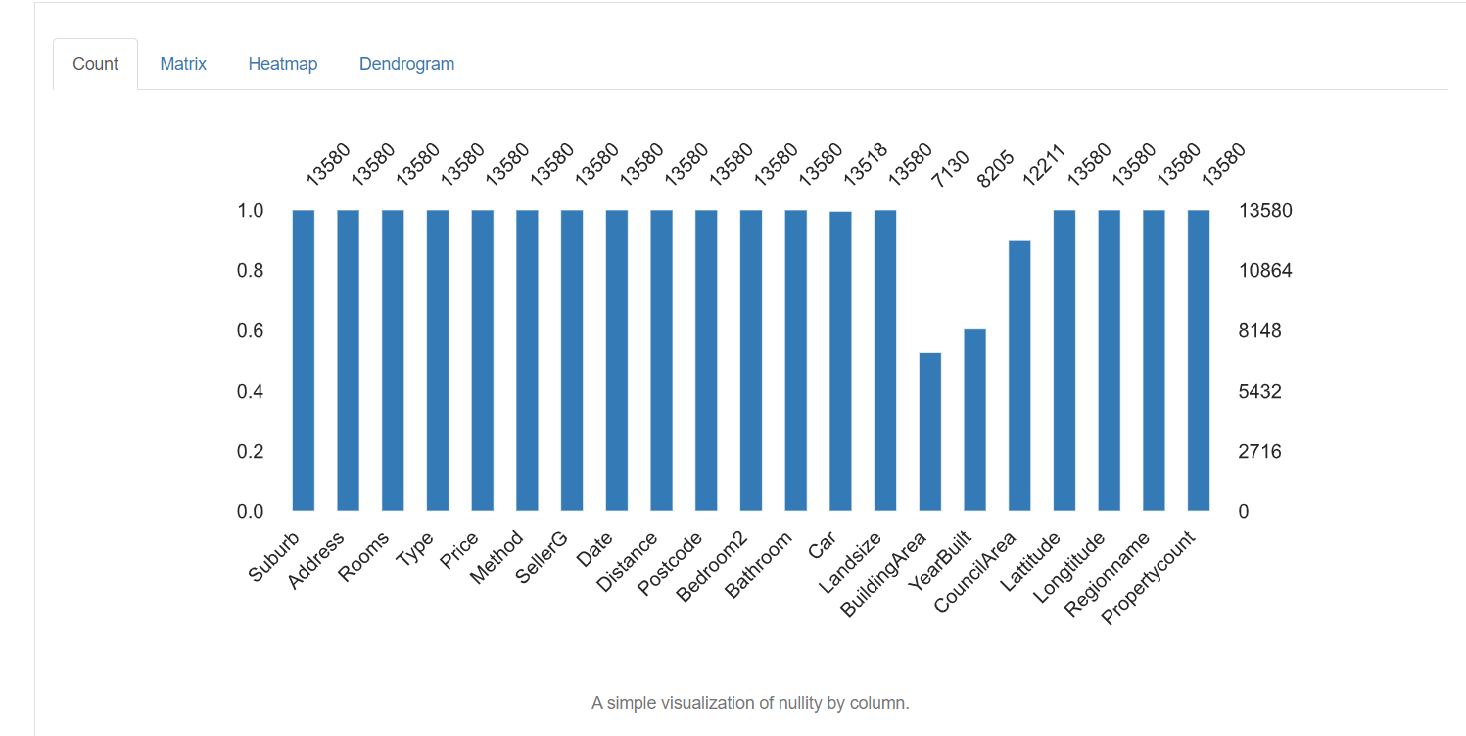

5. Missing values: represents the number of missing values in each column.

Data cleaning techniques

Now we have detailed knowledge about the missing data, incorrect values and mislabeled categories in the dataset. Now we will see some of the techniques used to clean data. It totally depends on the quality of the dataset, the results to be obtained from how you handle your data. Some of the techniques are as follows:

Handling missing values:

Handling missing values is the most important step in data cleansing. The first question to ask yourself is why is the data missing?? Missing just because the data entry operator didn't record it or was it intentionally left empty? You can also review the documentation to find the reason for it.

There are different ways to handle these missing values:

1. Eliminate missing values: The easiest way to handle them is to just remove all the rows that contain missing values. If you don't want to find out why the values are missing and you only have a small percentage of missing values, you can remove them using the following command:

But nevertheless, it is not advisable because all data is important and has great importance for the overall results. As usual, the percentage of entries missing in a particular column is high. So quitting is not a good option.

2. Imputation: Imputation is the process of replacing null values / lost for some value. For numeric columns, one option is to replace each missing entry in the column with either the mean value or the median value. Another option could be to generate random numbers between a range of suitable values for the column. The range could be between the mean and standard deviation of the column. You can simply import an imputer from the scikit-learn package and do the imputation as follows:

from sklearn.impute import SimpleImputer

#Imputation

my_imputer = SimpleImputer()

imputed_df = pd.DataFrame(my_imputer.fit_transform(df))Handling duplicates:

Duplicate rows generally occur when data is combined from multiple sources. Sometimes it replicates. A common problem is when users have the same ID number or the form has been submitted twice.

The solution to these duplicate tuples is to simply remove them. You can use the unique function () to find out the unique values present in the column and then decide which values need to be removed.

Coding:

Character encoding is defined as the set of rules defined for the one-to-one mapping of raw binary byte strings to human readable text strings. Various encodings are available: ASCII, utf-8, US-ASCII, utf-16, utf-32, etc.

You may notice that some of the text character fields have irregular and unrecognizable patterns. This is because utf-8 is the default Python encoding. All code is in utf-8. Therefore, when data comes from multiple structured and unstructured sources and is kept in a common place, irregular patterns are observed in the text.

The solution to the above problem is to first find out the character encoding of the file with the help of the chardet module in Python as follows:

import chardetwith open("C:/Users/Desktop/Dataset/housing.csv",'rb') as rawdata:result = chardet.detect(rawdata.read(10000))# check what the character encoding might beprint(result)

After finding the encoding type, if different from utf-8, save the file using encoding “utf-8” using the following command.

df.to_csv("C:/Users/Desktop/Dataset/housing.csv")Scaling and normalization

Scale refers to transforming the data range and changing it to some other range of values. This is beneficial when we want to compare different attributes on the same basis.. A useful example could be currency conversion.

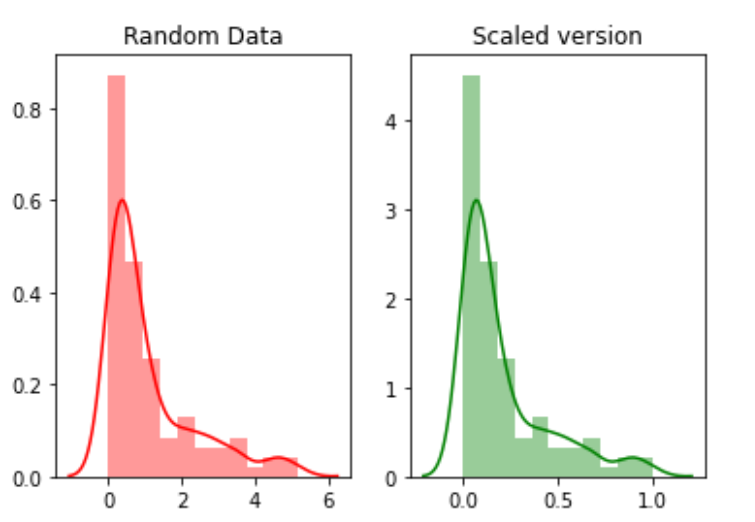

For instance, we will create 100 random points from an exponential distribution and then we will plot them. Finally, we will convert them to a scaled version using the python mlxtend package.

# for min_max scalingfrom mlxtend.preprocessing import minmax_scaling# plotting packagesimport seaborn as snsimport matplotlib.pyplot as plt

Now scaling the values:

random_data = np.random.exponential(size=100)# mix-max scale the data between 0 and 1scaled_version = minmax_scaling(random_data, columns=[0])

Finally, graphing the two versions.

Normalization refers to changing the distribution of the data so that it can represent a bell curve where the attribute values are equally distributed on the mean. The value of the mean and the median is the same. This type of distribution is also called a Gaussian distribution.. It is necessary for those machine learning algorithms that assume that the data is normally distributed.

Now, we will normalize the data using the boxcox function:

from scipy import statsnormalized_data = stats.boxcox(random_data)# plot both together to comparefig,ax=plt.subplots(1,2)sns.distplot(random_data, ax=ax[0],color="pink")ax[0].set_title("Random Data")sns.distplot(normalized_data[0], ax=ax[1],color="purple")ax[1].set_title("Normalized data")

Date handling

The date field is an important attribute that needs to be handled during data cleansing. There are several different formats in which data can be entered into the dataset. Therefore, standardizing the date column is a critical task. Some people may have treated the date as a string column, others as a DateTime column. When the data set is combined from different sources, this can create a problem for analysis.

The solution is to find the date column type first using the following command.

df['Date'].dtype

If column type is different from DateTime, convert it to DateTime using the following command:

import datetimedf['Date_parsed'] = pd.to_datetime(df['Date'], format="%m/%d/%y")

Handling inconsistent data entry issues

There are a lot of inconsistent entries that cannot be found manually or by direct calculations. For instance, whether the same entry is written in upper or lower case or a mix of upper and lower case. Then, this entry should be standardized across the entire column.

One solution is to convert all entries in a column to lowercase and trim the extra space from each entry. This can be reversed later once the analysis is complete.

# convert to lower casedf['ReginonName'] = df['ReginonName'].str.lower()# remove trailing white spacesdf['ReginonName'] = df['ReginonName'].str.strip()

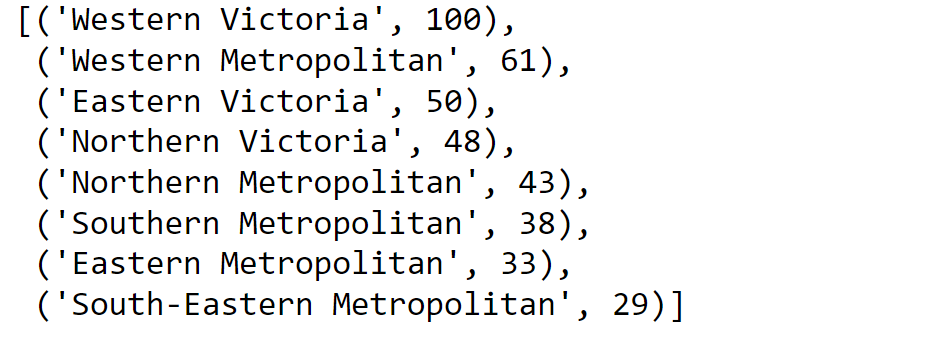

Another solution is to use fuzzy matching to find which strings in the column are closest to each other and then replace all those entries with a particular threshold with the leading entry.

First, we will discover the unique names of the regions:

region = df['Regionname'].unique()Then we calculate the scores using the approximate match:

import fuzzywuzzyfromfuzzywuzzy import processregions=fuzzywuzzy.process.extract("WesternVictoria",region,limit=10,scorer=fuzzywuzy.fuzz.token_sort_ratio)

Validating the process.

Once the data cleaning process is complete, it is important to verify and validate that the changes you have made have not hindered the restrictions placed on the dataset.

And finally, … No need to say,

Thank you for reading!

The media shown in this article is not the property of DataPeaker and is used at the author's discretion.