This article was published as part of the Data Science Blogathon.

Overview

We group the data points into 3 groups based on their similarity or closeness.

Table of Contents

1. Introduction to K-means

2. K-means++ algorithm

3. How to choose the K value in K-means

4. Practical considerations in K-means

5. Cluster tendency

1. Introduction

Let's understand K-means clustering with an example from daily life. These days almost everyone loves to watch web series or movies on Amazon Prime or Netflix. Have you noticed something every time you open Netflix? The movies are grouped by genre: crime, suspense, and so on. Netflix's genre grouping is an easy-to-understand example of clustering. Now let's learn more about the K-means clustering algorithm.

Definition: K-means groups data points based on their similarity or closeness to each other. In simple terms, the algorithm finds the data points whose values are similar to each other, and these points then belong to the same group.

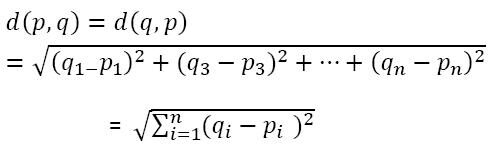

So, how does the algorithm measure how close two points are in order to group them? It uses a distance measure, and here that distance measure is the 'Euclidean distance'.

Observations that are close or similar to each other have a low Euclidean distance and are therefore grouped together.

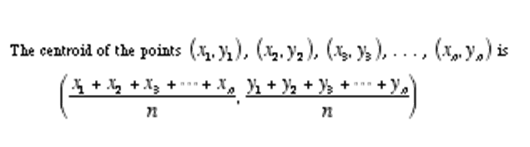
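To make the distance measure concrete, here is a minimal sketch in Python. The function name `euclidean_distance` and the three sample points are made up for illustration; they are not taken from the article's figures.

```python
import math

def euclidean_distance(p, q):
    """Straight-line distance between two points with the same number of coordinates."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

# Hypothetical 2-D observations
a = (1.0, 2.0)
b = (4.0, 6.0)
c = (1.5, 2.5)

d_ab = euclidean_distance(a, b)  # sqrt(3^2 + 4^2) = 5.0
d_ac = euclidean_distance(a, c)  # sqrt(0.5), roughly 0.707

# c is much closer to a than b is, so a and c are the more likely pair to share a group
print(d_ab, d_ac)
```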

One more concept you need to know to understand K-means is the 'centroid', the mean of all the points in a group. The k-means algorithm uses centroids to create 'k groups'.
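As a small illustration, a centroid can be computed as the coordinate-wise mean of the points in a group. The helper name `centroid` and the sample group below are hypothetical.

```python
def centroid(points):
    """Coordinate-wise mean of a non-empty list of points of equal dimension."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))

# Hypothetical group of three 2-D points
group = [(1.0, 1.0), (2.0, 3.0), (3.0, 2.0)]
center = centroid(group)  # (2.0, 2.0)
print(center)
```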

So now you are ready to understand the steps of the k-means clustering algorithm.

Steps in K-means:

Step 1: choose the value of k, for example k = 2

Step 2: randomly initialize the centroids

Step 3: calculate the Euclidean distance from each centroid to every data point, and assign each point to the group of its closest centroid

Step 4: find the centroid of each group and update the centroids

Step 5: repeat steps 3 and 4

Every time the groups are formed, the centroids are updated; each updated centroid is the center of all the points that fall in its group. This process continues until the centroids no longer change, that is, until the solution converges.
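The steps above can be sketched in plain Python as follows. This is a minimal illustration under a few assumptions, not a production implementation: the initial centroids are taken from the data points themselves, an empty group keeps its previous centroid, and the names `kmeans`, `max_iter`, `seed`, and the sample data are all made up for the example.

```python
import math
import random

def distance(p, q):
    """Euclidean distance between two points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def kmeans(points, k, max_iter=100, seed=0):
    rng = random.Random(seed)
    # Step 2: pick k distinct data points as the initial centroids (an assumption)
    centroids = rng.sample(points, k)
    groups = []
    for _ in range(max_iter):
        # Step 3: assign every point to the group of its nearest centroid
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: distance(p, centroids[j]))
            groups[nearest].append(p)
        # Step 4: move each centroid to the mean of its group
        updated = []
        for j, g in enumerate(groups):
            if g:
                updated.append(tuple(sum(c) / len(g) for c in zip(*g)))
            else:
                updated.append(centroids[j])  # empty group: keep the old centroid
        # Step 5: stop once the centroids no longer change (convergence)
        if updated == centroids:
            break
        centroids = updated
    return centroids, groups

# Hypothetical data: two well-separated blobs
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
        (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, groups = kmeans(data, k=2)  # Step 1: k = 2
for center, members in zip(centroids, groups):
    print(center, members)
```

The `max_iter` cap is only a safety net; on small, well-separated data like this the loop usually stops after a handful of iterations.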

You can play around with the K-means algorithm using the link below. Try it.

https://stanford.edu/class/engr108/visualizations/kmeans/kmeans.html

So, what's next? How do you choose the initial centroids when they are picked at random?

This is where the k-means++ algorithm comes in.

2. K-means++ algorithm:

I'm not going to stress you out with this, so don't worry. It is very easy to understand. So, what is k-means++? Let's say we want to choose two centroids initially (k = 2). For the first one, you can choose a centroid at random, or you can choose one of the data points at random. Simple, right? Our next task is to choose another centroid. How do you choose it? Any ideas?

We choose the next centroid from the data points so that it is likely to be far from the existing centroid: the greater a point's distance from the existing centroids (or from an existing group), the higher its probability of being picked. More precisely, k-means++ picks each point with probability proportional to its squared distance from the nearest centroid already chosen.
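The selection rule described above can be sketched like this. It is an illustrative sketch that assumes the data contains at least k distinct points; the names `kmeans_pp_init` and `squared_distance` and the sample data are hypothetical.

```python
import random

def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans_pp_init(points, k, seed=0):
    """Pick k initial centroids from the data, favouring points far from those already chosen."""
    rng = random.Random(seed)
    # First centroid: one data point chosen uniformly at random
    centroids = [rng.choice(points)]
    while len(centroids) < k:
        # Weight of each point: squared distance to its nearest chosen centroid.
        # Points already chosen get weight 0, so they cannot be picked again.
        weights = [min(squared_distance(p, c) for c in centroids) for p in points]
        # Next centroid: sampled with probability proportional to that weight
        centroids.append(rng.choices(points, weights=weights, k=1)[0])
    return centroids

# Hypothetical data: two well-separated blobs
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
        (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
init = kmeans_pp_init(data, k=2)
print(init)
```

Because the choice is still random, the second centroid is not guaranteed to land in the other blob; it is only much more likely to.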

3. How to choose the K value in K-means:

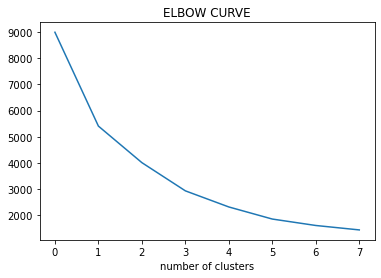

1. Elbow method

Steps:

Step 1: run the clustering algorithm for different values of k.

For example, k = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

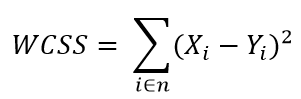

Step 2: for every k, calculate the within-cluster sum of squares (WCSS).

Step 3: plot the WCSS against the number of clusters k.

Step 4: the location of the bend (the 'elbow') in the curve is generally considered an indicator of the appropriate number of clusters.
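Steps 1 and 2 of the elbow method can be sketched as below; plotting (step 3) is left out, and the WCSS values are simply printed. This is a rough sketch with several assumptions: a compact plain k-means is included so the example is self-contained, the best of 10 random restarts is kept for each k, only k = 1 to 6 is tried because the made-up data has just nine points, and all names (`kmeans_wcss`, `wcss_by_k`, and so on) are hypothetical.

```python
import random

def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def mean(points):
    return tuple(sum(c) / len(points) for c in zip(*points))

def kmeans_wcss(points, k, seed, max_iter=100):
    """Run plain k-means once and return the within-cluster sum of squares."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(max_iter):
        groups = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda i: sq_dist(p, centroids[i]))
            groups[j].append(p)
        # An empty group keeps its previous centroid
        updated = [mean(g) if g else centroids[i] for i, g in enumerate(groups)]
        if updated == centroids:
            break
        centroids = updated
    # WCSS: squared distance from every point to its nearest centroid
    return sum(min(sq_dist(p, c) for c in centroids) for p in points)

# Hypothetical data: three blobs of three points each
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
        (8.0, 8.0), (9.0, 9.5), (8.5, 9.0),
        (1.0, 9.0), (1.5, 8.0), (0.5, 8.5)]

wcss_by_k = {}
for k in range(1, 7):
    # Keep the best of a few restarts to reduce the effect of an unlucky start
    wcss_by_k[k] = min(kmeans_wcss(data, k, seed) for seed in range(10))

for k, w in wcss_by_k.items():
    print(k, round(w, 2))
```

On data with a clear cluster structure, the printed values typically drop steeply up to the true number of clusters and flatten afterwards; that flattening point is the elbow.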

4. Practical considerations in K-means:

- The number of clusters (K) must be chosen in advance.

- The data should be standardized (scaled).

- Categorical data is not handled directly (this can be solved with K-modes).

- The result is affected by the initial centroids and by outliers.
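Of the considerations listed above, standardization is the easiest to illustrate. Because K-means relies on Euclidean distance, a feature with large values (such as income) can dominate a feature with small values (such as age). The sketch below rescales each column to mean 0 and standard deviation 1; the function name `standardize` and the (age, income) rows are made up, and it assumes no column is constant.

```python
import statistics

def standardize(rows):
    """Rescale every column to mean 0 and standard deviation 1 (z-scores).

    Assumes no column is constant, otherwise the division below would fail.
    """
    columns = list(zip(*rows))
    means = [statistics.mean(col) for col in columns]
    stdevs = [statistics.pstdev(col) for col in columns]
    return [
        tuple((value - m) / s for value, m, s in zip(row, means, stdevs))
        for row in rows
    ]

# Hypothetical (age, income) observations on very different scales
raw = [(25.0, 30000.0), (30.0, 32000.0), (45.0, 90000.0), (50.0, 95000.0)]
scaled = standardize(raw)
for row in scaled:
    print(tuple(round(v, 2) for v in row))
```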

5. Cluster tendency:

Before applying a clustering algorithm to the given data, it is important to check whether the data has any meaningful clusters at all. The process of evaluating whether the data is suitable for clustering is known as 'clustering tendency'. So we should not blindly apply a clustering method; we should check the clustering tendency first. How?

We use the 'Hopkins statistic' to decide whether or not to perform clustering on a given dataset. It examines whether the data points differ significantly from data uniformly distributed in the multidimensional space.
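One common way to compute the Hopkins statistic is sketched below. Conventions differ between references and libraries (some raise the distances to the power of the number of dimensions, and some report 1 minus this value), so treat this as an illustration under stated assumptions rather than a reference implementation. In the convention used here, a value near 0.5 suggests roughly uniform data and a value near 1 suggests a clustering tendency. The names `hopkins`, `nearest_distance`, `m`, and the sample data are hypothetical.

```python
import math
import random

def nearest_distance(p, others):
    """Euclidean distance from p to the closest point in others."""
    return min(math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q))) for q in others)

def hopkins(points, m, seed=0):
    """Hopkins statistic from m random probes and m sampled data points."""
    rng = random.Random(seed)
    dims = list(zip(*points))
    lows = [min(d) for d in dims]
    highs = [max(d) for d in dims]
    # u: distance from each uniformly random location (within the data's
    # bounding box) to the nearest real data point
    u = []
    for _ in range(m):
        probe = tuple(rng.uniform(lo, hi) for lo, hi in zip(lows, highs))
        u.append(nearest_distance(probe, points))
    # w: distance from each sampled real point to its nearest other real point
    w = []
    for i in rng.sample(range(len(points)), m):
        others = points[:i] + points[i + 1:]
        w.append(nearest_distance(points[i], others))
    return sum(u) / (sum(u) + sum(w))

# Hypothetical data: two tight blobs far apart
data = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (0.05, 0.05),
        (10.0, 10.0), (10.1, 10.0), (10.0, 10.1), (10.1, 10.1), (10.05, 10.05)]
h = hopkins(data, m=4)
print(round(h, 3))
```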

This concludes our article on the k-means clustering algorithm. In my next article, I will talk about the Python implementation of the K-means clustering algorithm.

Thanks!

The media shown in this article is not the property of DataPeaker and is used at the author's discretion.